Image by Author

As a data professional, you're probably familiar with the cost of poor data quality. For all data projects, big or small, you should perform essential data quality checks.

There are dedicated libraries and frameworks for data quality assessment. But if you are a beginner, you can run simple yet important data quality checks with pandas. This tutorial will teach you how.

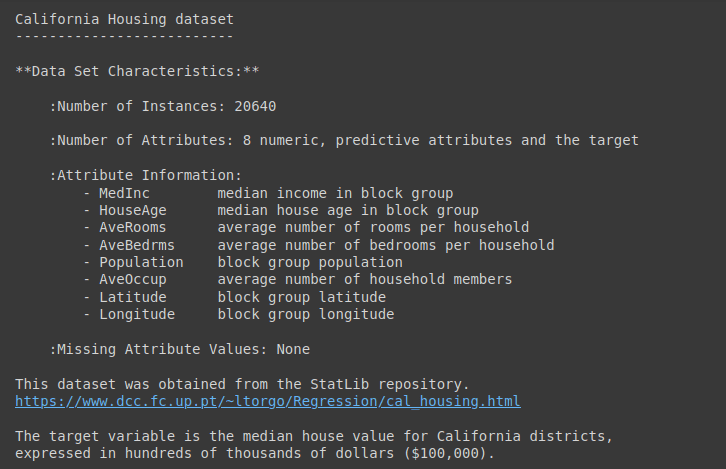

For this tutorial, we'll use the California housing dataset from scikit-learn's datasets module. The dataset contains over 20,000 records, each with eight numeric features and a target median house value.

Let's read the dataset into a pandas dataframe df:

```python
from sklearn.datasets import fetch_california_housing
import pandas as pd

# Fetch the California housing dataset
data = fetch_california_housing()

# Convert the dataset to a pandas DataFrame
df = pd.DataFrame(data.data, columns=data.feature_names)

# Add the target column
df['MedHouseVal'] = data.target
```

For a detailed description of the dataset, run print(data.DESCR):

Output of data.DESCR

Let's get some basic information on the dataset using df.info():

Here's the output:

```
Output >>>

RangeIndex: 20640 entries, 0 to 20639
Data columns (total 9 columns):
 #   Column       Non-Null Count  Dtype  
---  ------       --------------  -----  
 0   MedInc       20640 non-null  float64
 1   HouseAge     20640 non-null  float64
 2   AveRooms     20640 non-null  float64
 3   AveBedrms    20640 non-null  float64
 4   Population   20640 non-null  float64
 5   AveOccup     20640 non-null  float64
 6   Latitude     20640 non-null  float64
 7   Longitude    20640 non-null  float64
 8   MedHouseVal  20640 non-null  float64
dtypes: float64(9)
memory usage: 1.4 MB
```

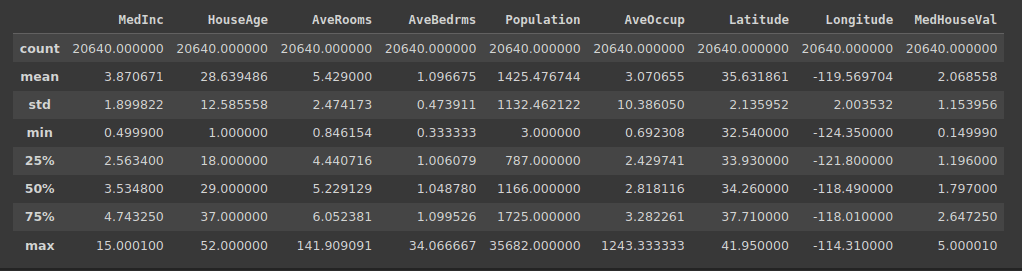

Because all the features are numeric, let's also get the summary statistics using the describe() method:

Output of df.describe()

Real-world datasets often have missing values. To analyze the data and build models, you need to handle these missing values.

To ensure data quality, you should check whether the fraction of missing values is within a specific tolerance limit. You can then impute the missing values using suitable imputation techniques.

The first step, therefore, is to check for missing values across all features in the dataset.

This code checks for missing values in each column of the dataframe df:

```python
# Check for missing values in the DataFrame
missing_values = df.isnull().sum()
print("Missing Values:")
print(missing_values)
```

The result is a pandas series that shows the count of missing values for each column:

```
Output >>>

Missing Values:
MedInc         0
HouseAge       0
AveRooms       0
AveBedrms      0
Population     0
AveOccup       0
Latitude       0
Longitude      0
MedHouseVal    0
dtype: int64
```

As seen, there are no missing values in this dataset.
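Because this dataset has no gaps, here is a minimal sketch of the tolerance check and imputation described above, run on a small hypothetical frame. The 30% threshold, the choice to drop columns over the tolerance, and median imputation are all assumptions for illustration, not fixed rules.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data with some missing values (illustration only)
toy = pd.DataFrame({
    "MedInc": [3.2, np.nan, 5.1, 4.4, np.nan],
    "HouseAge": [21.0, 35.0, np.nan, 18.0, 40.0],
    "AveRooms": [5.0, 6.2, 4.8, 5.5, 6.0],
})

# Assumed tolerance: at most 30% missing values per column
MAX_MISSING_FRACTION = 0.3

# Fraction of missing values per column (the mean of the boolean mask)
missing_fraction = toy.isnull().mean()
print(missing_fraction)

# Columns whose missing fraction exceeds the tolerance
too_sparse = missing_fraction[missing_fraction > MAX_MISSING_FRACTION].index.tolist()
print("Columns over tolerance:", too_sparse)

# One possible policy: drop over-tolerance columns, then fill the
# remaining gaps with each column's median
kept = toy.drop(columns=too_sparse)
imputed = kept.fillna(kept.median())
print(imputed)
```

Here MedInc is 40% missing and gets flagged, while the single gap in HouseAge is filled with that column's median (28.0). The median is less sensitive to outliers than the mean, which is why it is a common default for skewed numeric features.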

Duplicate records in the dataset can skew your analysis, so you should check for duplicate records and drop them as needed.

Here's the code to identify and return duplicate rows in df. Any duplicate rows will be included in the result:

```python
# Check for duplicate rows in the DataFrame
duplicate_rows = df[df.duplicated()]
print("Duplicate Rows:")
print(duplicate_rows)
```

The result is an empty dataframe, meaning there are no duplicate records in the dataset:

```
Output >>>

Duplicate Rows:
Empty DataFrame
Columns: [MedInc, HouseAge, AveRooms, AveBedrms, Population, AveOccup, Latitude, Longitude, MedHouseVal]
Index: []
```
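If a check like this does turn up duplicates, here is a sketch of how you might inspect and then drop them, using a hypothetical toy frame in which row 2 repeats row 0:

```python
import pandas as pd

# Hypothetical toy frame: row 2 is an exact copy of row 0
toy = pd.DataFrame({
    "MedInc": [3.2, 5.1, 3.2, 4.4],
    "HouseAge": [21.0, 35.0, 21.0, 18.0],
})

# keep=False marks every copy of a duplicated row, not only the later ones
all_copies = toy[toy.duplicated(keep=False)]
print(all_copies)

# Drop later duplicates and keep the first occurrence (the default keep='first')
deduped = toy.drop_duplicates().reset_index(drop=True)
print(deduped)

# Duplicates can also be defined on a subset of columns
same_income = toy[toy.duplicated(subset=["MedInc"])]
print(same_income)
```

Inspecting all copies with keep=False before dropping anything lets you confirm that the rows really are redundant and not, for example, two distinct records that happen to share values.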

When analyzing a dataset, you'll often have to transform or scale one or more features. To avoid unexpected errors when performing such operations, it is important to check that all columns are of the expected data type.

This code checks the data type of each column in the dataframe df:

```python
# Check the data type of each column in the DataFrame
data_types = df.dtypes
print("Data Types:")
print(data_types)
```

Here, all numeric features are of float data type, as expected:

```
Output >>>

Data Types:
MedInc         float64
HouseAge       float64
AveRooms       float64
AveBedrms      float64
Population     float64
AveOccup       float64
Latitude       float64
Longitude      float64
MedHouseVal    float64
dtype: object
```
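Eyeballing dtypes works for a handful of columns. Here is a minimal sketch of an automated version that compares each column against an expected schema. The EXPECTED_DTYPES mapping and the check_schema helper are hypothetical names introduced for illustration.

```python
import pandas as pd

# Hypothetical expected schema: column name -> expected dtype string
EXPECTED_DTYPES = {
    "MedInc": "float64",
    "HouseAge": "float64",
    "Population": "float64",
}

def check_schema(frame, expected):
    """Return a list of problems; an empty list means the schema matches."""
    problems = []
    for column, dtype in expected.items():
        if column not in frame.columns:
            problems.append(f"missing column: {column}")
        elif str(frame[column].dtype) != dtype:
            problems.append(f"{column}: expected {dtype}, got {frame[column].dtype}")
    return problems

# Toy frame where 'Population' was read in as strings instead of floats
toy = pd.DataFrame({
    "MedInc": [3.2, 5.1],
    "HouseAge": [21.0, 35.0],
    "Population": ["1200", "850"],
})
problems = check_schema(toy, EXPECTED_DTYPES)
print(problems)
```

Returning a list of messages instead of raising on the first mismatch lets you see every schema problem in a single run.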

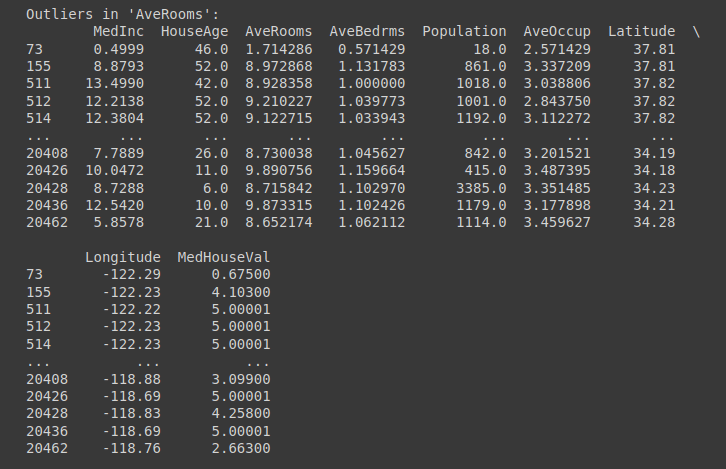

Outliers are data points that differ significantly from the other points in the dataset. Recall that we ran the describe() method on the dataframe earlier.

Based on the quartile values and the maximum values, you could have identified that a subset of features contains outliers. Specifically, these features:

- MedInc

- AveRooms

- AveBedrms

- Population
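You can roughly automate this eyeballing from the describe() output. The following sketch uses a hypothetical helper, columns_with_extreme_max, to flag columns whose maximum sits more than k IQRs above the 75th percentile. The threshold k = 1.5 is an assumption borrowed from the common IQR rule, and the check only looks at the upper tail.

```python
import pandas as pd

def columns_with_extreme_max(frame, k=1.5):
    """Flag columns whose max lies more than k IQRs above the 75th percentile."""
    stats = frame.describe()
    q1, q3, max_val = stats.loc["25%"], stats.loc["75%"], stats.loc["max"]
    iqr = q3 - q1
    # Column-wise: how many IQRs the maximum sits above Q3
    spread = (max_val - q3) / iqr
    return spread[spread > k].index.tolist()

# Toy data: 'B' has one very large value, 'A' does not
toy = pd.DataFrame({
    "A": [1.0, 2.0, 3.0, 4.0, 5.0],
    "B": [1.0, 2.0, 3.0, 4.0, 50.0],
})
print(columns_with_extreme_max(toy))  # → ['B']
```

A column with an IQR of zero would make the ratio infinite or undefined, so treat this as a quick screening step rather than a final verdict.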

One approach to handling outliers is to use the interquartile range (IQR), the difference between the 75th and 25th percentiles. If Q1 is the 25th percentile (the first quartile) and Q3 is the 75th percentile (the third quartile), then the interquartile range is given by IQR = Q3 - Q1.

We then use the quartiles and the IQR to define the interval [Q1 - 1.5 * IQR, Q3 + 1.5 * IQR]. All points outside this interval are treated as outliers.

```python
columns_to_check = ['MedInc', 'AveRooms', 'AveBedrms', 'Population']

# Function to find records with outliers using the 1.5 * IQR rule
def find_outliers_pandas(data, column):
    Q1 = data[column].quantile(0.25)
    Q3 = data[column].quantile(0.75)
    IQR = Q3 - Q1
    lower_bound = Q1 - 1.5 * IQR
    upper_bound = Q3 + 1.5 * IQR
    outliers = data[(data[column] < lower_bound) | (data[column] > upper_bound)]
    return outliers

# Find records with outliers for each specified column
outliers_dict = {}
for column in columns_to_check:
    outliers_dict[column] = find_outliers_pandas(df, column)

# Print the records with outliers for each column
for column, outliers in outliers_dict.items():
    print(f"Outliers in '{column}':")
    print(outliers)
    print("\n")
```

Outliers in 'AveRooms' Column | Truncated Output for Outliers Check
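To see the IQR rule on numbers you can verify by hand, here is a small worked example on a made-up series. It also shows capping (clipping values to the fences) as one possible way to handle outliers instead of dropping them. Whether capping is appropriate depends on your data.

```python
import pandas as pd

# Made-up series with one obvious outlier
values = pd.Series([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 100.0])

q1 = values.quantile(0.25)    # 3.25 (pandas' default linear interpolation)
q3 = values.quantile(0.75)    # 7.75
iqr = q3 - q1                 # 4.5
lower_bound = q1 - 1.5 * iqr  # -3.5
upper_bound = q3 + 1.5 * iqr  # 14.5

outliers = values[(values < lower_bound) | (values > upper_bound)]
print(outliers.tolist())  # → [100.0]

# One alternative to dropping: cap values at the fences (winsorizing-style)
capped = values.clip(lower=lower_bound, upper=upper_bound)
print(capped.max())  # → 14.5
```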

An important check for numeric features is to validate the value range. This ensures that every observation of a feature takes on a value within an expected range.

This code checks that the 'MedInc' values fall within an expected range and identifies the data points that don't meet this criterion:

```python
# Check the numerical value range for the 'MedInc' column
valid_range = (0, 16)
value_range_check = df[~df['MedInc'].between(*valid_range)]
print("Value Range Check (MedInc):")
print(value_range_check)
```

You can try this with other numeric features of your choice. Here, all values in the 'MedInc' column lie within the expected range:

```
Output >>>

Value Range Check (MedInc):
Empty DataFrame
Columns: [MedInc, HouseAge, AveRooms, AveBedrms, Population, AveOccup, Latitude, Longitude, MedHouseVal]
Index: []
```
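To run the same check on several columns at once, you might keep the expected ranges in a dictionary. This is a sketch: apart from MedInc's (0, 16), the ranges and the out_of_range helper are hypothetical, and real bounds should come from domain knowledge.

```python
import pandas as pd

# Hypothetical inclusive ranges per column
EXPECTED_RANGES = {
    "MedInc": (0, 16),
    "HouseAge": (0, 100),
    "Latitude": (32, 42),
}

def out_of_range(frame, ranges):
    """Return, per column, the rows whose values fall outside the expected range."""
    return {
        column: frame[~frame[column].between(low, high)]
        for column, (low, high) in ranges.items()
    }

toy = pd.DataFrame({
    "MedInc": [3.2, 17.5, 4.4],
    "HouseAge": [21.0, 35.0, -1.0],
    "Latitude": [34.1, 37.8, 38.5],
})
report = out_of_range(toy, EXPECTED_RANGES)
for column, rows in report.items():
    print(column, rows.index.tolist())
```

Series.between is inclusive on both ends by default. Because comparisons with NaN are False, missing values also show up as out of range, which is usually what you want from a validation check.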

Most datasets contain related features, so it's important to include checks based on logical relationships between columns (or features).

Even when each feature individually takes on values in the expected range, the relationship between features may be inconsistent.

Here is an example for our dataset: in a valid record, 'AveRooms' should typically be greater than or equal to 'AveBedrms'.

```python
# AveRooms should not be smaller than AveBedrms
invalid_data = df[df['AveRooms'] < df['AveBedrms']]
print("Invalid Data (AveRooms < AveBedrms):")
print(invalid_data)
```

In the California housing dataset we're working with, there are no such invalid records:

```
Output >>>

Invalid Data (AveRooms < AveBedrms):
Empty DataFrame
Columns: [MedInc, HouseAge, AveRooms, AveBedrms, Population, AveOccup, Latitude, Longitude, MedHouseVal]
Index: []
```
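As the number of such rules grows, you might collect them in one place and count violations per rule. This is a minimal sketch. The RULES mapping and the violations helper are hypothetical, and the second rule is a single-column sanity condition included only to show that rules can mix both kinds.

```python
import pandas as pd

# Hypothetical rules: name -> function returning a boolean mask of VALID rows
RULES = {
    "AveRooms >= AveBedrms": lambda d: d["AveRooms"] >= d["AveBedrms"],
    "AveOccup > 0": lambda d: d["AveOccup"] > 0,
}

def violations(frame, rules):
    """Count the rows that break each rule."""
    return {name: int((~rule(frame)).sum()) for name, rule in rules.items()}

toy = pd.DataFrame({
    "AveRooms": [5.0, 1.0, 6.0],
    "AveBedrms": [1.1, 1.5, 1.0],
    "AveOccup": [2.5, 3.0, 0.0],
})
counts = violations(toy, RULES)
print(counts)
```

Writing each rule as the condition valid rows must satisfy, and then negating it, keeps the rule names readable as assertions.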

Inconsistent data entry is a common data quality issue in most datasets. Examples include:

- Inconsistent formatting in datetime columns

- Inconsistent logging of categorical variable values

- Recording of readings in different units
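For the categorical case, one common approach is to normalize whitespace and case and then compare against a set of allowed values. The following sketch uses a hypothetical 'city' column, since the California housing features are all numeric.

```python
import pandas as pd

# Hypothetical categorical column with inconsistent spellings and casing
toy = pd.DataFrame({"city": ["San Jose", "san jose", " San Jose ", "Fresno", "Fersno"]})

ALLOWED = {"san jose", "fresno"}

# Normalize whitespace and case before comparing
normalized = toy["city"].str.strip().str.lower().rename("normalized")

# How many raw spellings collapse into each normalized value
raw_variants = toy.groupby(normalized)["city"].nunique()
print(raw_variants)

# Values that are still not allowed after normalization (likely typos)
unexpected = normalized[~normalized.isin(ALLOWED)]
print(unexpected.tolist())
```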

In our dataset, we've verified the data types of the columns and identified outliers. You can also run checks for inconsistent data entry.

Let's whip up a simple example to check whether all the date entries are formatted consistently.

Here we use regular expressions together with the pandas apply() method to check whether all date entries are in the YYYY-MM-DD format:

```python
import pandas as pd
import re

data = {'Date': ['2023-10-29', '2023-11-15', '23-10-2023', '2023/10/29', '2023-10-30']}
df = pd.DataFrame(data)

# Define the expected date format
date_format_pattern = r'^\d{4}-\d{2}-\d{2}$'  # YYYY-MM-DD format

# Function to check if a date value matches the expected format
def check_date_format(date_str, date_format_pattern):
    return re.match(date_format_pattern, date_str) is not None

# Apply the format check to the 'Date' column
date_format_check = df['Date'].apply(lambda x: check_date_format(x, date_format_pattern))

# Identify and retrieve entries that do not follow the expected format
non_adherent_dates = df[~date_format_check]

if not non_adherent_dates.empty:
    print("Entries that do not follow the expected format:")
    print(non_adherent_dates)
else:
    print("All dates are in the expected format.")
```

This returns the entries that do not follow the expected format:

```
Output >>>

Entries that do not follow the expected format:
         Date
2  23-10-2023
3  2023/10/29
```
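A regex only checks the shape of a string, so an impossible date such as '2023-02-30' would pass it. Another option is to parse the dates with pd.to_datetime and an explicit format. With errors="coerce", any entry that cannot be parsed becomes NaT. Here is a sketch on a made-up series:

```python
import pandas as pd

dates = pd.Series(["2023-10-29", "2023-11-15", "23-10-2023", "2023/10/29", "2023-02-30"])

# errors="coerce" turns anything that doesn't parse with the given format into NaT
parsed = pd.to_datetime(dates, format="%Y-%m-%d", errors="coerce")

# Entries that failed to parse: wrong layout or not a real calendar date
bad_entries = dates[parsed.isna()]
print(bad_entries)
```

This catches the two badly formatted entries and also '2023-02-30', which has the right shape but is not a valid date. Keep in mind that genuinely missing values would also show up as NaT, so you may want to check those separately.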

In this tutorial, we went over common data quality checks with pandas.

When you're working on smaller data analysis projects, these data quality checks with pandas are a good place to start. Depending on the problem and the dataset, you can include additional checks.

If you're interested in learning data analysis, check out the guide 7 Steps to Mastering Data Wrangling with Pandas and Python.
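To rerun several of these checks on each new batch of data, you might bundle them into a single function. The following is a minimal sketch. The quality_report helper is hypothetical and covers only missing values, duplicates, and 1.5 * IQR outliers on numeric columns.

```python
import numpy as np
import pandas as pd

def quality_report(frame):
    """Summarize a few basic data quality checks in one dictionary (a sketch)."""
    numeric = frame.select_dtypes(include="number")
    q1, q3 = numeric.quantile(0.25), numeric.quantile(0.75)
    iqr = q3 - q1
    # Boolean frame: True where a value falls outside its column's 1.5 * IQR fences
    is_outlier = (numeric < q1 - 1.5 * iqr) | (numeric > q3 + 1.5 * iqr)
    return {
        "n_rows": len(frame),
        "missing_per_column": frame.isnull().sum().to_dict(),
        "n_duplicate_rows": int(frame.duplicated().sum()),
        "outliers_per_column": is_outlier.sum().to_dict(),
    }

toy = pd.DataFrame({
    "MedInc": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 100.0],
    "HouseAge": [10.0, 12.0, np.nan, 15.0, 20.0, 22.0, 25.0, 30.0, 35.0, 40.0],
})
report = quality_report(toy)
print(report)
```

Comparing the numeric DataFrame against the per-column quantile Series aligns on column labels, so each column is tested against its own fences. Missing values compare as False and are not counted as outliers.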

Bala Priya C is a developer and technical writer from India. She likes working at the intersection of math, programming, data science, and content creation. Her areas of interest and expertise include DevOps, data science, and natural language processing. She enjoys reading, writing, coding, and coffee! Currently, she's working on learning and sharing her knowledge with the developer community by authoring tutorials, how-to guides, opinion pieces, and more.