It wasn’t that long ago that if you needed a processor with tons of cache buried inside it, then CPUs were the obvious choice. Now, even budget-level GPUs come packed with more internal memory than a high-end CPU from just a few years ago.

So, what changed? Why did graphics chips suddenly need more cache than a generalized, central processor? Is the specialized memory different between the two, and will we see GPUs in the future with gigabytes of cache?

To answer these questions, we need to peek under the hood of the latest chips and observe the changes over the years.

TL;DR: Why GPUs have gained so much cache

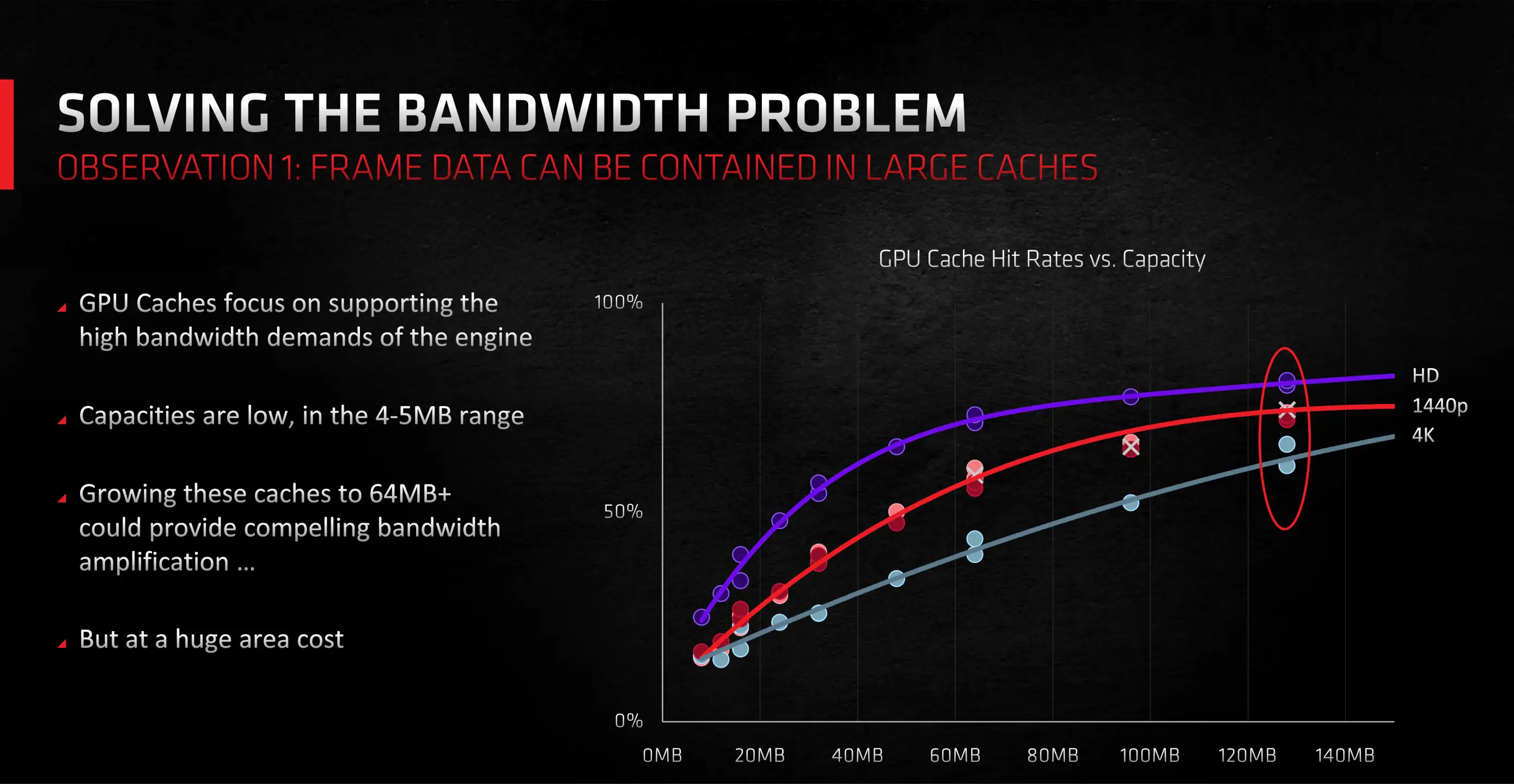

Low-level data caches have grown in size because GPUs are now used in a variety of applications, not just graphics. To improve their capabilities in general-purpose computing, graphics chips require larger caches. This ensures that no math core is left idle, waiting for data.

Last-level caches have expanded considerably to offset the fact that DRAM performance hasn’t kept pace with the advancements in processor performance. Substantial L2 or L3 caches reduce cache misses. This also prevents cores from being idle and minimizes the need for very wide memory buses.

Additionally, advances in rendering techniques, especially ray tracing, place immense demands on a GPU’s cache hierarchy. Large last-level caches are vital to ensure that game performance stays playable when these techniques are used.

Cache course 101

To tackle the topic of cache in full, we must first understand what cache is and why it matters. All processors require memory to store the numbers they crunch and the results of their calculations. They also need specific instructions on tasks, such as which calculations to perform. These instructions are stored and conveyed numerically.

This memory is commonly known as RAM (Random Access Memory). Every electronic device with a processor is equipped with RAM. For several decades, PCs have employed DRAM (the “D” stands for dynamic) as temporary storage for data, with disk drives serving as long-term storage.

Since its invention, DRAM has seen huge improvements, becoming exponentially faster over time. The same applies to data storage, with once-dominant but slow hard drives being replaced by swift solid-state storage (SSD). However, despite these advancements, both types of memory are still desperately slow compared to how quickly a basic processor can carry out a single calculation.

While a chip can add two numbers in a few nanoseconds, retrieving those values or storing the result can take hundreds to thousands of nanoseconds – even with the fastest available RAM. If there was no way to get around this, then PCs wouldn’t be that much better than those from the 1970s, even though they have much higher clock speeds.

Thankfully, there’s SRAM (Static RAM) to bridge this gap. SRAM is made from the same transistors as those in the processors performing the calculations. This means SRAM can be integrated directly into the chip and operate at the chip’s speed. Its proximity to the logic units shortens data retrieval or storage times to tens of nanoseconds.

The downside to this is that the arrangement of transistors needed for even a single memory bit, along with other necessary circuitry, occupies a considerable amount of space. Using current manufacturing techniques, 64 MB of SRAM would be roughly equivalent in size to 2 GB of DRAM.

That’s why contemporary processors incorporate various SRAM blocks – some minuscule, containing only a few bits, while others hold several MBs. These larger blocks bypass the slowness of DRAM, significantly boosting chip performance.

These memory types go by various names, based on their usage, but the most prevalent is known as “cache.” And this is where the discussion becomes a tad more complicated.

All hail the hierarchy

The logic units within a processor’s cores typically work on small pieces of data. The instructions they receive and the numbers they process are rarely larger than 64 bits. Consequently, the tiniest blocks of SRAM, which store these values, are similarly sized and are termed “registers.”

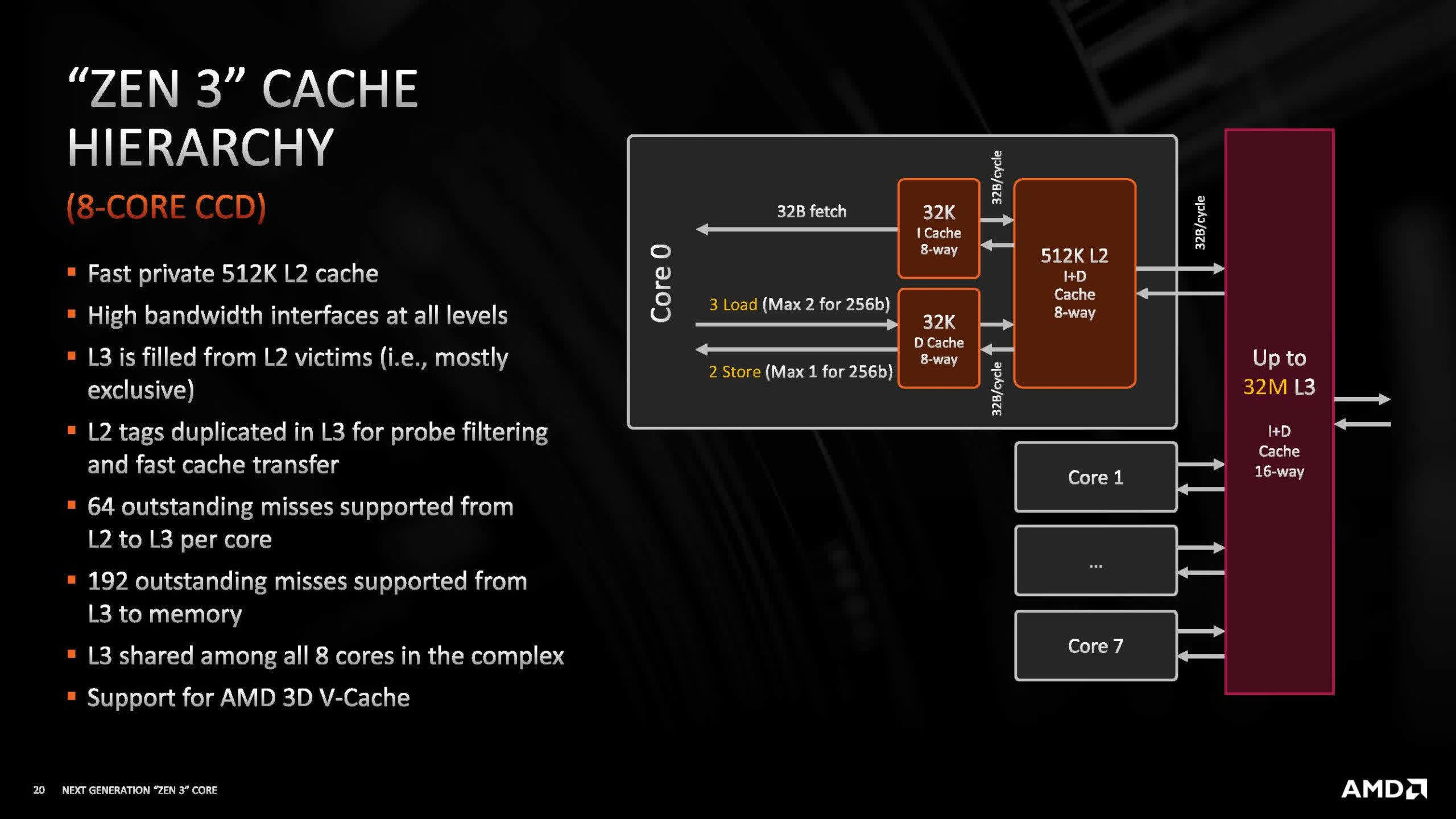

To ensure that these units don’t stall while awaiting the next set of commands or data, chips typically prefetch this information and retain frequently issued ones. This data is housed in two distinct SRAM sets, usually called the Level 1 Instruction and Level 1 Data caches. As the names imply, each holds a specific type of data. Despite their importance, they aren’t expansive. For instance, AMD’s recent desktop processors allocate 32 kB for each.

Although not very large, these caches are ample enough to hold a large number of commands and data, ensuring cores aren’t idling. However, to maintain this flow of data, the caches must be continually supplied. When a core requires a specific value not present in a Level 1 cache (L1), the L2 cache becomes crucial.

The L2 cache is a much larger block, storing a diverse range of data. Remember, a single core has multiple pipelines of logic units. Without the L2, the L1 caches would quickly be overwhelmed. Modern processors have multiple cores, prompting the introduction of another cache layer that serves all cores: the Level 3 (L3) cache. It’s even more expansive, spanning several MBs. Historically, some CPUs even featured a fourth level.
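To make the lookup order concrete, here is a toy model of a core checking L1, then L2, then L3, before falling back to DRAM. The latencies are hypothetical cycle counts, and the model is simplified: real chips overlap lookups and track data in cache lines rather than single addresses. The function name `access_cost` is invented for illustration.

```python
# Hypothetical latencies in core clock cycles; real figures vary from chip to chip.
LEVELS = [("L1", 4), ("L2", 14), ("L3", 50)]
DRAM_LATENCY = 300


def access_cost(address, contents):
    """Walk the hierarchy from L1 outward and return (level that hit, total cycles).

    `contents` maps a level name to the set of addresses it currently holds.
    Every level that misses still costs its lookup time before the next one is tried.
    """
    cycles = 0
    for name, latency in LEVELS:
        cycles += latency
        if address in contents.get(name, set()):
            return name, cycles
    return "DRAM", cycles + DRAM_LATENCY


contents = {"L1": {0x100}, "L2": {0x100, 0x200}, "L3": {0x100, 0x200, 0x300}}
print(access_cost(0x100, contents))  # ('L1', 4)
print(access_cost(0x300, contents))  # ('L3', 68)
print(access_cost(0x400, contents))  # ('DRAM', 368)
```

Every miss makes the request pay for the levels already checked plus the next one. That is why keeping the hot data in the small, fast levels matters so much.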

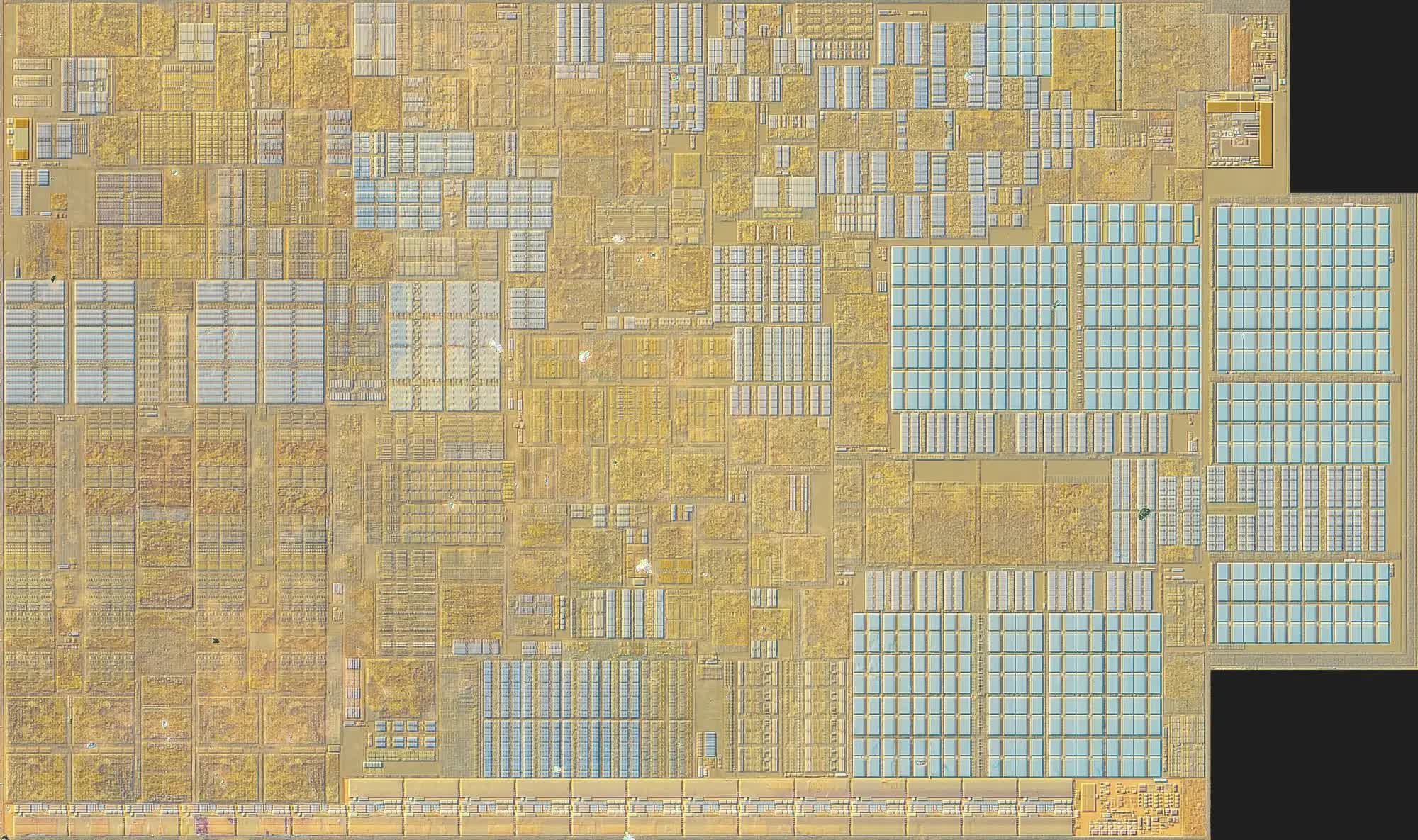

The above image shows a single P-core from one of Intel’s Raptor Lake CPUs. The various grids in pale blue, dotted about the structure, are a mixture of registers and various caches. You can see a more detailed breakdown of each section on this website. In essence, though, the L1 caches sit centrally in the core, while the L2 dominates the right-hand portion.

The last level of cache in processors often acts as the first port of call for any data coming from the system’s DRAM, before it gets transferred onward, but that’s not always the case. This is the part about cache that tends to get very complex, but it’s also crucial to understanding why CPUs and GPUs have very different cache arrangements.

The way the entire system of SRAM blocks gets used is known as the chip’s cache hierarchy. It varies enormously, depending on factors such as the age of the architecture and what sector the chip is targeted toward. But for a CPU, some aspects are always the same, and one of them is the hierarchy’s coherence.

Data in a cache may be replicated from the system’s DRAM. If a core modifies it, it’s crucial that the DRAM version is updated at the same time. As a result, CPU cache structures possess mechanisms to ensure data accuracy and timely updates. This intricate design adds to the complexity, and in the realm of processors, complexity translates to transistors and, therefore, space.
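The simplest policy that matches this description is a write-through cache, where every write goes to both the cached copy and DRAM. Real multi-core CPUs generally use write-back caches plus a coherence protocol (such as the MESI family) to keep every core’s copy consistent. Treat the hypothetical `WriteThroughCache` class as an illustration only.

```python
class WriteThroughCache:
    """Toy write-through cache: every write updates the cached copy and DRAM together."""

    def __init__(self, dram):
        self.dram = dram    # dict of address -> value, standing in for system memory
        self.lines = {}     # dict of address -> value, the cached copies

    def read(self, address):
        if address not in self.lines:             # miss: copy the value in from DRAM
            self.lines[address] = self.dram[address]
        return self.lines[address]

    def write(self, address, value):
        self.lines[address] = value               # update the cached copy...
        self.dram[address] = value                # ...and DRAM at the same time


dram = {0x10: 1}
cache = WriteThroughCache(dram)
cache.read(0x10)
cache.write(0x10, 42)
print(dram[0x10])  # 42
```

Even this toy needs extra bookkeeping on every write. Scaling that guarantee across many cores and several cache levels is where the extra transistors go.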

That’s why the first few levels of cache aren’t very large. SRAM is fairly space-hungry, and on top of that come all the additional systems required to keep it coherent. However, not every processor needs this, and there’s one very specific type that often eschews it altogether.

Cores over cache, the GPU approach

Today’s graphics chips, in terms of how their internals are organized and how they function, took shape in 2007. That’s when both Nvidia and ATI released their unified shader GPUs, but for the latter, the real change happened five years later.

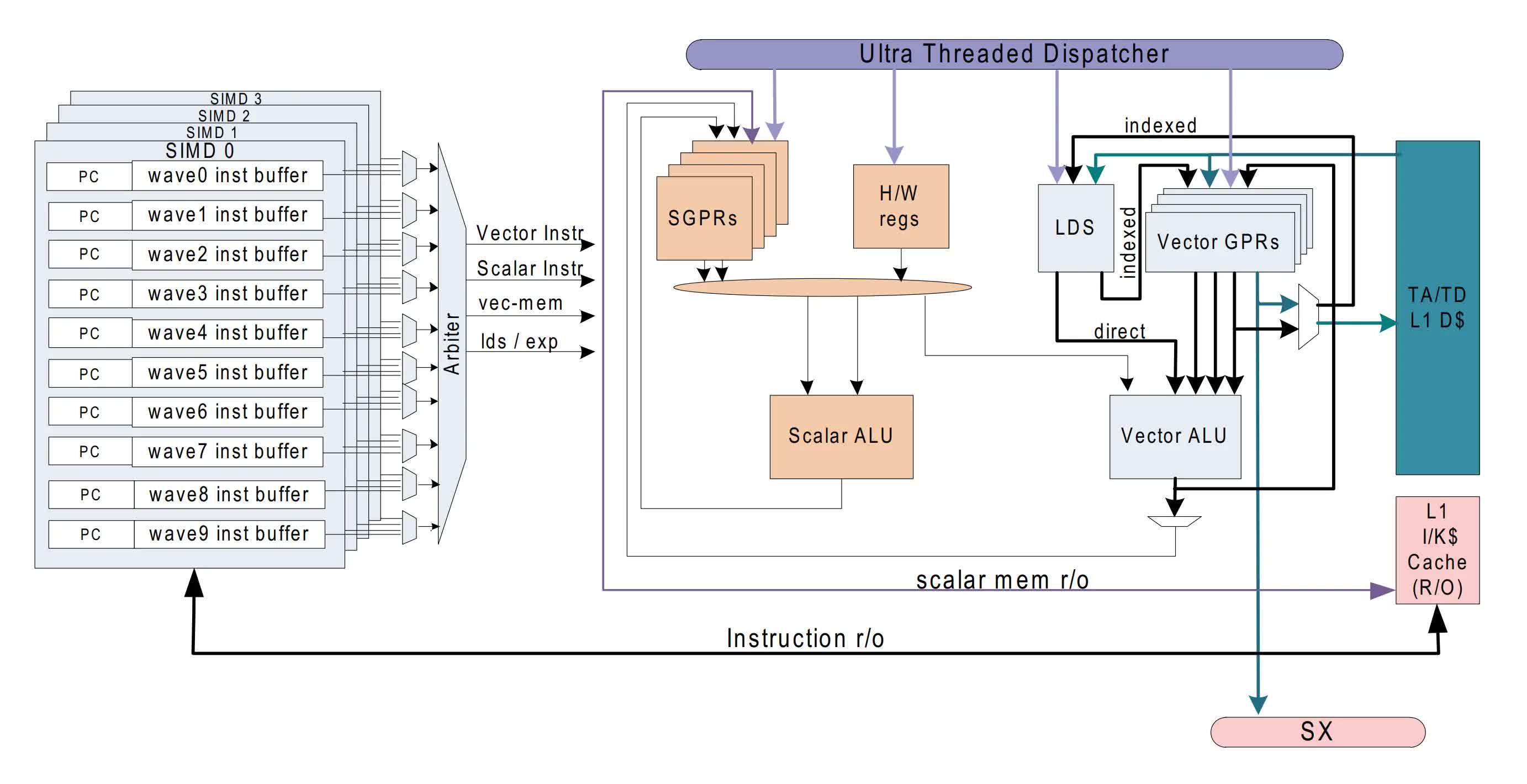

In 2012, AMD (which by then had acquired ATI) unveiled its Graphics Core Next (GCN) architecture. This design remains in use today, although it has undergone significant changes and has evolved into forms like RDNA and CDNA. We’ll reference GCN to explain the cache differences between CPUs and GPUs, as it presents a clear example.

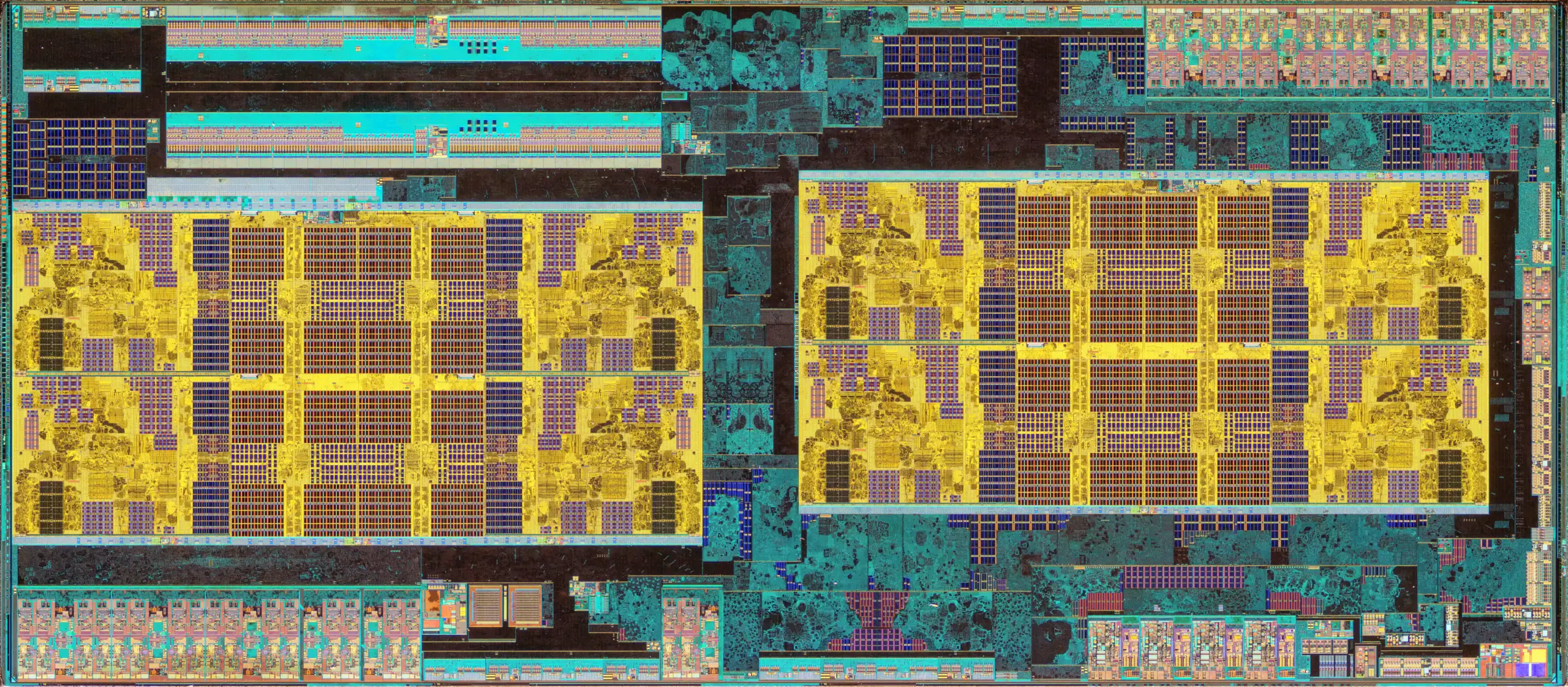

Jumping to 2017, let’s contrast AMD’s Ryzen 7 1800X CPU (above) with the Radeon RX Vega 64 GPU. The former houses 8 cores, with each core containing 8 pipelines. Four of these pipelines handle standard mathematical operations, two specialize in intensive floating-point calculations, and the last two oversee data management. Its cache hierarchy is structured as follows: 64 kB L1 instruction, 32 kB L1 data, and 512 kB L2 per core, plus 16 MB of L3 in total.

The Vega 64 GPU features 4 processing blocks. Each of these blocks contains 16 pipelines, more commonly termed Compute Units (CUs), and each CU in turn contains 4 sets of 16 logic units. Every CU has 16 kB of L1 data cache and 64 kB of scratchpad memory, which essentially functions as cache without the coherency mechanisms (AMD labels this the Local Data Share).

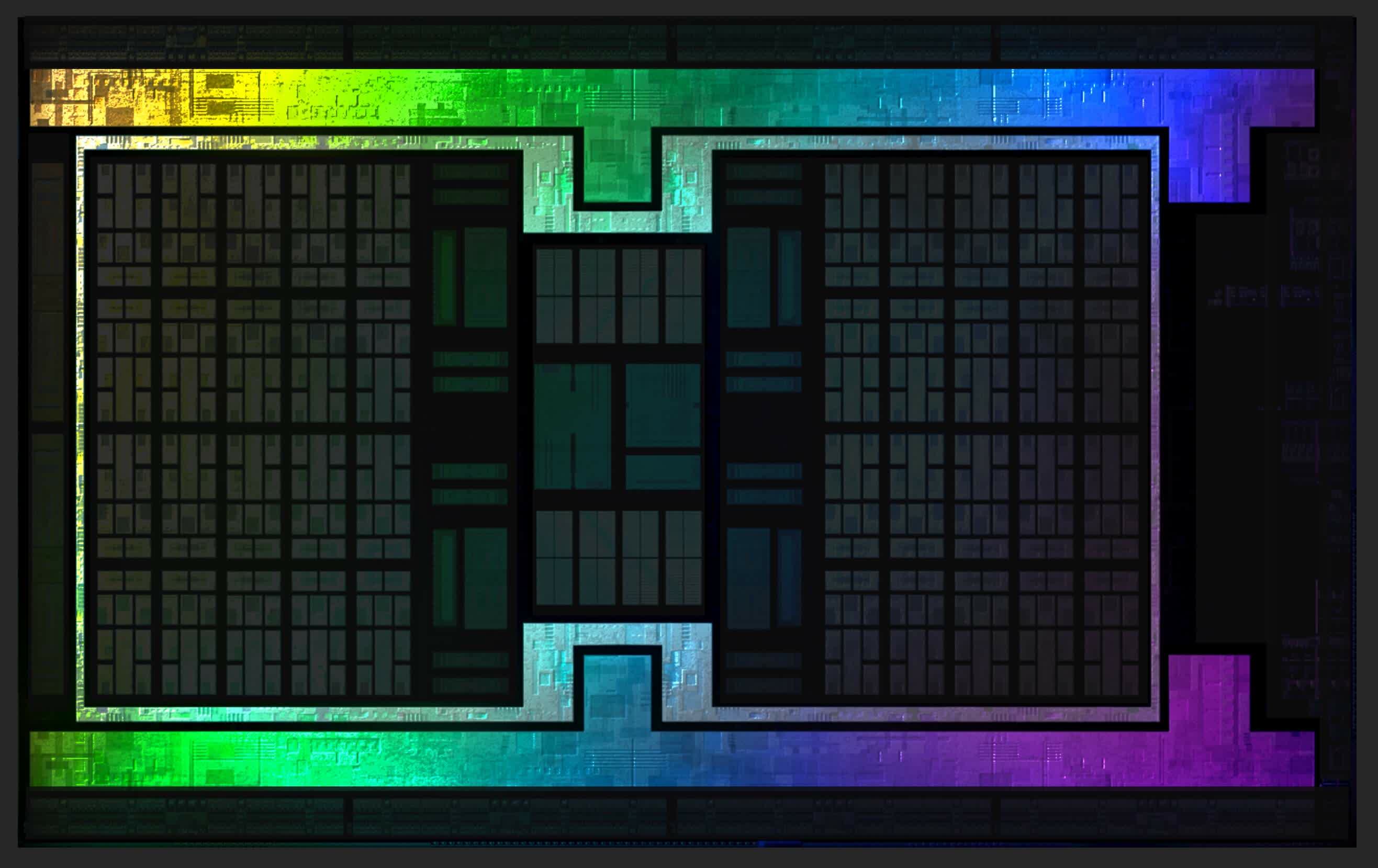

Additionally, there are two more caches (16 kB L1 instruction and 32 kB L1 data) that cater to groups of four CUs. The Vega GPU also boasts 4 MB of L2 cache, arranged in two strips, one along the bottom of the die shot and the other near the top.
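The GPU’s totals follow from multiplying out the unit counts. This sketch assumes 16 CUs per processing block (64 in total, as the Vega 64 name suggests) and ignores the small shared instruction and data caches. On the Ryzen side, it divides the 16 MB L3 evenly across the eight cores. It is a rough comparison of cache per math unit versus cache per core, not a measurement.

```python
# Vega 64 (Vega 10) unit counts, assuming 16 CUs per processing block
PROCESSING_BLOCKS = 4
CUS_PER_BLOCK = 16
SIMD_SETS_PER_CU = 4
LANES_PER_SET = 16

total_cus = PROCESSING_BLOCKS * CUS_PER_BLOCK
math_units = total_cus * SIMD_SETS_PER_CU * LANES_PER_SET

# Per-CU storage from the text, in kB (the small shared caches are ignored)
l1_total_kb = total_cus * 16     # 16 kB L1 data per CU
lds_total_kb = total_cus * 64    # 64 kB Local Data Share per CU
l2_total_kb = 4 * 1024           # 4 MB L2 for the whole GPU
vega_kb_per_unit = (l1_total_kb + lds_total_kb + l2_total_kb) / math_units

# Ryzen 7 1800X: per-core L1I + L1D + L2, plus an even share of the 16 MB L3
ryzen_kb_per_core = 64 + 32 + 512 + 16 * 1024 // 8

print(total_cus, math_units)     # 64 4096
print(vega_kb_per_unit)          # 2.25
print(ryzen_kb_per_core)         # 2656
```

Even if the Ryzen figure is split across each core’s eight pipelines, that is over 300 kB per pipeline, against a couple of kB per GPU math unit.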

This particular graphics processor is double the size of the Ryzen chip in terms of die area. However, its cache occupies remarkably less space than the CPU’s does. Why does this GPU have so little cache, especially in the L2 section, compared to the CPU?

Given its considerably higher number of ‘cores’ compared to the Ryzen chip, one might expect that a total of 4096 math units would need a substantial cache to maintain a steady supply of data. However, CPU and GPU workloads differ fundamentally.

While the Ryzen chip can handle up to 16 threads simultaneously and process 16 distinct instructions, the Vega processor might deal with a larger number of threads, but its CUs typically execute identical instructions.

Moreover, the math units within each CU synchronously perform identical computations during a cycle. This uniformity classifies them as SIMT (single instruction, multiple threads) devices. GPUs operate in a very linear fashion, rarely branching off into other processing paths.
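A rough way to picture SIMT execution: one instruction is applied to every lane of a group at once. When the code branches, the group issues both sides of the branch, and a mask decides which lanes keep each result. The helper names here are hypothetical, and the four-lane group is far narrower than the groups real GPUs use.

```python
def simt_step(op, lanes, active):
    """Issue one instruction to every lane in lockstep; inactive lanes keep their old value."""
    return [op(x) if on else x for x, on in zip(lanes, active)]


def run_branch(lanes):
    """Execute `if x < 0: x = -x else: x = x * 2` the SIMT way."""
    mask = [x < 0 for x in lanes]
    # Both sides of the branch are issued to the whole group; the mask picks who writes.
    lanes = simt_step(lambda x: -x, lanes, mask)
    lanes = simt_step(lambda x: x * 2, lanes, [not m for m in mask])
    return lanes


print(run_branch([3, -1, 4, -5]))  # [6, 1, 8, 5]
```

Each lane only needed one operation, but the group spent two steps. That is why GPUs favor code that rarely branches and why their cores can be kept simple.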

To put it another way, a CPU processes a diverse range of instructions while ensuring data coherence. By contrast, a GPU repetitively executes similar tasks, which removes the need for data coherence, constantly starting its operations afresh.

Because rendering 3D graphics consists largely of repetitive mathematical operations, a GPU doesn’t need to be as complex as a CPU. Instead, GPUs are designed to be massively parallel, processing thousands of data points simultaneously. That’s why they have smaller caches but far more cores than a central processor.

But if that’s the case, why do the latest graphics cards from AMD and Nvidia, even budget models, have enormous amounts of cache? The Radeon RX 7600 only has 2 MB of L2, but it also sports 32 MB of L3. Nvidia’s GeForce RTX 4060 doesn’t have L3, but it does come with 24 MB of L2.

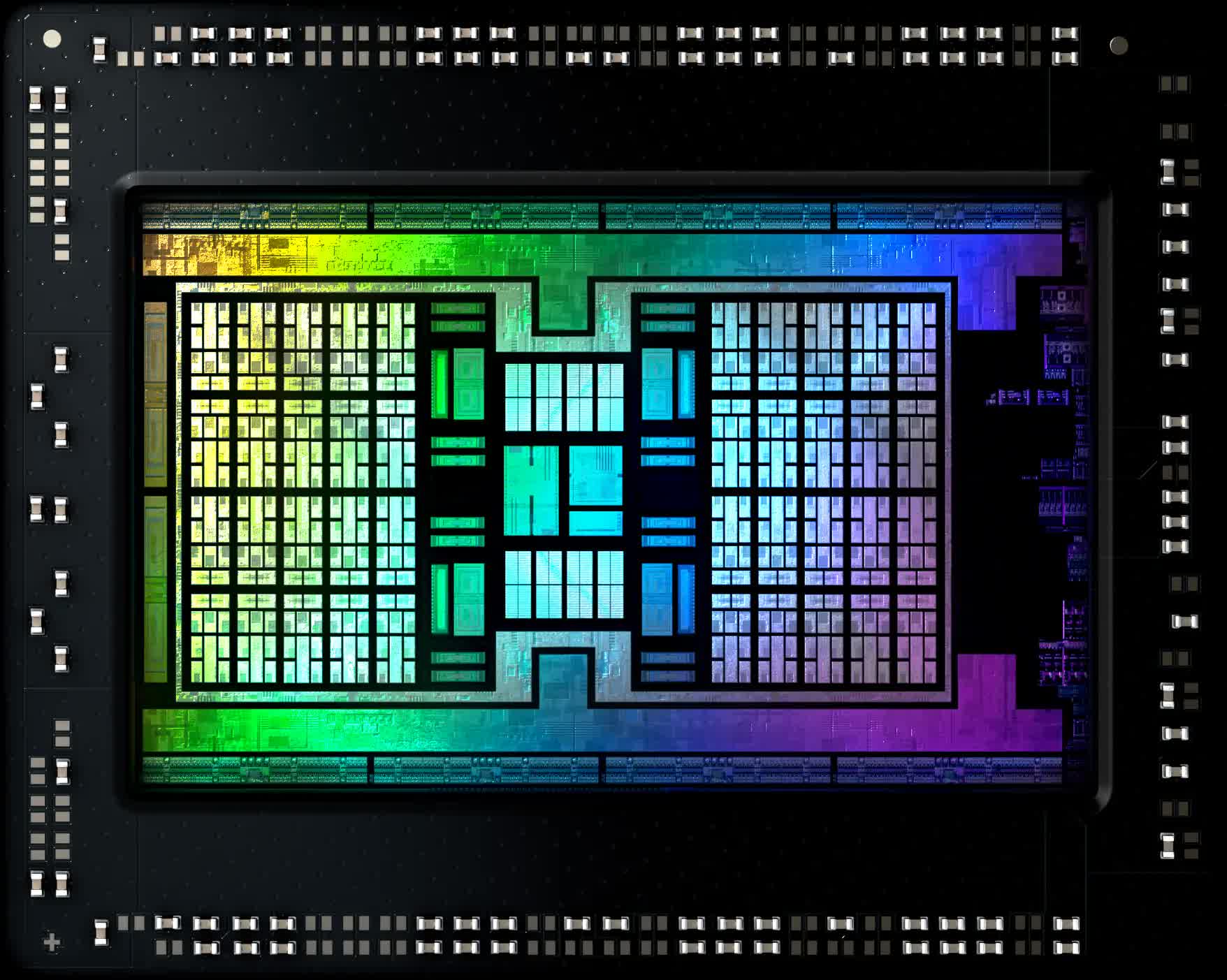

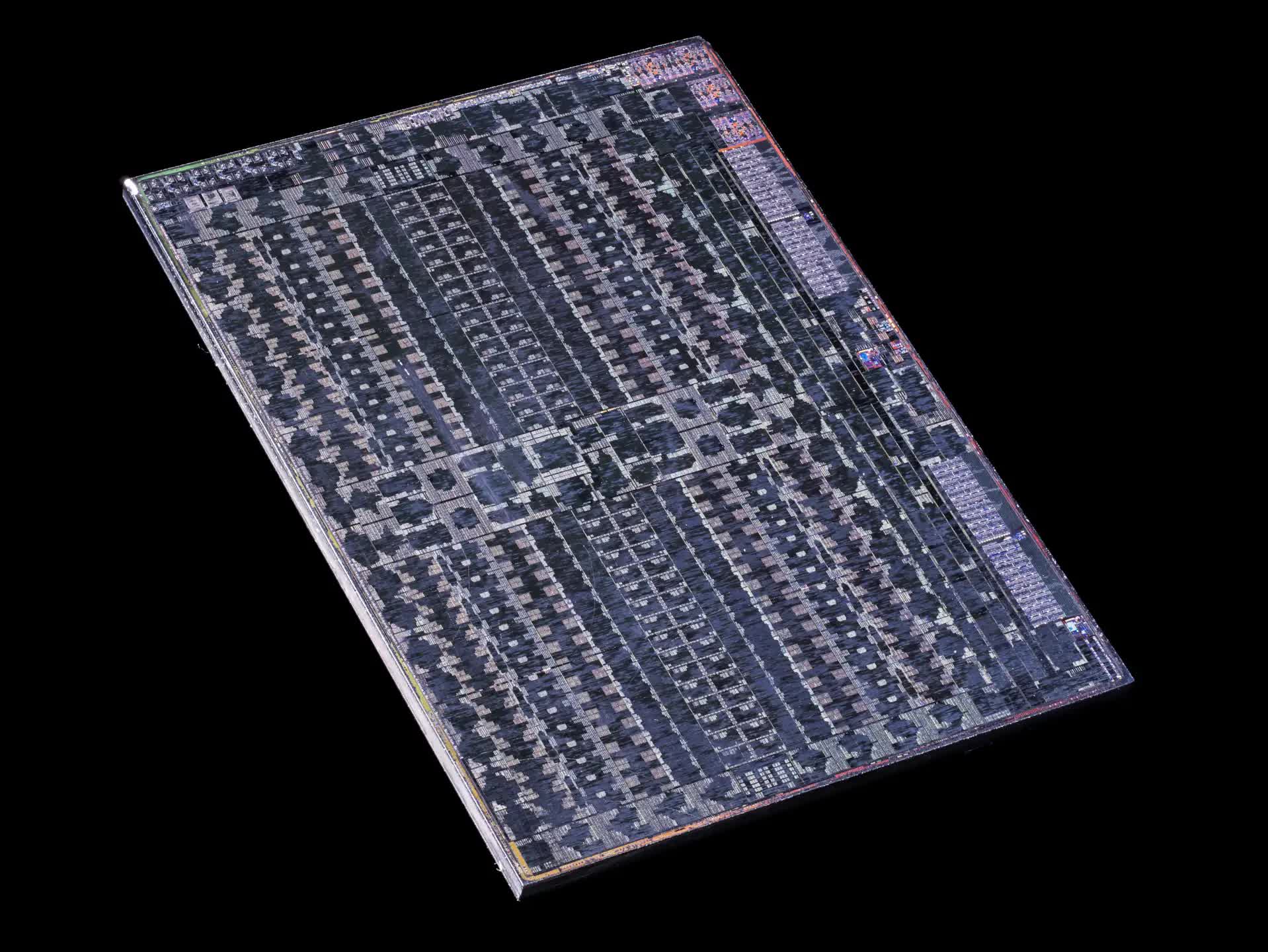

And when it comes to their halo products, the numbers are gigantic – the GeForce RTX 4090 boasts 72 MB of L2, and AMD’s Navi 21 chip (pictured) in the Radeon RX 6800 / 6900 cards wades in with 128 MB of L3!

There’s quite a lot to unpack here. For example, why did AMD keep the caches so small for so long, but then suddenly increase them in size and throw in a huge amount of L3 for good measure?

Why did Nvidia increase the L1 sizes so much but keep the L2 relatively small, only to copy AMD and go L2 cache crazy?

That G in GPU isn’t just graphics anymore

There are many reasons for this transformation, but for Nvidia, the shift was driven by changes in how its GPUs were being used. Although they’re called Graphics Processing Units, these chips were crafted to do far more than display impressive images on screens.

While the vast majority of GPUs excel at this function, these chips have ventured beyond the confines of rendering. They now handle mathematical loads in data processing and scientific algorithms across a spectrum of disciplines, including engineering, physics, chemistry, biology, medicine, economics, and geography. The reason? They’re exceptionally good at doing the same calculation on thousands of data points, all at the same time.

Although CPUs can also do this, a single GPU can be as efficient as several central processors for certain tasks. As Nvidia’s GPUs have become more general-purpose, both the number of logic units in each chip and their operating speeds have grown exponentially.

Nvidia’s debut in serious general-purpose computing was the Tesla C870 in 2007. The architecture of this card had just two levels in its cache hierarchy (one could technically argue for 2.5, but let’s sidestep that debate), and it ensured the L1 caches were large enough to continuously supply data to all the units. This was bolstered by ever-faster VRAM. The L2 cache grew in size too, though nothing like what we’re seeing now.

Nvidia’s first couple of unified shader GPUs got by with just 16 kB of L1 data (and a tiny amount for instructions and other values), but this jumped to 64 kB within a couple of years. For the past two architectures, GeForce chips have sported 128 kB of L1, and the company’s server-grade processors sport even more.

The L1 cache in these first chips only had to serve 10 logic units (8 general-purpose + 2 special-function). By the time the Pascal architecture appeared (roughly the same era as AMD’s RX Vega 64), the cache had grown to 96 kB for over 150 logic units.

This cache gets its data from the L2, of course, and as the number of clusters of those units increased with each generation, so too did the amount of L2 cache. However, since 2012, the amount of L2 per logic cluster (better known as a Streaming Multiprocessor, SM) has remained roughly the same, in the order of 70 to 130 kB. The exception is, of course, the latest Ada Lovelace architecture, and we’ll come back to this one in a moment.
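The per-SM figure is simple division. The check below assumes the commonly quoted configurations of the GeForce RTX 3090 Ti (full GA102: 84 SMs, 6 MB of L2) and the GeForce RTX 4090 (128 SMs, 72 MB of L2). With those numbers, Ampere sits inside that range and Ada Lovelace lands well outside it. The helper name is hypothetical.

```python
def l2_per_sm_kb(l2_mb, sm_count):
    """Average L2 capacity available per Streaming Multiprocessor, in kB."""
    return l2_mb * 1024 / sm_count


# Ampere: GeForce RTX 3090 Ti (full GA102), 6 MB of L2 across 84 SMs
print(round(l2_per_sm_kb(6, 84), 1))   # 73.1
# Ada Lovelace: GeForce RTX 4090, 72 MB of L2 across 128 SMs
print(l2_per_sm_kb(72, 128))           # 576.0
```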

For many years, AMD’s focus was heavily centered on its CPUs, and the graphics division was relatively small in terms of both staffing and budget. As a fundamental design, though, GCN worked really well, finding a home in PCs, consoles, laptops, workstations, and servers.

While perhaps not always the fastest GPUs one could buy, AMD’s graphics processors were more than good enough, and the cache structure of these chips seemingly didn’t need a serious update. But while CPUs and GPUs were improving in leaps and bounds, another piece of the puzzle was proving far harder to improve.

DRAM drags its heels

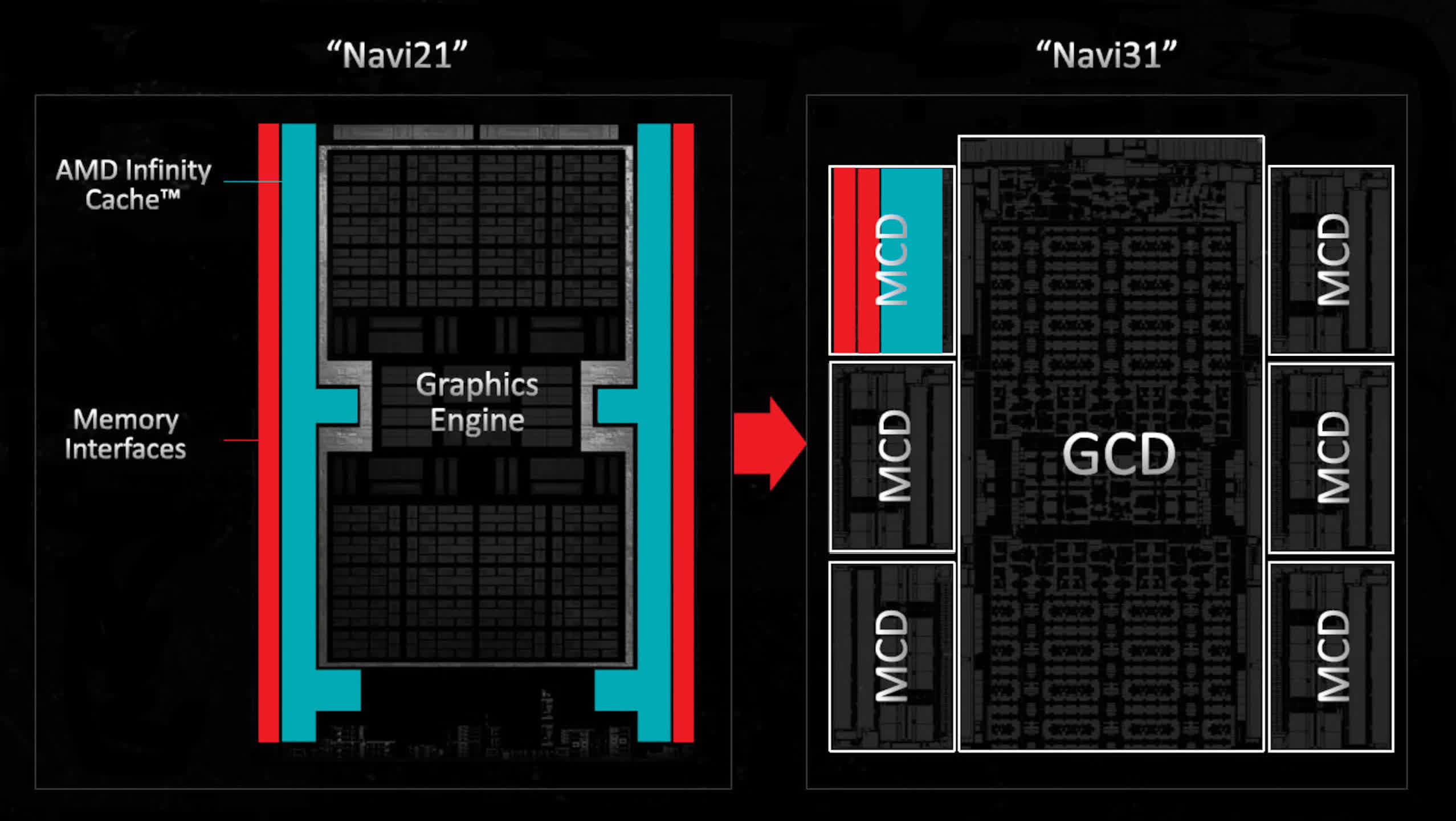

The successor to GCN was the 2019 RDNA architecture, for which AMD rejigged everything so that its new GPUs used three levels of cache, while still keeping them relatively small. Then, for its follow-up RDNA 2 design, AMD leveraged its expertise in CPU cache engineering to shoehorn a fourth level of cache into the die – one that was vastly larger than anything seen in a GPU before.

But why make such a change, especially when these chips were primarily geared for gaming and the GCN caches had seen minimal changes over the years?

The reasons are simple:

- Chip Size and Complexity: While incorporating more cache levels does complicate the chip design, it prevents the chips from becoming excessively large. A smaller chip means more units can be extracted from a single silicon wafer, making them cheaper to produce.

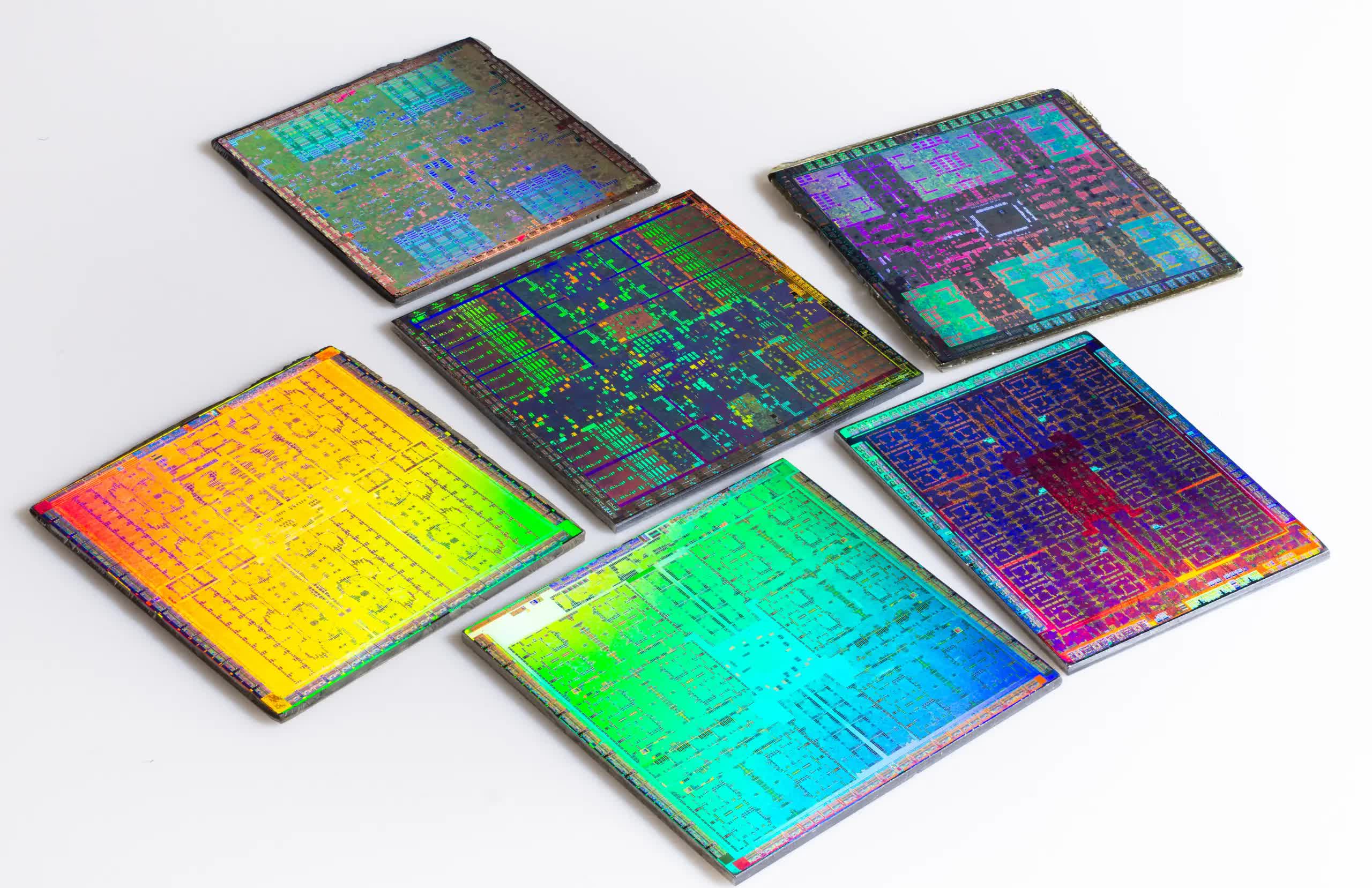

- Memory Speed vs. Processor Speed: Processor speeds have risen consistently over the years, but DRAM hasn’t matched that pace. For instance, in the Radeon RX Vega 64, AMD used High Bandwidth Memory (HBM) to boost the data transfer rate between the VRAM and GPU. These modules, shown above to the left of the main GPU die, are essentially multiple DRAM dies stacked together, allowing more data to be read or written per cycle. However, HBM is notably expensive. Ideally, graphics cards would have ample memory and numerous buses, all running at high speeds. But due to how DRAM is constructed, its performance couldn’t be scaled up to match that of a CPU or GPU.

When data needed for a computation isn’t present in the caches (usually referred to as a “cache miss”), it has to be fetched from the VRAM. This is slower than retrieving it from a cache, so the thread that needs the data simply stalls while it waits for DRAM.

This actually happens all the time, even with the latest graphics chips, but as they became increasingly powerful, cache misses were becoming a significant performance limit at high resolutions.
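The textbook way to reason about this is the average memory access time: hit time plus miss rate multiplied by miss penalty. This sketch uses made-up latencies and miss rates (hypothetical, not measured figures for any GPU). It shows why a bigger, slightly slower last-level cache can still win if it cuts the miss rate enough.

```python
def amat(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# Hypothetical figures: the larger cache is slower to hit but misses half as often
small_cache = amat(hit_time_ns=20, miss_rate=0.5, miss_penalty_ns=300)
large_cache = amat(hit_time_ns=30, miss_rate=0.25, miss_penalty_ns=300)
print(small_cache, large_cache)  # 170.0 105.0
```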

In GPUs, last-level caches are structured so that each VRAM module’s interface has its own dedicated SRAM slice. The rest of the processor uses a crossbar connection system to access any module. With GCN and the first RDNA designs, AMD typically used 256 or 512 kB L2 slices. But with RDNA 2, this surged to an impressive 16 to 32 MB per slice.
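One way to picture how an address finds its slice is simple interleaving. Consecutive chunks of memory rotate across the memory channels, and each channel’s cache slice only ever holds data for its own channel. The channel count and 256-byte granularity here are hypothetical, and real GPUs may use more elaborate address hashing.

```python
NUM_CHANNELS = 8          # hypothetical: eight memory interfaces, each with its own slice
INTERLEAVE_BYTES = 256    # hypothetical granularity at which addresses rotate across channels


def channel_for(address):
    """Pick which memory channel, and therefore which cache slice, owns an address."""
    return (address // INTERLEAVE_BYTES) % NUM_CHANNELS


print([channel_for(a) for a in range(0, 2304, 256)])  # [0, 1, 2, 3, 4, 5, 6, 7, 0]
```

Spreading consecutive chunks this way keeps all slices and channels busy when a workload streams through memory.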

This change not only significantly reduced thread delays caused by DRAM reads but also reduced the need for an ultra-wide memory bus. A wider bus requires a longer GPU die perimeter to accommodate all the memory interfaces.
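Peak DRAM bandwidth is simply bus width (in bytes) times the per-pin data rate, which is why widening the bus is the brute-force fix. A large last-level cache attacks the same problem from the other side, because only misses generate DRAM traffic. The RX 6900 XT figures are its published 256-bit, 16 Gbps GDDR6 configuration. The demand and hit-rate numbers are hypothetical, as are the helper names.

```python
def peak_bandwidth_gbs(bus_width_bits, data_rate_gbps):
    """Peak DRAM bandwidth in GB/s: bus width in bytes times the per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


def dram_traffic_gbs(demand_gbs, llc_hit_rate):
    """Only requests that miss the last-level cache have to go out to DRAM."""
    return demand_gbs * (1 - llc_hit_rate)


# Radeon RX 6900 XT: 256-bit bus, 16 Gbps GDDR6
print(peak_bandwidth_gbs(256, 16))     # 512.0
# Hypothetical: 1000 GB/s of requests, half of them served by the L3
print(dram_traffic_gbs(1000, 0.5))     # 500.0
```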

While massive caches can be cumbersome and slow due to inherently long latencies, AMD’s design was the opposite. The hulking L3 cache let the RDNA 2 chips perform as if they had wider memory buses, all while keeping die sizes under control.

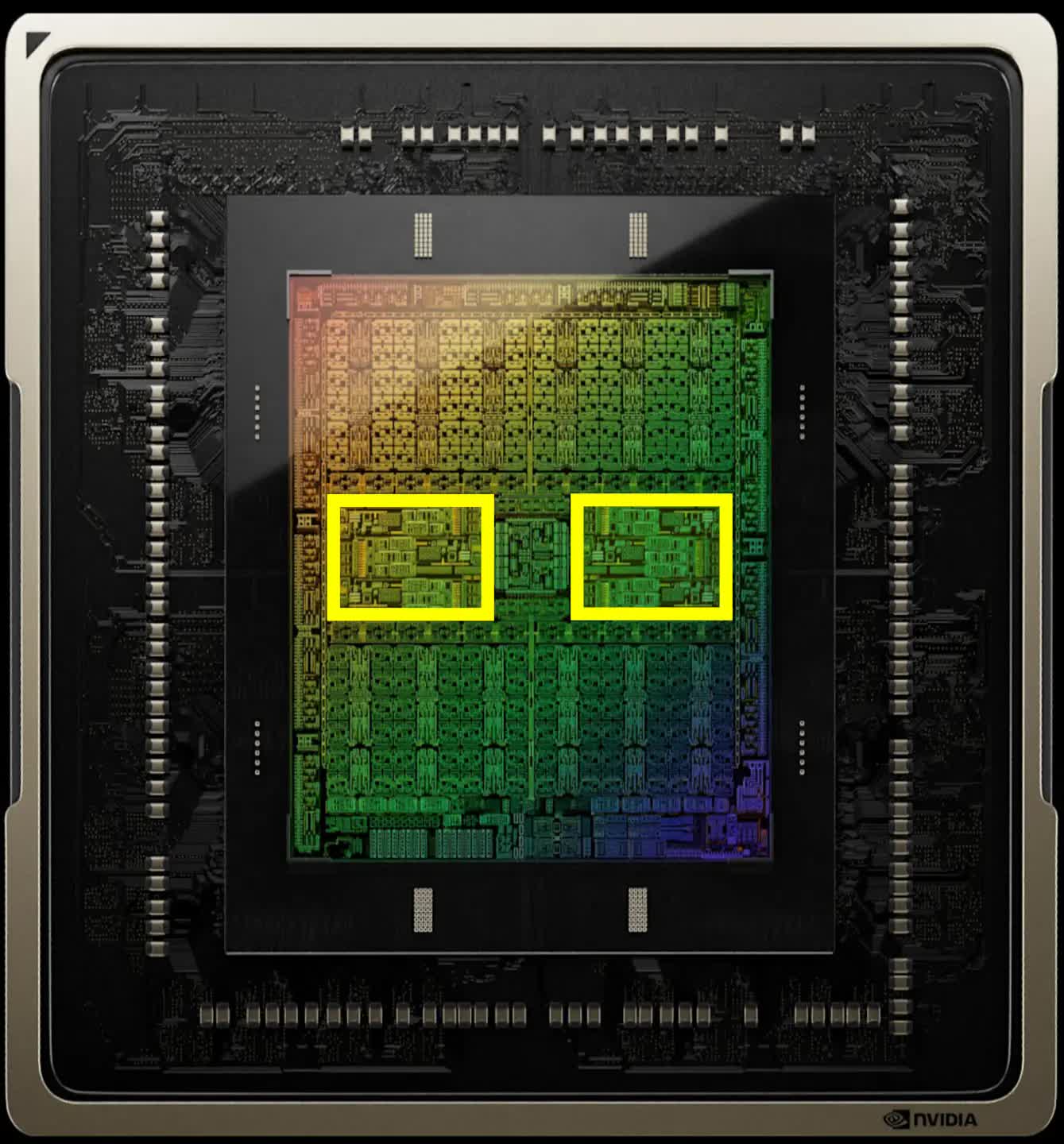

Nvidia followed suit with its latest Ada Lovelace generation, and for the same reasons. The previous Ampere design had a maximum L2 cache size of 6 MB in its largest consumer-grade GPU, but this was significantly increased in the new design. The full AD102 die, a cut-down version of which is used in the RTX 4090, contains 96 MB of L2 cache.

As to why Nvidia didn’t just add another level of cache and make that one extremely large, it’s possibly down to not having the same level of expertise in this area as AMD, or perhaps not wanting to look like it was directly copying its rival. When one looks at the die, shown above, all that L2 cache doesn’t actually take up much space anyway.

In addition to the rise in general-purpose GPU computing, there’s another reason why the last-level cache is now so large, and it has everything to do with the latest hot topic in rendering: ray tracing.

Big graphics need big data

Without going into too much detail, ray tracing, as used in the latest games, involves carrying out what appears to be a fairly simple algorithm. It draws a line from the position of the camera in the 3D world, through one pixel of the frame, and traces its path through space. When the ray interacts with an object, the algorithm checks what it is and whether it’s visible, and from there works out what color to make the pixel.

There’s more to it than that, but that’s the fundamental process. One aspect that makes ray tracing so demanding is the object checking. Working out all of the details about the object that the ray has reached is a colossal task, so to help speed up the routine, something called a bounding volume hierarchy (BVH for short) is used.

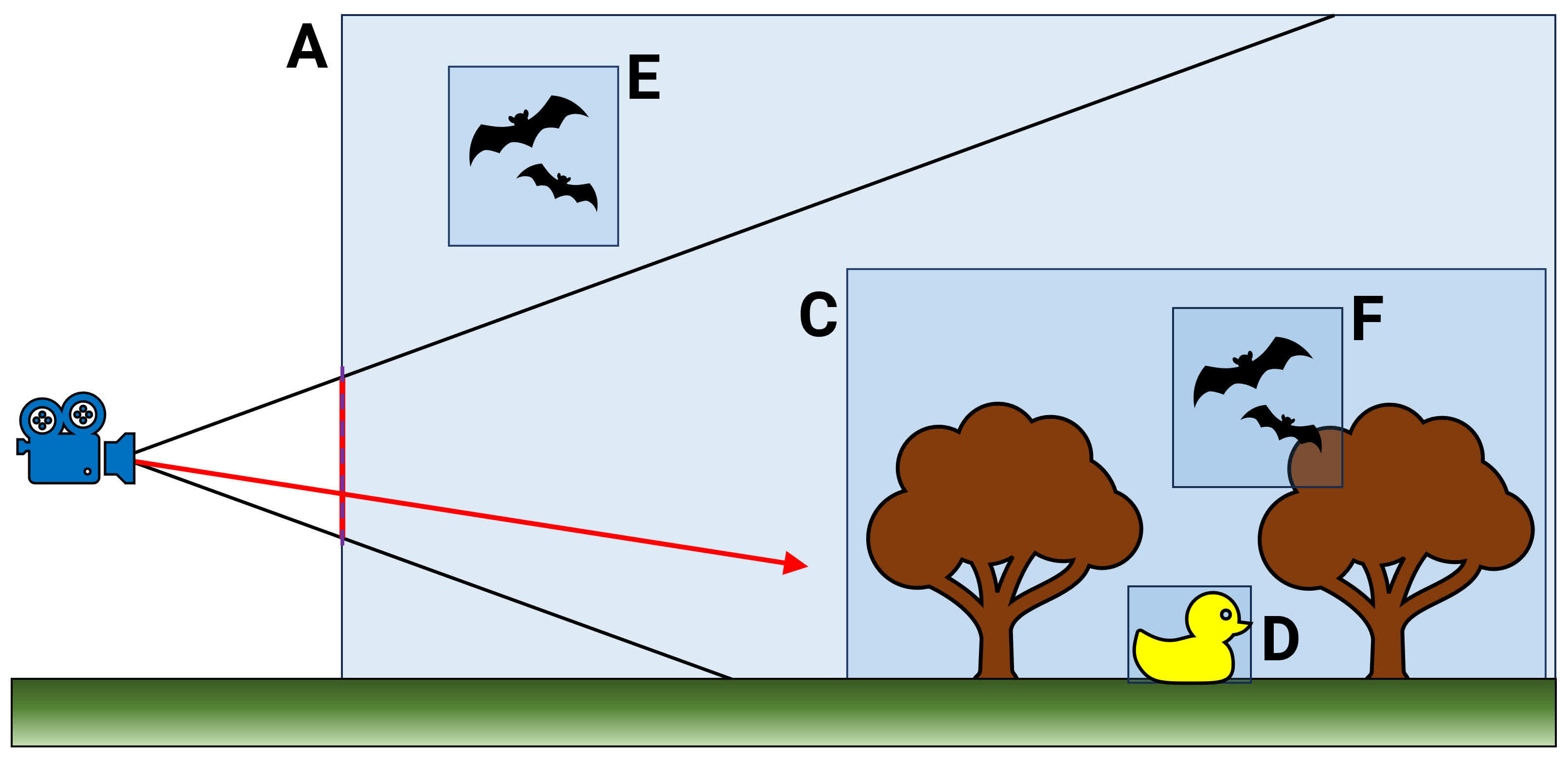

Think of this as a large database of all the objects used within a 3D scene. Each entry provides information about what the structure is and also about how it relates to the other objects. Take the above (and extremely over-simplified) example.

The top of the hierarchy begins with volume A. Everything else is contained within that, but note that volume E is outside of volume C, which itself contains D and F. When a ray is cast out into this scene (the purple arrow), the hierarchy is traversed, checking which volumes the ray’s path passes through.

However, the BVH is organized like a tree, and the traversal only needs to follow branches where the check results in a hit. So volume E can be rejected immediately, as it’s not part of C, which the ray clearly passes through. Of course, a BVH in a modern game is vastly more complex.
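The pruning idea fits in a few lines of code. This is a hypothetical 2D version of the figure’s hierarchy, using axis-aligned boxes and the standard slab test. Real games work in 3D, on far deeper trees, with traversal handled by dedicated hardware. An extra child, G, is added inside E purely to show that a rejected volume’s children are never examined, and all coordinates and helper names are invented for illustration.

```python
def ray_hits_box(origin, direction, box):
    """2D slab test: does origin + t * direction, for some t >= 0, pass through the box?"""
    (lo_x, lo_y), (hi_x, hi_y) = box
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in ((origin[0], direction[0], lo_x, hi_x),
                         (origin[1], direction[1], lo_y, hi_y)):
        if d == 0:
            if o < lo or o > hi:   # ray runs parallel to this slab and lies outside it
                return False
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
    return t_near <= t_far


def node(name, lo, hi, children=()):
    """A BVH node: a named axis-aligned box plus its child volumes."""
    return {"name": name, "box": (lo, hi), "children": list(children)}


def traverse(n, origin, direction, tested, hits):
    """Depth-first traversal that only descends into volumes the ray actually enters."""
    tested.append(n["name"])
    if not ray_hits_box(origin, direction, n["box"]):
        return                     # miss: the whole subtree below is skipped
    if not n["children"]:
        hits.append(n["name"])     # leaf reached: its triangles would be tested next
    for child in n["children"]:
        traverse(child, origin, direction, tested, hits)


# Hypothetical layout loosely mirroring the figure: C holds D and F, E sits outside C
scene = node("A", (0, 0), (10, 10), [
    node("C", (0, 0), (6, 6), [node("D", (1, 1), (3, 3)), node("F", (4, 4), (6, 6))]),
    node("E", (7, 7), (10, 10), [node("G", (8, 8), (9, 9))]),
])

tested, hits = [], []
traverse(scene, origin=(-1, 2), direction=(1, 0), tested=tested, hits=hits)
print(tested)  # ['A', 'C', 'D', 'F', 'E']
print(hits)    # ['D']
```

G never appears in `tested`, because the ray missed its parent E. In a real scene, skipping a branch skips every triangle beneath it.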

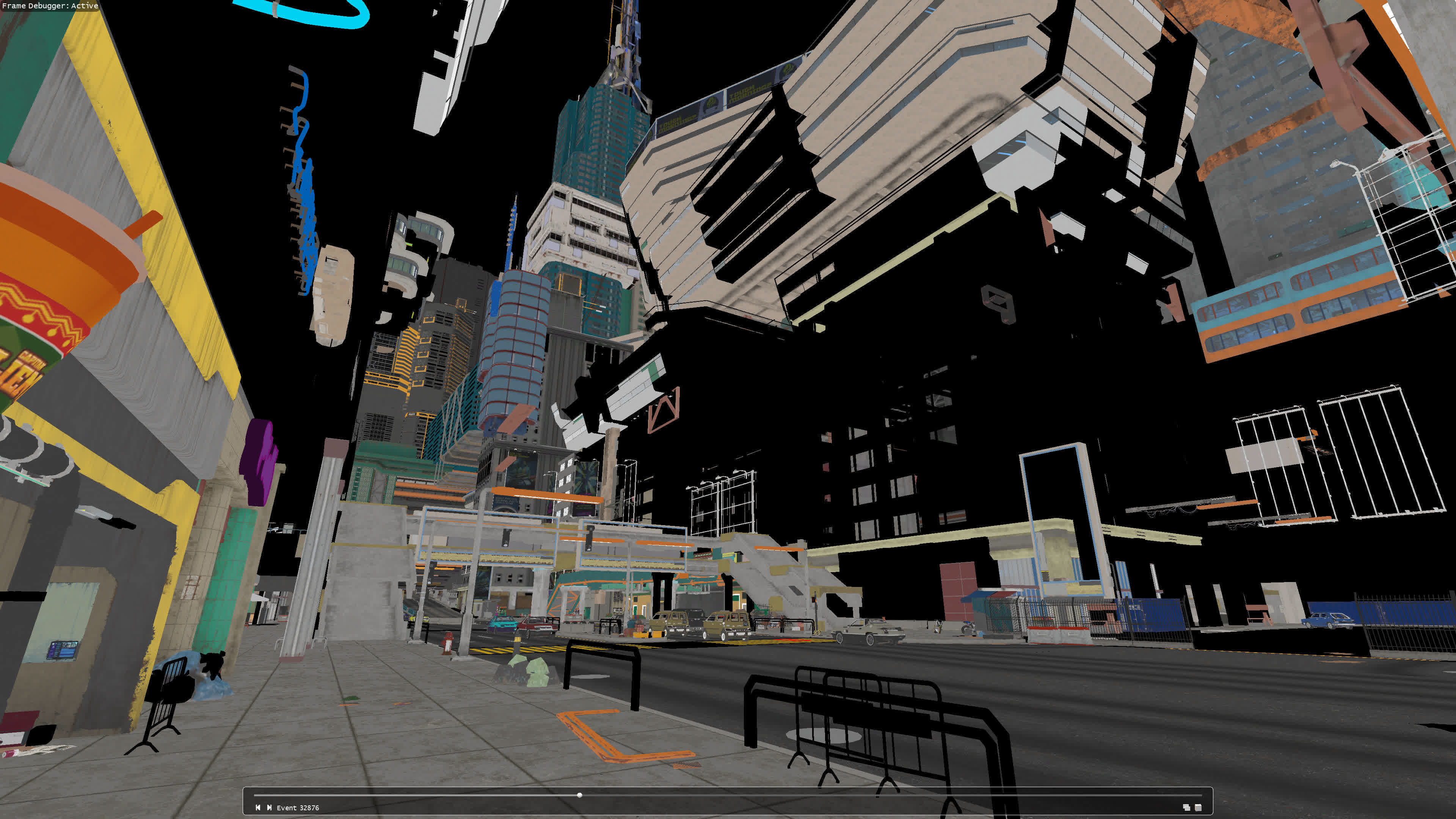

For the above image, we took a snapshot of Cyberpunk 2077, pausing the game’s rendering mid-frame to show how any given scene is built up through successive layers of triangles.

Now, try to imagine tracing a line from your eye, through a pixel on the monitor, and then trying to determine exactly which triangle(s) will intersect with the ray. That’s why the BVH is so important: it greatly speeds up the whole process.

In this particular game, like many that use ray tracing to light an entire scene, the BVH comprises multiple databases of two types: top-level acceleration structures (TLAS) and bottom-level acceleration structures (BLAS).

The former is essentially a large overview of the entire world, not just the very small part we’re looking at. On a PC that uses an Nvidia graphics card, it looks something like this:

We’ve zoomed in a bit to show you some of the detail it contains, but as you can see, it’s very large: almost 18 MB in size. Note how the list is one of instances, and each one comprises at least one BLAS. The game only uses two TLAS structures (the second is far smaller), but there are many thousands of BLAS in total.

The one below is for an item of clothing that might be worn by a character in the scene. It might seem ridiculous to have so many, but thanks to the hierarchy, if this particular BLAS isn’t inside a larger parent structure that lies in the ray’s path, it will never be checked or used in the coloring stage of the rendering.

For our snapshot of Cyberpunk 2077, a total of 11,360 BLAS are used, taking up vastly more memory than the TLAS. However, with GPUs now sporting large amounts of cache, there’s enough room to store the latter in this SRAM and transfer many of the relevant BLAS across from the VRAM, making the process of ray tracing much faster.

The so-called holy grail of rendering is still really only available to those with the best graphics cards. Even then, additional technologies (such as image upscaling and frame generation) are employed to bring overall performance into the realm of playable.

BVHs, thousands of cores, and dedicated ray tracing units in GPUs make all of this possible, but monstrous caches provide a much-needed boost to it all.

Contenders for the crown

Once a few more generations of GPU architectures have come and gone, graphics chips with massive L2 or L3 caches will be the norm rather than a unique selling point for a new design. GPUs will continue to be used in broad general-purpose scenarios, ray tracing will become increasingly prevalent in games, and DRAM will still lag behind the advancements in processor technologies.

That said, GPUs won’t have it all their own way when it comes to packing in the SRAM. In fact, there are already a couple of exceptions.

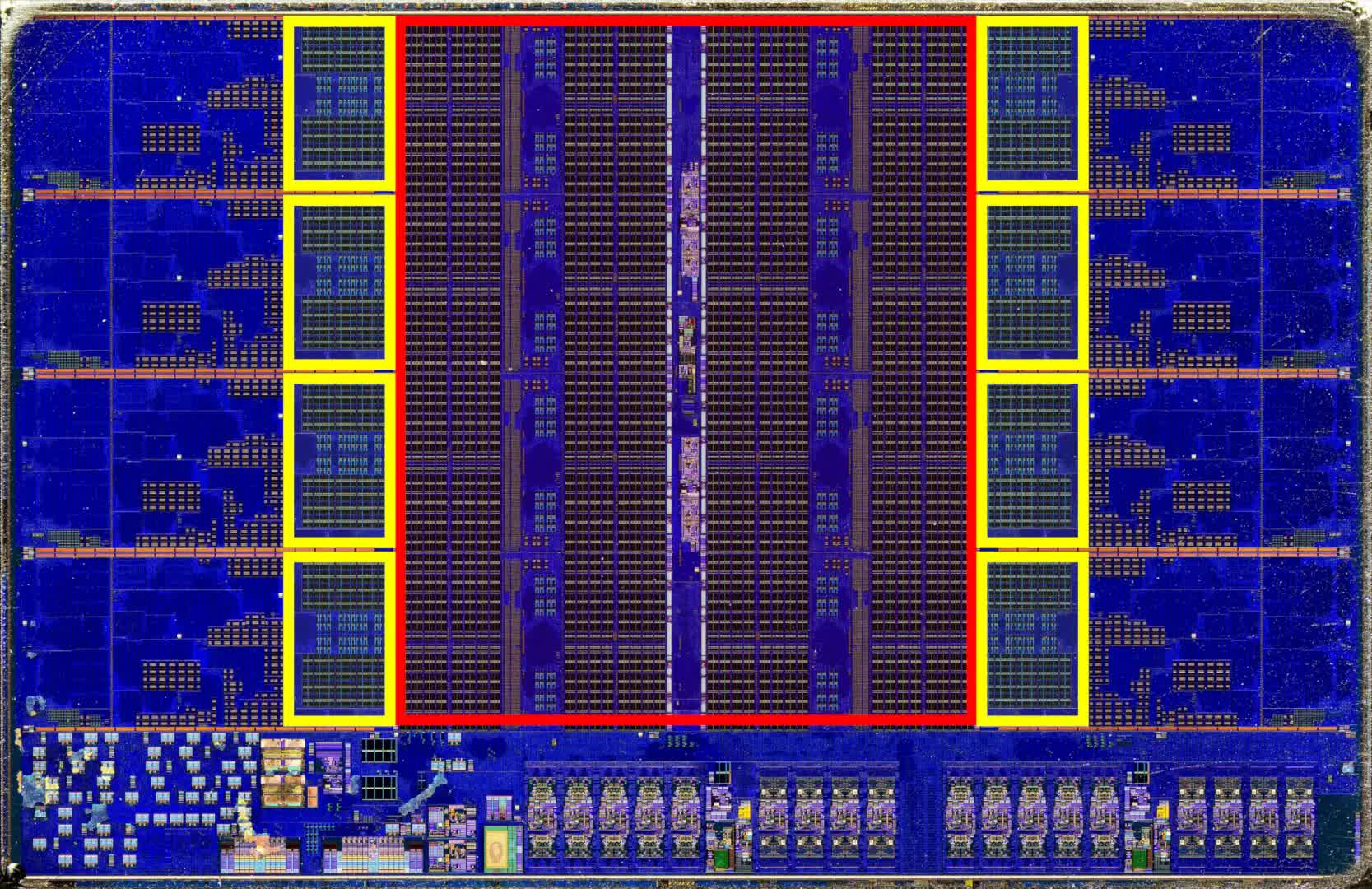

We’re not talking about AMD’s X3D range of Ryzen CPUs, even though the Ryzen 9 7950X3D comes with an astonishing 128 MB of L3 cache (Intel’s largest consumer-grade CPU, the Core i9-13900K, gets by with just 36 MB). It’s still an AMD product, though: specifically, the latest entries in its EPYC 9000 series of server processors.

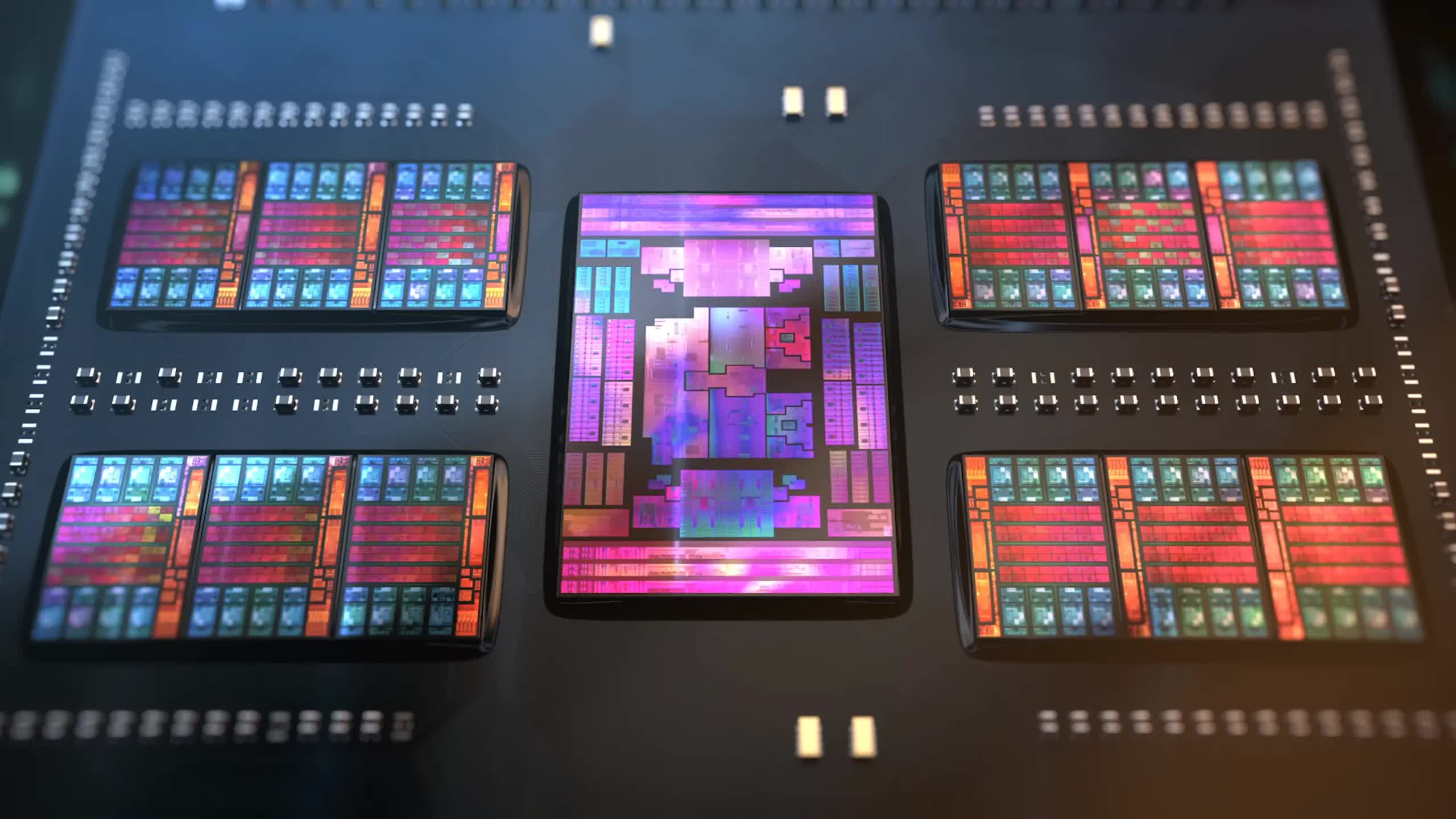

The $14,756 EPYC 9684X (above) comprises 13 chiplets, twelve of which house the processor’s cores and cache. Each of these contains 8 cores and a 64 MB slice of AMD’s 3D V-Cache on top of the chiplet’s built-in 32 MB of L3 cache. Put all together, that’s a mind-boggling total of 1152 MB of last-level cache! Even the 16-core version (the 9174F) boasts 256 MB, though it’s still not what you’d call cheap, at $3,840.
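The totals are straightforward sums over the core chiplets. As a sanity check, the sketch also covers the Ryzen 9 7950X3D mentioned earlier. Its 128 MB comes from one chiplet with stacked V-Cache (32 + 64 MB) and one without (32 MB). The function name is hypothetical.

```python
def total_l3_mb(ccds):
    """Sum the L3 of each core chiplet: built-in L3 plus any stacked 3D V-Cache."""
    return sum(base + stacked for base, stacked in ccds)


epyc_9684x = [(32, 64)] * 12          # twelve 8-core chiplets, each 32 MB + 64 MB V-Cache
ryzen_7950x3d = [(32, 64), (32, 0)]   # only one of its two chiplets carries V-Cache

print(total_l3_mb(epyc_9684x))        # 1152
print(total_l3_mb(ryzen_7950x3d))     # 128
```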

Of course, such processors aren’t designed for mere mortals and their gaming PCs. Their physical size, price tag, and power consumption are all so large that we’re not going to see anything like them in an ordinary desktop computer for many years.

Part of the reason is that, unlike the circuitry used for logic units, SRAM is getting increasingly hard to shrink with each new process node (the method by which chips are manufactured). AMD’s EPYC processors have so much cache simply because there are lots of chips under the heat spreader.

All GPUs will probably go down a similar route at some point, and AMD’s top-end Radeon RX 7000 models already do, with the memory interfaces and associated L3 cache slices housed in separate chiplets from the main processing die.

There are diminishing returns from ever larger caches, though, so don’t expect to see GPUs sporting gigabytes’ worth of cache any time soon. Even so, the recent changes are quite remarkable.

Twenty years ago, graphics chips had very little cache in them, just a handful of kB of SRAM here and there. Now, for less than $400, you can pick up a graphics card with so much cache that you could fit the entirety of the original Doom inside it – twice over!

GPUs really are the kings of cache.