Picture by Writer. Base picture from pch-vector.

Pure Language Processing —or NLP-has developed enormously, and GPT fashions are on the forefront of this revolution.

Right this moment LLM fashions can be utilized in all kinds of functions.

To keep away from pointless duties and improve my workflow, I started exploring the opportunity of coaching GPT to formulate SQL queries for me.

And that is when a superb thought appeared:

Utilizing the ability of GPT fashions in deciphering pure language and reworking it into structured SQL queries.

Might this be potential?

Let’s uncover all of it collectively!

So let’s begin from the start…

A few of you is likely to be already aware of the idea of few shot prompting, whereas others might need not heard of it by no means earlier than.

So…What’s it?

The fundamental thought right here is to make use of some specific examples-or shots-to information the LLM to reply in a particular manner.

For this reason it’s known as Few Shot prompting.

To place it merely, by showcasing a number of examples of the consumer input-sample prompts-along with the specified LLM output, we will educate the mannequin to ship some enhanced output that follows our preferences.

By doing so we’re increasing the information of the mannequin on some particular area to generate some output that aligns higher with our desired activity.

So let’s exemplify this!

All through this tutorial, I’ll be utilizing a predefined perform known as chatgpt_call() to immediate the GPT mannequin. If you wish to additional perceive it, you go verify the next article.

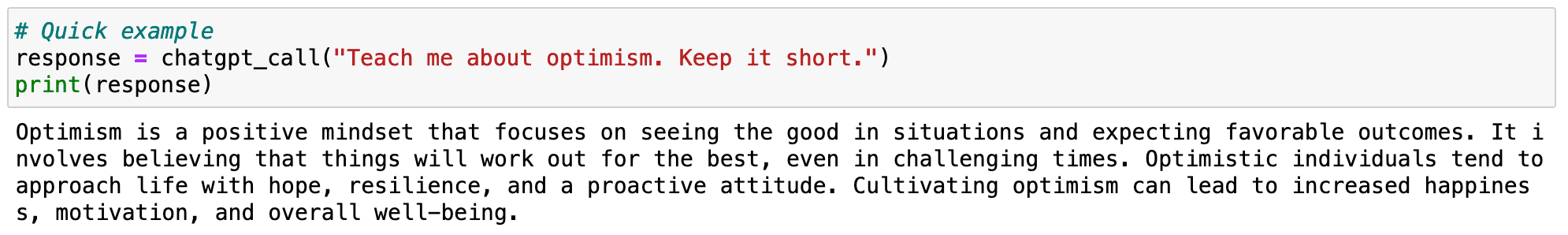

Think about I need ChatGPT to explain the time period optimism.

If I merely ask GPT to explain it, I’ll get hold of a serious-and boring-description.

## Code Block

response = chatgpt_call("Train me about optimism. Preserve it brief.")

print(response)

With the corresponding output:

Screenshot of my Jupyter Pocket book. Prompting GPT.

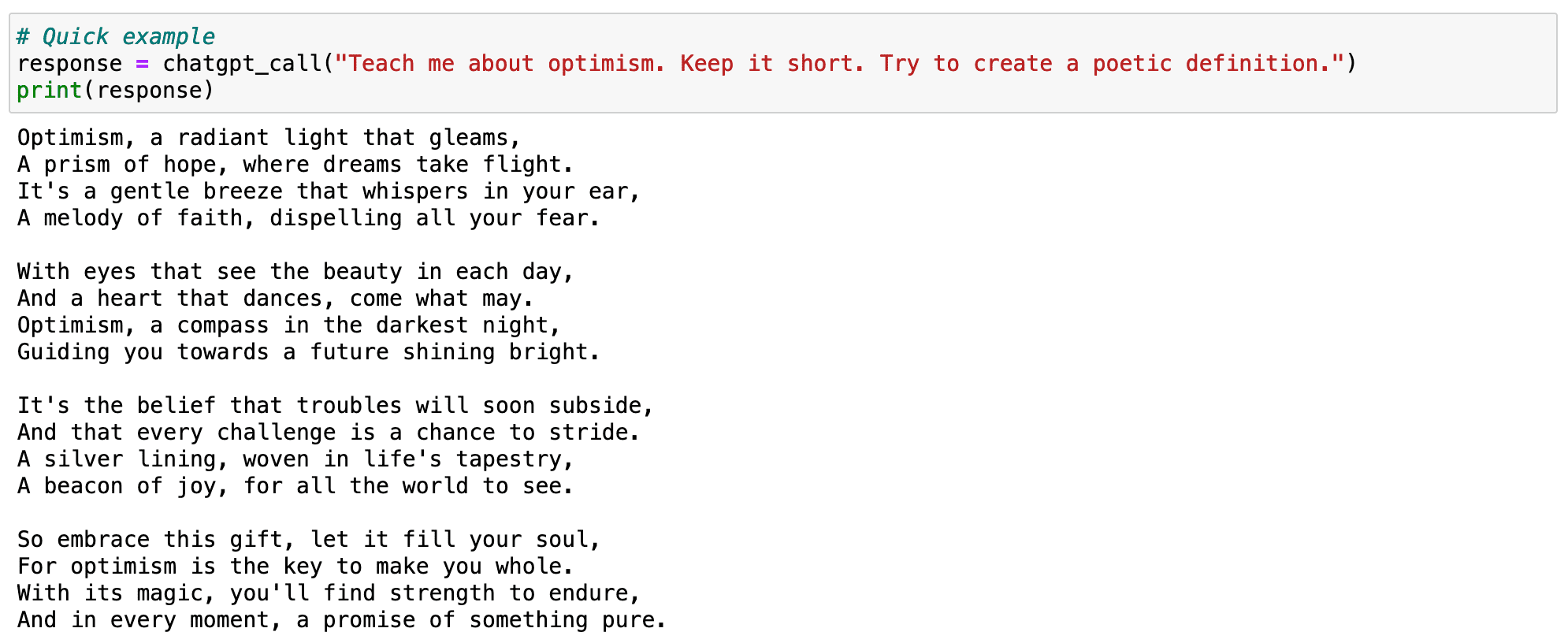

Nonetheless, think about I might moderately prefer to get one thing extra poetic. I can add to my immediate some extra element specifying that I need a poetic definition.

## Code Block

response = chatgpt_call("Train me about optimism. Preserve it brief. Attempt to create a poetic definition.")

print(response)

However this second output appears to be like similar to a poem and has nothing to do with my desired output.

Screenshot of my Jupyter Pocket book. Prompting GPT.

What can I do?

I may element much more the immediate, and maintain iterating till I obtain some good output. Nonetheless, this might take numerous time.

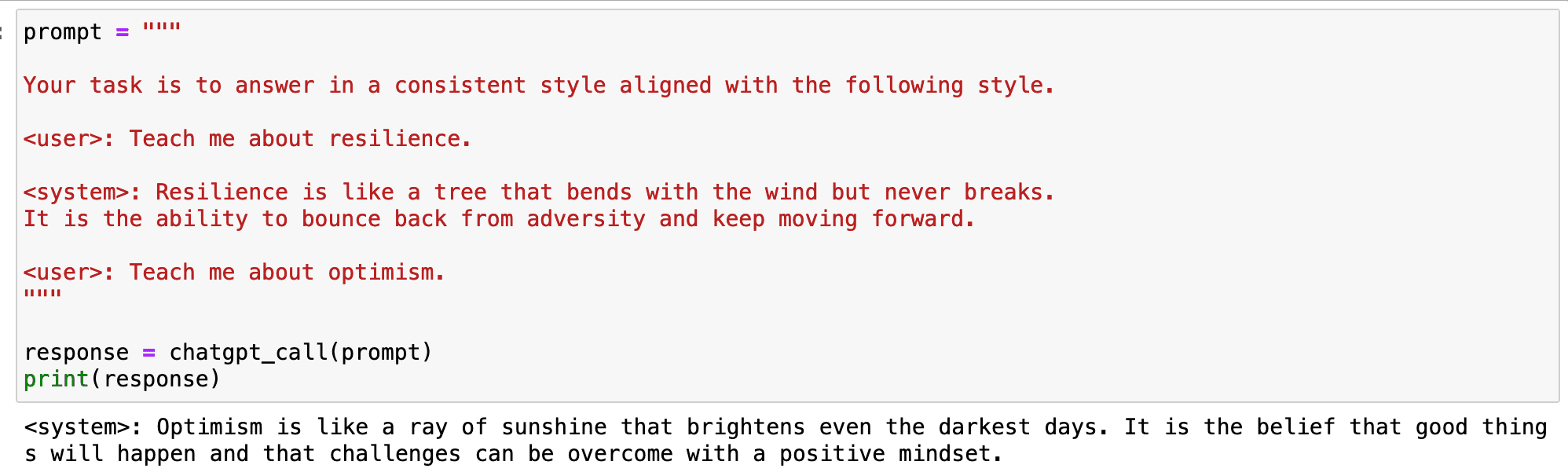

As an alternative, I can present the mannequin what the form of poetic description I desire designing an instance and exhibiting it to the mannequin.

## Code Block

immediate = """

Your activity is to reply in a constant fashion aligned with the next fashion.

: Train me about resilience.

: Resilience is sort of a tree that bends with the wind however by no means breaks.

It's the capability to bounce again from adversity and maintain transferring ahead.

: Train me about optimism.

"""

response = chatgpt_call(immediate)

print(response)

And the output is precisely what I used to be searching for.

Screenshot of my Jupyter Pocket book. Prompting GPT.

So… how can we translate this into our particular case of SQL queries?

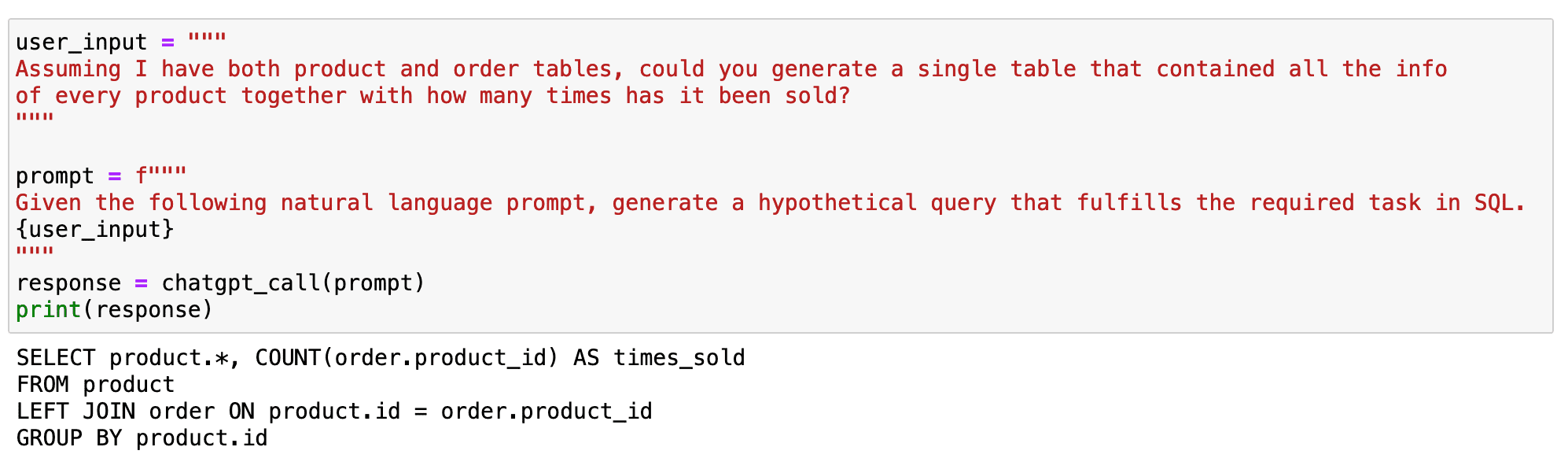

ChatGPT is already able to producing SQL queries out of Pure Language prompts. We don’t even have to point out the mannequin any desk, simply formulate a hypothetical computation and it’ll do it for us.

## Code Block

user_input = """

Assuming I've each product and order tables, may you generate a single desk that contained all the information

of each product along with what number of occasions has it been offered?

"""

immediate = f"""

Given the next pure language immediate, generate a hypothetical question that fulfills the required activity in SQL.

{user_input}

"""

response = chatgpt_call(immediate)

print(response)

Nonetheless, and as you already know, the extra context we give to the mannequin, the higher outputs it should generate.

Screenshot of my Jupyter Pocket book. Prompting GPT.

All through this tutorial I’m splitting the enter prompts into the particular demand of the consumer and the high-level behaviour anticipated from the mannequin. This can be a good apply to enhance our interplay with the LLM and be extra concise in our prompts. You may be taught extra within the following article.

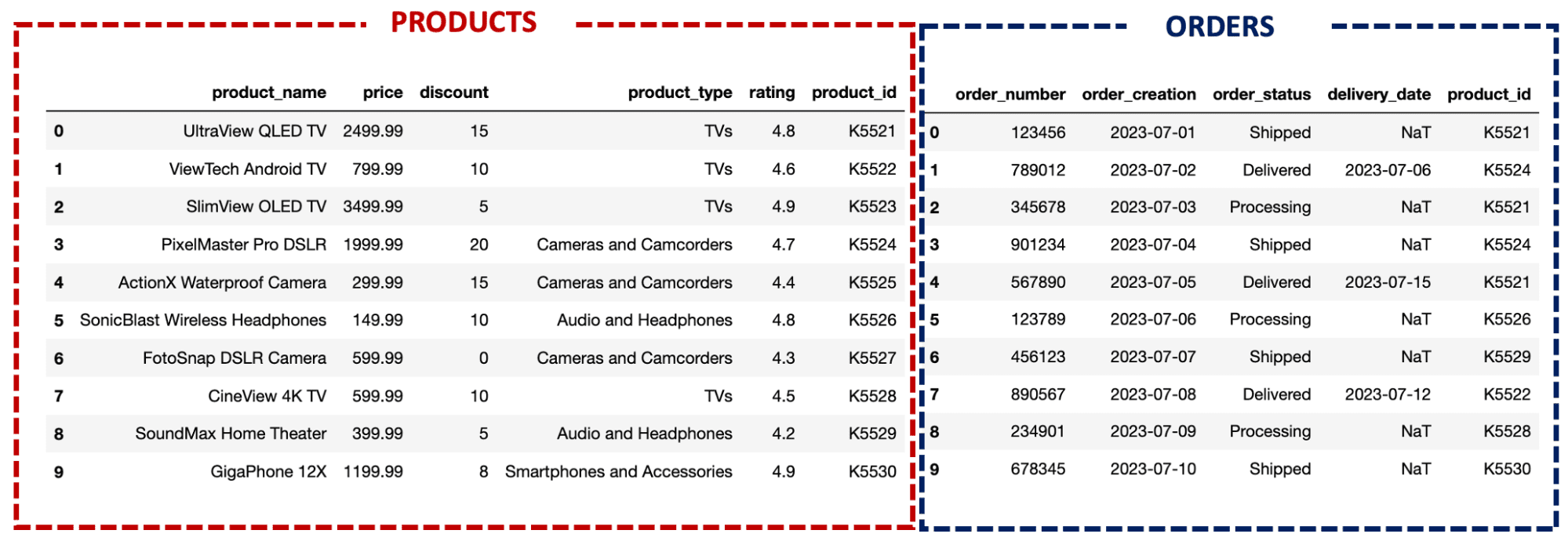

So let’s think about I’m working with two fundamental tables: PRODUCTS and ORDERS

Picture by Writer. Tables for use all through the tutorial.

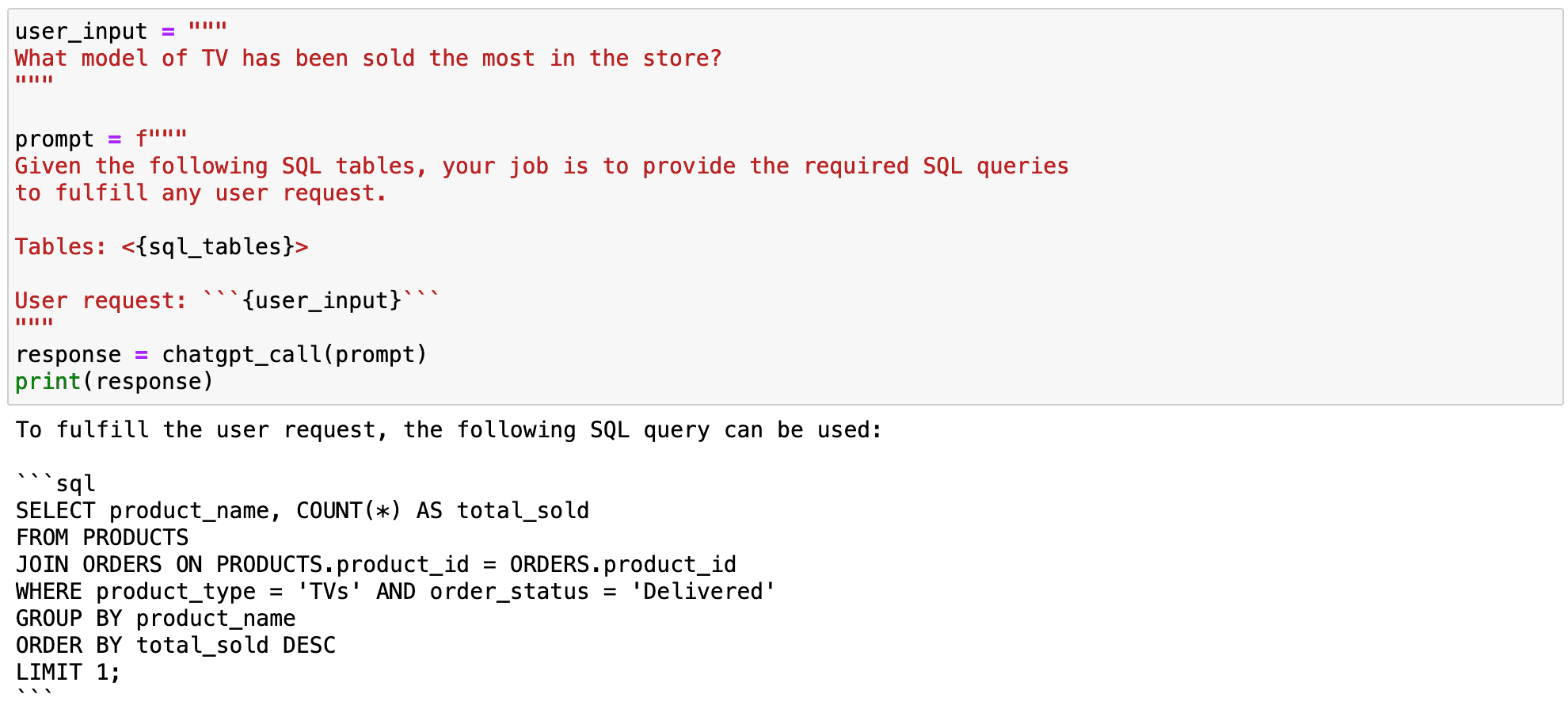

If I ask GPT for a easy question, the mannequin will give an answer straight away, simply because it did at first, however with particular tables for my case.

## Code Block

user_input = """

What mannequin of TV has been offered essentially the most within the retailer?

"""

immediate = f"""

Given the next SQL tables, your job is to offer the required SQL queries to fulfil any consumer request.

Tables: <{sql_tables}>

Consumer request: ```{user_input}```

"""

response = chatgpt_call(immediate)

print(response)

Yow will discover the sql_tables ultimately of this text!

And the output appears to be like like as follows!

Screenshot of my Jupyter Pocket book. Prompting GPT.

Nonetheless, we will observe some issues within the earlier output.

- The computation is partially unsuitable, as it’s only contemplating these TVs which were already delivered. And any issued order-be it delivered or not-should be thought of as a sale.

- The question will not be formatted as I would really like it to be.

So first let’s deal with exhibiting the mannequin tips on how to compute the required question.

#1. Fixing some misunderstandings of the mannequin

On this first case, the mannequin considers solely these merchandise which were delivered as offered, however this isn’t true. We will merely repair this misunderstanding by displaying two totally different examples the place I compute comparable queries.

## Few_shot examples

fewshot_examples = """

-------------- FIRST EXAMPLE

Consumer: What mannequin of TV has been offered essentially the most within the retailer when contemplating all issued orders.

System: You first want to affix each orders and merchandise tables, filter solely these orders that correspond to TVs

and rely the variety of orders which were issued:

SELECT P.product_name AS model_of_tv, COUNT(*) AS total_sold

FROM merchandise AS P

JOIN orders AS O ON P.product_id = O.product_id

WHERE P.product_type="TVs"

GROUP BY P.product_name

ORDER BY total_sold DESC

LIMIT 1;

-------------- SECOND EXAMPLE

Consumer: What is the offered product that has been already delivered essentially the most?

System: You first want to affix each orders and merchandise tables, rely the variety of orders which have

been already delivered and simply maintain the primary one:

SELECT P.product_name AS model_of_tv, COUNT(*) AS total_sold

FROM merchandise AS P

JOIN orders AS O ON P.product_id = O.product_id

WHERE P.order_status="Delivered"

GROUP BY P.product_name

ORDER BY total_sold DESC

LIMIT 1;

"""

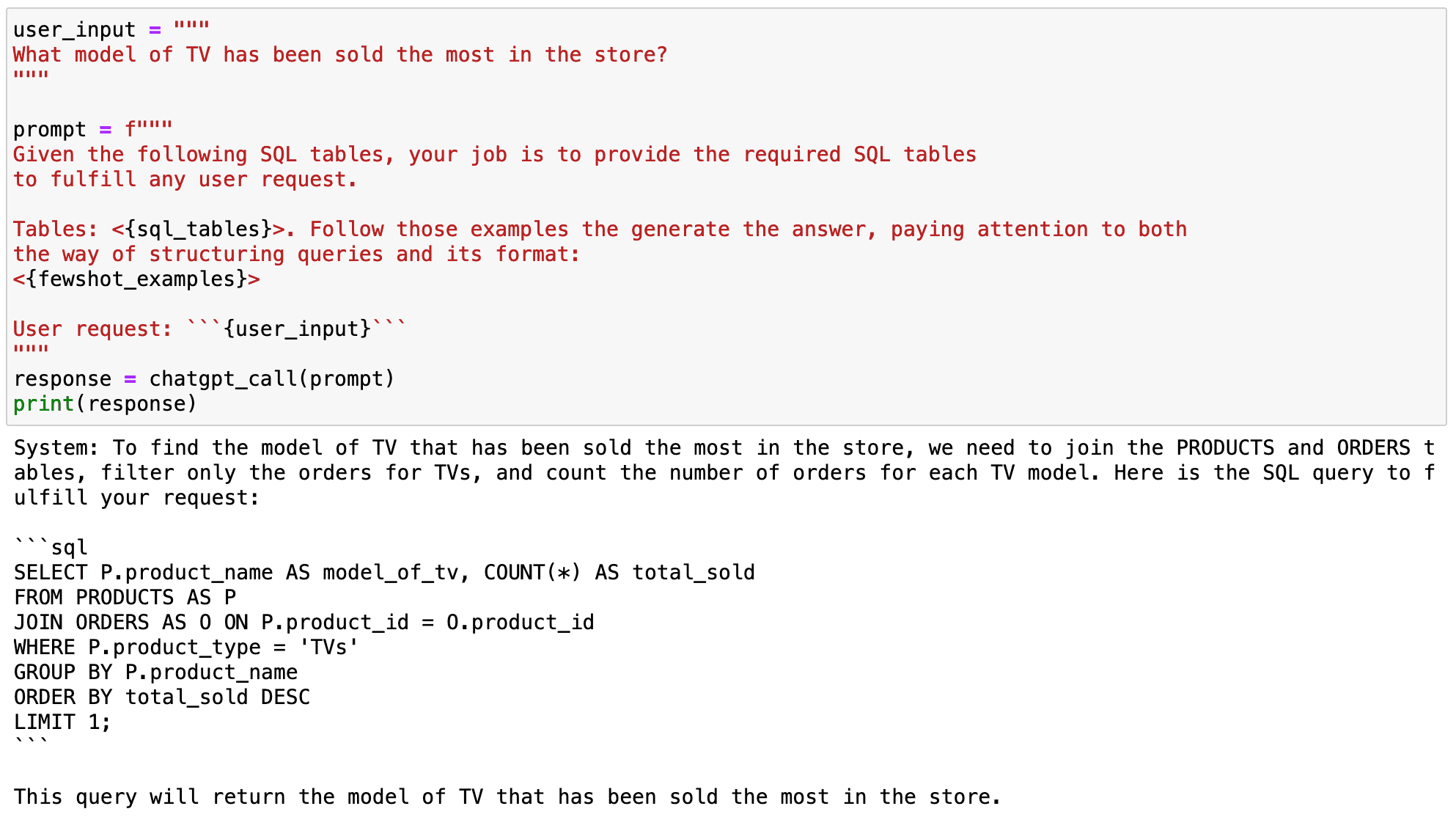

And now if we immediate once more the mannequin and embody the earlier examples on it, one can see that the corresponding question won’t be solely correct-the earlier question was already working-but will even contemplate gross sales as we would like it to!

## Code Block

user_input = """

What mannequin of TV has been offered essentially the most within the retailer?

"""

immediate = f"""

Given the next SQL tables, your job is to offer the required SQL tables

to satisfy any consumer request.

Tables: <{sql_tables}>. Observe these examples the generate the reply, being attentive to each

the best way of structuring queries and its format:

<{fewshot_examples}>

Consumer request: ```{user_input}```

"""

response = chatgpt_call(immediate)

print(response)

With the next output:

Screenshot of my Jupyter Pocket book. Prompting GPT.

Now if we verify the corresponding question…

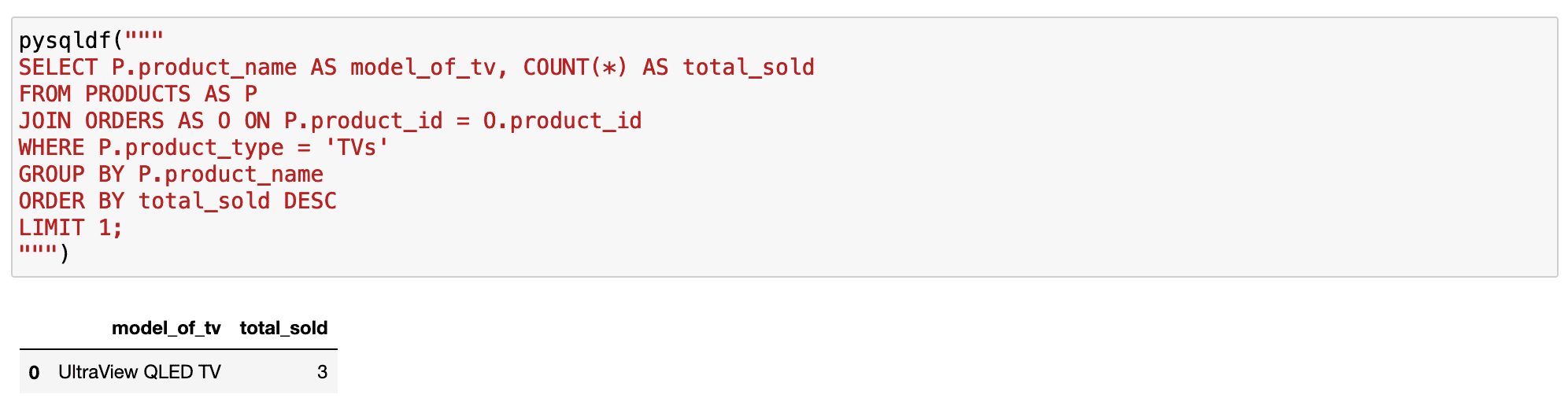

## Code Block

pysqldf("""

SELECT P.product_name AS model_of_tv, COUNT(*) AS total_sold

FROM PRODUCTS AS P

JOIN ORDERS AS O ON P.product_id = O.product_id

WHERE P.product_type="TVs"

GROUP BY P.product_name

ORDER BY total_sold DESC

LIMIT 1;

""")

It really works completely!

Screenshot of my Jupyter Pocket book. Prompting GPT.

#2. Formatting SQL Queries

Few-short prompting will also be a technique to customise the mannequin for our personal objective or fashion.

If we return to the examples earlier than, the queries had no format in any respect. And everyone knows there are some good practices-together with some private oddities-that enable us to higher learn SQL queries.

For this reason we will use few-shot prompting to point out the mannequin the best way we like to question – with our good practices or simply our oddities-and practice the mannequin to offer us our formatted desired SQL queries.

So, now I’ll put together the identical examples as earlier than however following my format preferences.

## Code Block

fewshot_examples = """

---- EXAMPLE 1

Consumer: What mannequin of TV has been offered essentially the most within the retailer when contemplating all issued orders.

System: You first want to affix each orders and merchandise tables, filter solely these orders that correspond to TVs

and rely the variety of orders which were issued:

SELECT

P.product_name AS model_of_tv,

COUNT(*) AS total_sold

FROM merchandise AS P

JOIN orders AS O

ON P.product_id = O.product_id

WHERE P.product_type="TVs"

GROUP BY P.product_name

ORDER BY total_sold DESC

LIMIT 1;

---- EXAMPLE 2

Consumer: What's the newest order that has been issued?

System: You first want to affix each orders and merchandise tables and filter by the most recent order_creation datetime:

SELECT

P.product_name AS model_of_tv

FROM merchandise AS P

JOIN orders AS O

ON P.product_id = O.product_id

WHERE O.order_creation = (SELECT MAX(order_creation) FROM orders)

GROUP BY p.product_name

LIMIT 1;

"""

As soon as the examples have been outlined, we will enter them into the mannequin in order that it could possibly mimic the fashion showcased.

As you may observe within the following code field, after exhibiting GPT what we anticipate from it, it replicates the fashion of the given examples to supply any new output accordingly.

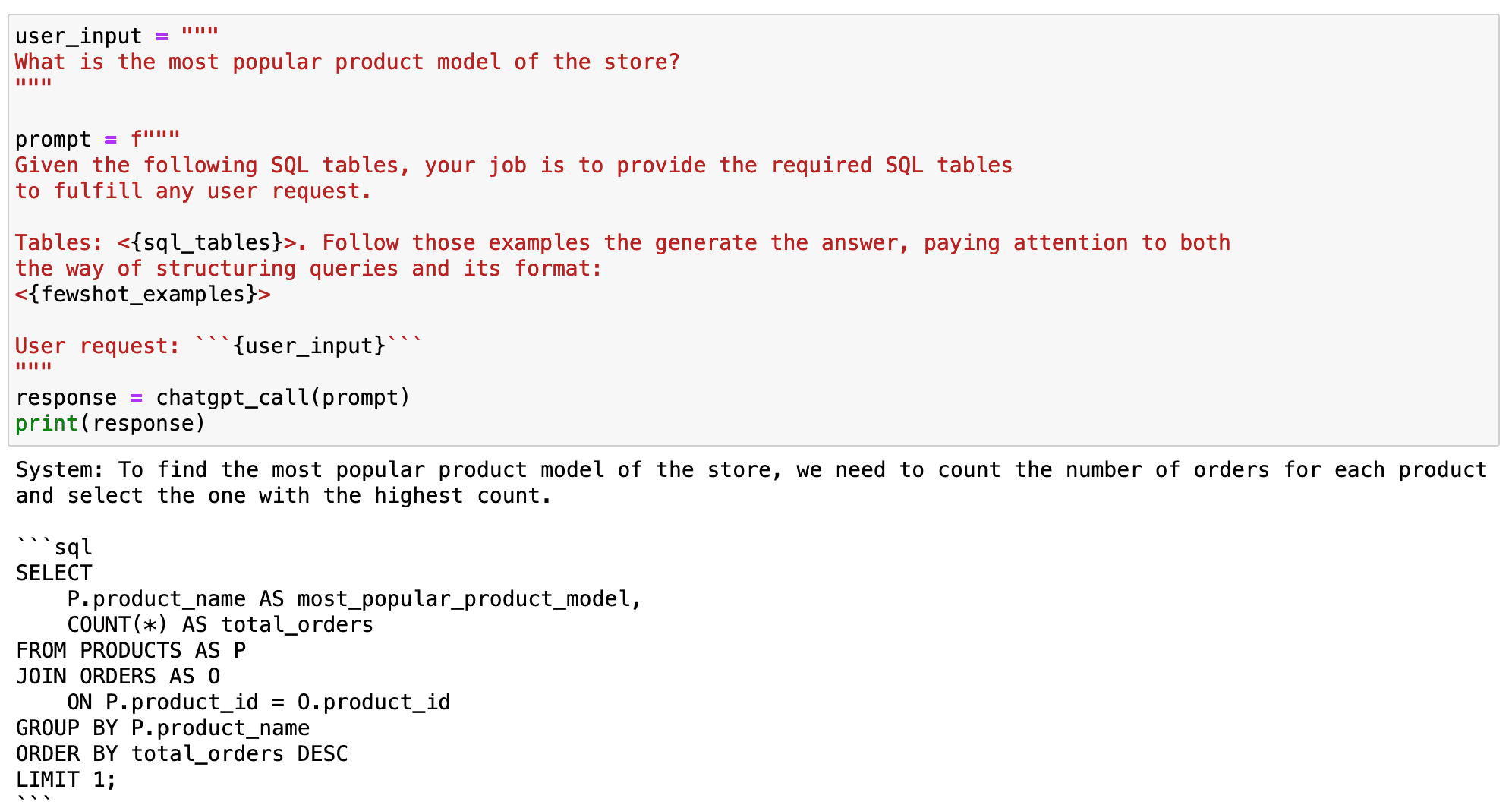

## Code Block

user_input = """

What's the hottest product mannequin of the shop?

"""

immediate = f"""

Given the next SQL tables, your job is to offer the required SQL tables

to satisfy any consumer request.

Tables: <{sql_tables}>. Observe these examples the generate the reply, being attentive to each

the best way of structuring queries and its format:

<{fewshot_examples}>

Consumer request: ```{user_input}```

"""

response = chatgpt_call(immediate)

print(response)

And as you may observe within the following output, it labored!

Screenshot of my Jupyter Pocket book. Prompting GPT.

#3. Coaching the mannequin to compute some particular variable.

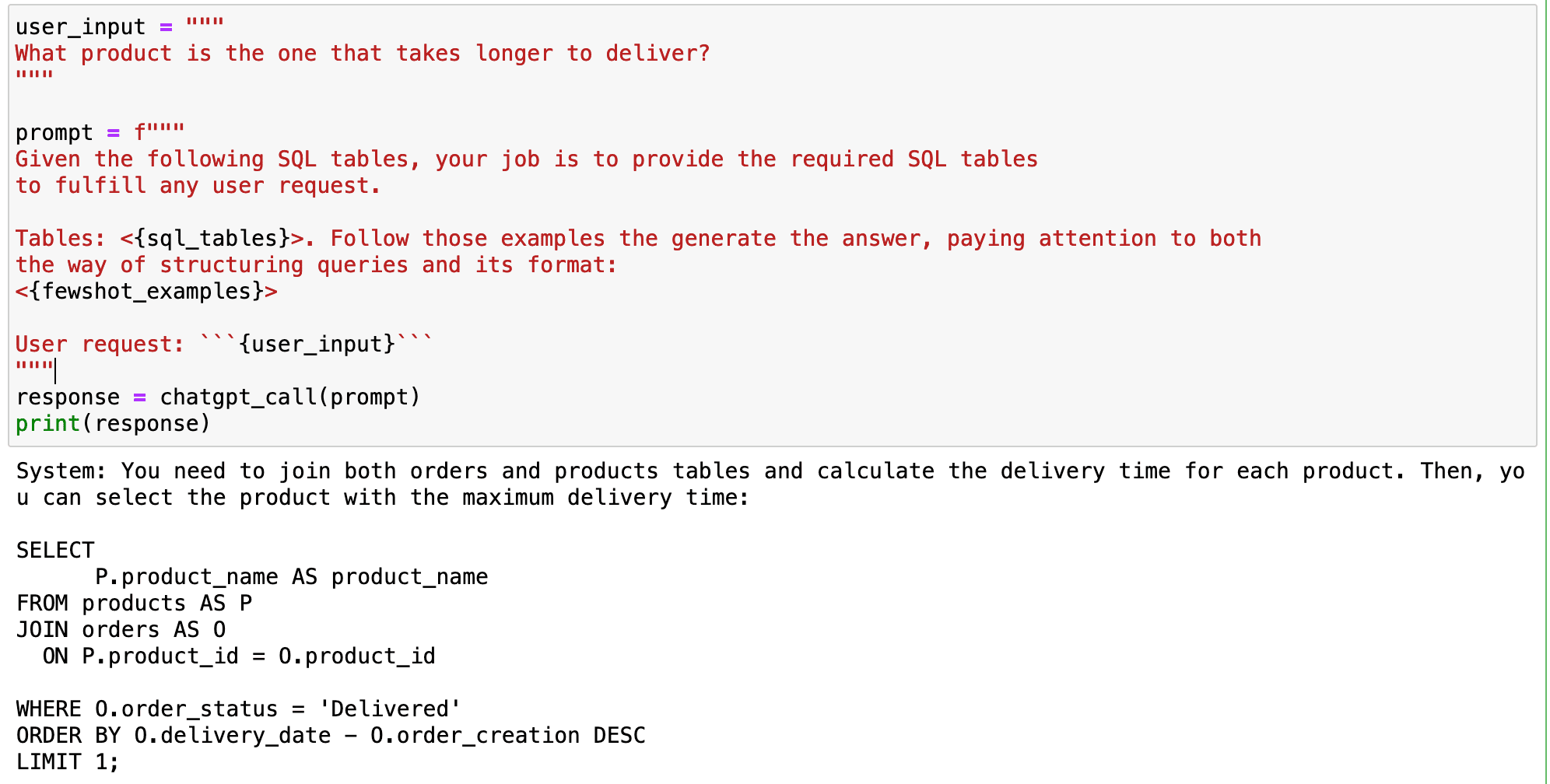

Let’s dive deeper into an illustrative state of affairs. Suppose we purpose to compute which product takes the longest to ship. We pose this query to the mannequin in pure language, anticipating an accurate SQL question.

## Code Block

user_input = """

What product is the one which takes longer to ship?

"""

immediate = f"""

Given the next SQL tables, your job is to offer the required SQL tables

to satisfy any consumer request.

Tables: <{sql_tables}>. Observe these examples the generate the reply, being attentive to each

the best way of structuring queries and its format:

<{fewshot_examples}>

Consumer request: ```{user_input}```

"""

response = chatgpt_call(immediate)

print(response)

But, the reply we obtain is way from appropriate.

Screenshot of my Jupyter Pocket book. Prompting GPT.

What went unsuitable?

The GPT mannequin makes an attempt to calculate the distinction between two datetime SQL variables straight. This computation is incompatible with most SQL variations, creating a difficulty, particularly for SQLite customers.

How will we rectify this drawback?

The answer is true below our noses-we resort again to few-shot prompting.

By demonstrating to the mannequin how we usually compute time variables-in this case, the supply time-we practice it to copy the method at any time when it encounters comparable variable sorts.

For instance, SQLite customers could use the julianday() perform. This perform converts any date into the variety of days which have elapsed for the reason that preliminary epoch within the Julian calendar.

This might assist GPT mannequin to deal with date variations in SQLite database higher.

## Including another instance

fewshot_examples += """

------ EXAMPLE 4

Consumer: Compute the time that it takes to supply each product?

System: You first want to affix each orders and merchandise tables, filter solely these orders which have

been delivered and compute the distinction between each order_creation and delivery_date.:

SELECT

P.product_name AS product_with_longest_delivery,

julianday(O.delivery_date) - julianday(O.order_creation) AS TIME_DIFF

FROM

merchandise AS P

JOIN

orders AS O ON P.product_id = O.product_id

WHERE

O.order_status="Delivered";

"""

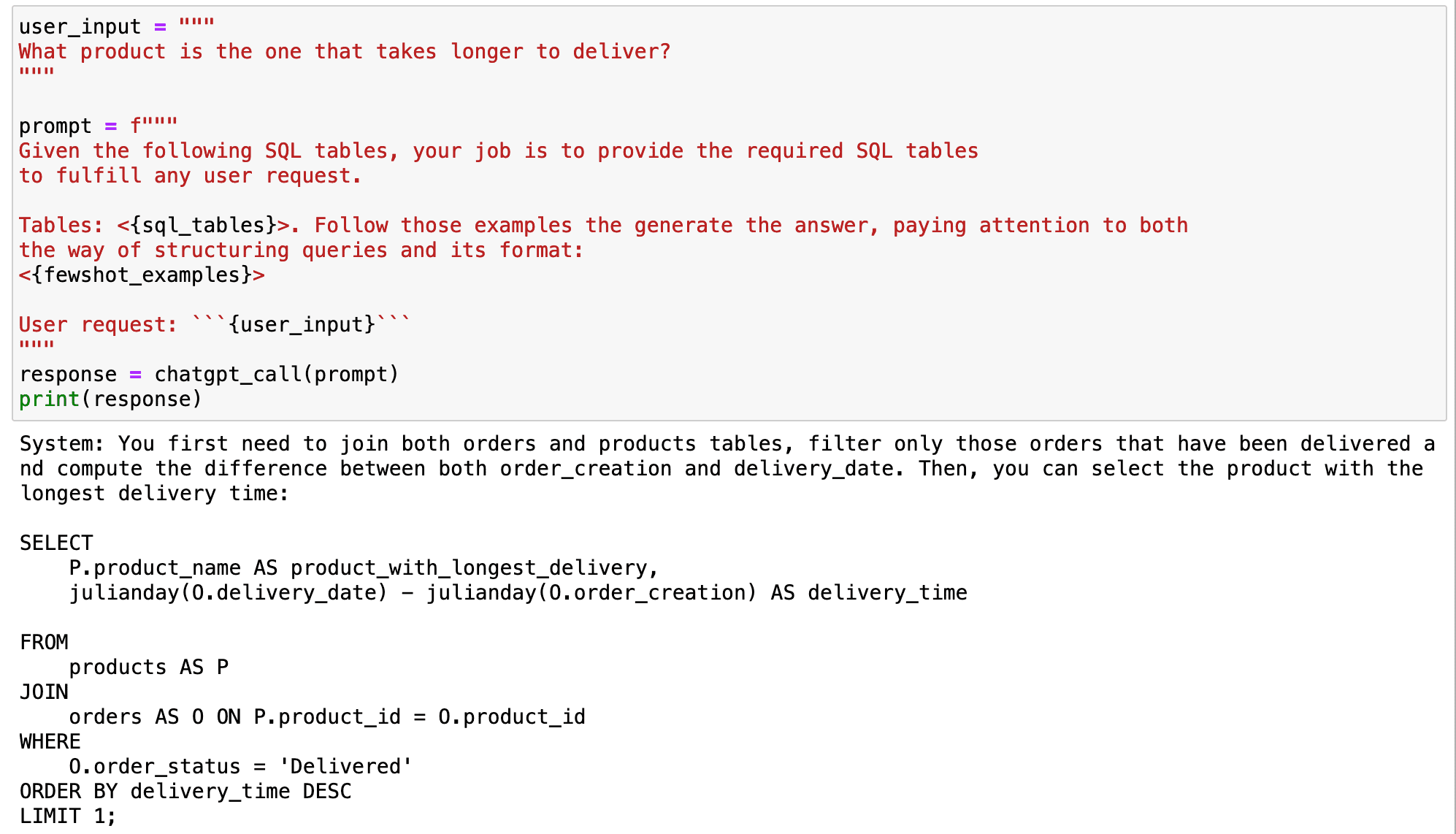

After we use this methodology for example for the mannequin, it learns our most well-liked manner of computing the supply time. This makes the mannequin higher suited to generate practical SQL queries which can be customised to our particular surroundings.

If we use the earlier instance as an enter, the mannequin will replicate the best way we compute the supply time and can present practical queries for our concrete surroundings any more.

## Code Block

user_input = """

What product is the one which takes longer to ship?

"""

immediate = f"""

Given the next SQL tables, your job is to offer the required SQL tables

to satisfy any consumer request.

Tables: <{sql_tables}>. Observe these examples the generate the reply, being attentive to each

the best way of structuring queries and its format:

<{fewshot_examples}>

Consumer request: ```{user_input}```

"""

response = chatgpt_call(immediate)

print(response)

Screenshot of my Jupyter Pocket book. Prompting GPT.

In conclusion, the GPT mannequin is a wonderful software for changing pure language into SQL queries.

Nonetheless, it’s not excellent.

The mannequin could not have the ability to perceive context-aware queries or particular operations with out correct coaching.

By utilizing few-shot prompting, we will information the mannequin to know our question fashion and computing preferences.

This enables us to totally harness the ability of the GPT mannequin in our information science workflows, turning the mannequin into a strong software that adapts to our distinctive wants.

From unformatted queries to completely customised SQL queries, GPT fashions convey the magic of personalization to our fingertips!

You may go verify my code straight in my GitHub.

## SQL TABLES

sql_tables = """

CREATE TABLE PRODUCTS (

product_name VARCHAR(100),

worth DECIMAL(10, 2),

low cost DECIMAL(5, 2),

product_type VARCHAR(50),

ranking DECIMAL(3, 1),

product_id VARCHAR(100)

);

INSERT INTO PRODUCTS (product_name, worth, low cost, product_type, ranking, product_id)

VALUES

('UltraView QLED TV', 2499.99, 15, 'TVs', 4.8, 'K5521'),

('ViewTech Android TV', 799.99, 10, 'TVs', 4.6, 'K5522'),

('SlimView OLED TV', 3499.99, 5, 'TVs', 4.9, 'K5523'),

('PixelMaster Professional DSLR', 1999.99, 20, 'Cameras and Camcorders', 4.7, 'K5524'),

('ActionX Waterproof Digital camera', 299.99, 15, 'Cameras and Camcorders', 4.4, 'K5525'),

('SonicBlast Wi-fi Headphones', 149.99, 10, 'Audio and Headphones', 4.8, 'K5526'),

('FotoSnap DSLR Digital camera', 599.99, 0, 'Cameras and Camcorders', 4.3, 'K5527'),

('CineView 4K TV', 599.99, 10, 'TVs', 4.5, 'K5528'),

('SoundMax Dwelling Theater', 399.99, 5, 'Audio and Headphones', 4.2, 'K5529'),

('GigaPhone 12X', 1199.99, 8, 'Smartphones and Equipment', 4.9, 'K5530');

CREATE TABLE ORDERS (

order_number INT PRIMARY KEY,

order_creation DATE,

order_status VARCHAR(50),

product_id VARCHAR(100)

);

INSERT INTO ORDERS (order_number, order_creation, order_status, delivery_date, product_id)

VALUES

(123456, '2023-07-01', 'Shipped','', 'K5521'),

(789012, '2023-07-02', 'Delivered','2023-07-06', 'K5524'),

(345678, '2023-07-03', 'Processing','', 'K5521'),

(901234, '2023-07-04', 'Shipped','', 'K5524'),

(567890, '2023-07-05', 'Delivered','2023-07-15', 'K5521'),

(123789, '2023-07-06', 'Processing','', 'K5526'),

(456123, '2023-07-07', 'Shipped','', 'K5529'),

(890567, '2023-07-08', 'Delivered','2023-07-12', 'K5522'),

(234901, '2023-07-09', 'Processing','', 'K5528'),

(678345, '2023-07-10', 'Shipped','', 'K5530');

"""

Josep Ferrer is an analytics engineer from Barcelona. He graduated in physics engineering and is at present working within the Knowledge Science discipline utilized to human mobility. He’s a part-time content material creator targeted on information science and know-how. You may contact him on LinkedIn, Twitter or Medium.