Image by Author

As the wave of interest in Large Language Models (LLMs) surges, many developers and organisations are busy building applications harnessing their power. However, when the pre-trained LLMs out of the box don’t perform as expected or hoped, the question arises of how to improve the performance of the LLM application. Eventually we get to the point where we ask ourselves: should we use Retrieval-Augmented Generation (RAG) or model finetuning to improve the results?

Before diving deeper, let’s demystify these two methods:

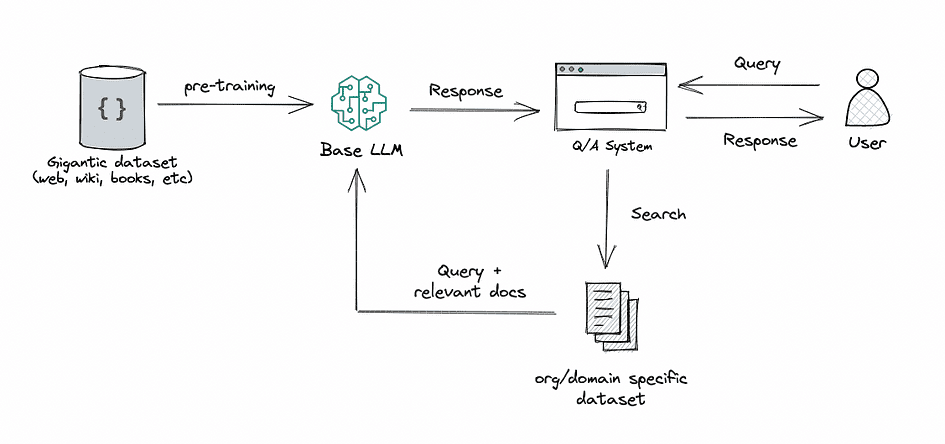

RAG: This approach integrates the power of retrieval (or searching) into LLM text generation. It combines a retriever system, which fetches relevant document snippets from a large corpus, and an LLM, which produces answers using the information from these snippets. In essence, RAG helps the model to “look up” external information to improve its responses.

Image by Author
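To make the retrieve-then-generate idea concrete, here is a minimal sketch in Python. It assumes a toy in-memory corpus and a simple word-overlap score in place of a real retriever (production systems typically use embeddings and a vector index), and it stops at building the prompt; the actual LLM call is left out. All names (`retrieve`, `build_prompt`, the sample corpus) are illustrative and not taken from any library.

```python
import re
from collections import Counter


def tokenize(text):
    # Lowercase and split into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, doc):
    # Toy relevance score: number of overlapping tokens (with multiplicity).
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    return sum(min(q[t], d[t]) for t in q)


def retrieve(query, corpus, k=2):
    # Rank documents by score and keep the top k that share at least one token.
    ranked = sorted(corpus, key=lambda doc: score(query, doc), reverse=True)
    return [doc for doc in ranked[:k] if score(query, doc) > 0]


def build_prompt(query, snippets):
    # The retrieved snippets become the context the LLM is asked to rely on.
    context = "\n".join(f"- {s}" for s in snippets)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


# Hypothetical knowledge base entries, for illustration only.
corpus = [
    "The refund window is 30 days from the delivery date.",
    "Support is available Monday to Friday, 9am to 5pm.",
    "Premium plans include priority support.",
]
question = "How many days is the refund window?"
snippets = retrieve(question, corpus, k=1)
prompt = build_prompt(question, snippets)
```

The resulting `prompt` would then be passed to whichever LLM the application uses; the model itself is unchanged, only its input is enriched.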

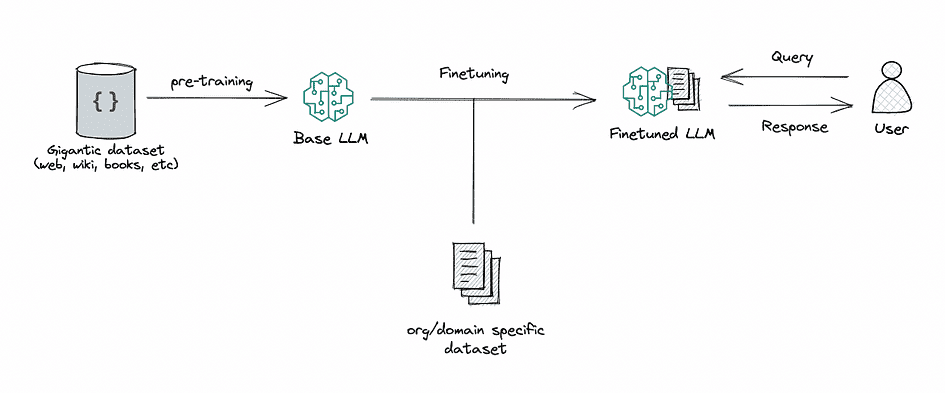

Finetuning: This is the process of taking a pre-trained LLM and further training it on a smaller, specific dataset to adapt it for a particular task or to improve its performance. By finetuning, we are adjusting the model’s weights based on our data, making it more tailored to our application’s unique needs.

Image by Author

Both RAG and finetuning serve as powerful tools in enhancing the performance of LLM-based applications, but they address different aspects of the optimisation process, and this is crucial when it comes to choosing one over the other.

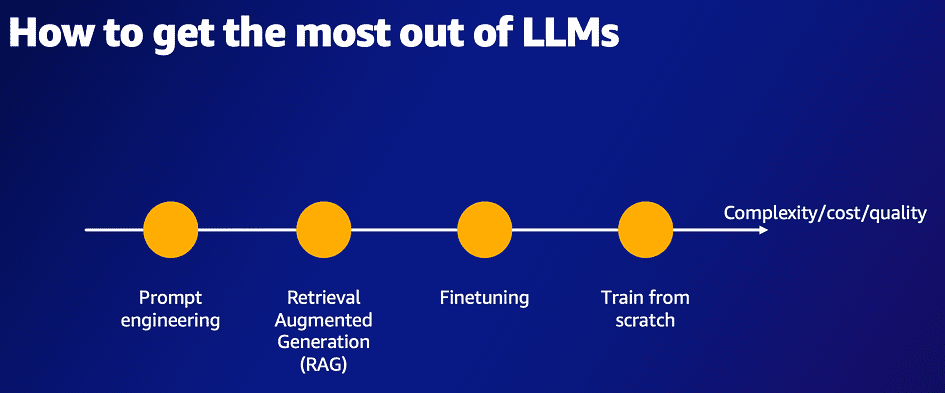

Previously, I would often suggest to organisations that they experiment with RAG before diving into finetuning. This was based on my perception that both approaches achieved similar results but varied in terms of complexity, cost, and quality. I even used to illustrate this point with diagrams such as this one:

Image by Author

In this diagram, various factors like complexity, cost, and quality are represented along a single dimension. The takeaway? RAG is simpler and less expensive, but its quality might not match up. My advice usually was: start with RAG, gauge its performance, and if found lacking, shift to finetuning.

However, my perspective has since evolved. I believe it’s an oversimplification to view RAG and finetuning as two techniques that achieve the same result, where one is simply cheaper and less complex than the other. They are fundamentally distinct (instead of co-linear they are actually orthogonal) and serve different requirements of an LLM application.

To make this clearer, consider a simple real-world analogy: when posed with the question, “Should I use a knife or a spoon to eat my meal?”, the most logical counter-question is: “Well, what are you eating?” I asked friends and family this question and everyone instinctively replied with that counter-question, indicating that they don’t view the knife and spoon as interchangeable, or one as an inferior variant of the other.

In this blog post, we’ll dive deep into the nuances that differentiate RAG and finetuning across various dimensions that are, in my opinion, crucial in determining the optimal technique for a specific task. Moreover, we’ll look at some of the most popular use cases for LLM applications and use the dimensions established in the first part to identify which technique might be best suited for which use case. In the last part of this blog post we’ll identify additional aspects that should be considered when building LLM applications. Each one of those might warrant its own blog post, so we can only touch briefly on them in the scope of this post.

Choosing the right technique for adapting large language models can have a major impact on the success of your NLP applications. Selecting the wrong approach can lead to:

- Poor model performance on your specific task, resulting in inaccurate outputs.

- Increased compute costs for model training and inference if the technique is not optimized for your use case.

- Additional development and iteration time if you need to pivot to a different technique later on.

- Delays in deploying your application and getting it in front of users.

- A lack of model interpretability if you choose an overly complex adaptation approach.

- Difficulty deploying the model to production due to size or computational constraints.

The nuances between RAG and finetuning span model architecture, data requirements, computational complexity, and more. Overlooking these details can derail your project timeline and budget.

This blog post aims to prevent wasted effort by clearly laying out when each technique is advantageous. With these insights, you can hit the ground running with the right adaptation approach from day one. The detailed comparison will equip you to make the optimal technology choice to achieve your business and AI goals. This guide to selecting the right tool for the job will set your project up for success.

So let’s dive in!

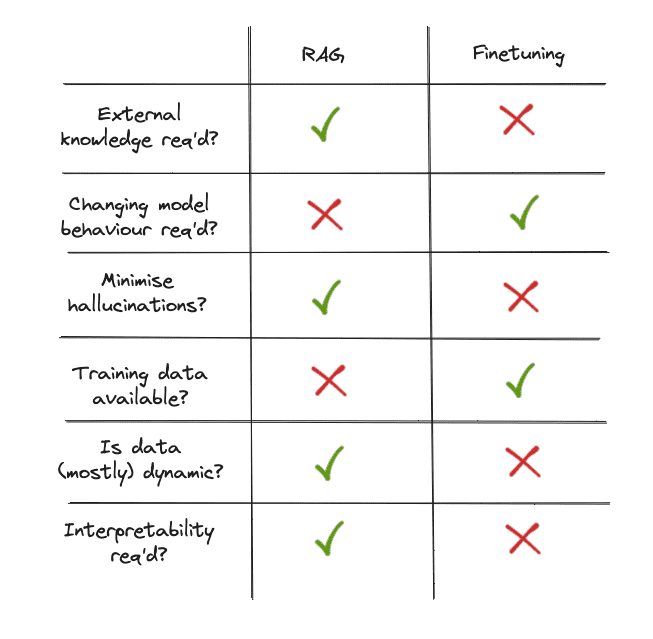

Before we choose between RAG and finetuning, we should assess the requirements of our LLM project along some dimensions and ask ourselves a few questions.

Does our use case require access to external data sources?

When choosing between finetuning an LLM or using RAG, one key consideration is whether the application requires access to external data sources. If the answer is yes, RAG is likely the better option.

RAG systems are, by definition, designed to augment an LLM’s capabilities by retrieving relevant information from knowledge sources before generating a response. This makes the technique well-suited for applications that need to query databases, documents, or other structured/unstructured data repositories. The retriever and generator components can be optimised to leverage these external sources.

In contrast, while it is possible to finetune an LLM to learn some external knowledge, doing so requires a large labelled dataset of question-answer pairs from the target domain. This dataset must be updated as the underlying data changes, making it impractical for frequently changing data sources. The finetuning process also does not explicitly model the retrieval and reasoning steps involved in querying external knowledge.

So in summary, if our application needs to leverage external data sources, using a RAG system will likely be more effective and scalable than attempting to “bake in” the required knowledge through finetuning alone.

Do we need to modify the model’s behaviour, writing style, or domain-specific knowledge?

Another important aspect to consider is how much we need the model to adjust its behaviour, its writing style, or tailor its responses for domain-specific applications.

Finetuning excels in its ability to adapt an LLM’s behaviour to specific nuances, tones, or terminologies. If we want the model to sound more like a medical professional, write in a poetic style, or use the jargon of a specific industry, finetuning on domain-specific data allows us to achieve these customisations. This ability to influence the model’s behaviour is essential for applications where alignment with a particular style or domain expertise is vital.

RAG, while powerful in incorporating external knowledge, primarily focuses on information retrieval and doesn’t inherently adapt its linguistic style or domain-specificity based on the retrieved information. It will pull relevant content from the external data sources but might not exhibit the tailored nuances or domain expertise that a finetuned model can offer.

So, if our application demands specialised writing styles or deep alignment with domain-specific vernacular and conventions, finetuning presents a more direct route to achieving that alignment. It offers the depth and customisation necessary to genuinely resonate with a specific audience or expertise area, ensuring the generated content feels authentic and well-informed.

Quick recap

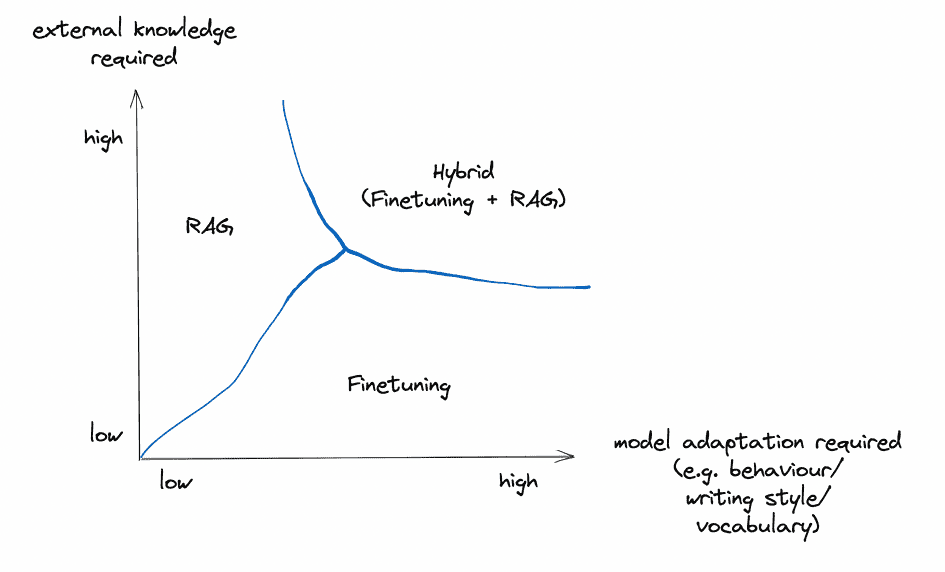

These two aspects are by far the most important ones to consider when deciding which method to use to boost LLM application performance. Interestingly, they are, in my opinion, orthogonal and can be used independently (and also be combined).

Image by Author

However, before diving into the use cases, there are a few more key aspects we should consider before choosing a method:

How crucial is it to suppress hallucinations?

One downside of LLMs is their tendency to hallucinate: making up facts or details that have no basis in reality. This can be highly problematic in applications where accuracy and truthfulness are critical.

Finetuning can help reduce hallucinations to some extent by grounding the model in a specific domain’s training data. However, the model may still fabricate responses when faced with unfamiliar inputs. Retraining on new data is required to continuously minimise false fabrications.

In contrast, RAG systems are inherently less prone to hallucination because they ground each response in retrieved evidence. The retriever identifies relevant facts from the external knowledge source before the generator constructs the answer. This retrieval step acts as a fact-checking mechanism, reducing the model’s ability to confabulate. The generator is constrained to synthesise a response supported by the retrieved context.

So in applications where suppressing falsehoods and imaginative fabrications is vital, RAG systems provide in-built mechanisms to minimise hallucinations. The retrieval of supporting evidence prior to response generation gives RAG an advantage in ensuring factually accurate and truthful outputs.
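One way to picture this grounding is a generation step that only runs when retrieval has produced sufficiently relevant evidence, and abstains otherwise. The sketch below is an illustration under assumptions, not a prescribed design: the relevance threshold `min_score`, the fallback message, and the `fake_llm` stand-in for a real model call are all hypothetical.

```python
FALLBACK = "I could not find this in the knowledge base."


def grounded_answer(question, scored_snippets, generate, min_score=0.5):
    """Answer from retrieved evidence only; abstain if nothing is relevant enough.

    scored_snippets: list of (text, relevance_score) pairs from a retriever.
    generate: any callable that maps a prompt string to a model response.
    """
    evidence = [text for text, score in scored_snippets if score >= min_score]
    if not evidence:
        # No supporting evidence: refuse rather than let the model guess.
        return FALLBACK
    context = "\n".join(f"- {text}" for text in evidence)
    prompt = (
        "Answer using only the context. If the context is insufficient, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
    return generate(prompt)


def fake_llm(prompt):
    # Stand-in for a real LLM call: echoes the first evidence line of the prompt.
    return "ANSWER based on: " + prompt.splitlines()[2]


reply = grounded_answer(
    "What is the refund policy?",
    [("Refunds are accepted within 30 days.", 0.9)],
    fake_llm,
)
```

Prompt-level grounding like this reduces fabrication but does not eliminate it; the model can still misread or ignore the supplied context.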

How much labelled training data is available?

When deciding between RAG and finetuning, a crucial factor to consider is the amount of domain- or task-specific, labelled training data at our disposal.

Finetuning an LLM to adapt to specific tasks or domains is heavily dependent on the quality and quantity of the labelled data available. A rich dataset can help the model deeply understand the nuances, intricacies, and unique patterns of a particular domain, allowing it to generate more accurate and contextually relevant responses. However, if we’re working with a limited dataset, the improvements from finetuning might be marginal. In some cases, a scant dataset might even lead to overfitting, where the model performs well on the training data but struggles with unseen or real-world inputs.

By contrast, RAG systems are independent of training data because they leverage external knowledge sources to retrieve relevant information. Even if we don’t have an extensive labelled dataset, a RAG system can still perform competently by accessing and incorporating insights from its external data sources. The combination of retrieval and generation ensures that the system remains informed, even when domain-specific training data is sparse.

In essence, if we have a wealth of labelled data that captures the domain’s intricacies, finetuning can offer a more tailored and refined model behaviour. But in scenarios where such data is limited, a RAG system provides a robust alternative, ensuring the application remains data-informed and contextually aware through its retrieval capabilities.
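A common way to notice the overfitting mentioned above is to hold back part of the labelled data and compare training loss with validation loss. The following sketch assumes a plain list of examples and a simple gap heuristic; the 20% split, the `tolerance` value, and the function names are illustrative choices rather than recommendations from any particular finetuning toolkit.

```python
import random


def split_examples(examples, validation_fraction=0.2, seed=0):
    # Shuffle a copy deterministically, then hold out a validation slice.
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def looks_overfit(train_loss, validation_loss, tolerance=0.1):
    # Heuristic: a validation loss well above the training loss suggests the
    # model has memorised the training set instead of generalising.
    return validation_loss - train_loss > tolerance


# Hypothetical labelled examples (here just integer placeholders).
train_set, validation_set = split_examples(list(range(10)))
```

With only a handful of examples the validation set becomes too small to be informative, which is one practical reason why scarce labelled data makes finetuning hard to evaluate.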

How static/dynamic is the data?

Another fundamental aspect to consider when choosing between RAG and finetuning is the dynamic nature of our data. How frequently is the data updated, and how imperative is it for the model to stay current?

Finetuning an LLM on a specific dataset means the model’s knowledge becomes a static snapshot of that data at the time of training. If the data undergoes frequent updates, changes, or expansions, this can quickly render the model outdated. To keep the LLM current in such dynamic environments, we’d have to retrain it frequently, a process that can be both time-consuming and resource-intensive. Additionally, each iteration requires careful monitoring to ensure that the updated model still performs well across different scenarios and hasn’t developed new biases or gaps in understanding.

In contrast, RAG systems inherently possess an advantage in environments with dynamic data. Their retrieval mechanism constantly queries external sources, ensuring that the information they pull in for generating responses is up-to-date. As the external knowledge bases or databases update, the RAG system seamlessly integrates these changes, maintaining its relevance without the need for frequent model retraining.

In summary, if we’re grappling with a rapidly evolving data landscape, RAG offers an agility that’s hard to match with traditional finetuning. By always staying connected to the latest data, RAG ensures that the responses generated are in tune with the current state of information, making it an ideal choice for dynamic data scenarios.
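The difference can be illustrated with a toy knowledge base: updating a document changes what is retrieved on the very next query, with no training step involved. This is a sketch under simplifying assumptions (an in-memory dictionary and word-overlap matching); the `KnowledgeBase` class and the pricing example are hypothetical.

```python
class KnowledgeBase:
    """Tiny in-memory document store with word-overlap search."""

    def __init__(self):
        self.docs = {}

    def upsert(self, doc_id, text):
        # Insert a new document or overwrite an existing one.
        self.docs[doc_id] = text

    def search(self, query):
        # Return the document sharing the most words with the query, if any.
        terms = set(query.lower().split())
        best_text, best_overlap = None, 0
        for text in self.docs.values():
            overlap = len(terms & set(text.lower().split()))
            if overlap > best_overlap:
                best_text, best_overlap = text, overlap
        return best_text


kb = KnowledgeBase()
kb.upsert("pricing", "the basic plan costs 10 dollars per month")
before = kb.search("what does the basic plan cost")

# The price changes: we update the document, not the model.
kb.upsert("pricing", "the basic plan costs 12 dollars per month")
after = kb.search("what does the basic plan cost")
```

A finetuned model that had learned the old price would keep repeating it until it was retrained on corrected data.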

How transparent/interpretable does our LLM app need to be?

The last aspect to consider is the degree to which we need insights into the model’s decision-making process.

Finetuning an LLM, while incredibly powerful, operates like a black box, making the reasoning behind its responses more opaque. As the model internalises the information from the dataset, it becomes challenging to discern the exact source or reasoning behind each response. This can make it difficult for developers or users to trust the model’s outputs, especially in critical applications where understanding the “why” behind an answer is vital.

RAG systems, on the other hand, offer a level of transparency that’s not typically found in solely finetuned models. Given the two-step nature of RAG (retrieval and then generation), users can peek into the process. The retrieval component allows for the inspection of which external documents or data points are selected as relevant. This provides a tangible trail of evidence or reference that can be evaluated to understand the foundation upon which a response is built. The ability to trace back a model’s answer to specific data sources can be invaluable in applications that demand a high degree of accountability or when there’s a need to validate the accuracy of the generated content.

In essence, if transparency and the ability to interpret the underpinnings of a model’s responses are priorities, RAG offers a clear advantage. By breaking down the response generation into distinct stages and allowing insight into its data retrieval, RAG fosters greater trust and understanding in its outputs.
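In practice, this traceability often amounts to returning the identifiers of the retrieved documents together with the generated text. The sketch below shows one possible shape for that; the `TracedAnswer` type, the document id `policy-7`, and the lambda standing in for the LLM are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TracedAnswer:
    text: str
    sources: list = field(default_factory=list)  # ids of the documents used


def answer_with_sources(question, retrieved, generate):
    """Generate an answer and keep a record of which documents informed it.

    retrieved: list of (doc_id, text) pairs returned by a retriever.
    generate: any callable that maps a prompt string to a model response.
    """
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieved)
    prompt = f"Context:\n{context}\n\nQuestion: {question}"
    return TracedAnswer(
        text=generate(prompt),
        sources=[doc_id for doc_id, _ in retrieved],
    )


prompts_seen = []


def stub_llm(prompt):
    # Stand-in for a real model call; records the prompt for inspection.
    prompts_seen.append(prompt)
    return "The line manager."


result = answer_with_sources(
    "Who approves expenses?",
    [("policy-7", "Expenses are approved by the line manager.")],
    stub_llm,
)
```

A reviewer can then open the listed sources and check whether the answer is actually supported by them.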

Summary

Choosing between RAG and finetuning becomes more intuitive when considering these dimensions. If we lean towards accessing external knowledge and value transparency, RAG is our go-to. On the other hand, if we’re working with stable labelled data and aim to adapt the model more closely to specific needs, finetuning is the better choice.

Image by Author
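As a rough aid, the six questions can be written down as a small checklist function. This is a deliberately simplified sketch and not the author’s scoring method: the field names, the rule that external data or any two other retrieval-related signals point to RAG, and the rule that finetuning needs both an adaptation requirement and labelled data are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    needs_external_data: bool
    needs_adaptation: bool         # behaviour, writing style, domain vocabulary
    hallucinations_critical: bool
    has_labelled_data: bool
    data_changes_often: bool
    needs_traceability: bool


def recommend(req):
    # Count the secondary signals that favour retrieval.
    secondary = sum(
        [req.hallucinations_critical, req.data_changes_often, req.needs_traceability]
    )
    use_rag = req.needs_external_data or secondary >= 2
    # Finetuning only makes sense if we want adaptation and have data to learn from.
    use_finetuning = req.needs_adaptation and req.has_labelled_data
    if use_rag and use_finetuning:
        return "hybrid"
    if use_rag:
        return "RAG"
    if use_finetuning:
        return "finetuning"
    return "no clear preference"


# Hypothetical project: style adaptation on a stable, labelled dataset.
style_project = Requirements(False, True, False, True, False, False)
# Hypothetical project: answering questions over frequently updated documents.
docs_project = Requirements(True, False, True, False, True, True)
```

A real decision will weigh these dimensions against each other rather than apply fixed rules, but writing the questions down explicitly helps keep the two techniques from being treated as interchangeable.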

In the following section, we’ll see how we can assess popular LLM use cases based on these criteria.

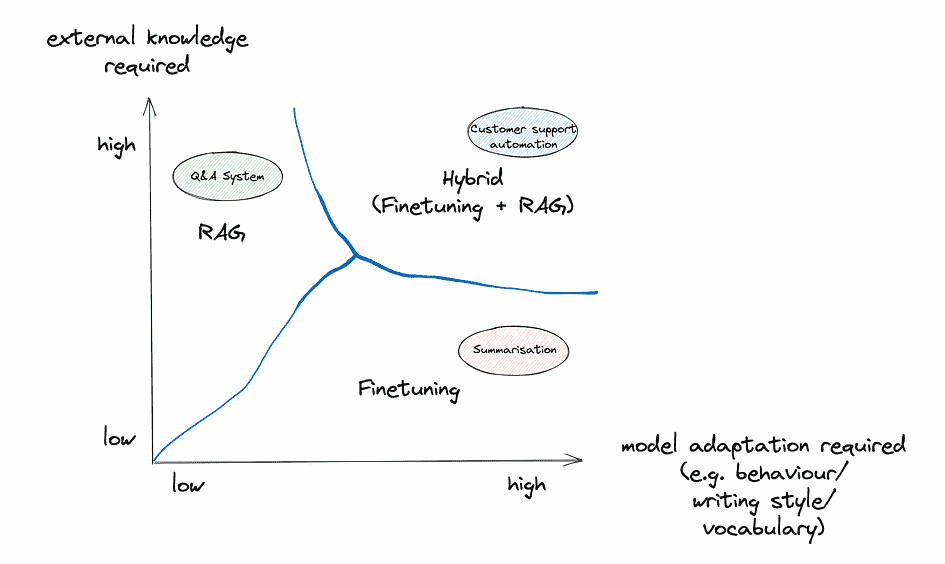

Let’s look at some popular use cases and how the above framework can be used to choose the right method:

Summarisation (in a specialised domain and/or a specific style)

1. External knowledge required? For the task of summarising in the style of previous summaries, the primary data source would be the previous summaries themselves. If these summaries are contained within a static dataset, there’s little need for continuous external data retrieval. However, if there’s a dynamic database of summaries that frequently updates and the goal is to continually align the style with the newest entries, RAG might be useful here.

2. Model adaptation required? The core of this use case revolves around adapting to a specialised domain and/or a specific writing style. Finetuning is particularly adept at capturing stylistic nuances, tonal variations, and specific domain vocabularies, making it an optimal choice for this dimension.

3. Crucial to minimise hallucinations? Hallucinations are problematic in most LLM applications, including summarisation. However, in this use case, the text to be summarised is typically provided as context. This makes hallucinations less of a concern compared to other use cases. The source text constrains the model, reducing imaginative fabrications. So while factual accuracy is always desirable, suppressing hallucinations is a lower priority for summarisation given the contextual grounding.

4. Training data available? If there’s a substantial collection of previous summaries that are labelled or structured in a way that the model can learn from them, finetuning becomes a very attractive option. On the other hand, if the dataset is limited, and we’re leaning on external databases for stylistic alignment, RAG could play a role, although its primary strength isn’t style adaptation.

5. How dynamic is the data? If the database of previous summaries is static or updates infrequently, the finetuned model’s knowledge will likely remain relevant for a longer time. However, if the summaries update frequently and there’s a need for the model to align with the newest stylistic changes continuously, RAG might have an edge due to its dynamic data retrieval capabilities.

6. Transparency/Interpretability required? The primary goal here is stylistic alignment, so the “why” behind a particular summarisation style might be less critical than in other use cases. That said, if there’s a need to trace back and understand which previous summaries influenced a particular output, RAG offers a bit more transparency. Still, this might be a secondary concern for this use case.

Recommendation: For this use case finetuning appears to be the more fitting choice. The primary objective is stylistic alignment, a dimension where finetuning shines. Assuming there’s a decent amount of previous summaries available for training, finetuning an LLM would allow for deep adaptation to the desired style, capturing the nuances and intricacies of the domain. However, if the summaries database is extremely dynamic and there’s value in tracing back influences, a hybrid approach or leaning towards RAG could be explored.

Question/answering system on organisational knowledge (i.e. external data)

1. External knowledge required? A question/answering system relying on organisational knowledge bases inherently requires access to external data, in this case, the org’s internal databases and document stores. The system’s effectiveness hinges on its ability to tap into and retrieve relevant information from these sources to answer queries. Given this, RAG stands out as the more suitable choice for this dimension, as it’s designed to augment LLM capabilities by retrieving pertinent data from knowledge sources.

2. Model adaptation required? Depending on the organisation and its field, there might be a requirement for the model to align with specific terminologies, tones, or conventions. While RAG focuses primarily on information retrieval, finetuning can help the LLM adjust its responses to the company’s internal vernacular or the nuances of its domain. Thus, for this dimension, depending on the specific requirements, finetuning might play a role.

3. Crucial to minimise hallucinations? Hallucinations are a major concern in this use case, due to the knowledge cutoff of LLMs. If the model is unable to answer a question based on the data it has been trained on, it will almost certainly revert to (partially or entirely) making up a plausible but incorrect answer.

4. Training data available? If the organisation has a structured and labelled dataset of previously answered questions, this can bolster the finetuning approach. However, not all internal databases are labelled or structured for training purposes. In scenarios where the data isn’t neatly labelled or where the primary focus is on retrieving accurate and relevant answers, RAG’s ability to tap into external data sources without needing a vast labelled dataset makes it a compelling option.

5. How dynamic is the data? Internal databases and document stores in organisations can be highly dynamic, with frequent updates, changes, or additions. If this dynamism is characteristic of the organisation’s knowledge base, RAG offers a distinct advantage. It continually queries the external sources, ensuring its answers are based on the latest available data. Finetuning would require regular retraining to keep up with such changes, which might be impractical.

6. Transparency/Interpretability required? For internal applications, especially in sectors like finance, healthcare, or legal, understanding the reasoning or source behind an answer can be paramount. Since RAG provides a two-step process of retrieval and then generation, it inherently offers a clearer insight into which documents or data points influenced a particular answer. This traceability can be invaluable for internal stakeholders who might need to validate or further investigate the sources of certain answers.

Recommendation: For this use case a RAG system seems to be the more fitting choice. Given the need for dynamic access to the organisation’s evolving internal databases and the potential requirement for transparency in the answering process, RAG offers capabilities that align well with these needs. However, if there’s a significant emphasis on tailoring the model’s linguistic style or adapting to domain-specific nuances, incorporating elements of finetuning could be considered.

Customer Support Automation (i.e. automated chatbots or help desk solutions providing instant responses to customer inquiries)

1. External knowledge required? Customer support often necessitates access to external data, especially when dealing with product details, account-specific information, or troubleshooting databases. While many queries can be addressed with general knowledge, some might require pulling data from company databases or product FAQs. Here, RAG’s capability to retrieve pertinent information from external sources would be beneficial. However, it’s worth noting that a lot of customer support interactions are also based on predefined scripts or knowledge, which can be effectively addressed with a finetuned model.

2. Model adaptation required? Customer interactions demand a certain tone, politeness, and clarity, and might also require company-specific terminologies. Finetuning is especially useful for ensuring the LLM adapts to the company’s voice, branding, and specific terminologies, ensuring a consistent and brand-aligned customer experience.

3. Crucial to minimise hallucinations? For customer support chatbots, avoiding false information is essential to maintain user trust. Finetuning alone leaves models prone to hallucinations when faced with unfamiliar queries. In contrast, RAG systems suppress fabrications by grounding responses in retrieved evidence. This reliance on sourced facts allows RAG chatbots to minimise harmful falsehoods and provide users with reliable information where accuracy is vital.

4. Training data available? If a company has a history of customer interactions, this data can be invaluable for finetuning. A rich dataset of previous customer queries and their resolutions can be used to train the model to handle similar interactions in the future. If such data is limited, RAG can provide a fallback by retrieving answers from external sources like product documentation.

5. How dynamic is the data? Customer support might need to address queries about new products, updated policies, or changing service terms. In scenarios where the product line-up, software versions, or company policies are frequently updated, RAG’s ability to dynamically pull from the latest documents or databases is advantageous. On the other hand, for more static knowledge domains, finetuning can suffice.

6. Transparency/Interpretability required? While transparency is essential in some sectors, in customer support, the primary focus is on accurate, fast, and courteous responses. However, for internal monitoring, quality assurance, or addressing customer disputes, having traceability regarding the source of an answer could be beneficial. In such cases, RAG’s retrieval mechanism offers an added layer of transparency.

Recommendation: For customer support automation a hybrid approach might be optimal. Finetuning can ensure that the chatbot aligns with the company’s branding, tone, and general knowledge, handling the majority of typical customer queries. RAG can then serve as a complementary system, stepping in for more dynamic or specific inquiries, ensuring the chatbot can pull from the latest company documents or databases and thereby minimising hallucinations. By integrating both approaches, companies can provide a comprehensive, timely, and brand-consistent customer support experience.

Image by Author

As mentioned above, there are other factors that should be considered when deciding between RAG and finetuning (or both). We can’t possibly dive deep into them, as all of them are multi-faceted and don’t have clear answers like some of the aspects above (for example, if there is no training data, finetuning is simply not possible). But that doesn’t mean we should disregard them:

Scalability

As an organisation grows and its needs evolve, how scalable are the methods in question? RAG systems, given their modular nature, might offer more straightforward scalability, especially if the knowledge base grows. On the other hand, frequently finetuning a model to cater to expanding datasets can be computationally demanding.

Latency and Real-time Requirements

If the application requires real-time or near-real-time responses, consider the latency introduced by each method. RAG systems, which involve retrieving data before generating a response, might introduce more latency compared to a finetuned LLM that generates responses based on internalised knowledge.

Maintenance and Support

Think about the long term. Which system aligns better with the organisation’s ability to provide consistent maintenance and support? RAG might require upkeep of the database and the retrieval mechanism, while finetuning would necessitate consistent retraining efforts, especially if the data or requirements change.

Robustness and Reliability

How robust is each method to different types of inputs? While RAG systems can pull from external knowledge sources and might handle a broad array of questions, a well-finetuned model might offer more consistency in certain domains.

Ethical and Privacy Concerns

Storing and retrieving from external databases might raise privacy concerns, especially if the data is sensitive. On the other hand, a finetuned model, while not querying live databases, might still produce outputs based on its training data, which could have its own ethical implications.

Integration with Existing Systems

Organisations might already have certain infrastructure in place. The compatibility of RAG or finetuning with existing systems (be it databases, cloud infrastructures, or user interfaces) can influence the choice.

User Experience

Consider the end-users and their needs. If they require detailed, reference-backed answers, RAG could be preferable. If they value speed and domain-specific expertise, a finetuned model might be more suitable.

Cost

Finetuning can get expensive, especially for really large models. But in the past few months costs have gone down significantly thanks to parameter-efficient techniques like QLoRA. Setting up RAG can be a large initial investment (covering the integration, database access, maybe even licensing fees), and then there’s also the regular maintenance of that external knowledge base to think about.

Complexity

Finetuning can get complex quickly. While many providers now offer one-click finetuning where we just need to provide the training data, keeping track of model versions and ensuring that the new models still perform well across the board is challenging. RAG, on the other hand, can also get complex quickly. There’s the setup of multiple components, making sure the database stays fresh, and ensuring the pieces (like retrieval and generation) fit together just right.

As we’ve explored, choosing between RAG and finetuning requires a nuanced evaluation of an LLM application’s unique needs and priorities. There is no one-size-fits-all solution; success lies in aligning the optimisation method with the specific requirements of the task. By assessing key criteria (the need for external data, adapting model behaviour, training data availability, data dynamics, result transparency, and more), organisations can make an informed decision on the best path forward. In certain cases, a hybrid approach leveraging both RAG and finetuning may be optimal.

The key is avoiding assumptions that one method is universally superior. Like any tool, their suitability depends on the job at hand. Misalignment of approach and objectives can hinder progress, while the right method accelerates it. As an organisation evaluates options for boosting LLM applications, it must resist oversimplification: rather than viewing RAG and finetuning as interchangeable, it should choose the tool that empowers the model to fulfil its capabilities in line with the needs of the use case. The possibilities these methods unlock are astounding, but possibility alone isn’t enough: execution is everything. The tools are here, so now let’s put them to work.

Heiko Hotz is the Founder of NLP London, an AI consultancy helping organizations implement natural language processing and conversational AI. With over 15 years of experience in the tech industry, Heiko is an expert in leveraging AI and machine learning to solve complex business challenges.

Original. Reposted with permission.
