Introduction

Welcome to our guide to LlamaIndex!

In simple terms, LlamaIndex is a handy tool that acts as a bridge between your custom data and large language models (LLMs) like GPT-4, which are powerful models capable of understanding human-like text. Whether you have data stored in APIs, databases, or PDFs, LlamaIndex makes it easy to bring that data into conversation with these smart machines. This bridge-building makes your data more accessible and usable, paving the way for smarter applications and workflows.

Understanding LlamaIndex

Initially known as GPT Index, LlamaIndex has evolved into an indispensable ally for developers. It's like a multi-tool that helps in various stages of working with data and large language models:

- Firstly, it helps in 'ingesting' data, which means getting the data from its original source into the system.

- Secondly, it helps in 'structuring' that data, which means organizing it in a way that the language models can easily understand.

- Thirdly, it aids in 'retrieval', which means finding and fetching the right pieces of data when needed.

- Lastly, it simplifies 'integration', making it easier to meld your data with various application frameworks.

When we dive a little deeper into the mechanics of LlamaIndex, we find three main heroes doing the heavy lifting.

- The 'data connectors' are the diligent gatherers, fetching your data from wherever it resides, be it APIs, PDFs, or databases.

- The 'data indexes' are the organized librarians, arranging your data neatly so that it's easily accessible.

- And the 'engines' are the translators (LLMs), making it possible to interact with your data using natural language.

In the next sections, we'll explore how to set up LlamaIndex and start using it to supercharge your applications with the power of large language models.

What’s what in LlamaIndex

LlamaIndex is your go-to platform for creating robust applications powered by Large Language Models (LLMs) over your customized data. Be it a sophisticated Q&A system, an interactive chatbot, or intelligent agents, LlamaIndex lays down the foundation for your ventures into the realm of Retrieval Augmented Generation (RAG).

The RAG mechanism amplifies the prowess of LLMs with the essence of your custom data. Components of a RAG workflow:

- Knowledge Base (Input): The knowledge base is like a library filled with useful information such as FAQs, manuals, and other relevant documents. When a question is asked, this is where the system looks to find the answer.

- Trigger/Query (Input): This is the spark that gets things going. Typically, it's a question or request from a customer that signals the system to spring into action.

- Task/Action (Output): After understanding the trigger or query, the system then performs a certain task to address it. For instance, if it's a question, the system will work on providing an answer, or if it's a request for a specific action, it will carry out that action accordingly.

Based on the context of our blog, we will need to implement the following two stages using LlamaIndex to provide the two inputs to our RAG mechanism:

- Indexing Stage: Preparing a knowledge base.

- Querying Stage: Harnessing the knowledge base and the LLM to respond to your queries by generating the final output / performing the final task.

Let's take a closer look at these stages under the magnifying lens of LlamaIndex.

The Indexing Stage: Crafting the Knowledge Base

LlamaIndex equips you with a suite of tools to shape your knowledge base:

- Data Connectors: These entities, also known as Readers, ingest data from diverse sources and formats into a unified Document representation.

- Documents / Nodes: A Document is your container for data, whether it springs from a PDF, an API, or a database. A Node, on the other hand, is a snippet of a Document, enriched with metadata and relationships, paving the way for precise retrieval operations.

- Data Indexes: Post ingestion, LlamaIndex assists in arranging the data into a retrievable format. This process involves parsing, embedding, and metadata inference, and ultimately results in the creation of the knowledge base.

The Querying Stage: Engaging with Your Knowledge

In this phase, we fetch relevant context from the knowledge base as per your query, and combine it with the LLM's insights to generate a response. This not only provides the LLM with up-to-date, relevant knowledge but also helps reduce hallucination. The core challenge here revolves around retrieval, orchestration, and reasoning across multiple knowledge bases.
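To make the two stages concrete, here is a minimal, library-free sketch. It is not how LlamaIndex works internally: the word-overlap scoring, the helper names (`build_index`, `retrieve`, `build_prompt`) and the sample sentences are all assumptions made up for illustration. It only shows the shape of the flow: index the knowledge base once, then fetch context for a query and hand both to an LLM as a prompt.

```python
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and strip basic punctuation from each word."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return [w for w in words if w]


def build_index(docs: list[str]) -> list[tuple[str, Counter]]:
    """Indexing stage: store each document next to its bag of words."""
    return [(doc, Counter(tokenize(doc))) for doc in docs]


def retrieve(index: list[tuple[str, Counter]], query: str, top_k: int = 1) -> list[str]:
    """Querying stage, step 1: rank documents by words shared with the query."""
    query_words = Counter(tokenize(query))
    ranked = sorted(
        index,
        key=lambda item: sum((item[1] & query_words).values()),
        reverse=True,
    )
    return [doc for doc, _ in ranked[:top_k]]


def build_prompt(query: str, context: list[str]) -> str:
    """Querying stage, step 2: combine retrieved context and the query."""
    joined = "\n".join(context)
    return f"Context:\n{joined}\n\nQuestion: {query}\nAnswer:"


# Made-up knowledge base, purely for illustration.
docs = [
    "Refunds are processed within five business days.",
    "Our office is open Monday to Friday.",
]
index = build_index(docs)
question = "How long do refunds take?"
context = retrieve(index, question)
prompt = build_prompt(question, context)  # this string would be sent to the LLM
```

A real pipeline would swap the word-overlap score for embeddings and send `prompt` to an actual model, but the two-stage structure stays the same.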

LlamaIndex offers modular constructs to help you use it for Q&A, chatbots, or agent-driven applications.

These are the primary elements:

- Query Engines: These are your end-to-end conduits for querying your data, taking a natural language query and returning a response along with the referenced context.

- Chat Engines: They elevate the interaction to a conversational level, allowing back-and-forths with your data.

- Agents: Agents are your automated decision-makers, interacting with the world through a toolkit, and manoeuvring through tasks with a dynamic action plan rather than a fixed logic.

These are a few common building blocks present in all of the primary elements discussed above:

- Retrievers: They dictate the technique of fetching relevant context from the knowledge base against a query. For example, Dense Retrieval against a vector index is a prevalent approach.

- Node Postprocessors: They refine the set of nodes through transformation, filtering, or re-ranking.

- Response Synthesizers: They channel the LLM to generate responses, blending the user query with retrieved text chunks.

As we venture into LlamaIndex now, we'll encounter and learn more about the above elements.


Installation and Setup

Before exploring the exciting features, let's first install LlamaIndex on your system. If you're familiar with Python, this will be easy. Use this command to install:

pip install llama-index

Then follow either of the two approaches below:

- By default, LlamaIndex uses OpenAI's gpt-3.5-turbo for text generation and text-embedding-ada-002 for retrieval and embeddings. You need an OpenAI API key to use these. Get your API key for free by signing up on OpenAI's website. Then set an environment variable named OPENAI_API_KEY in your Python file.

import os

os.environ["OPENAI_API_KEY"] = "your_api_key"

- If you'd rather not use OpenAI, the system will switch to using LlamaCPP and llama2-chat-13B for text generation and BAAI/bge-small-en for retrieval and embeddings. These all work offline. To set up LlamaCPP, follow its setup guide. This will need about 11.5GB of memory across your CPU and GPU. Then, to use local embeddings, install this:

pip install sentence-transformers

Creating LlamaIndex Documents

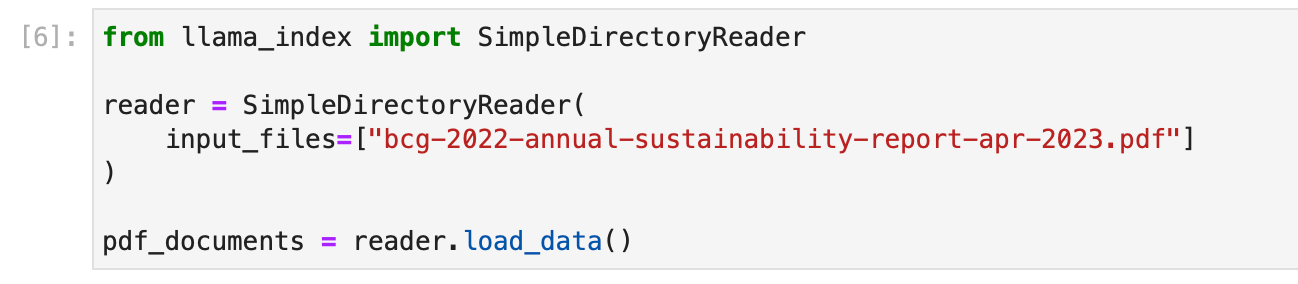

Data connectors, also called Readers, are essential components in LlamaIndex that facilitate the ingestion of data from various sources and formats, converting them into a simplified Document representation consisting of text and basic metadata.

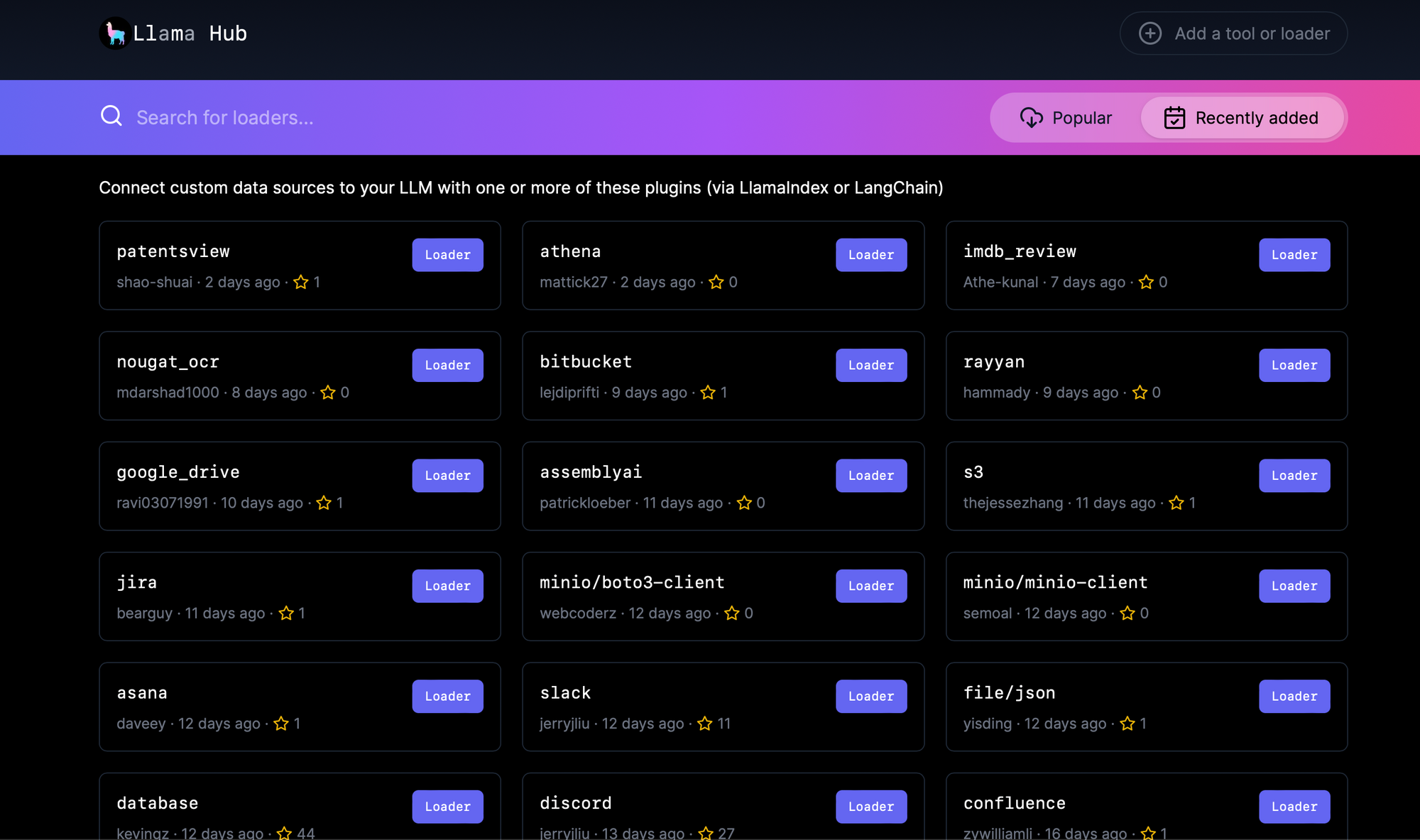

LlamaHub is an open-source repository hosting data connectors which can be seamlessly integrated into any LlamaIndex application. All the connectors present here can be used as follows:

from llama_index import download_loader

# Download the reader class from LlamaHub, then load documents with it
GoogleDocsReader = download_loader('GoogleDocsReader')
loader = GoogleDocsReader()
documents = loader.load_data(document_ids=[...])

See the full list of data connectors here:

Llama Hub

A hub of data loaders for GPT Index and LangChain

The selection of data connectors here is pretty exhaustive, some of which include:

- SimpleDirectoryReader: Supports a broad range of file types (.pdf, .jpg, .png, .docx, etc.) from a local file directory.

- NotionPageReader: Ingests data from Notion.

- SlackReader: Imports data from Slack.

- ApifyActor: Capable of web crawling, scraping, text extraction, and file downloading.

How to find the right data connector?

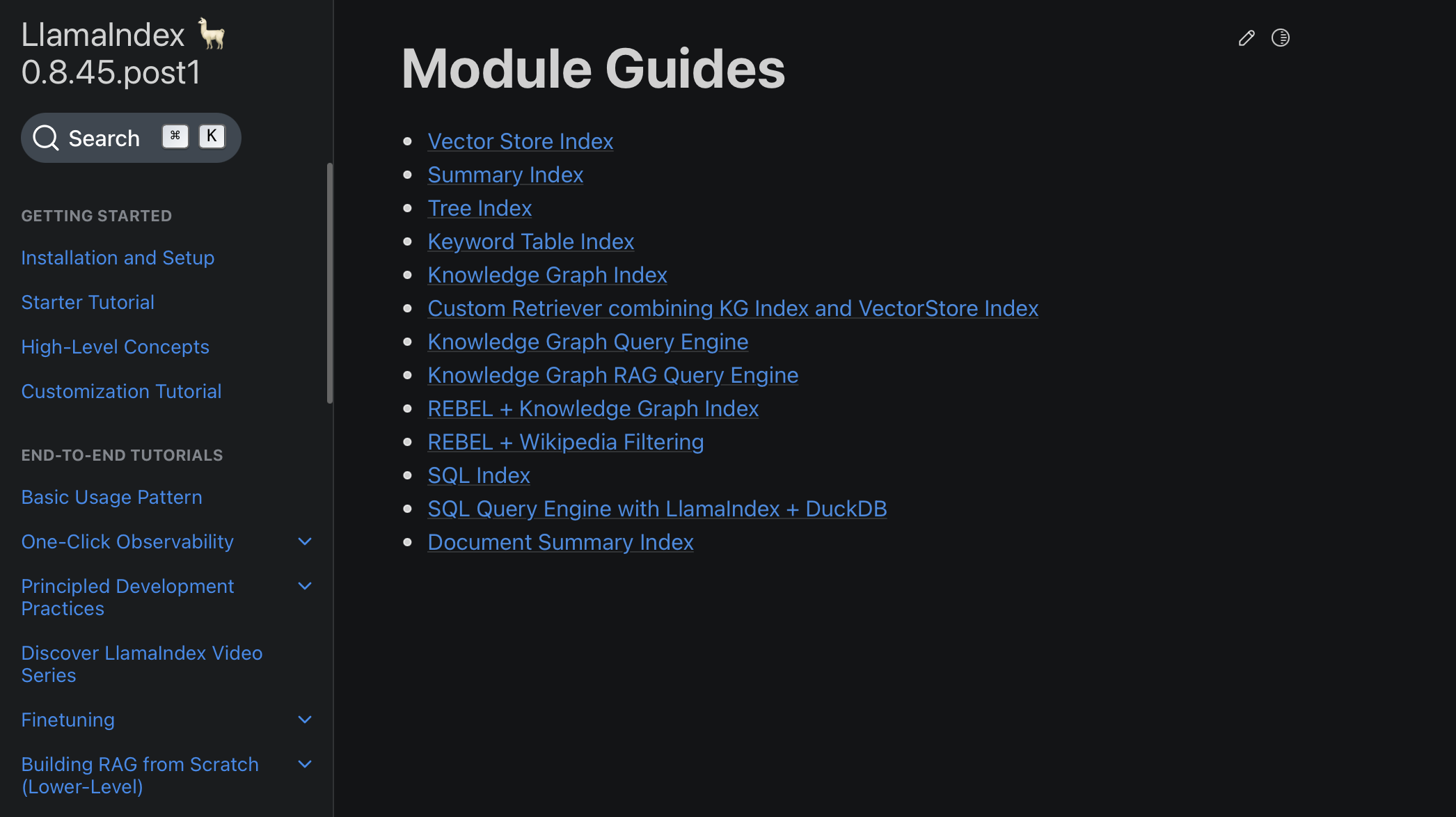

- First, check whether a relevant data connector is listed in the LlamaIndex documentation here:

Module Guides – LlamaIndex 🦙 0.8.45.post1

- If not, then identify the relevant data connector on LlamaHub.

For example, let us try this on a couple of data sources.

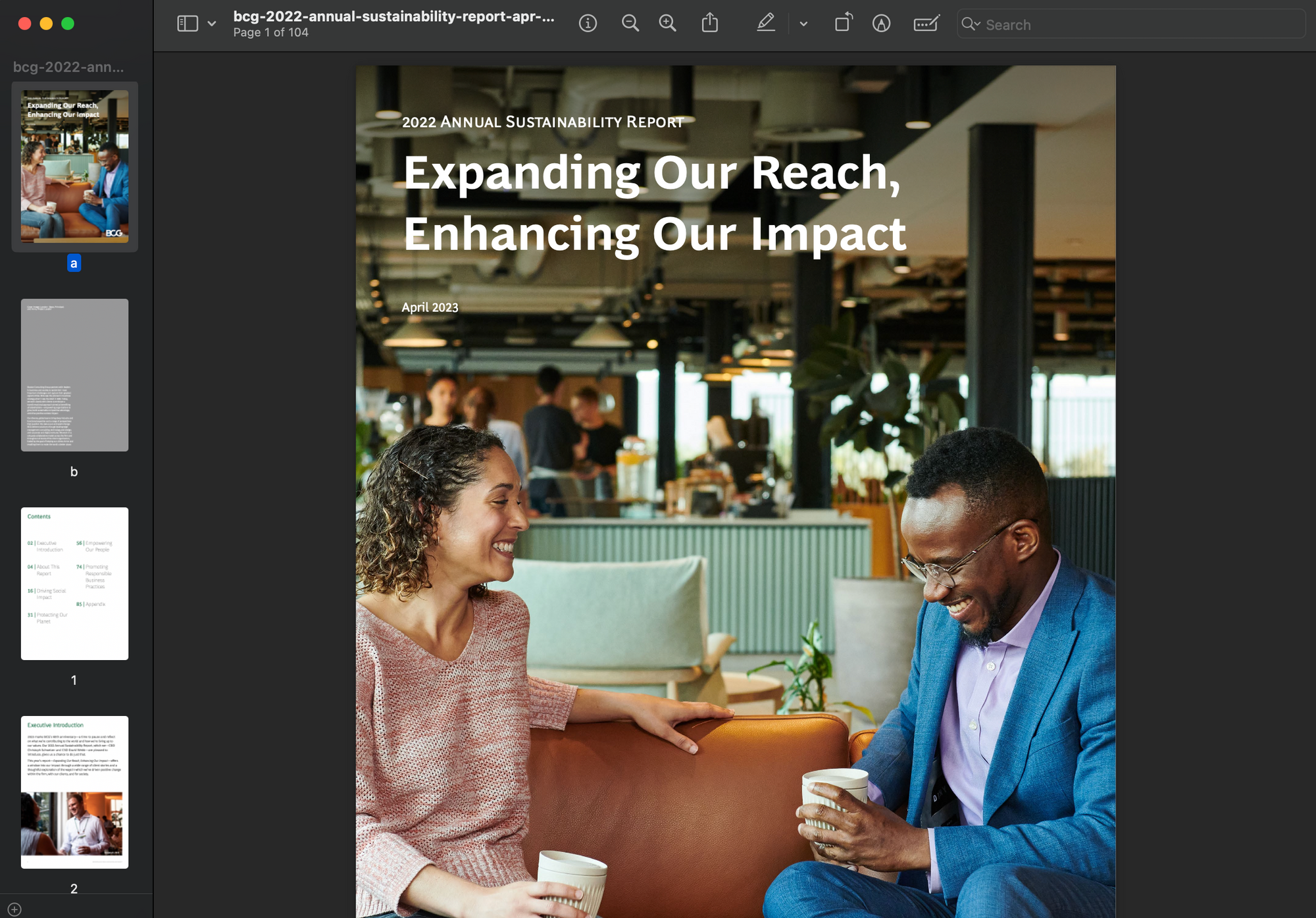

- PDF File: We use the SimpleDirectoryReader data connector for this. The given example below loads a BCG Annual Sustainability Report.

- Wikipedia Page: We search LlamaHub and find a relevant connector for this.

The given example below loads the Wikipedia pages about a few countries from around the globe. Basically, for each element of the list used as a search query, the top page that appears in the search results is ingested.

Creating LlamaIndex Nodes

In LlamaIndex, once the data has been ingested and represented as Documents, there's an option to further process these Documents into Nodes. Nodes are more granular data entities that represent "chunks" of source Documents, which could be text chunks, images, or other types of data. They also carry metadata and relationship information with other nodes, which can be instrumental in building a more structured and relational index.

Basic

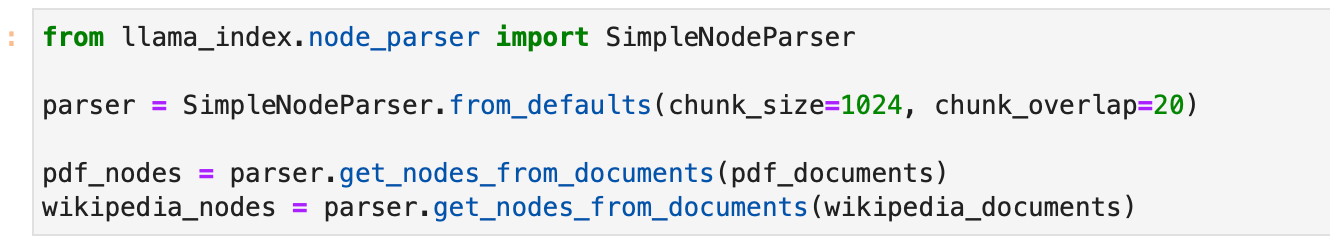

To parse Documents into Nodes, LlamaIndex provides NodeParser classes. These classes help in automatically transforming the content of Documents into Nodes, adhering to a specific structure that can be utilized further in index construction and querying.

Here's how you can use a SimpleNodeParser to parse your Documents into Nodes:

from llama_index.node_parser import SimpleNodeParser

# Assuming documents have already been loaded

# Initialize the parser
parser = SimpleNodeParser.from_defaults(chunk_size=1024, chunk_overlap=20)

# Parse documents into nodes
nodes = parser.get_nodes_from_documents(documents)

In this snippet, SimpleNodeParser.from_defaults() initializes a parser with the given chunk size and overlap (all other settings keep their defaults), and get_nodes_from_documents(documents) is used to parse the loaded Documents into Nodes.
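If chunk_size and chunk_overlap feel abstract, the following library-free sketch may help. It is an illustration under simplifying assumptions, not the parser's actual algorithm: whitespace-separated words stand in for tokenizer tokens, sentence boundaries are ignored, and the function name `chunk_tokens` is hypothetical.

```python
def chunk_tokens(tokens: list[str], chunk_size: int, chunk_overlap: int) -> list[list[str]]:
    """Split tokens into windows of chunk_size that share chunk_overlap tokens."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new chunk advances
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # the last chunk already reaches the end of the input
    return chunks


words = "one two three four five six seven eight nine ten".split()
chunks = chunk_tokens(words, chunk_size=4, chunk_overlap=1)
for chunk in chunks:
    print(chunk)
```

Each chunk starts with the last word of the previous one, which is the point of the overlap: a sentence cut at a chunk boundary still appears with some of its context in the next chunk.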

Advanced

Various customization options include:

- text_splitter (default: TokenTextSplitter)

- include_metadata (default: True)

- include_prev_next_rel (default: True)

- metadata_extractor (default: None)

Text Splitter Customization

Customize the text splitter, using either SentenceSplitter, TokenTextSplitter, or CodeSplitter from llama_index.text_splitter. Examples:

SentenceSplitter:

import tiktoken
from llama_index.node_parser import SimpleNodeParser
from llama_index.text_splitter import SentenceSplitter

text_splitter = SentenceSplitter(
    separator=" ", chunk_size=1024, chunk_overlap=20,
    paragraph_separator="\n\n\n", secondary_chunking_regex="[^,.;。]+[,.;。]?",
    tokenizer=tiktoken.encoding_for_model("gpt-3.5-turbo").encode
)

node_parser = SimpleNodeParser.from_defaults(text_splitter=text_splitter)

TokenTextSplitter:

import tiktoken
from llama_index.node_parser import SimpleNodeParser
from llama_index.text_splitter import TokenTextSplitter

text_splitter = TokenTextSplitter(
    separator=" ", chunk_size=1024, chunk_overlap=20,
    backup_separators=["\n"],
    tokenizer=tiktoken.encoding_for_model("gpt-3.5-turbo").encode
)

node_parser = SimpleNodeParser.from_defaults(text_splitter=text_splitter)

CodeSplitter:

from llama_index.node_parser import SimpleNodeParser
from llama_index.text_splitter import CodeSplitter

text_splitter = CodeSplitter(
    language="python", chunk_lines=40, chunk_lines_overlap=15, max_chars=1500,
)

node_parser = SimpleNodeParser.from_defaults(text_splitter=text_splitter)

SentenceWindowNodeParser

For specific scope embeddings, use SentenceWindowNodeParser to split documents into individual sentences, also capturing the surrounding window of sentences.

import nltk
from llama_index.node_parser import SentenceWindowNodeParser

node_parser = SentenceWindowNodeParser.from_defaults(
    window_size=3, window_metadata_key="window", original_text_metadata_key="original_sentence"
)
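The idea behind a sentence window can be sketched without the library. The snippet below is only an approximation under stated assumptions: it splits sentences with a naive regex rather than nltk, represents nodes as plain dictionaries, and the function name `sentence_windows` is made up for this example. The metadata keys mirror the ones passed to the parser above.

```python
import re


def sentence_windows(text: str, window_size: int = 1) -> list[dict]:
    """Return one node per sentence, with neighbouring sentences kept in metadata."""
    # Naive splitter: break after ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    nodes = []
    for i, sentence in enumerate(sentences):
        lo = max(0, i - window_size)
        hi = min(len(sentences), i + window_size + 1)
        nodes.append(
            {
                "text": sentence,  # the part that would be embedded
                "metadata": {
                    "window": " ".join(sentences[lo:hi]),
                    "original_sentence": sentence,
                },
            }
        )
    return nodes


nodes = sentence_windows("Cats purr. Dogs bark. Birds sing. Fish swim.", window_size=1)
for node in nodes:
    print(node["text"], "->", node["metadata"]["window"])
```

The single sentence is what gets matched against a query, while the wider window is what can later be handed to the LLM for context.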

Manual Node Creation

For more control, manually create Node objects and define attributes and relationships:

from llama_index.schema import TextNode, NodeRelationship, RelatedNodeInfo

# Create TextNode objects
node1 = TextNode(text="<text_chunk>", id_="<node_id>")
node2 = TextNode(text="<text_chunk>", id_="<node_id>")

# Define node relationships
node1.relationships[NodeRelationship.NEXT] = RelatedNodeInfo(node_id=node2.node_id)
node2.relationships[NodeRelationship.PREVIOUS] = RelatedNodeInfo(node_id=node1.node_id)

# Gather nodes
nodes = [node1, node2]

In this snippet, TextNode creates nodes with text content while NodeRelationship and RelatedNodeInfo define node relationships.

Let us create basic nodes for the PDF and Wikipedia page documents we have created.

Create Index with Nodes and Documents

The core essence of LlamaIndex lies in its ability to build structured indices over ingested data, represented as either Documents or Nodes. This indexing facilitates efficient querying over the data. Let's delve into how to build indices with both Document and Node objects, and what happens under the hood during this process.

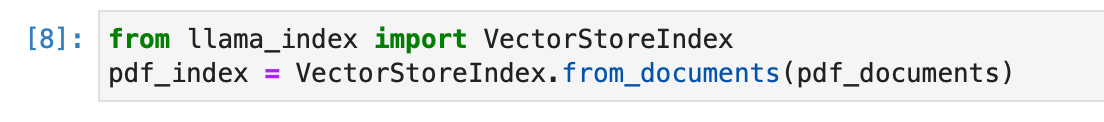

- Building Index from Documents

Here's how you can build an index directly from Documents using the VectorStoreIndex:

from llama_index import VectorStoreIndex

# Assuming docs is your list of Document objects
index = VectorStoreIndex.from_documents(docs)

Different types of indices in LlamaIndex handle data in distinct ways:

- Summary Index: Stores Nodes as a sequential chain, and during query time, all Nodes are loaded into the Response Synthesis module if no other query parameters are specified.

- Vector Store Index: Stores each Node and a corresponding embedding in a Vector Store, and queries involve fetching the top-k most similar Nodes.

- Tree Index: Builds a hierarchical tree from a set of Nodes, and queries involve traversing from root nodes down to leaf nodes.

- Keyword Table Index: Extracts keywords from each Node to build a mapping, and queries extract relevant keywords to fetch corresponding Nodes.

To choose your index, you should carefully evaluate the module guides and make a choice according to your use case.
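The "top-k most similar Nodes" lookup of a vector store index can be illustrated with plain Python. This is a conceptual sketch, not LlamaIndex code: the two-dimensional vectors are made up by hand (real embeddings come from an embedding model and have hundreds of dimensions or more), and the names `cosine`, `top_k` and `store` are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query_vec: list[float], store: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Score every stored vector against the query and keep the k best."""
    scored = [(node_id, cosine(query_vec, vec)) for node_id, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy "vector store": node id -> hand-written embedding.
store = {
    "node-a": [1.0, 0.0],
    "node-b": [0.6, 0.8],
    "node-c": [0.0, 1.0],
}
results = top_k([1.0, 0.0], store, k=2)
for node_id, score in results:
    print(node_id, round(score, 3))
```

Production vector stores avoid scoring every vector by using approximate nearest-neighbour structures, but the ranking they approximate is the one shown here.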

Under the Hood:

- The Documents are parsed into Node objects, which are lightweight abstractions over text strings that additionally keep track of metadata and relationships.

- Index-specific computations are performed to add Nodes into the index data structure. For example:

  - For a vector store index, an embedding model is called (either via API or locally) to compute embeddings for the Node objects.

  - For a document summary index, an LLM is called to generate a summary.

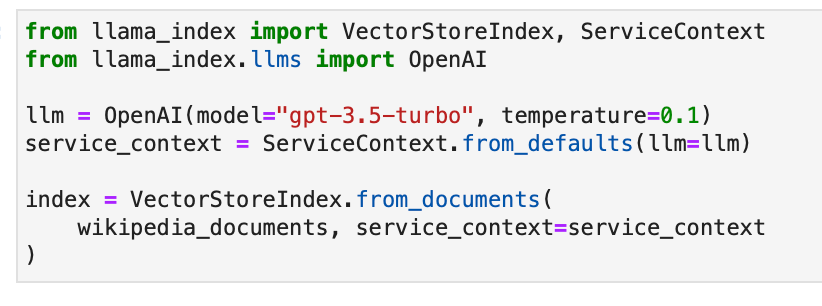

Let us create an index for the PDF file using the above code.

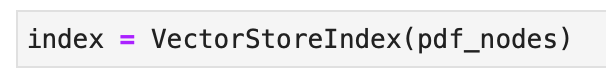

- Building Index from Nodes

You can also build an index directly from Node objects, following the parsing of Documents into Nodes or manual Node creation:

from llama_index import VectorStoreIndex

# Assuming nodes is your list of Node objects
index = VectorStoreIndex(nodes)

Let us go ahead and create a VectorStoreIndex for the PDF nodes.

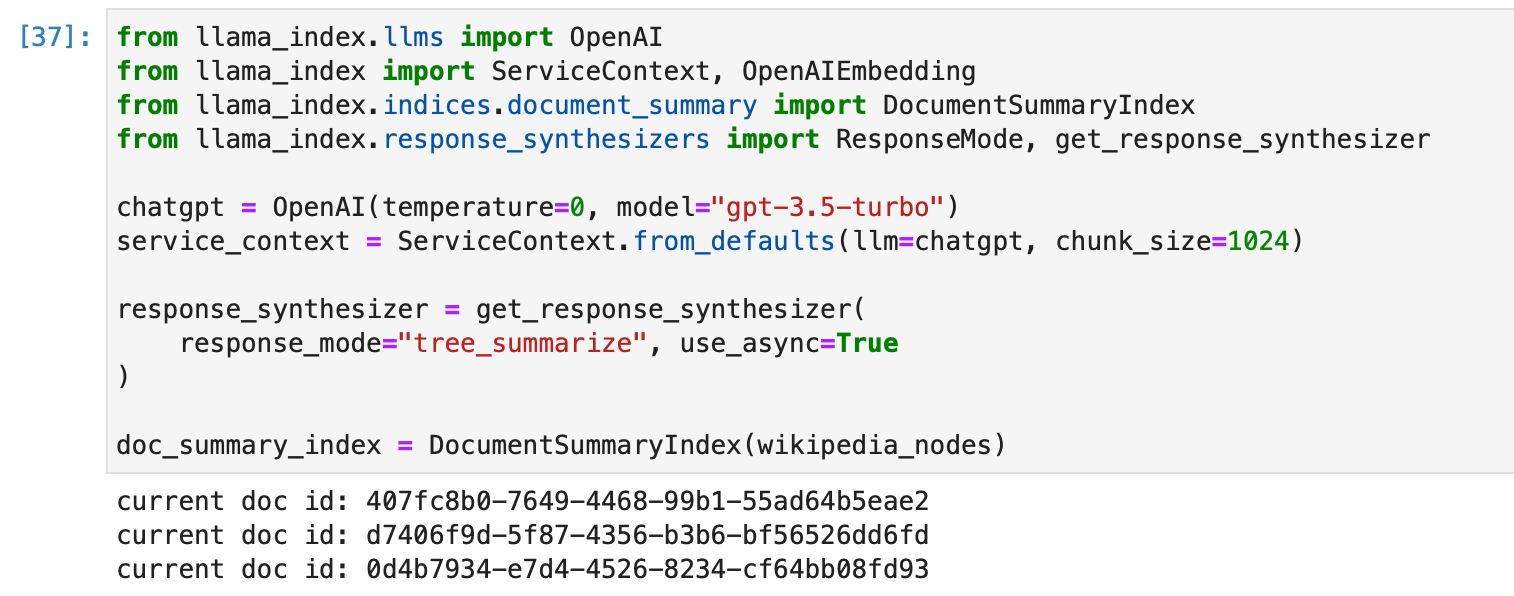

Let us now create a summary index for the Wikipedia nodes. We find the relevant index from the list of supported indices, and select the Document Summary Index.

We create the index with some customization as follows:

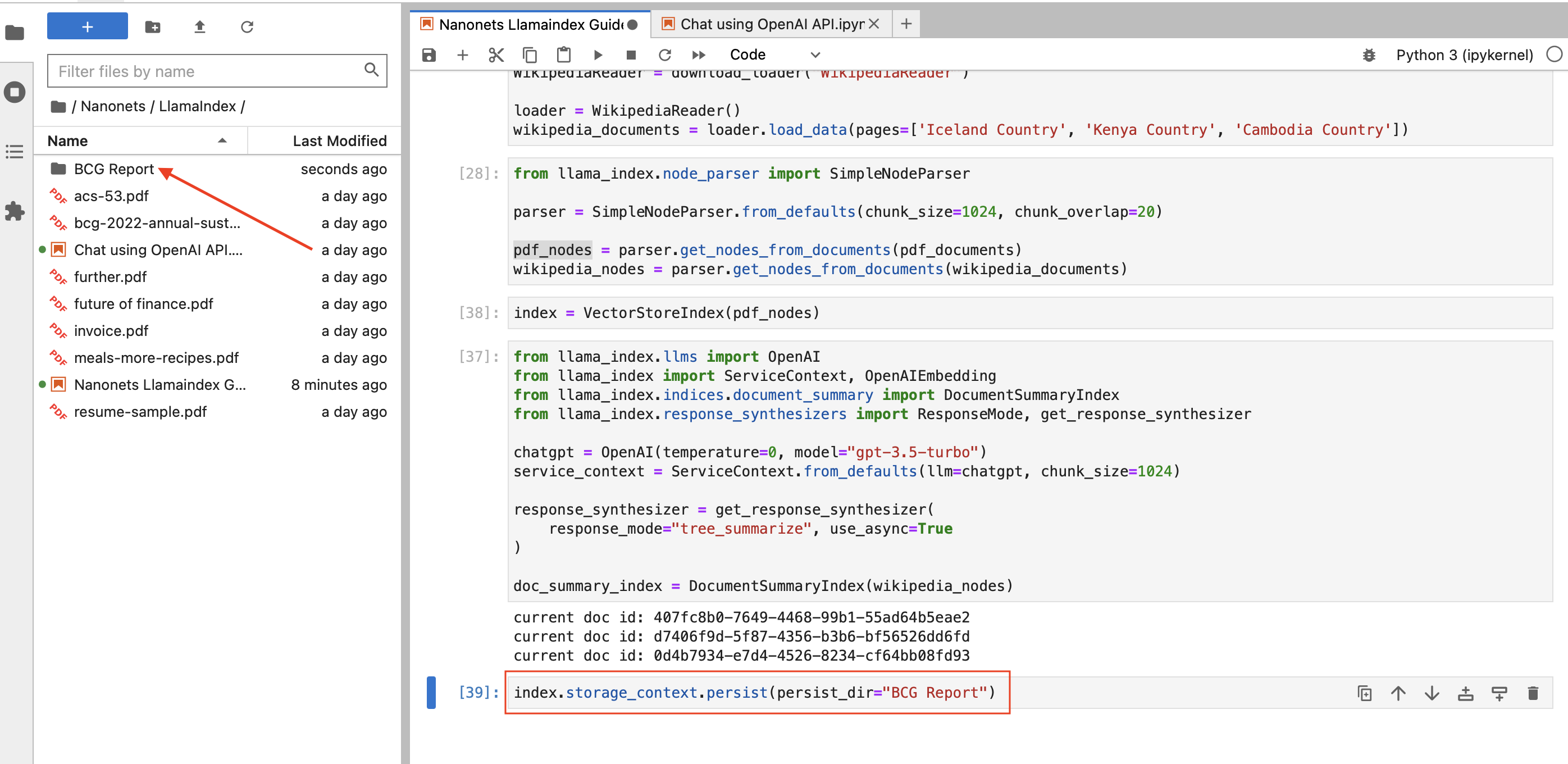

Storing an Index

LlamaIndex's storage capability is built for adaptability, especially when dealing with evolving data sources. This section outlines the functionalities provided for managing data storage, including customization and persistence features.

Persistence (Basic)

There might be instances where you want to save the index for future use, and LlamaIndex makes this easy. With the persist() method, you can store data, and with the load_index_from_storage() method, you can retrieve it.

# Persisting to disk
index.storage_context.persist(persist_dir="<persist_dir>")

# Loading from disk
from llama_index import StorageContext, load_index_from_storage

storage_context = StorageContext.from_defaults(persist_dir="<persist_dir>")
index = load_index_from_storage(storage_context)
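Conceptually, persisting and reloading is a serialize-then-deserialize round trip. The sketch below shows that idea with the standard library only. It makes no claim about the files or format LlamaIndex actually writes: the file name `toy_index.json`, the dictionary layout and the helper names `persist` and `load` are all assumptions for illustration.

```python
import json
import os
import tempfile


def persist(index_data: dict, persist_dir: str) -> str:
    """Write the toy index to <persist_dir>/toy_index.json and return the path."""
    os.makedirs(persist_dir, exist_ok=True)
    path = os.path.join(persist_dir, "toy_index.json")
    with open(path, "w", encoding="utf-8") as f:
        json.dump(index_data, f)
    return path


def load(persist_dir: str) -> dict:
    """Read the toy index back from the same directory."""
    with open(os.path.join(persist_dir, "toy_index.json"), encoding="utf-8") as f:
        return json.load(f)


# A made-up index: node id -> text plus embedding.
toy_index = {"node-1": {"text": "hello", "embedding": [0.1, 0.2]}}

with tempfile.TemporaryDirectory() as tmp:
    saved_path = persist(toy_index, tmp)
    restored = load(tmp)

print(restored == toy_index)
```

The practical benefit is the same as with the real API: embeddings are computed once at indexing time and reused on later runs instead of being recomputed.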

For example, we can save the PDF index as follows:

Storage Components (Advanced)

At its core, LlamaIndex provides customizable storage components enabling users to specify where various data elements are stored. These components include:

- Document Stores: The repositories for storing ingested documents represented as Node objects.

- Index Stores: The places where index metadata is kept.

- Vector Stores: The stores that hold embedding vectors.

LlamaIndex is versatile in its storage backend support, with confirmed support for:

- Local filesystem

- AWS S3

- Cloudflare R2

These backends are facilitated through the use of the fsspec library, which allows for a variety of storage backends.

For many vector stores, both data and index embeddings are stored together, eliminating the need for separate document or index stores. This arrangement also auto-handles data persistence, simplifying the process of building new indexes or reloading existing ones.

LlamaIndex has integrations with various vector stores that handle the entire index (vectors + text). Check the guide here.

Creating or reloading an index is illustrated below:

# build a new index
from llama_index import VectorStoreIndex, StorageContext
from llama_index.vector_stores import DeepLakeVectorStore

vector_store = DeepLakeVectorStore(dataset_path="<dataset_path>")
storage_context = StorageContext.from_defaults(vector_store=vector_store)
index = VectorStoreIndex.from_documents(documents, storage_context=storage_context)

# reload an existing index
index = VectorStoreIndex.from_vector_store(vector_store=vector_store)

To leverage storage abstractions, a StorageContext object needs to be defined, as shown below:

from llama_index.storage.docstore import SimpleDocumentStore
from llama_index.storage.index_store import SimpleIndexStore
from llama_index.vector_stores import SimpleVectorStore
from llama_index.storage import StorageContext

storage_context = StorageContext.from_defaults(
    docstore=SimpleDocumentStore(),
    vector_store=SimpleVectorStore(),
    index_store=SimpleIndexStore(),
)

Learn more about storage and using custom storage components here.

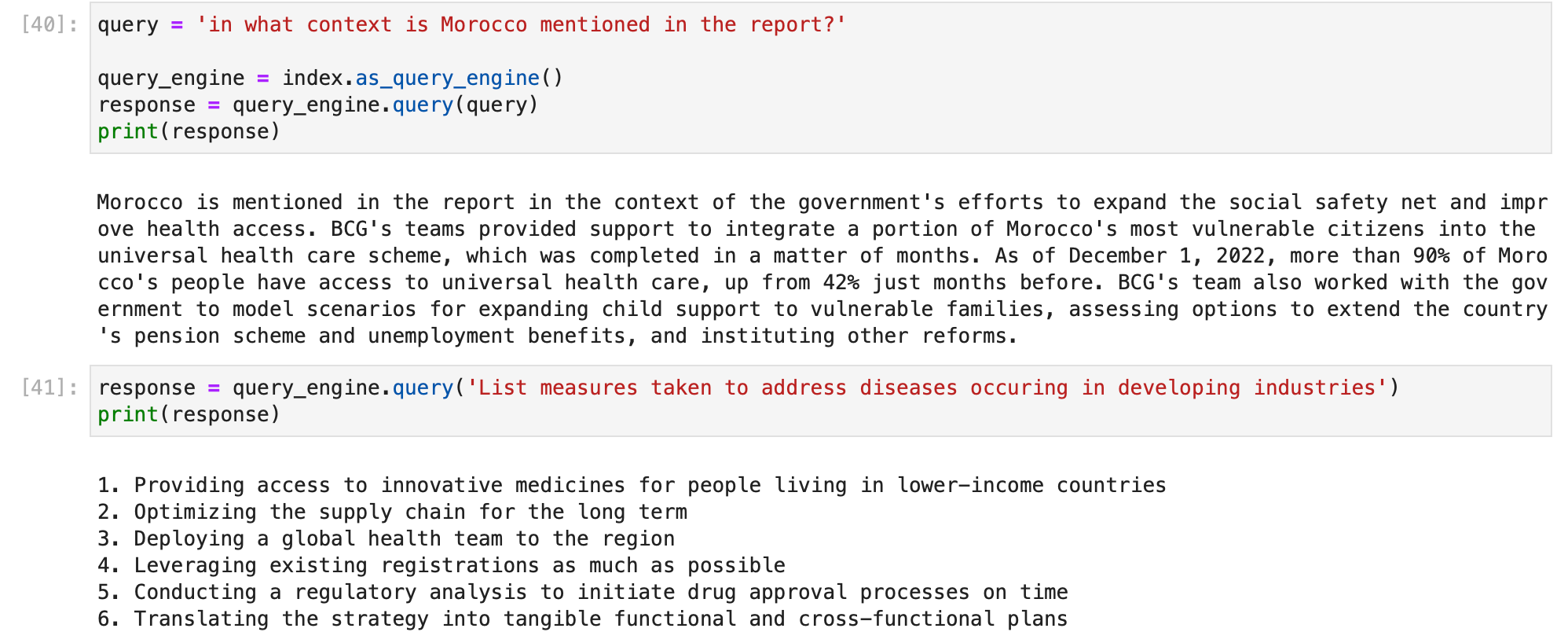

Using Index to Query Data

After having established a well-structured index using LlamaIndex, the next pivotal step is querying this index to extract meaningful insights or answers to specific inquiries. This segment explains the process and methods available for querying the data indexed in LlamaIndex.

Before diving into querying, ensure that you have a well-constructed index as discussed in the previous section. Your index could be built on documents or nodes, and could be a single index or composed of multiple indices.

High-Level Query API

LlamaIndex provides a high-level API that facilitates easy querying, ideal for common use cases.

# Assuming 'index' is your constructed index object
query_engine = index.as_query_engine()

response = query_engine.query("your_query")
print(response)

In this simple approach, the as_query_engine() method is used to create a query engine from your index, and the query() method to execute a query.

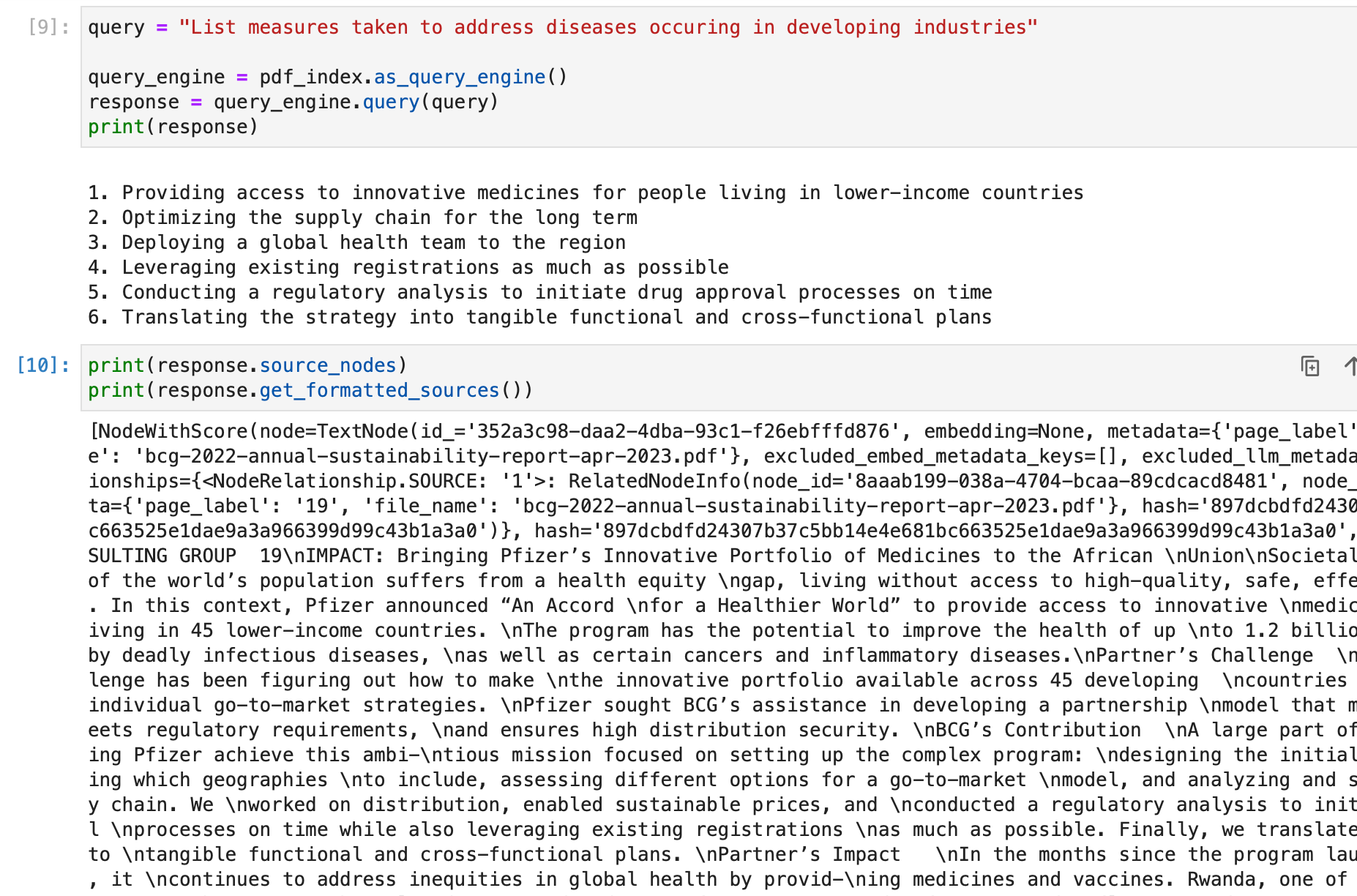

We can try this out on our PDF index:

By default, index.as_query_engine() creates a query engine with the default settings specified in LlamaIndex.

You can choose your own query engine based on your use case from the list here and use that to query your index:

Module Guides – LlamaIndex 🦙 0.8.46

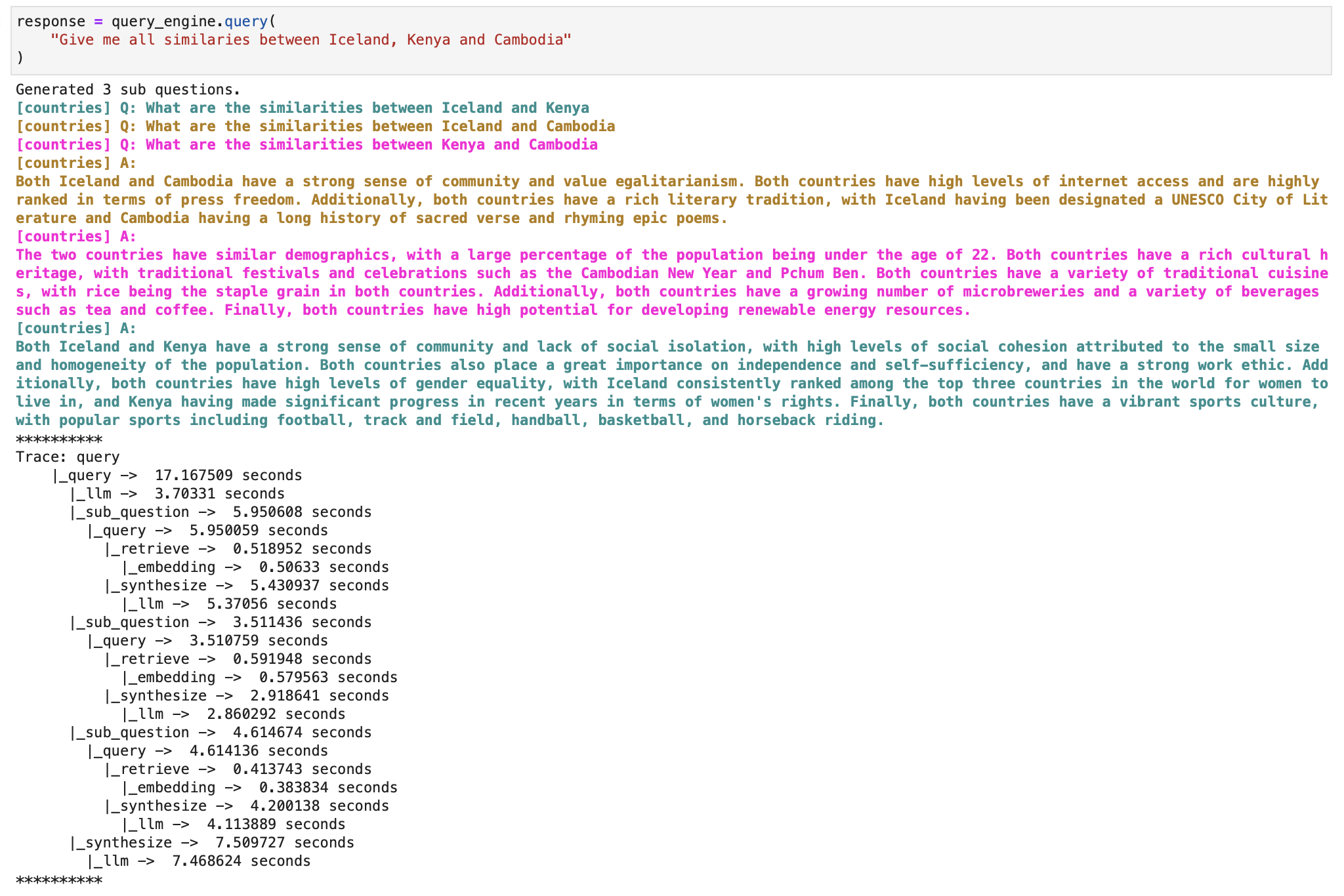

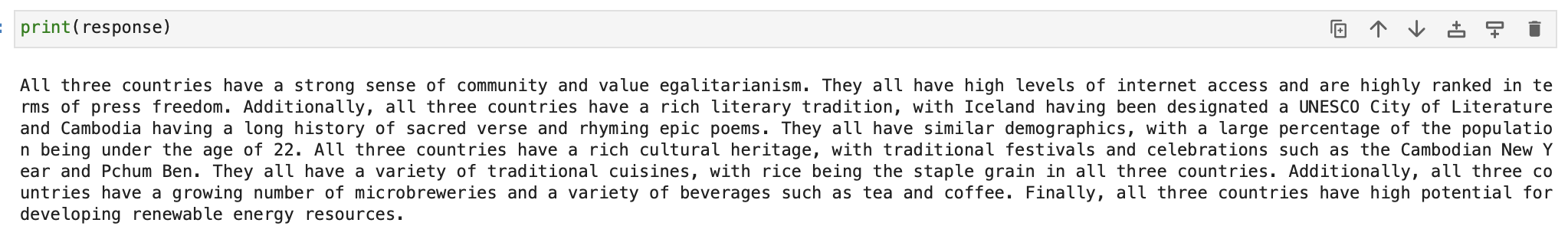

For example, let us use a sub question query engine to tackle the problem of answering a complex query using multiple data sources. It first breaks down the complex query into sub questions for each relevant data source, then gathers all the intermediate responses and synthesizes a final response. We'll apply it to our Wikipedia index.

We'll follow the sub question query engine documentation.

Let us import the required libraries and set the context variable to ensure we can print the subtasks undertaken by the query engine instead of just printing the final response.

import nest_asyncio
from llama_index import VectorStoreIndex, SimpleDirectoryReader
from llama_index.tools import QueryEngineTool, ToolMetadata
from llama_index.query_engine import SubQuestionQueryEngine
from llama_index.callbacks import CallbackManager, LlamaDebugHandler
from llama_index import ServiceContext

nest_asyncio.apply()

# We are using the LlamaDebugHandler to print the trace of the sub questions
# captured by the SUB_QUESTION callback event type
llama_debug = LlamaDebugHandler(print_trace_on_end=True)
callback_manager = CallbackManager([llama_debug])

service_context = ServiceContext.from_defaults(
    callback_manager=callback_manager
)

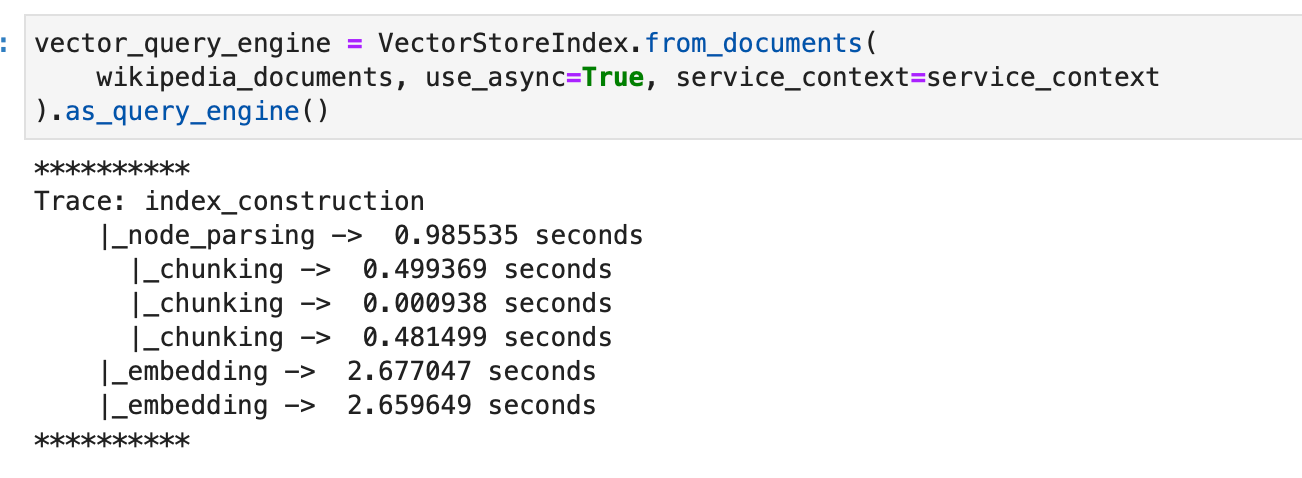

We first create a basic vector index as instructed by the documentation.

Now we create the sub question query engine.

Now, querying and asking for the response traces the sub questions that the query engine internally computed to get to the final response.

And the final response given by the engine can now be printed.


Low-Level Composition API

For more granular control or advanced querying scenarios, the low-level composition API is available. This allows for customization at various stages of the query process.

Right at the beginning of this blog, we mentioned that there are three supplementary blocks within the query engine / chat engine / agent that can be configured while creating them:

Retrievers

They dictate the technique of fetching relevant context from the knowledge base against a query. For example, Dense Retrieval against a vector index is a prevalent approach.

Choose the right retriever here:

Module Guides – LlamaIndex 🦙 0.8.46

Node Postprocessors

They refine the set of nodes through transformation, filtering, or re-ranking.

Summary of LlamaIndex Postprocessors:

- SimilarityPostprocessor:

  - Removes nodes below a certain similarity score.

  - Set the threshold using similarity_cutoff.

- KeywordNodePostprocessor:

  - Filters nodes based on keyword inclusion or exclusion.

  - Use required_keywords and exclude_keywords.

- MetadataReplacementPostProcessor:

  - Replaces node content with data from its metadata.

  - Works well with SentenceWindowNodeParser.

- LongContextReorder:

  - Addresses models' difficulty with extended contexts. It reorders nodes, which benefits situations where a large number of top results are essential.

- SentenceEmbeddingOptimizer:

  - Removes irrelevant sentences based on embeddings.

  - Choose either percentile_cutoff or threshold_cutoff for relevance.

- CohereRerank:

  - Uses Cohere ReRank to reorder nodes, returning the top N results.

- SentenceTransformerRerank:

  - Uses sentence-transformer cross-encoders to reorder nodes, yielding the top N nodes.

  - Various models are available with different speed/accuracy trade-offs.

- LLMRerank:

  - Uses an LLM to reorder nodes, providing a relevance score for each.

- FixedRecencyPostprocessor:

  - Returns nodes sorted by date. Requires a date field in node metadata.

- EmbeddingRecencyPostprocessor:

  - Ranks nodes by date, but also removes older similar nodes based on embedding similarity.

- TimeWeightedPostprocessor:

  - Reranks nodes with a bias towards information not recently returned.

- PIINodePostprocessor (Beta):

  - Removes personally identifiable information. Can use either a local LLM or a NER model.

- PrevNextNodePostprocessor (Beta):

  - Based on node relationships, retrieves nodes that come before, after, or both in sequence.

- AutoPrevNextNodePostprocessor (Beta):

  - Similar to the above, but lets the LLM decide the relationship direction.

Note: Many of these postprocessors come with detailed notebook guides for further instructions. Read them here.
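To see what the two simplest postprocessors in the list do, here is a small stand-alone sketch of a similarity cutoff followed by a keyword filter. It is a conceptual illustration only: the `ScoredNode` dataclass, the function names and the scores are made up, and the real LlamaIndex classes operate on their own node types.

```python
from dataclasses import dataclass


@dataclass
class ScoredNode:
    """Hypothetical stand-in for a retrieved node and its similarity score."""
    text: str
    score: float


def similarity_cutoff(nodes: list[ScoredNode], cutoff: float) -> list[ScoredNode]:
    """Drop nodes whose score falls below the cutoff."""
    return [n for n in nodes if n.score >= cutoff]


def keyword_filter(nodes, required=(), excluded=()) -> list[ScoredNode]:
    """Keep nodes containing every required keyword and no excluded keyword."""
    kept = []
    for node in nodes:
        lowered = node.text.lower()
        has_required = all(k.lower() in lowered for k in required)
        has_excluded = any(k.lower() in lowered for k in excluded)
        if has_required and not has_excluded:
            kept.append(node)
    return kept


# Made-up retrieval results.
retrieved = [
    ScoredNode("Paris is the capital of France.", 0.91),
    ScoredNode("France borders Spain.", 0.74),
    ScoredNode("Bananas are yellow.", 0.12),
]

# Postprocessors are applied one after another, each narrowing the list.
filtered = keyword_filter(
    similarity_cutoff(retrieved, cutoff=0.7),
    required=["France"],
    excluded=["Spain"],
)
print([n.text for n in filtered])
```

Chaining works because every postprocessor takes a list of nodes and returns a list of nodes, so the order in which you list them determines the order of filtering.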

Response Synthesizers

They channel the LLM to generate responses, blending the user query with retrieved text chunks.

Response synthesizers might sound fancy, but they're actually tools that help generate a reply or answer based on your question and some given text data. Let's break it down.

Imagine you have a bunch of pieces of text (like a pile of books). Now, you ask a question and want an answer based on those texts. The response synthesizer is like a librarian who goes through the texts, finds relevant information, and crafts a reply for you.

Think of the whole process in the query engine as a factory line:

- First, a machine pulls out relevant text pieces based on your question. We've already discussed this. (Retriever)

- Then, if needed, there's a step that can fine-tune these pieces. We've already discussed this. (Node Postprocessor)

- Finally, the response synthesizer takes these pieces and gives you a neatly crafted answer. (Response Synthesizer)

Response synthesizers come in various styles:

- Refine: This method goes through each text piece, refining the answer bit by bit.

- Compact: A shorter version of 'Refine.' It bunches the texts together, so there are fewer steps to refine.

- Tree Summarize: Imagine taking many small answers, combining them, and summarizing again until you have one main answer.

- Simple Summarize: Just cuts the text pieces to fit and gives a quick summary.

- No Text: This one doesn't give you an answer but tells you which text pieces it would have used.

- Accumulate: Think of this as getting a bunch of mini-answers for each text piece and then sticking them together.

- Compact Accumulate: A mixed bag of 'Compact' and 'Accumulate.'
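The difference between 'Refine' and 'Compact' is easiest to see by counting LLM calls. The sketch below uses a fake LLM that only counts how often it is invoked. Everything here is an assumption for illustration: the prompt wording, the character-based packing limit (a real implementation would budget by tokens against the context window) and the names `CountingLLM`, `refine` and `compact`.

```python
class CountingLLM:
    """Fake LLM: returns a numbered draft and counts how often it was called."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, prompt: str) -> str:
        self.calls += 1
        return f"draft {self.calls}"


def refine(query: str, chunks: list[str], llm) -> str:
    """One LLM call per chunk, each call improving the previous answer."""
    answer = ""
    for chunk in chunks:
        answer = llm(f"Question: {query}\nExisting answer: {answer}\nNew context: {chunk}")
    return answer


def compact(query: str, chunks: list[str], llm, max_chars: int = 25) -> str:
    """Pack chunks together up to max_chars, then refine over the packed chunks."""
    packed, current = [], ""
    for chunk in chunks:
        if current and len(current) + len(chunk) + 1 > max_chars:
            packed.append(current)
            current = chunk
        else:
            current = f"{current} {chunk}".strip()
    if current:
        packed.append(current)
    return refine(query, packed, llm)


chunks = ["alpha fact", "beta fact", "gamma fact"]

refine_llm = CountingLLM()
refine_answer = refine("What do we know?", chunks, refine_llm)

compact_llm = CountingLLM()
compact_answer = compact("What do we know?", chunks, compact_llm)

print(refine_llm.calls, compact_llm.calls)
```

With three chunks, refining makes three calls, while compacting first merges the two chunks that fit together and so needs only two. Fewer calls usually means lower latency and cost.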

If you're tech-savvy, you can even build your own custom synthesizer. The primary job of any synthesizer is to take a question and some text pieces and give back a string of text as an answer.

Below is the basic structure that every response synthesizer should have. It should be able to take in a question and pieces of text and then give back an answer.

from abc import ABC, abstractmethod
from typing import Any, Optional, Sequence

# ServiceContext and RESPONSE_TEXT_TYPE are defined by llama_index


class BaseSynthesizer(ABC):
    """Response builder class."""

    def __init__(
        self,
        service_context: Optional[ServiceContext] = None,
        streaming: bool = False,
    ) -> None:
        """Init params."""
        self._service_context = service_context or ServiceContext.from_defaults()
        self._callback_manager = self._service_context.callback_manager
        self._streaming = streaming

    @abstractmethod
    def get_response(
        self,
        query_str: str,
        text_chunks: Sequence[str],
        **response_kwargs: Any,
    ) -> RESPONSE_TEXT_TYPE:
        """Get response."""
        ...

    @abstractmethod
    async def aget_response(
        self,
        query_str: str,
        text_chunks: Sequence[str],
        **response_kwargs: Any,
    ) -> RESPONSE_TEXT_TYPE:
        """Get response asynchronously."""
        ...

Using a response synthesizer directly (without the other steps we did in previous sections) is also possible and can be as simple as:

from llama_index import get_response_synthesizer

# Set up the synthesizer
my_synthesizer = get_response_synthesizer(response_mode="compact")

# Ask a question
response = my_synthesizer.synthesize("What is the meaning of life?", nodes=[...])

Using it on your index along with your configured retrievers and node postprocessors can be done as follows:

from llama_index import (
    VectorStoreIndex,
    get_response_synthesizer,
)
from llama_index.retrievers import VectorIndexRetriever
from llama_index.query_engine import RetrieverQueryEngine
from llama_index.indices.postprocessor import SimilarityPostprocessor

# Build index and configure retriever
index = VectorStoreIndex.from_documents(documents)

retriever = VectorIndexRetriever(
    index=index,
    similarity_top_k=2,
)

# Configure response synthesizer
response_synthesizer = get_response_synthesizer()

# Assemble query engine with postprocessors
query_engine = RetrieverQueryEngine(
    retriever=retriever,
    response_synthesizer=response_synthesizer,
    node_postprocessors=[
        SimilarityPostprocessor(similarity_cutoff=0.7)
    ]
)

# Execute the query
response = query_engine.query("your_query")
print(response)

In the snippet above, the VectorIndexRetriever, RetrieverQueryEngine, and SimilarityPostprocessor are used to construct a customized query engine. This example demonstrates a more controlled query process.

Parsing the Response

Post query, a Response object is returned which contains the response text and the sources of the response.

response = query_engine.query("<query_str>")

# Get response
print(str(response))

# Get sources
print(response.source_nodes)
print(response.get_formatted_sources())

This structure allows for a detailed examination of the query output and the sources contributing to the response.

Structured Outputs

In today's data-driven world, structured data is pivotal for a streamlined workflow. LlamaIndex understands this and taps into the capabilities of Large Language Models (LLMs) to deliver structured results. Let's explore how.

Structured results aren't just a fancy way of presenting data; they're crucial for applications that depend on the precision and fixed structure of parsed values.

LLMs can give structured outputs in two ways:

Method 1: Pydantic Programs

With function calling APIs, you get a naturally structured result, which then gets molded into the desired format using Pydantic Programs. These nifty modules convert a prompt into a well-structured output using a Pydantic object. They can either call functions or use text completions along with output parsers. Plus, they gel well with search tools. LlamaIndex also offers ready-to-use Pydantic programs that change certain inputs into specific output types, like data tables.

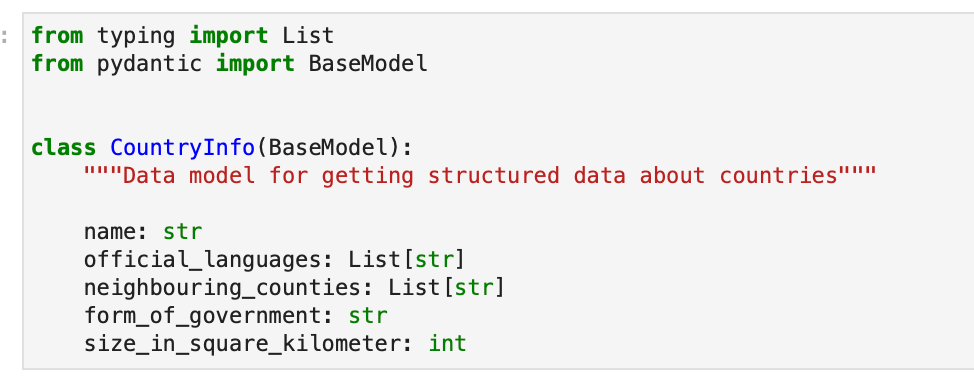

Let us follow the Pydantic Programs documentation to extract structured data about these countries from the unstructured Wikipedia articles.

We create our Pydantic output object.

We then create our index using the Wikipedia document objects.

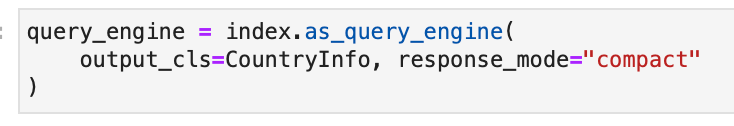

We initialize our query engine and specify the Pydantic output class.

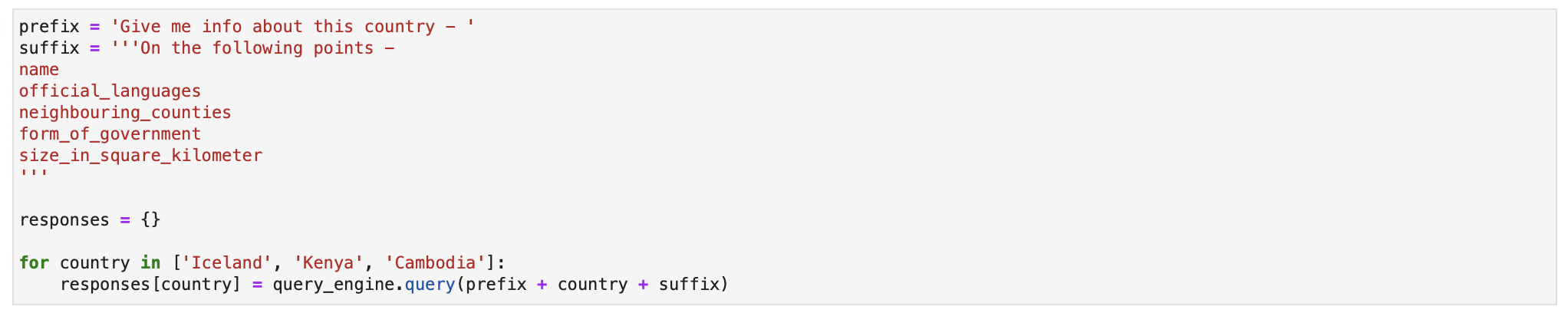

We can now expect a structured response from the query engine. Let us retrieve this information for the three countries.

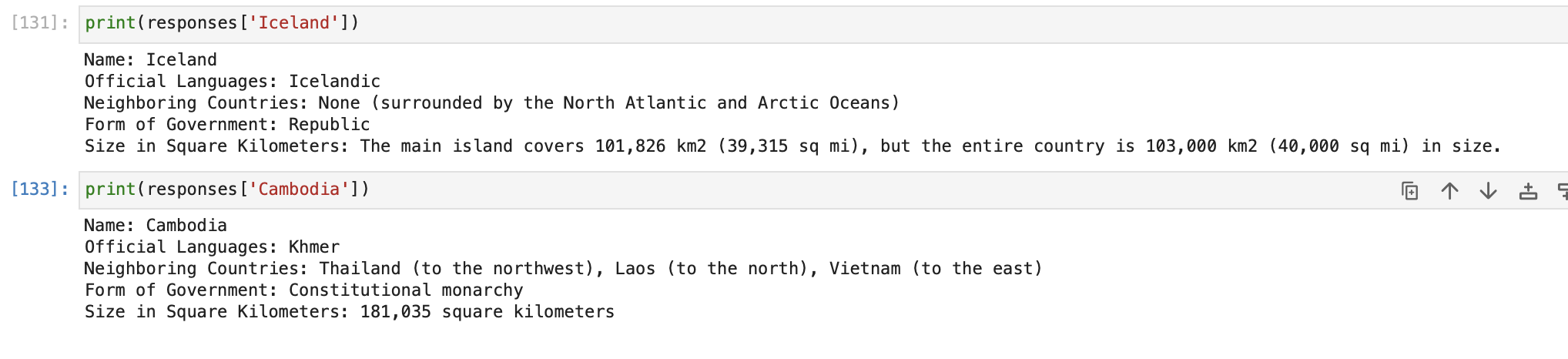

Let us inspect the responses object now.

Remember, while any LLM can technically produce these structured outputs, integration outside of OpenAI LLMs is still a work in progress.
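As a rough illustration of what a structured output class buys you, the sketch below uses only the standard library: a dataclass stands in for the Pydantic model, and a hard-coded JSON string stands in for the arguments an LLM might return through a function calling API. The Country fields and the sample values are hypothetical and are not taken from the walkthrough above.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class Country:
    """Hypothetical output schema; a Pydantic model would play this role."""
    name: str
    capital: str
    languages: list


def parse_structured(raw_json, schema):
    """Check that the raw JSON carries exactly the schema's fields, then build the object."""
    payload = json.loads(raw_json)
    expected = {f.name for f in fields(schema)}
    if set(payload) != expected:
        raise ValueError(f"expected fields {sorted(expected)}, got {sorted(payload)}")
    return schema(**payload)


# Stand-in for what an LLM might return through a function calling API.
raw = '{"name": "Canada", "capital": "Ottawa", "languages": ["English", "French"]}'
country = parse_structured(raw, Country)
print(country.capital)
```

Downstream code can then rely on attribute access (country.capital) instead of parsing free text.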

Method 2: Output Parsers

We can also use generic completion APIs, where text prompts dictate inputs and outputs. Here, the output parser ensures the final product aligns with the desired structure, guiding the LLM before and after its task. This is done with the help of Output Parsers, which act as gatekeepers just before the final response is generated. They sit before and after an LLM text response and ensure everything is in order.

However, if you’re using the LLM functions discussed above that already give structured outputs, you won’t need these.
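The "before and after" role of an output parser can be sketched in a few lines of plain Python. This is an illustrative toy, not the LlamaIndex or LangChain implementation: the JsonOutputParser class, its method names, and the hard-coded completion are all assumptions made for the example.

```python
import json


class JsonOutputParser:
    """Hypothetical parser that wraps a prompt and validates the reply."""

    def __init__(self, required_keys):
        self.required_keys = list(required_keys)

    def format(self, query):
        """Runs before the LLM call: append formatting instructions to the prompt."""
        keys = ", ".join(self.required_keys)
        return f"{query}\n\nRespond only with a JSON object containing the keys: {keys}."

    def parse(self, output):
        """Runs after the LLM call: extract the JSON object and check its keys."""
        start, end = output.find("{"), output.rfind("}")
        if start == -1 or end == -1:
            raise ValueError("no JSON object found in the LLM output")
        data = json.loads(output[start:end + 1])
        missing = [key for key in self.required_keys if key not in data]
        if missing:
            raise ValueError(f"missing keys: {missing}")
        return data


parser = JsonOutputParser(["Education", "Work"])
prompt = parser.format("What did the author do growing up?")

# Stand-in for a raw completion; a real LLM call would go here.
fake_completion = 'Sure! {"Education": "example value", "Work": "example value"}'
result = parser.parse(fake_completion)
print(result)
```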

We’ll follow the Output Parsers documentation here.

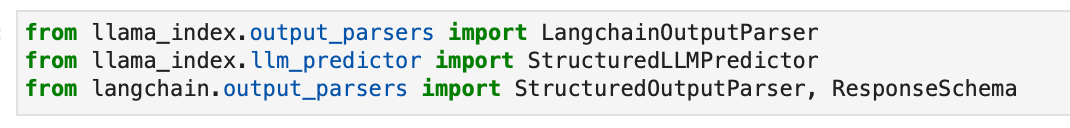

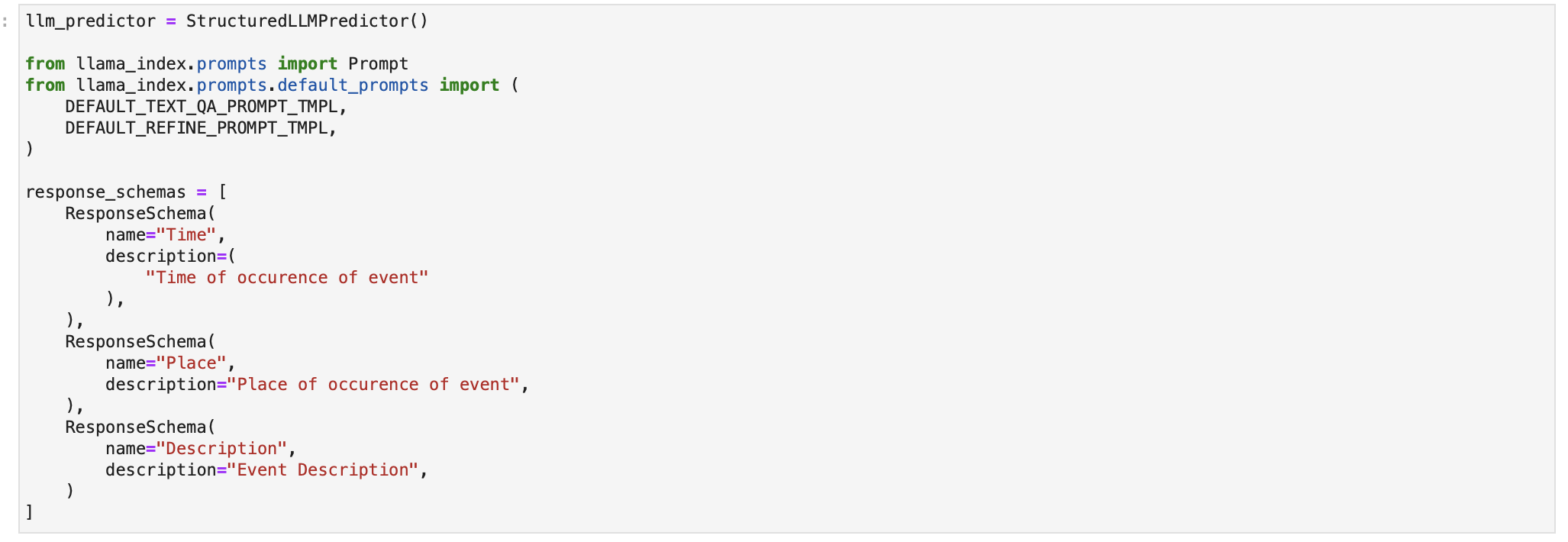

Let us import the LangChain output parser now.

We now define the structured LLM and the response format as shown in the documentation.

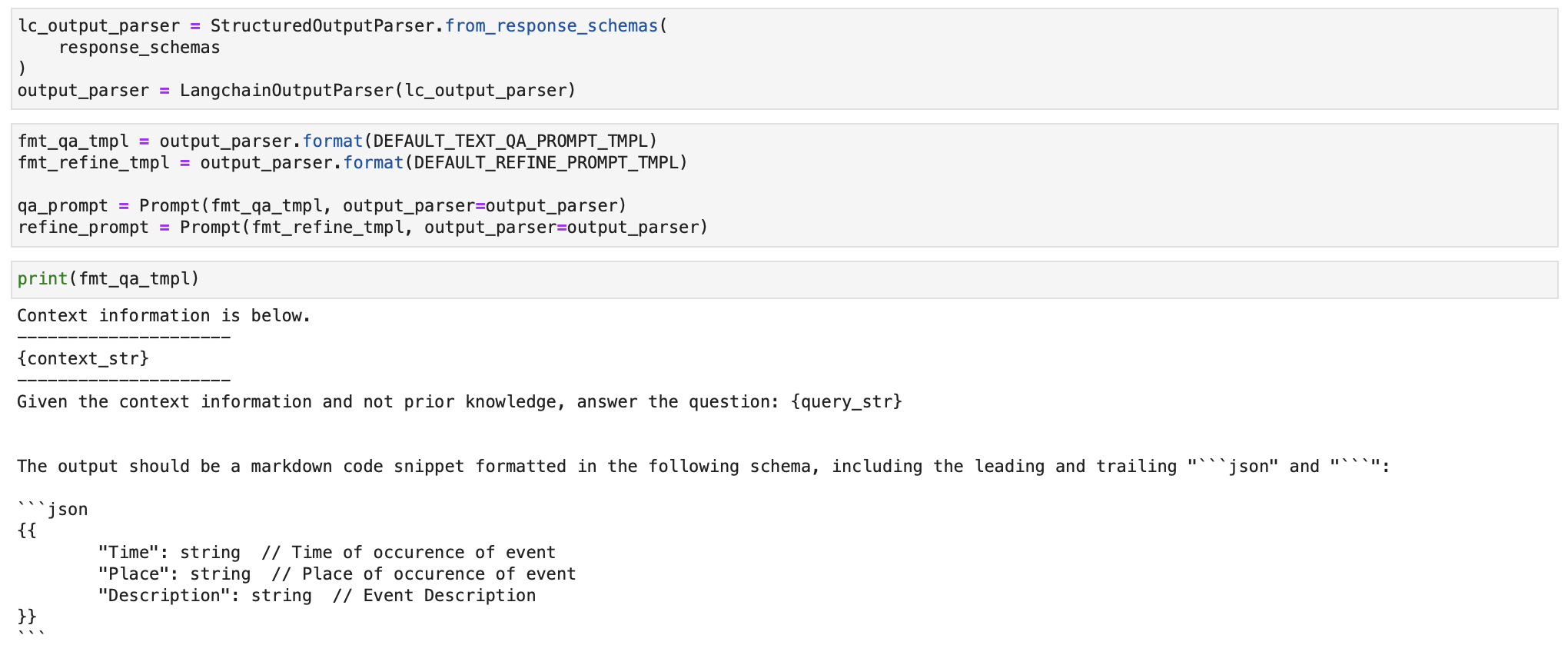

We define the output parser and its query template using the response_schemas defined above.

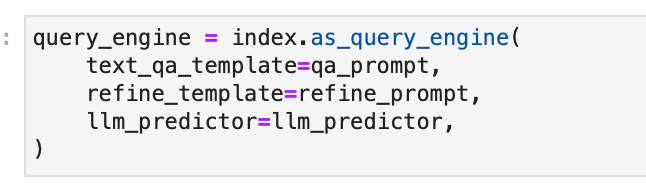

We define the query engine and pass the structured output parser template to it while creating it.

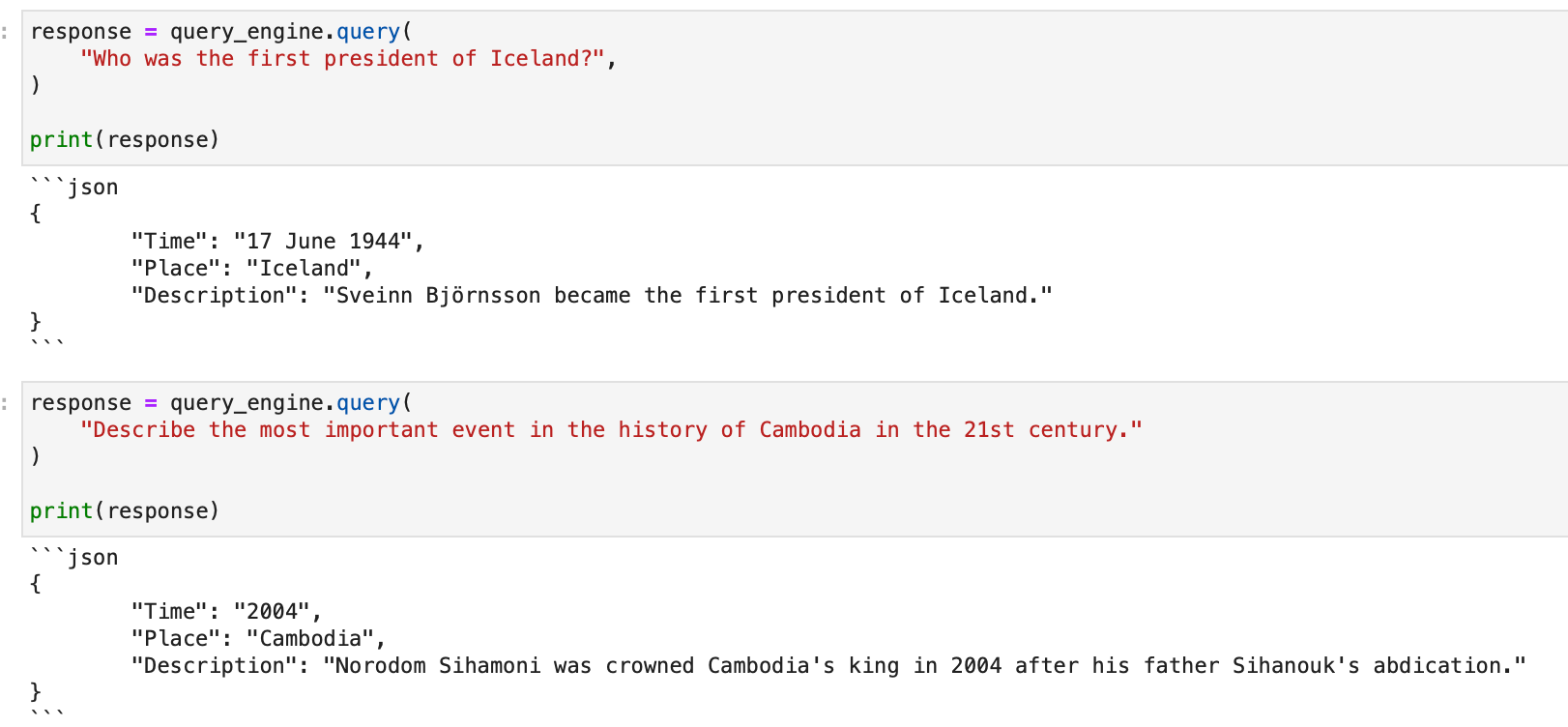

Running any query now fetches a structured JSON output!

Structured outputs with LlamaIndex make parsing and downstream processes a breeze, ensuring you get the most out of your LLMs.

Connect your data and apps with Nanonets AI Assistant to chat with data, deploy custom chatbots & agents, and create AI workflows that perform tasks in your apps.

Using Index to Chat with Data

Engaging in a conversation with your data takes querying a step further. LlamaIndex introduces the concept of a Chat Engine to facilitate a more interactive and contextual dialogue with your data. This section covers setting up and using the Chat Engine for a richer interaction with your indexed data.

Understanding the Chat Engine

A Chat Engine provides a high-level interface to have a back-and-forth conversation with your data, as opposed to the single question-answer interaction facilitated by the Query Engine. By maintaining a history of the conversation, the Chat Engine can provide answers that are contextually aware of previous interactions.

Tip: For standalone queries without conversation history, use the Query Engine.

Getting Started with Chat Engine

Initiating a conversation is simple. Here’s how you can get started:

# Build a chat engine from the index

chat_engine = index.as_chat_engine()

# Start a conversation

response = chat_engine.chat("Tell me a joke.")

# For streaming responses

streaming_response = chat_engine.stream_chat("Tell me a joke.")

for token in streaming_response.response_gen:

    print(token, end="")

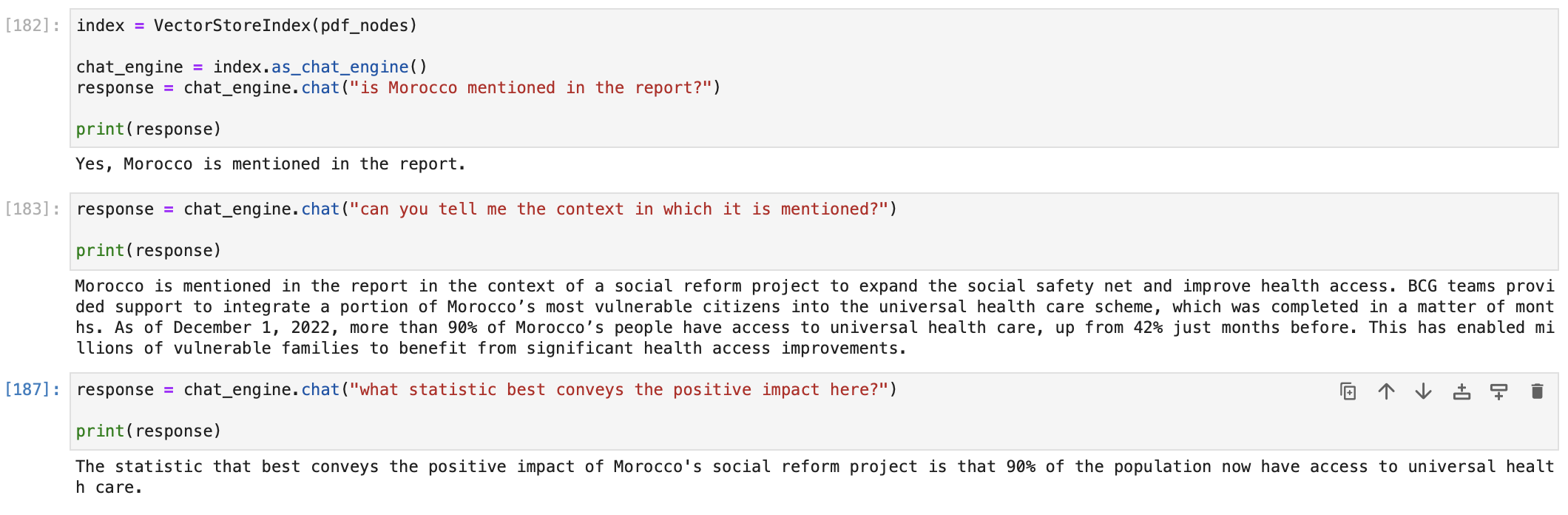

For example, we can start a chat with our PDF document on the BCG Annual Sustainability Report as follows.

You can choose the chat engine based on your use case. LlamaIndex offers a range of chat engine implementations, catering to different needs and levels of sophistication. These engines are designed to facilitate conversations and interactions with users, each offering a unique set of features.

- SimpleChatEngine

The SimpleChatEngine is a basic chat mode that doesn’t rely on a knowledge base. It provides a starting point for chatbot development. However, it might not handle complex queries well due to its lack of a knowledge base.

- ReAct Agent Mode

ReAct is an agent-based chat mode built on top of a query engine over your data. It follows a flexible approach where the agent decides whether or not to use the query engine tool to generate responses. This mode is versatile but highly dependent on the quality of the language model (LLM) and may require more control to ensure accurate responses. You can customize the LLM used in ReAct mode.

Implementation Example:

# Load data and build index

from llama_index import VectorStoreIndex, SimpleDirectoryReader, ServiceContext

from llama_index.llms import OpenAI

service_context = ServiceContext.from_defaults(llm=OpenAI())

data = SimpleDirectoryReader(input_dir="../data/paul_graham/").load_data()

index = VectorStoreIndex.from_documents(data, service_context=service_context)

# Configure chat engine

chat_engine = index.as_chat_engine(chat_mode="react", verbose=True)

# Chat with your data

response = chat_engine.chat("What did Paul Graham do in the summer of 1995?")

- OpenAI Agent Mode

OpenAI Agent Mode leverages OpenAI’s powerful language models like GPT-3.5 Turbo. It’s designed for general interactions and can perform specific functions like querying a knowledge base. It’s particularly suitable for a wide range of chat applications.

Implementation Example:

# Load data and build index

from llama_index import VectorStoreIndex, SimpleDirectoryReader, ServiceContext

from llama_index.llms import OpenAI

service_context = ServiceContext.from_defaults(llm=OpenAI(model="gpt-3.5-turbo-0613"))

data = SimpleDirectoryReader(input_dir="../data/paul_graham/").load_data()

index = VectorStoreIndex.from_documents(data, service_context=service_context)

# Configure chat engine

chat_engine = index.as_chat_engine(chat_mode="openai", verbose=True)

# Chat with your data

response = chat_engine.chat("Hello")

- Context Mode

The ContextChatEngine is a simple chat mode built on top of a retriever over your data. It retrieves relevant text from the index based on the user’s message, sets this retrieved text as context in the system prompt, and returns an answer. This mode is ideal for questions related to the knowledge base and general interactions.

Implementation Example:

# Load data and build index

from llama_index import VectorStoreIndex, SimpleDirectoryReader

data = SimpleDirectoryReader(input_dir="../data/paul_graham/").load_data()

index = VectorStoreIndex.from_documents(data)

# Configure chat engine

from llama_index.memory import ChatMemoryBuffer

memory = ChatMemoryBuffer.from_defaults(token_limit=1500)

chat_engine = index.as_chat_engine(

    chat_mode="context",

    memory=memory,

    system_prompt=(

        "You are a chatbot, able to have normal interactions, as well as talk"

        " about an essay discussing Paul Graham's life."

    ),

)

# Chat with your data

response = chat_engine.chat("Hello!")
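Conceptually, context mode does two things on every turn: it retrieves text related to the user’s message and places it in the system prompt, and it keeps the chat history within a token budget. The standalone sketch below imitates both steps with toy helpers. The word-overlap retriever, the word-count "tokens", and all function names are assumptions for illustration, not how LlamaIndex implements them.

```python
import re


def tokenize(text):
    """Lowercase word tokens; a crude stand-in for real tokenization."""
    return re.findall(r"\w+", text.lower())


def retrieve(message, chunks, top_k=1):
    """Toy retriever: rank chunks by how many words they share with the message."""
    words = set(tokenize(message))
    ranked = sorted(chunks, key=lambda chunk: len(words & set(tokenize(chunk))), reverse=True)
    return ranked[:top_k]


def trim_history(history, token_limit):
    """Drop the oldest messages until the history fits the token budget."""
    trimmed = list(history)
    while trimmed and sum(len(tokenize(m["content"])) for m in trimmed) > token_limit:
        trimmed.pop(0)
    return trimmed


def build_messages(system_prompt, message, chunks, history, token_limit=1500):
    """Assemble what a context-style chat engine would send to the LLM."""
    context = "\n".join(retrieve(message, chunks))
    system = f"{system_prompt}\n\nContext:\n{context}"
    return (
        [{"role": "system", "content": system}]
        + trim_history(history, token_limit)
        + [{"role": "user", "content": message}]
    )


chunks = [
    "The essay describes the author's early experiments with programming.",
    "A later section covers how the author started a company.",
]
history = [
    {"role": "user", "content": "Hello!"},
    {"role": "assistant", "content": "Hi there."},
]
messages = build_messages(
    "You are a helpful chatbot.", "Tell me about the company", chunks, history
)
print(messages[0]["content"])
```

Because retrieval runs on every message, this style of engine can answer knowledge-base questions while still handling small talk through the plain chat history.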

- Condense Question Mode

The Condense Question mode generates a standalone question from the conversation context and the last message. It then queries the query engine with this condensed question to provide a response. This mode is suitable for questions directly related to the knowledge base.

Implementation Example:

# Load data and build index

from llama_index import VectorStoreIndex, SimpleDirectoryReader

data = SimpleDirectoryReader(input_dir="../data/paul_graham/").load_data()

index = VectorStoreIndex.from_documents(data)

# Configure chat engine

chat_engine = index.as_chat_engine(chat_mode="condense_question", verbose=True)

# Chat with your data

response = chat_engine.chat("What did Paul Graham do after YC?")
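The condense step itself is easy to picture as a prompt that folds the chat history and the latest message into one self-contained question, which is then passed to the query engine. The sketch below is a simplified illustration with stubbed components: the template wording, fake_llm, and fake_query_engine are placeholders, not LlamaIndex internals.

```python
CONDENSE_TEMPLATE = (
    "Given the conversation below and a follow-up message, rewrite the "
    "follow-up as a standalone question.\n\n"
    "Chat history:\n{chat_history}\n\n"
    "Follow-up: {question}\n"
    "Standalone question:"
)


def condense(llm, chat_history, question):
    """Ask the LLM to fold the chat history into one self-contained question."""
    history_text = "\n".join(f"{role}: {text}" for role, text in chat_history)
    prompt = CONDENSE_TEMPLATE.format(chat_history=history_text, question=question)
    return llm(prompt).strip()


def chat(llm, query_engine, chat_history, message):
    """One condense-question turn: condense, query, then record the exchange."""
    standalone = condense(llm, chat_history, message)
    answer = query_engine(standalone)
    chat_history.extend([("user", message), ("assistant", answer)])
    return answer


# Stubs so the sketch runs offline; real code would call an LLM and an index.
def fake_llm(prompt):
    return " What did Paul Graham do after YC? "


def fake_query_engine(question):
    return f"[answer to: {question}]"


history = [("user", "Tell me about YC."), ("assistant", "YC is a startup accelerator.")]
print(chat(fake_llm, fake_query_engine, history, "What did he do after that?"))
```

Since every turn is routed through the query engine, this mode is less suited to general small talk than context mode.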

Configuring the Chat Engine

Configuration of a Chat Engine is akin to that of a Query Engine. However, the Chat Engine offers different modes to tailor the conversation according to your needs.

chat_engine = index.as_chat_engine(

    chat_mode="condense_question",

    verbose=True

)

Different chat modes are available:

best: Optimizes the chat engine for use with a ReAct data agent or an OpenAI data agent, depending on LLM support.

context: Retrieves nodes from the index using every user message, inserting the retrieved text into the system prompt for more contextual responses.

condense_question: Rewrites the user message to be a query for the index based on the chat history.

simple: Direct chat with the LLM, without involving the query engine.

react: Forces a ReAct data agent.

openai: Forces an OpenAI data agent.

- Low-Level Composition API

For granular control, the low-level composition API allows explicit construction of the ChatEngine object:

from llama_index.prompts import PromptTemplate

from llama_index.llms import ChatMessage, MessageRole

from llama_index.chat_engine.condense_question import CondenseQuestionChatEngine

# Custom prompt template

custom_prompt = PromptTemplate("""

... (template content) ...

""")

# Custom chat history

custom_chat_history = [ ... ] # List of ChatMessage objects

# Query engine from index

query_engine = index.as_query_engine()

# Configure the chat engine

chat_engine = CondenseQuestionChatEngine.from_defaults(

    query_engine=query_engine,

    condense_question_prompt=custom_prompt,

    chat_history=custom_chat_history,

    verbose=True

)

In this example, a custom prompt template and chat history are used to configure the CondenseQuestionChatEngine, providing a tailored chat experience.

Streaming Responses

To enable streaming of responses, simply use the stream_chat endpoint:

streaming_response = chat_engine.stream_chat("Tell me a joke.")

for token in streaming_response.response_gen:

    print(token, end="")

This feature provides a way to receive and process the response tokens as they’re generated, which can be useful in certain interactive or real-time scenarios.
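The consumption pattern is ordinary Python generator iteration. The following self-contained sketch imitates it with a fake generator so it can run without an LLM. fake_token_stream is a hypothetical stand-in for response_gen, and splitting on spaces is only a crude approximation of real tokens.

```python
import time


def fake_token_stream(text, delay=0.0):
    """Hypothetical stand-in for response_gen: yields one token at a time."""
    for token in text.split(" "):
        time.sleep(delay)  # simulate generation latency
        yield token + " "


collected = []
for token in fake_token_stream("Tokens arrive one at a time"):
    print(token, end="", flush=True)  # show each token as soon as it arrives
    collected.append(token)

full_text = "".join(collected).strip()
```

Collecting the tokens while printing them lets you display partial output immediately and still keep the full response for logging or post-processing.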

Resetting the Chat History

To start a new conversation or to discard the current conversation history, use the reset method:

chat_engine.reset()

Interactive Chat REPL

For an interactive chat session, use the chat_repl method, which provides a Read-Eval-Print Loop (REPL) for chatting:

chat_engine.chat_repl()

The Chat Engine in LlamaIndex extends the querying capability to a conversational paradigm, allowing for a more interactive and context-aware interaction with your data. Through various configurations and modes, you can tailor the conversation to suit your specific needs, whether it’s a simple chat or a more complex, context-driven dialogue.

LlamaIndex Tools and Data Agents

LlamaIndex Data Agents take natural language as input and perform actions instead of generating responses.

The essence of constructing proficient data agents lies in the art of tool abstractions.

But what exactly is a tool in this context? Think of Tools as API interfaces, tailored for agent interactions rather than human touchpoints.

Core Concepts:

- Tool: At its basic level, a Tool comes with a generic interface and some fundamental metadata like name, description, and function schema.

- Tool Spec: This dives deeper into the API details. It outlines a complete service API specification, ready to be translated into an assortment of Tools.

There are different flavors of Tools available:

- FunctionTool: Transform any user-defined function into a Tool. Plus, it can neatly infer the function’s schema.

- QueryEngineTool: Wraps around an existing query engine, and given our agent abstractions are derived from BaseQueryEngine, this tool can also embrace agents (which we will discuss later).
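To give a feel for how a function’s schema can be inferred, the sketch below builds tool metadata (name, description, parameters) from a plain Python function using the standard inspect module. It is a simplified illustration of the idea only. infer_tool_metadata and the shape of the returned dictionary are assumptions, not the FunctionTool API.

```python
import inspect


def infer_tool_metadata(fn):
    """Build name/description/parameter metadata from a plain function."""
    signature = inspect.signature(fn)
    params = {
        name: getattr(param.annotation, "__name__", str(param.annotation))
        for name, param in signature.parameters.items()
    }
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": params,
    }


def multiply(a: int, b: int) -> int:
    """Multiply two integers and return the result."""
    return a * b


metadata = infer_tool_metadata(multiply)
print(metadata)
```

An agent only ever sees this metadata, so a clear function name, docstring, and type hints directly affect how reliably the tool gets chosen and called.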

You can either custom design LlamaHub Tool Specs and Tools or effortlessly import them from the llama-hub package. Integrating them into agents is straightforward.

For an extensive collection of Tools and Tool Specs, make your way to LlamaHub.

Data Agents in LlamaIndex are powered by Large Language Models (LLMs) and act as intelligent knowledge workers over your data, executing both “read” and “write” operations. They automate search and retrieval across diverse data types: unstructured, semi-structured, and structured. Unlike our query engines, which only “read” from a static data source, Data Agents can dynamically ingest, modify, and interact with data across various tools. They can call external service APIs, process the returned data, and store it for future reference.

The two building blocks of Data Agents are:

- A Reasoning Loop: Dictates the agent’s decision-making process on which tools to use, their sequence, and the parameters for each tool call based on the input task.

- Tool Abstractions: A set of APIs or Tools that the agent interacts with to fetch information or alter state.

The type of reasoning loop depends on the agent; supported types include the OpenAI Function agent and a ReAct agent (which operates across any chat/text completion endpoint).
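How the two building blocks fit together can be shown with a toy loop. In the sketch below, a scripted function stands in for the LLM and decides which tool to call next. The loop runs the tool, feeds the observation back, and stops when a final answer is produced. Everything here (the decision format, scripted_llm, the two arithmetic tools) is hypothetical and far simpler than a real agent.

```python
def add(a, b):
    """Tool: add two numbers."""
    return a + b


def multiply(a, b):
    """Tool: multiply two numbers."""
    return a * b


TOOLS = {"add": add, "multiply": multiply}


def scripted_llm(task, observations):
    """Stand-in for the LLM: picks the next tool call from what it has seen so far."""
    if not observations:
        return {"tool": "multiply", "args": {"a": 3, "b": 4}}
    if len(observations) == 1:
        return {"tool": "add", "args": {"a": observations[0], "b": 5}}
    return {"final_answer": observations[-1]}


def run_agent(task, llm, tools, max_steps=5):
    """Loop: ask the LLM for a decision, run the chosen tool, feed the result back."""
    observations = []
    for _ in range(max_steps):
        decision = llm(task, observations)
        if "final_answer" in decision:
            return decision["final_answer"]
        tool = tools[decision["tool"]]
        observations.append(tool(**decision["args"]))
    raise RuntimeError("agent did not finish within max_steps")


result = run_agent("What is 3 * 4 + 5?", scripted_llm, TOOLS)
print(result)
```

The max_steps guard is a common safety measure: without it, a model that never emits a final answer would loop indefinitely.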

Here’s how to use an OpenAI Function API-based Data Agent:

from llama_index.agent import OpenAIAgent

from llama_index.llms import OpenAI

... # import and define tools

llm = OpenAI(model="gpt-3.5-turbo-0613")

agent = OpenAIAgent.from_tools(tools, llm=llm, verbose=True)

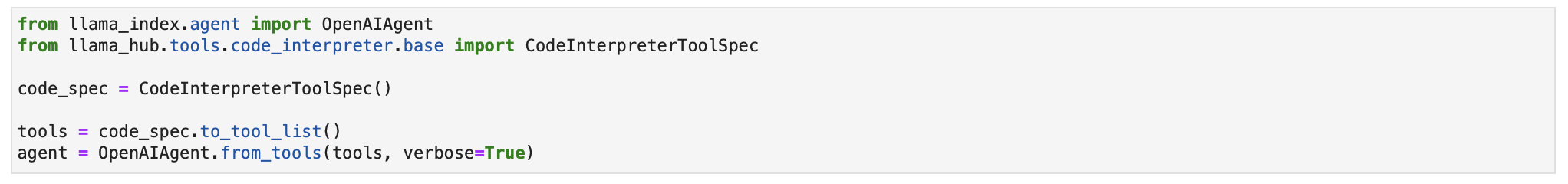

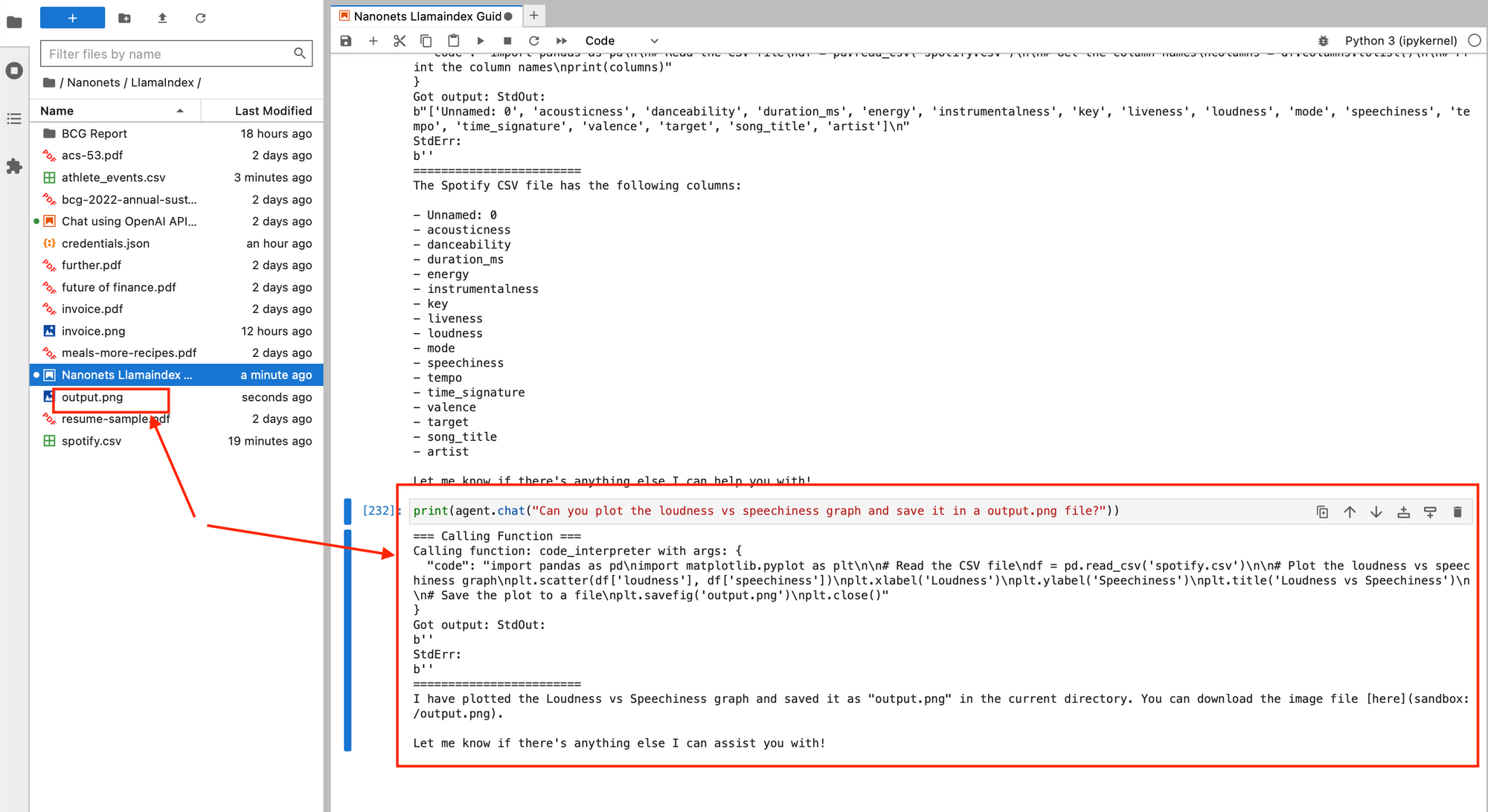

Now, let us go ahead and use the Code Interpreter tool available in LlamaHub to write and execute code directly by giving natural language instructions. We’ll use this Spotify dataset (which is a .csv file) and perform data analysis by making our agent execute Python code to read and manipulate the data in pandas.

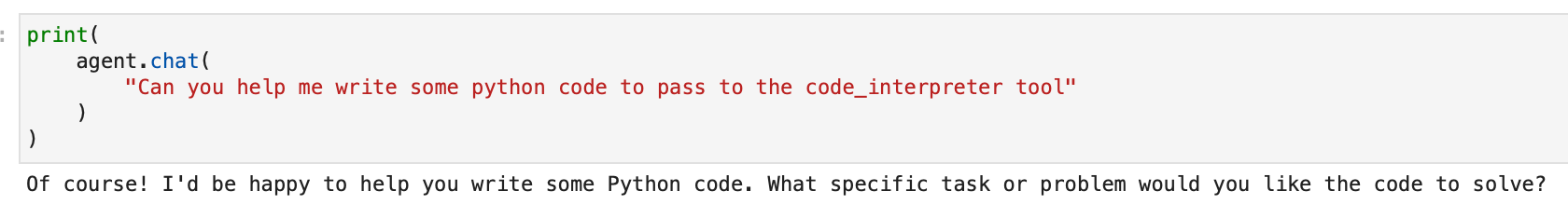

We first import the tool.

Let’s start chatting.

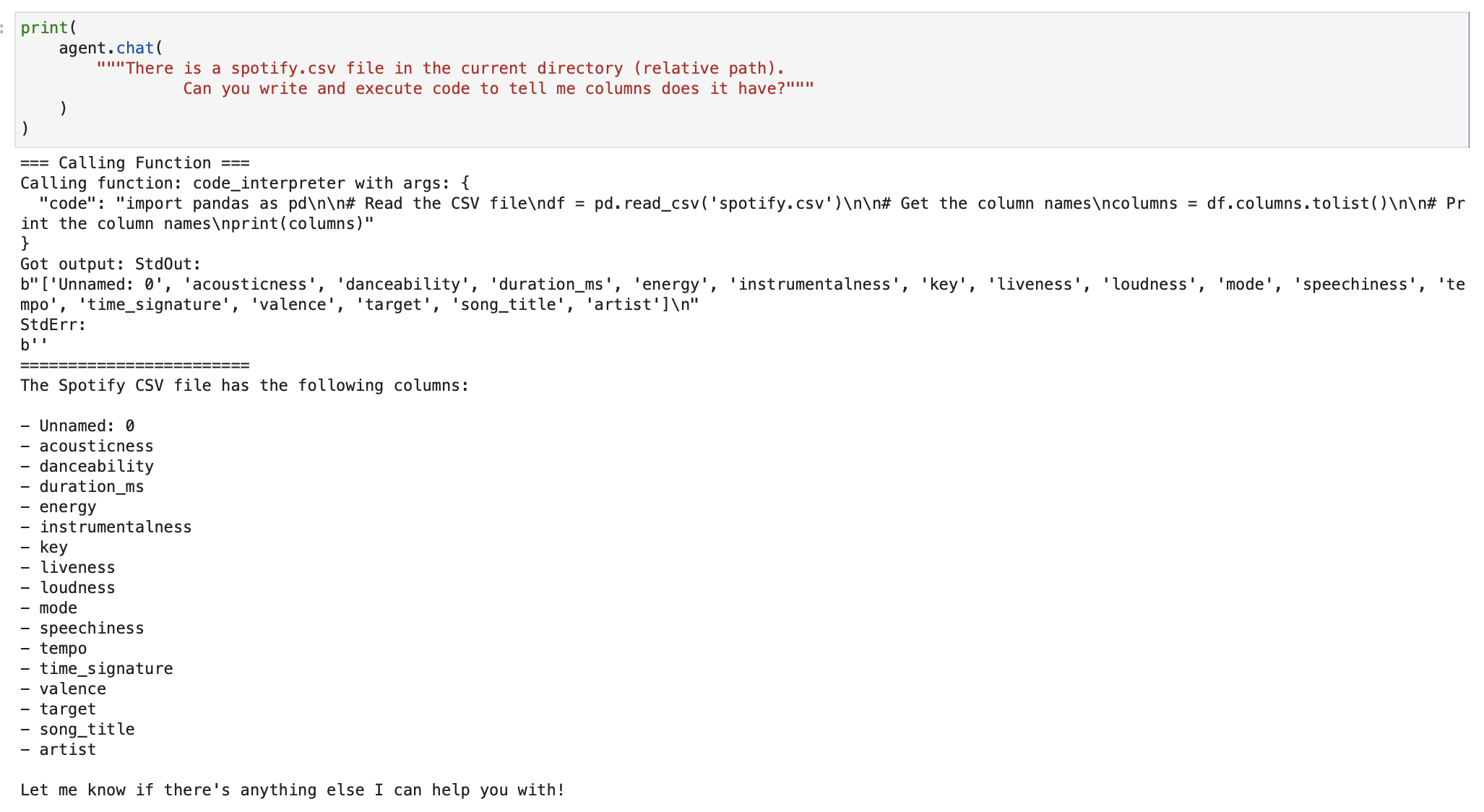

We first ask it to fetch the list of columns. Our agent executes Python code and uses pandas to read the column names.

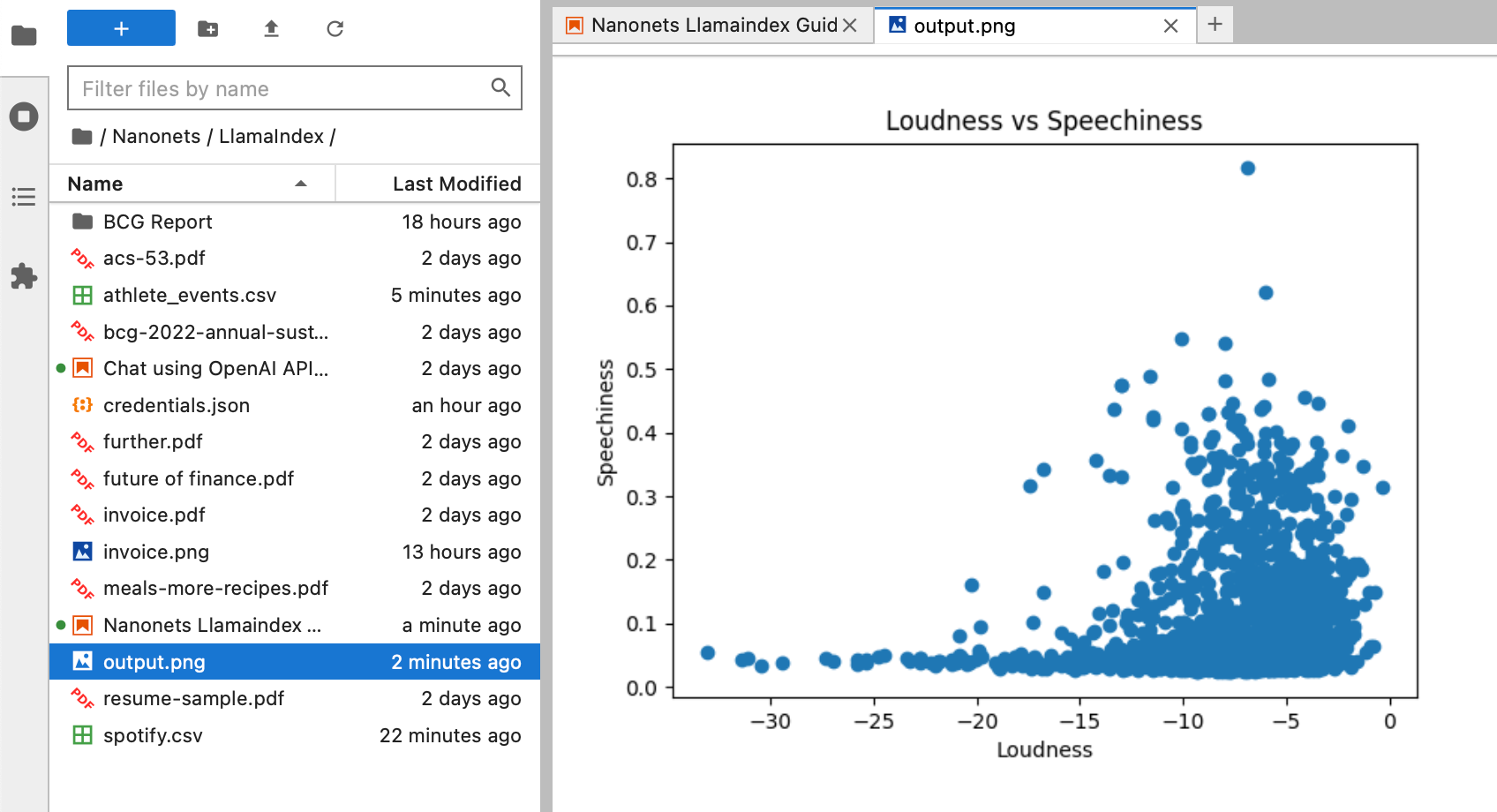

We now ask it to plot a graph of loudness vs ‘speechiness’ and save it in an output.png file on our system, all by just chatting with our agent.

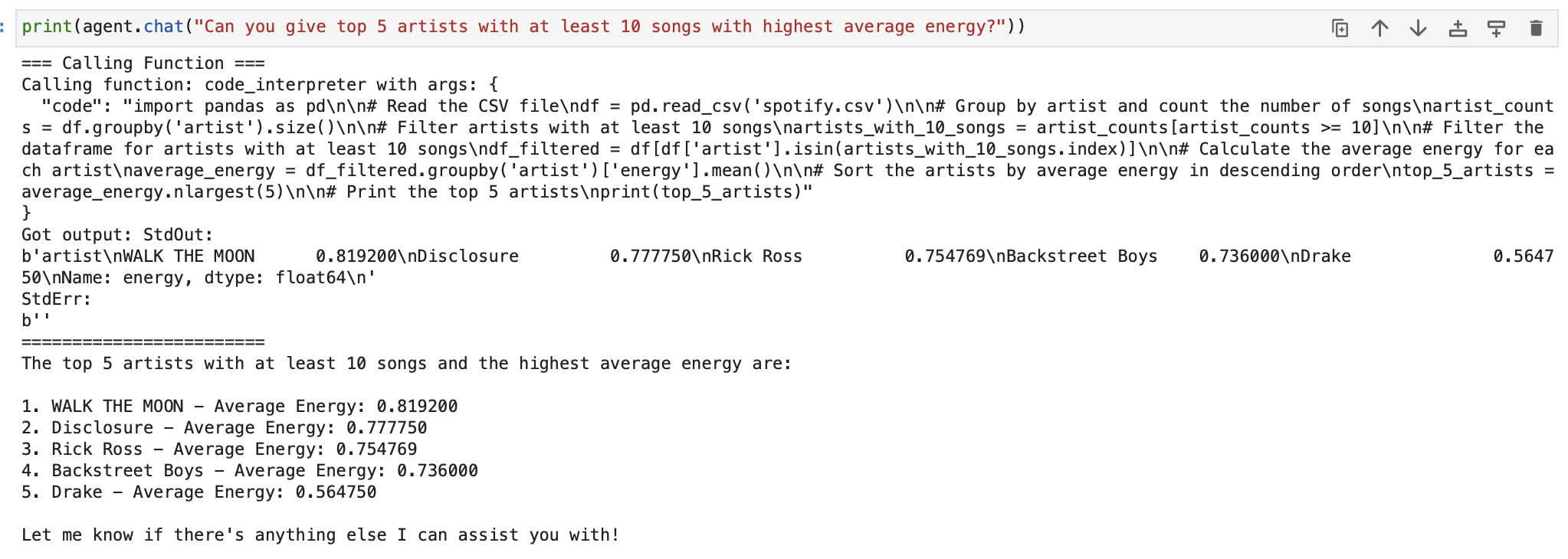

We can perform EDA in detail as well.

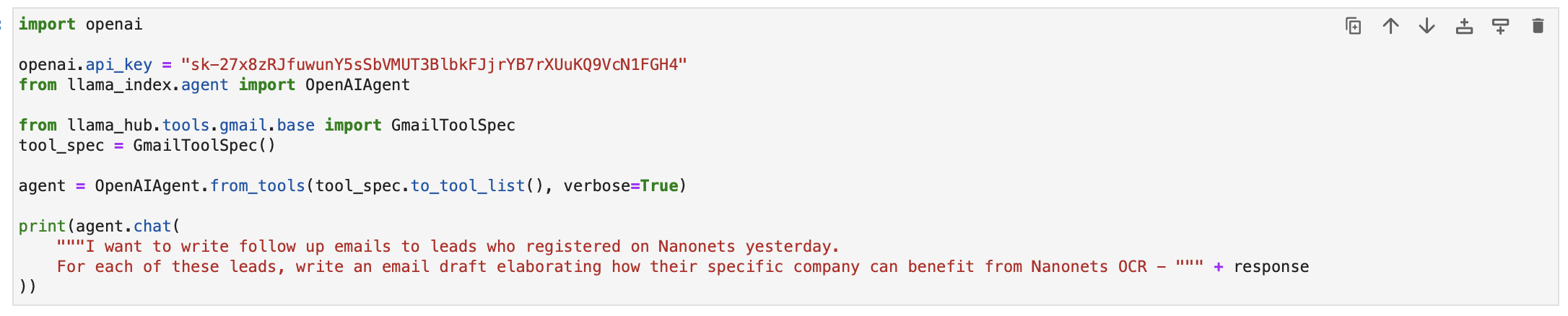

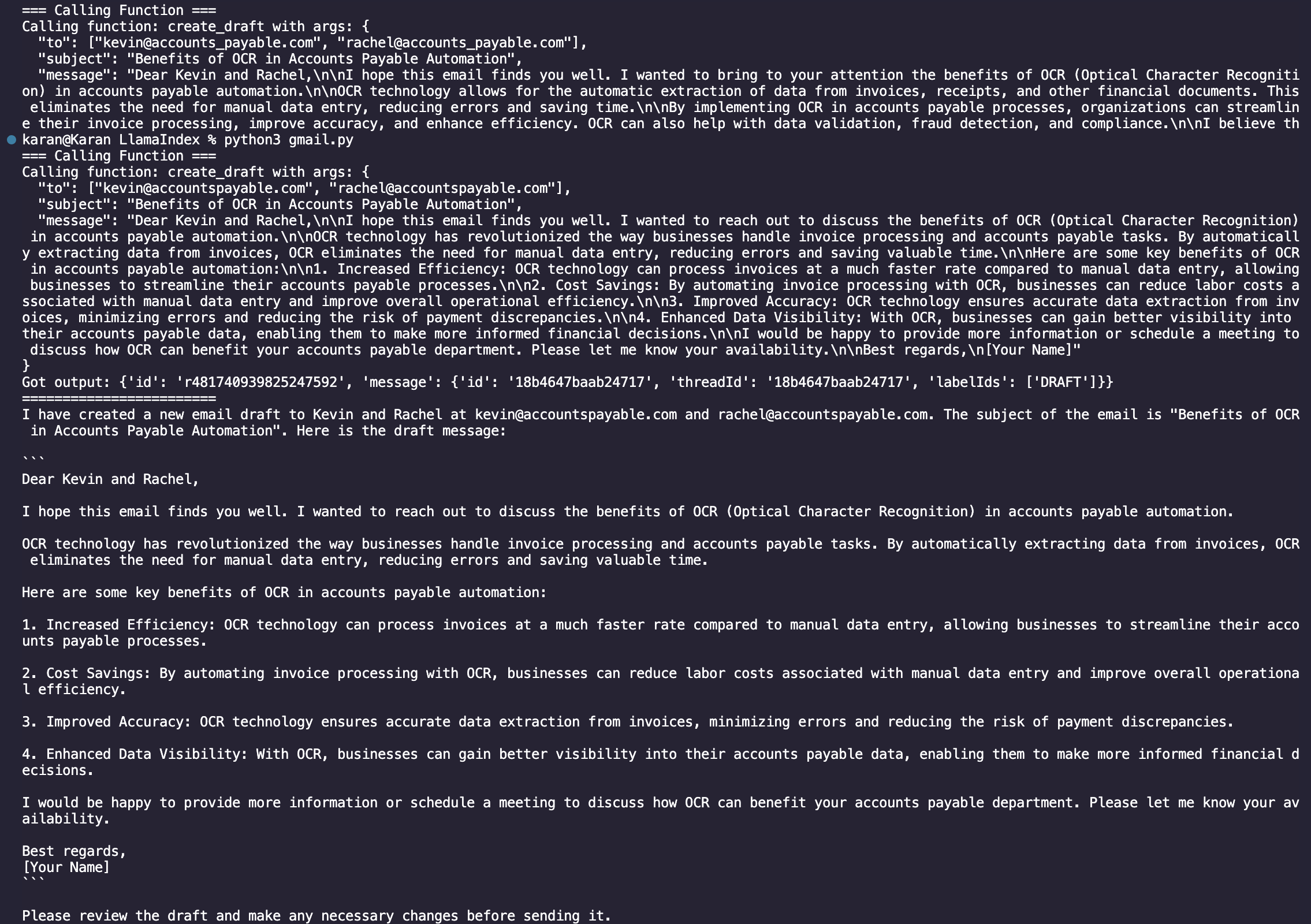

Now, let us go ahead and use another tool to send emails from our Gmail account with an agent using natural language input.

We use the data connector with HubSpot from LlamaHub to fetch a structured list of leads that were created yesterday.

We now go to the GmailToolSpec documentation in LlamaHub to create a Gmail Agent to write and send one-day follow-up emails to these folks.

We start by creating a credentials.json file by following the documentation and add it to our working directory.

We then proceed to provide the email writing prompt.

Upon running the code, we can see the execution chain writing the emails and saving them as drafts in our Gmail account.

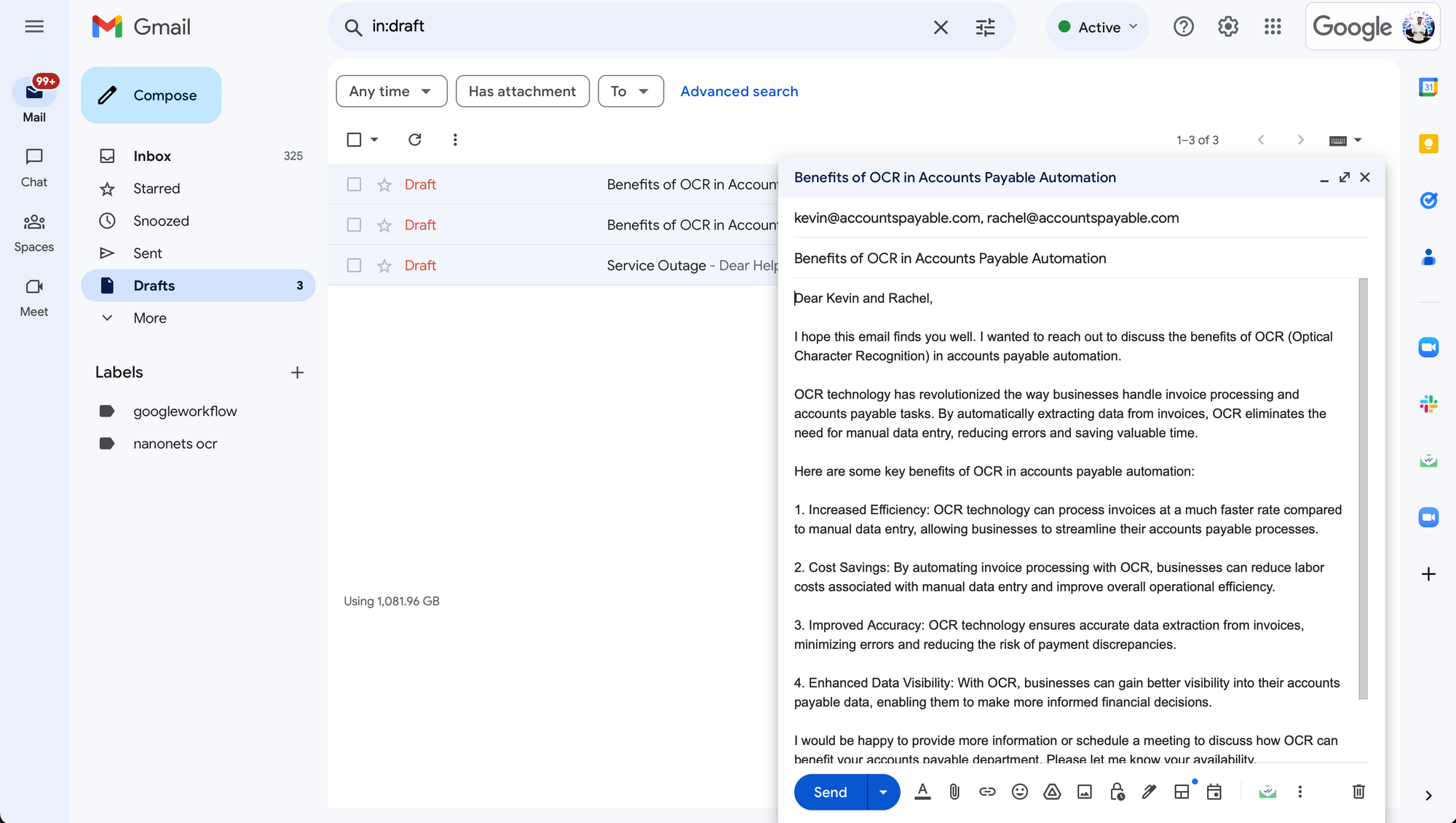

When we go to our Gmail Drafts folder, we can see the draft that the agent created!

In fact, we could have also scheduled this script to run daily and instructed the Gmail agent to send these emails directly instead of saving them as drafts, resulting in an end-to-end fully automated workflow that –

- connects with HubSpot daily to fetch yesterday’s leads

- uses the domain from the email addresses to create personalized follow-up emails

- sends the emails from your Gmail account directly.
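The personalization step in that workflow can be pictured with a short, dependency-free sketch: derive a company name from the email domain and use it in a draft. The lead records, helper names, and message wording below are invented for illustration. In the real workflow the leads would come from the CRM connector and the agent’s LLM would write the email text.

```python
def company_from_email(address):
    """Derive a display name from the domain part of an email address."""
    domain = address.rsplit("@", 1)[1]
    return domain.split(".")[0].capitalize()


def draft_follow_up(lead):
    """Compose a follow-up draft; sending is left out of this sketch."""
    company = company_from_email(lead["email"])
    return {
        "to": lead["email"],
        "subject": f"Following up with {company}",
        "body": f"Hi {lead['name']},\n\nThanks for your interest. How can we help {company}?",
    }


# Hypothetical leads; in the workflow above these would come from the CRM connector.
leads = [{"name": "Ada", "email": "ada@example.com"}]
drafts = [draft_follow_up(lead) for lead in leads]
print(drafts[0]["subject"])
```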

Thus, in addition to querying and chatting with data, LlamaIndex can also be used to fully execute tasks by interacting with applications and data sources.

And that is a wrap!

Go Beyond LlamaIndex with Nanonets

LlamaIndex is a great tool to connect your data, irrespective of its format, with LLMs and harness their prowess to interact with it. We have seen how to use natural language with your data and apps in order to generate responses / perform tasks.

However, for businesses, using LlamaIndex for enterprise applications might pose a few problems –

- While LlamaHub is a great repository to find a large variety of data connectors, this list is still not exhaustive and misses out on providing connections with some of the major workspace apps.

- This trend is even more prevalent in the context of tools, which take natural language as input to perform tasks (write and send emails, create CRM entries, execute SQL queries and fetch results, etc.). This limits the number of supported apps and the ways in which agents can interact with them.

- Fine-tuning the configuration of each element of the LlamaIndex pipeline (retrievers, synthesizers, indices, etc.) is a cumbersome process. On top of that, identifying the best pipeline for a given dataset and task is time-consuming and not always intuitive.

- Each task needs a unique implementation. There is no one-stop solution for connecting your data with LLMs.

- LlamaIndex fetches static data with data connectors, which is not updated with new data flowing into the source database.

Enter Nanonets AI Assistant.

Nanonets AI Assistant is a secure multi-purpose AI Assistant that connects your and your company’s knowledge with LLMs, in an easy-to-use user interface.

- Data Connectors for

  - 100+ most widely used workspace apps (Slack, Notion, Google Suite, Salesforce, Zendesk, etc.)

  - unstructured data types – PDFs, TXTs, Images, Audio Files, Video Files, etc.

  - structured data types – CSVs, Spreadsheets, MongoDB, SQL databases, etc.

- Trigger / Action agents for most widely used workspace apps that listen for events in applications / perform actions in applications. For example, you can set up a workflow to listen for new emails at support@your_company.com, use your documentation and past email conversations as a knowledge base, draft an email reply with the resolution, and send the email reply.

- Optimized data ingestion and indexing for each data source, all of which is handled directly in the background by the Nanonets AI Assistant.

- Real-time sync with data sources.

To get started, you can get on a call with one of our AI experts and we can give you a personalized demo & trial of the Nanonets AI Assistant based on your use case.

Once set up, you can use your Nanonets AI Assistant to –

Chat with Data

Empower your teams with comprehensive, real-time information from all your data sources.

Create AI Workflows

Use natural language to create and run complex workflows powered by LLMs that interact with all your apps and data.

Deploy Chatbots

Build and deploy ready-to-use custom AI chatbots that know you within minutes.

Supercharge your teams with Nanonets AI to connect your apps and data with AI and start automating tasks so your teams can focus on what truly matters.
