Picture by Writer

Having title is essential for an article’s success. Folks spend just one second (if we imagine Ryan Vacation’s e-book “Belief Me, I am Mendacity” deciding whether or not to click on on the title to open the entire article. The media are obsessive about optimizing clickthrough charge (CTR), the variety of clicks a title receives divided by the variety of occasions the title is proven. Having a click-bait title will increase CTR. The media will doubtless select a title with the next CTR between the 2 titles as a result of this can generate extra income.

I’m not actually into squeezing advert income. It’s extra about spreading my information and experience. And nonetheless, viewers have restricted time and a focus, whereas content material on the Web is just about limitless. So, I need to compete with different content-makers to get viewers’ consideration.

How do I select a correct title for my subsequent article? In fact, I would like a set of choices to select from. Hopefully, I can generate them alone or ask ChatGPT. However what do I do subsequent? As an information scientist, I recommend operating an A/B/N take a look at to grasp which choice is the very best in a data-driven method. However there’s a drawback. First, I must determine shortly as a result of content material expires shortly. Secondly, there is probably not sufficient observations to identify a statistically vital distinction in CTRs as these values are comparatively low. So, there are different choices than ready a few weeks to determine.

Hopefully, there’s a resolution! I can use a “multi-armed bandit” machine studying algorithm that adapts to the info we observe about viewers’ habits. The extra folks click on on a selected choice within the set, the extra visitors we are able to allocate to this feature. On this article, I’ll briefly clarify what a “Bayesian multi-armed bandit” is and present the way it works in apply utilizing Python.

Multi-armed Bandits are machine studying algorithms. The Bayesian sort makes use of Thompson sampling to decide on an choice based mostly on our prior beliefs about likelihood distributions of CTRs which are up to date based mostly on the brand new knowledge afterward. All these likelihood idea and mathematical statistics phrases could sound complicated and daunting. Let me clarify the entire idea utilizing as few formulation as I can.

Suppose there are solely two titles to select from. We don’t know about their CTRs. However we need to have the highest-performing title. We now have a number of choices. The primary one is to decide on whichever title we imagine in additional. That is the way it labored for years within the business. The second allocates 50% of the incoming visitors to the primary title and 50% to the second. This grew to become potential with the rise of digital media, the place you possibly can determine what textual content to point out exactly when a viewer requests a listing of articles to learn. With this strategy, you possibly can make sure that 50% of visitors was allotted to the best-performing choice. Is that this a restrict? In fact not!

Some folks would learn the article inside a few minutes after publishing. Some folks would do it in a few hours or days. This implies we are able to observe how “early” readers responded to completely different titles and shift visitors allocation from 50/50 and allocate a bit bit extra to the better-performing choice. After a while, we are able to once more calculate CTRs and alter the break up. Within the restrict, we need to alter the visitors allocation after every new viewer clicks on or skips the title. We want a framework to adapt visitors allocation scientifically and automatedly.

Right here comes Bayes’ theorem, Beta distribution, and Thompson sampling.

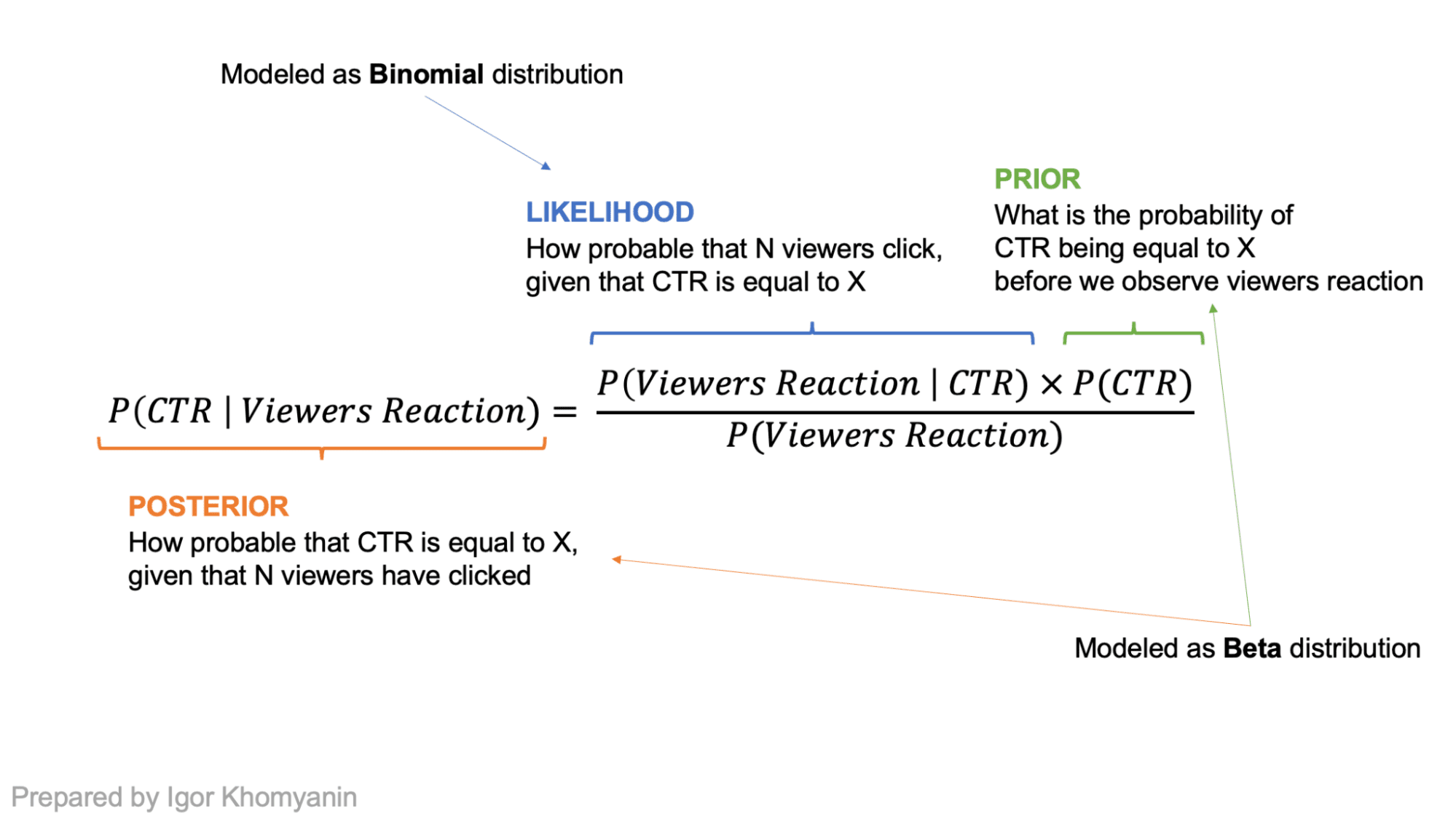

Let’s assume that the CTR of an article is a random variable “theta.” By design, it lies someplace between 0 and 1. If we have now no prior beliefs, it may be any quantity between 0 and 1 with equal likelihood. After we observe some knowledge “x,” we are able to alter our beliefs and have a brand new distribution for “theta” that can be skewed nearer to 0 or 1 utilizing Bayes’ theorem.

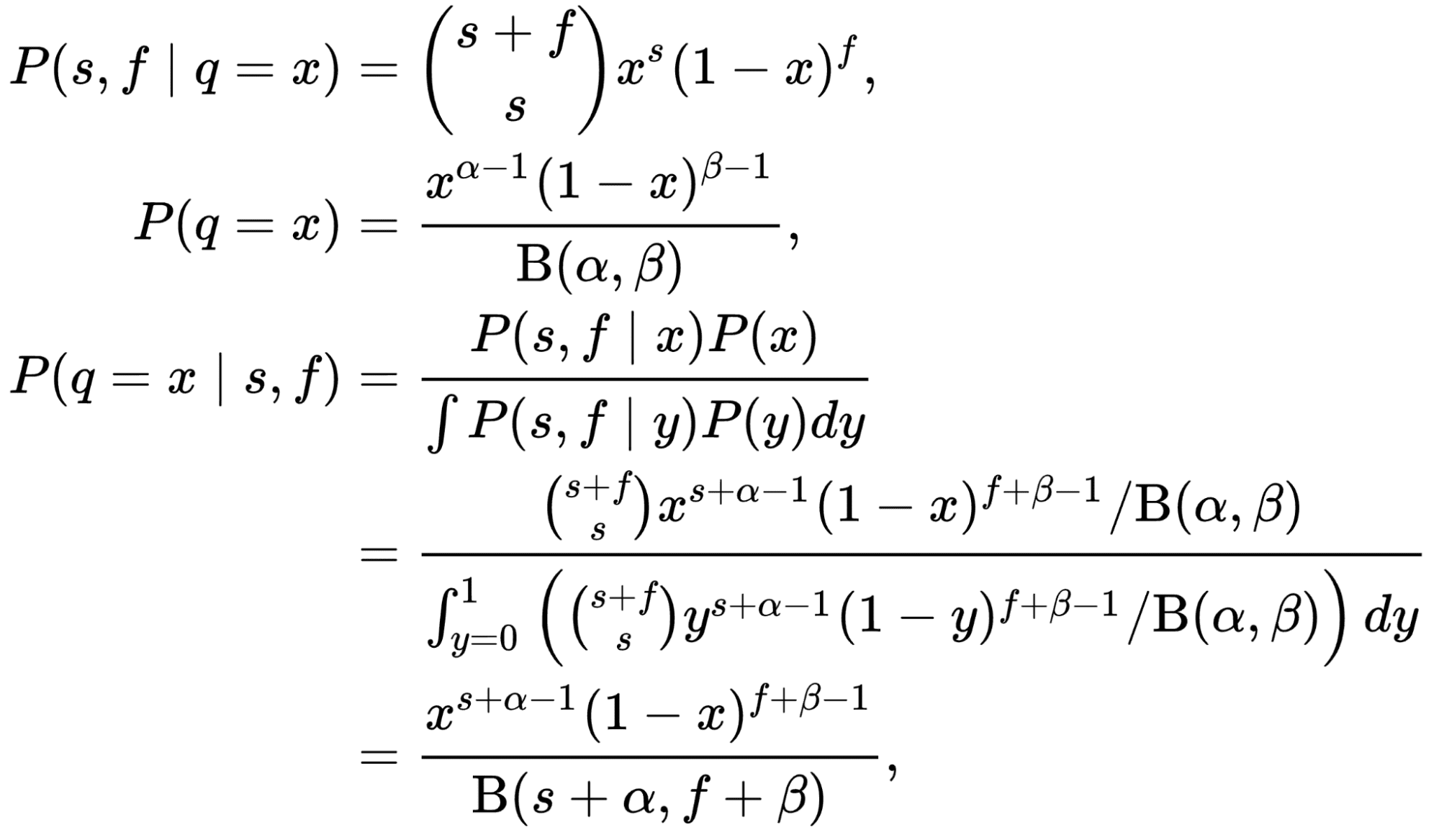

The quantity of people that click on on the title may be modeled as a Binomial distribution the place “n” is the variety of guests who see the title, and “p” is the CTR of the title. That is our probability! If we mannequin the prior (our perception in regards to the distribution of CTR) as a Beta distribution and take binomial probability, the posterior would even be a Beta distribution with completely different parameters! In such circumstances, Beta distribution known as a conjugate prior to the probability.

Proof of that reality will not be that tough however requires some mathematical train that isn’t related within the context of this text. Please check with the attractive proof right here:

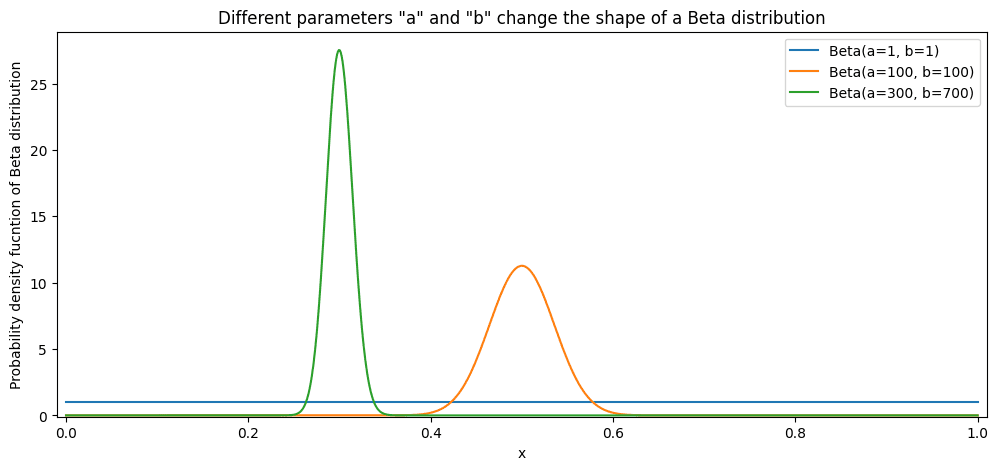

The beta distribution is bounded by 0 and 1, which makes it an ideal candidate to mannequin a distribution of CTR. We are able to begin from “a = 1” and “b = 1” as Beta distribution parameters that mannequin CTR. On this case, we might haven’t any beliefs about distribution, making any CTR equally possible. Then, we are able to begin including noticed knowledge. As you possibly can see, every “success” or “click on” will increase “a” by 1. Every “failure” or “skip” will increase “b” by 1. This skews the distribution of CTR however doesn’t change the distribution household. It’s nonetheless a beta distribution!

We assume that CTR may be modeled as a Beta distribution. Then, there are two title choices and two distributions. How will we select what to point out to a viewer? Therefore, the algorithm known as a “multi-armed bandit.” On the time when a viewer requests a title, you “pull each arms” and pattern CTRs. After that, you evaluate values and present a title with the very best sampled CTR. Then, the viewer both clicks or skips. If the title was clicked, you’ll alter this feature’s Beta distribution parameter “a,” representing “successes.” In any other case, you enhance this feature’s Beta distribution parameter “b,” which means “failures.” This skews the distribution, and for the subsequent viewer, there can be a special likelihood of selecting this feature (or “arm”) in comparison with different choices.

After a number of iterations, the algorithm could have an estimate of CTR distributions. Sampling from this distribution will primarily set off the very best CTR arm however nonetheless permit new customers to discover different choices and readjust allocation.

Effectively, this all works in idea. Is it actually higher than the 50/50 break up we have now mentioned earlier than?

All of the code to create a simulation and construct graphs may be present in my GitHub Repo.

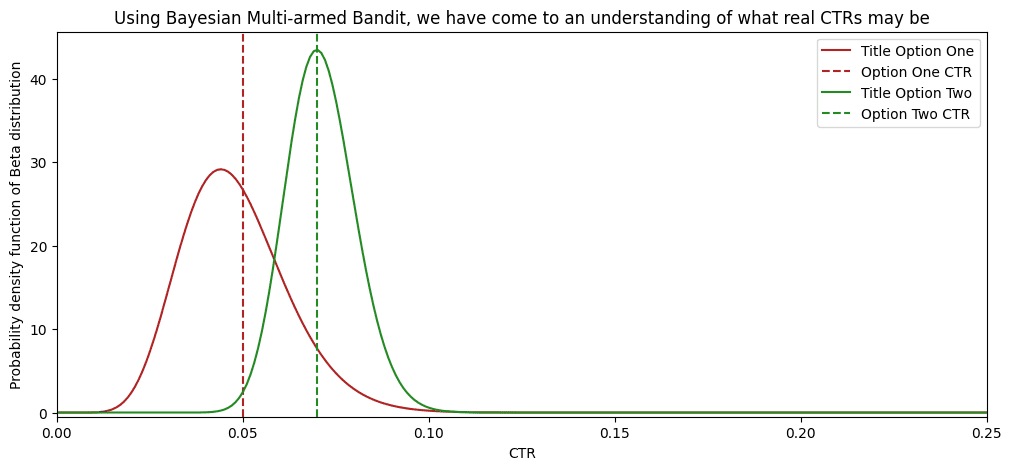

As talked about earlier, we solely have two titles to select from. We now have no prior beliefs about CTRs of this title. So, we begin from a=1 and b=1 for each Beta distributions. I’ll simulate a easy incoming visitors assuming a queue of viewers. We all know exactly whether or not the earlier viewer “clicked” or “skipped” earlier than exhibiting a title to the brand new viewer. To simulate “click on” and “skip” actions, I must outline some actual CTRs. Allow them to be 5% and seven%. It’s important to say that the algorithm is aware of nothing about these values. I would like them to simulate a click on; you’ll have precise clicks in the true world. I’ll flip a super-biased coin for every title that lands heads with a 5% or 7% likelihood. If it landed heads, then there’s a click on.

Then, the algorithm is simple:

- Primarily based on the noticed knowledge, get a Beta distribution for every title

- Pattern CTR from each distribution

- Perceive which CTR is larger and flip a related coin

- Perceive if there was a click on or not

- Improve parameter “a” by 1 if there was a click on; enhance parameter “b” by 1 if there was a skip

- Repeat till there are customers within the queue.

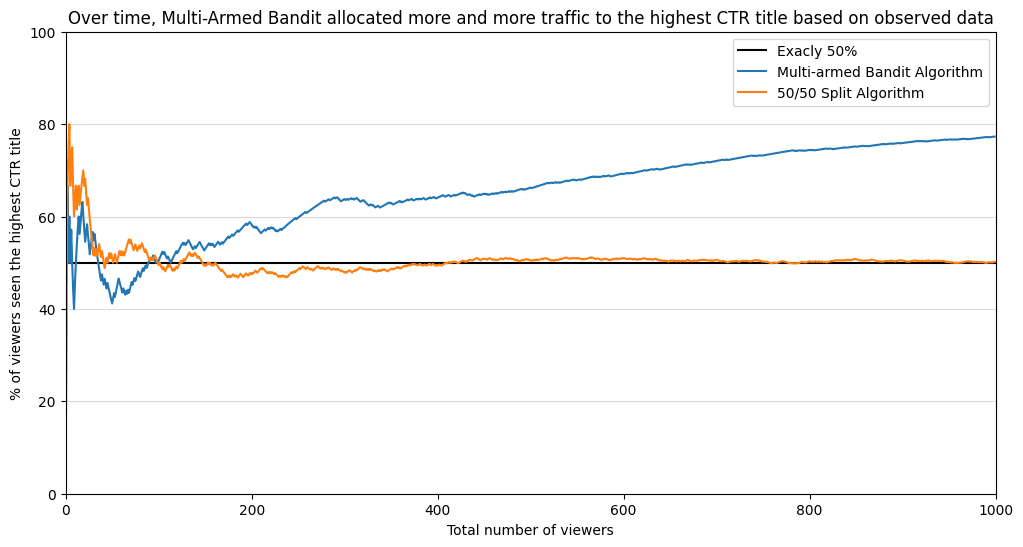

To grasp the algorithm’s high quality, we may even save a price representing a share of viewers uncovered to the second choice because it has the next “actual” CTR. Let’s use a 50/50 break up technique as a counterpart to have a baseline high quality.

Code by Writer

After 1000 customers within the queue, our “multi-armed bandit” already has understanding of what are the CTRs.

And here’s a graph that reveals that such a technique yields higher outcomes. After 100 viewers, the “multi-armed bandit” surpassed a 50% share of viewers supplied the second choice. As a result of an increasing number of proof supported the second title, the algorithm allotted an increasing number of visitors to the second title. Nearly 80% of all viewers have seen the best-performing choice! Whereas within the 50/50 break up, solely 50% of the folks have seen the best-performing choice.

Bayesian Multi-armed Bandit uncovered a further 25% of viewers to a better-performing choice! With extra incoming knowledge, the distinction will solely enhance between these two methods.

In fact, “Multi-armed bandits” will not be good. Actual-time sampling and serving of choices is dear. It could be finest to have infrastructure to implement the entire thing with the specified latency. Furthermore, you might not need to freak out your viewers by altering titles. In case you have sufficient visitors to run a fast A/B, do it! Then, manually change the title as soon as. Nonetheless, this algorithm can be utilized in lots of different functions past media.

I hope you now perceive what a “multi-armed bandit” is and the way it may be used to decide on between two choices tailored to the brand new knowledge. I particularly didn’t concentrate on maths and formulation because the textbooks would higher clarify it. I intend to introduce a brand new know-how and spark an curiosity in it!

In case you have any questions, don’t hesitate to succeed in out on LinkedIn.

The pocket book with all of the code may be present in my GitHub repo.

Igor Khomyanin is a Knowledge Scientist at Salmon, with prior knowledge roles at Yandex and McKinsey. I concentrate on extracting worth from knowledge utilizing Statistics and Knowledge Visualization.