Other methods involve using synthetic data sets. For example, Runway, a startup that makes generative models for video production, has trained a version of the popular image-making model Stable Diffusion on synthetic data such as AI-generated images of people who vary in ethnicity, gender, profession, and age. The company reports that models trained on this data set generate more images of people with darker skin and more images of women. Request an image of a businessperson, and outputs now include women in headscarves; images of doctors will depict people who are diverse in skin color and gender; and so on.

Critics dismiss these solutions as Band-Aids on broken base models, hiding rather than fixing the problem. But Geoff Schaefer, a colleague of Smith's at Booz Allen Hamilton who is head of responsible AI at the firm, argues that such algorithmic biases can expose societal biases in a way that's useful in the long run.

As an example, he notes that even when explicit information about race is removed from a data set, racial bias can still skew data-driven decision-making because race can be inferred from people's addresses, revealing patterns of segregation and housing discrimination. "We got a bunch of data together in one place, and that correlation became really clear," he says.

Schaefer thinks something similar could happen with this generation of AI: "These biases across society are going to come out." And that will lead to more targeted policymaking, he says.

But many would balk at such optimism. Just because a problem is out in the open doesn't guarantee it's going to get fixed. Policymakers are still trying to address social biases that were exposed years ago in housing, hiring, loans, policing, and more. In the meantime, individuals live with the consequences.

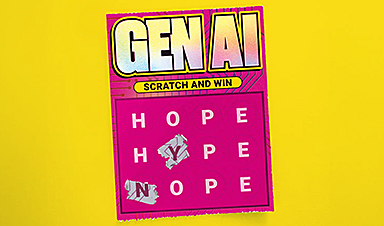

Prediction: Bias will continue to be an inherent feature of most generative AI models. But workarounds and rising awareness could help policymakers address the most obvious examples.

2

How will AI change the way we apply copyright?

Outraged that tech companies should profit from their work without consent, artists and writers (and coders) have launched class action lawsuits against OpenAI, Microsoft, and others, claiming copyright infringement. Getty is suing Stability AI, the firm behind the image maker Stable Diffusion.

These cases are a big deal. Celebrity claimants such as Sarah Silverman and George R.R. Martin have drawn media attention. And the cases are set to rewrite the rules around what does and doesn't count as fair use of another's work, at least in the US.

But don't hold your breath. It will be years before the courts make their final decisions, says Katie Gardner, a partner specializing in intellectual-property licensing at the law firm Gunderson Dettmer, which represents more than 280 AI companies. By that point, she says, "the technology will be so entrenched in the economy that it's not going to be undone."

In the meantime, the tech industry is building on these alleged infringements at breakneck pace. "I don't expect companies will wait and see," says Gardner. "There may be some legal risks, but there are so many other risks with not keeping up."

Some companies have taken steps to limit the possibility of infringement. OpenAI and Meta claim to have introduced ways for creators to remove their work from future data sets. OpenAI now prevents users of DALL-E from requesting images in the style of living artists. But, Gardner says, "these are all actions to bolster their arguments in the litigation."

Google, Microsoft, and OpenAI now offer to protect users of their models from potential legal action. Microsoft's indemnification policy for its generative coding assistant GitHub Copilot, which is the subject of a class action lawsuit on behalf of software developers whose code it was trained on, would in principle protect those who use it while the courts sort things out. "We'll take that burden on so the users of our products don't have to worry about it," Microsoft CEO Satya Nadella told MIT Technology Review.