Screenshot by Editor

It’s been an interesting year. A lot has happened, with large language models (LLMs) at the forefront of everything tech-related. You have LLMs such as ChatGPT, Gemini, and more.

These LLMs currently run in the cloud, meaning they run somewhere else, on someone else’s computer. The fact that they run elsewhere tells you how expensive they are: if they were cheap, why not run them locally on your own computer?

But that has all changed. You can now run different LLMs with LM Studio.

LM Studio is a tool you can use to experiment with local and open-source LLMs. You can run these LLMs on your laptop, entirely offline. There are two ways to discover, download and run these LLMs locally:

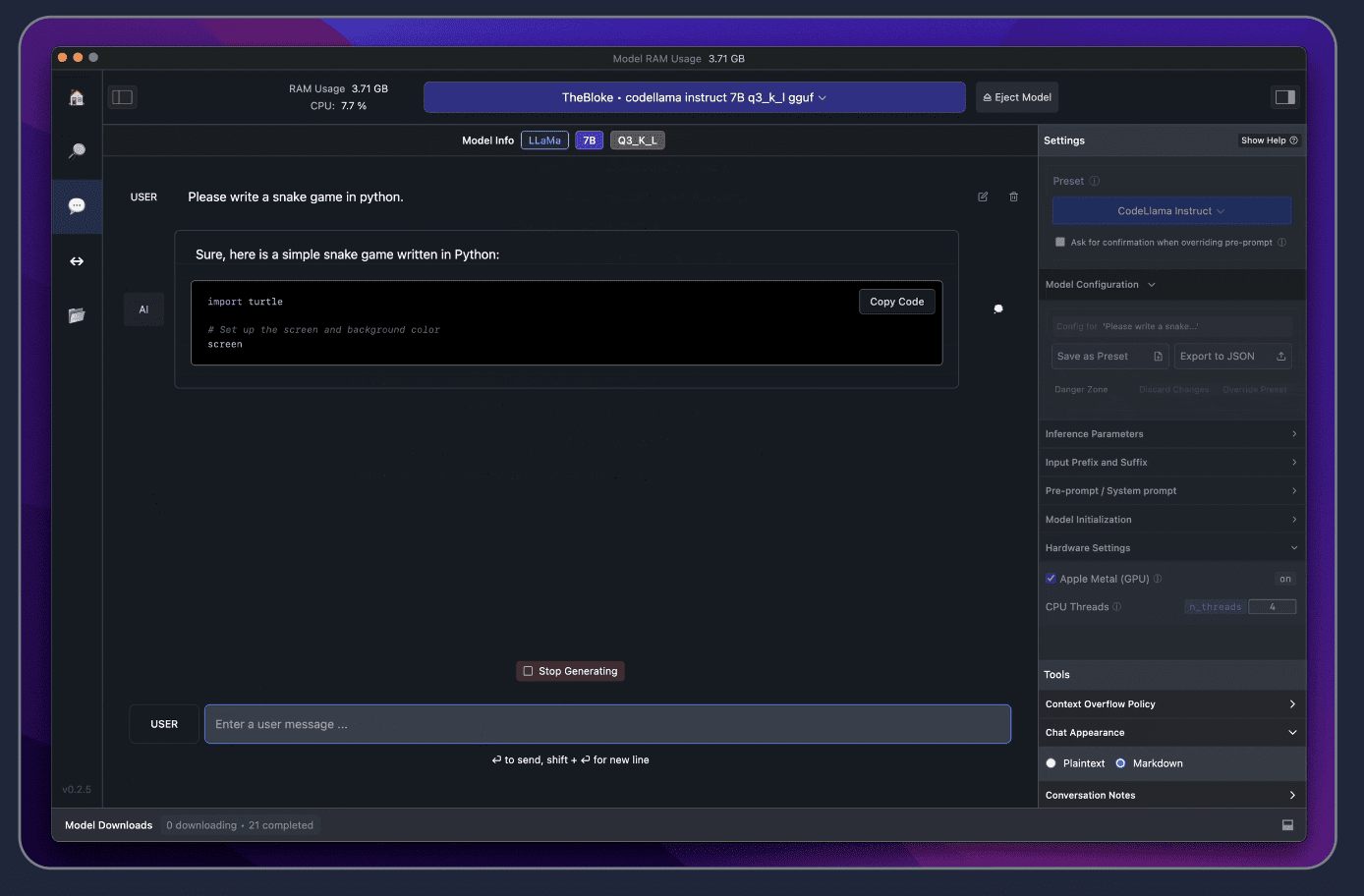

- Through the in-app Chat UI

- Through an OpenAI-compatible local server
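For the second route, here is a minimal Python sketch of talking to the local server using only the standard library. It assumes you have started the server in LM Studio with a model loaded, and that it listens on `http://localhost:1234/v1` (the default address at the time of writing; check the address shown in the app). The model name `local-model` is a hypothetical placeholder, so swap in the identifier LM Studio shows for your loaded model.

```python
import json
import urllib.request

# Assumption: LM Studio's local server runs on its default address.
# Change BASE_URL if the app shows a different host or port.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt: str, model: str = "local-model") -> urllib.request.Request:
    """Build an OpenAI-style chat-completions request for the local server."""
    payload = {
        # Hypothetical placeholder; use the model identifier shown in LM Studio.
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, model: str = "local-model") -> str:
    """Send the request to the local server and return the reply text."""
    req = build_chat_request(prompt, model)
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses carry the text in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, calling `chat("Explain what a local LLM is in one sentence.")` should return the model's reply as a string. Because the endpoint follows the OpenAI request format, existing OpenAI client code can often be pointed at the same base URL instead.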

All you have to do is download any compatible model file from the Hugging Face repository, and boom, done!

So how do I get started?

LM Studio Requirements

Before you get started exploring LLMs locally, you will need to meet these minimum hardware/software requirements:

- M1/M2/M3 Mac

- Windows PC with a processor that supports AVX2 (Linux is available in beta)

- 16GB+ of RAM is recommended

- For PCs, 6GB+ of VRAM is recommended

- NVIDIA/AMD GPUs supported

If you have these, you’re ready to go!

So what are the steps?

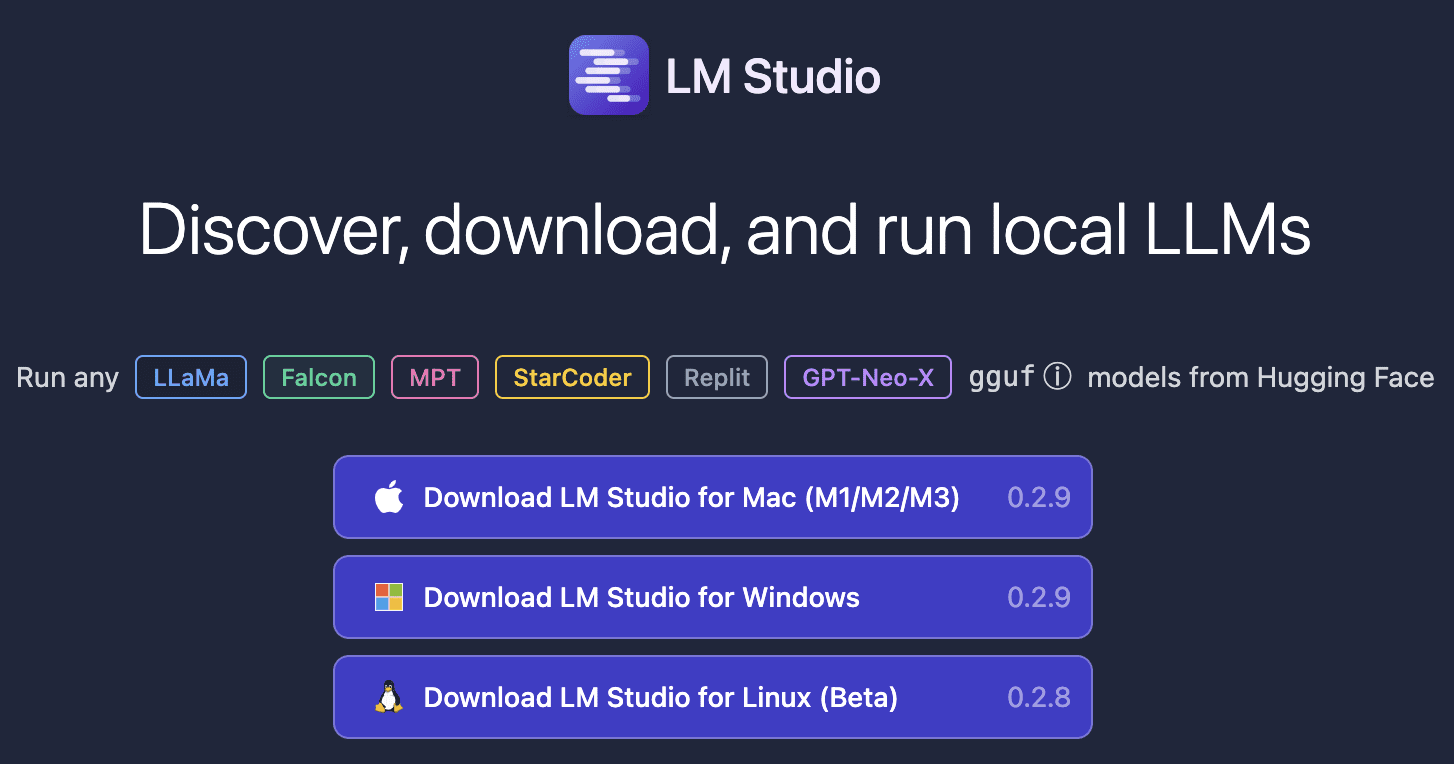

Your first step is to download LM Studio for Mac, Windows, or Linux from the LM Studio website. The download is roughly 400MB, so depending on your internet connection, it may take a while.

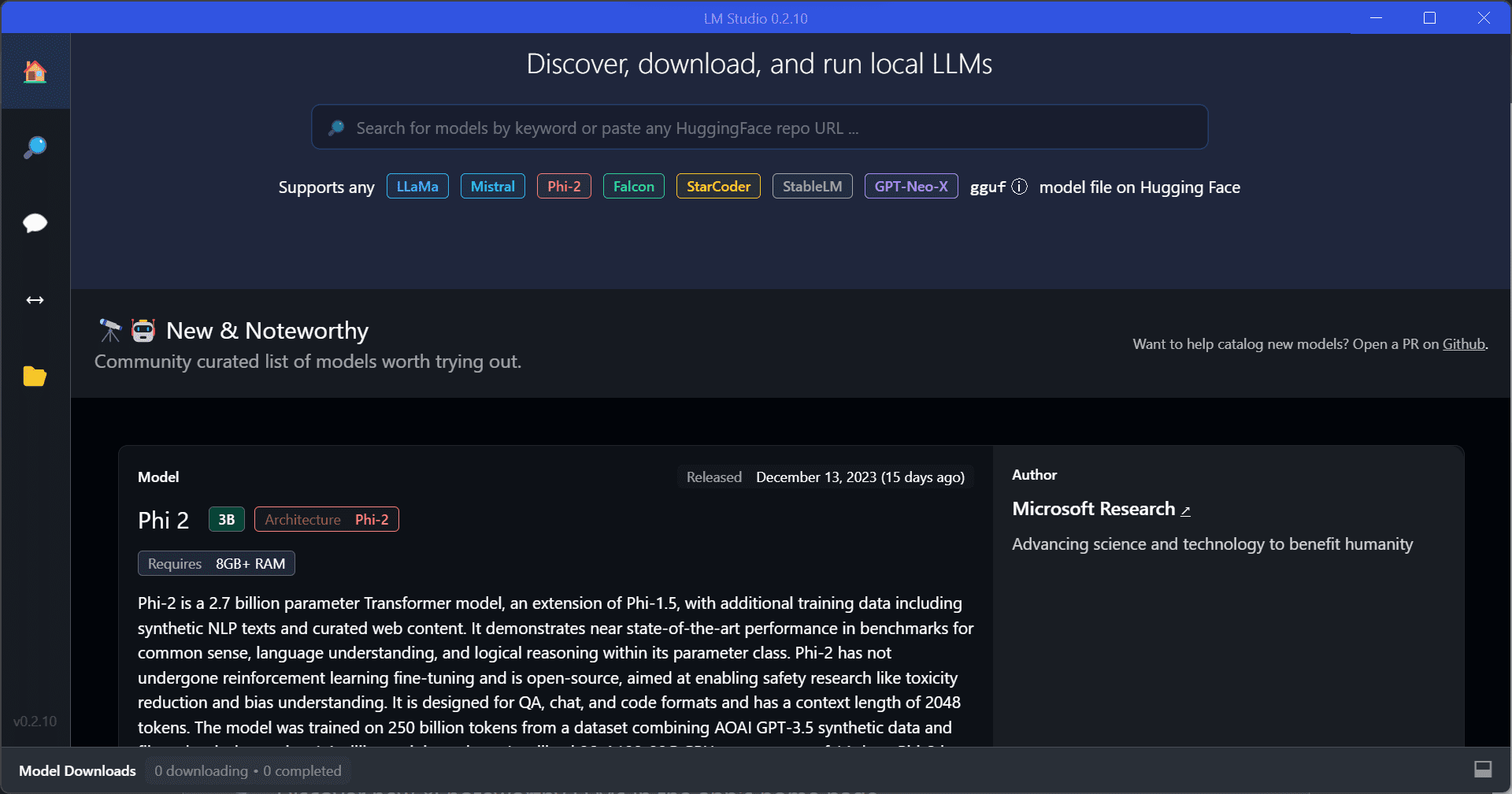

The next step is to choose a model to download. Once LM Studio has launched, click the magnifying glass to browse the available models. Keep in mind that these models are large, so they may take a while to download.

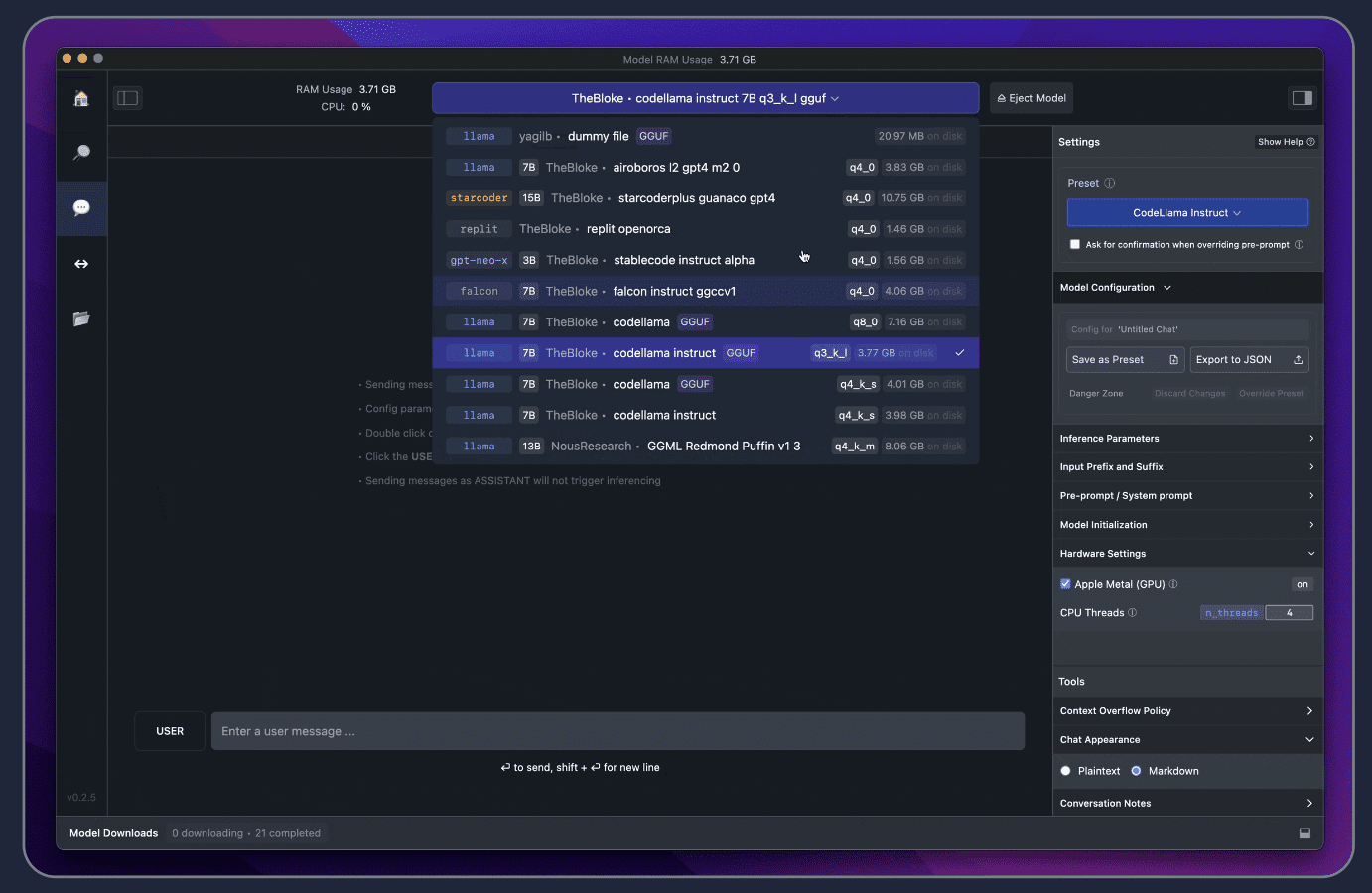

Once the model has downloaded, click the Speech Bubble on the left and select your model to load it.

Able to chit-chat!

There you have it: that is how quick and simple it is to set up an LLM locally. If you would like to speed up response times, you can enable GPU acceleration on the right-hand side.

Do you see how quick that was? Fast, right?

If you are worried about data collection, it’s good to know that privacy is the main reason to run an LLM locally, and LM Studio has been designed with exactly that in mind.

Give it a go and let us know what you think in the comments!

Nisha Arya is a Data Scientist and Freelance Technical Writer. She is particularly interested in providing Data Science career advice or tutorials and theory-based knowledge around Data Science. She also wishes to explore the different ways Artificial Intelligence is/can benefit the longevity of human life. A keen learner, seeking to broaden her tech knowledge and writing skills, while helping guide others.