My team and I have written over 100 production-ready AI prompts. Our standards are strict: each prompt must prove reliable across numerous applications and consistently deliver the correct outputs.

This is no easy endeavor.

Sometimes, a prompt can work in nine cases but fail in the 10th.

As a result, creating these prompts involved significant research and lots of trial and error.

Below are some tried-and-true prompt engineering strategies we’ve uncovered to help you build your own prompts. I’ll also dig into the reasoning behind each approach so you can use them to solve your specific challenges.

Getting the settings right before you write your first prompt

Navigating the world of large language models (LLMs) can be a bit like being an orchestra conductor. The prompts you write, or the input sequences, are like the sheet music guiding the performance. But there’s more to it.

As a conductor, you also have some knobs to turn and sliders to adjust, specifically settings like Temperature and Top P. They’re powerful parameters that can dramatically change the output of your AI ensemble.

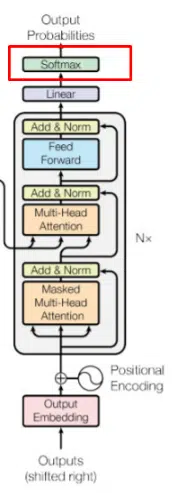

Think of them as your way to dial up the creativity or rein it in, all happening at a critical stage: the softmax layer.

At this layer, your choices come to life, shaping which words the AI picks and how it strings them together.

Here’s how these settings can transform the AI’s output and why getting a handle on them is a game-changer for anyone looking to master the art of AI-driven content creation.

To make sure you’re well-equipped with the essential knowledge to understand the softmax layer, let’s take a quick journey through the stages of a transformer, starting from our initial input prompt and culminating in the output at the softmax layer.

Imagine we have the following prompt that we pass into GPT: “The most important SEO factor is…”

- Step 1: Tokenization

  - The model converts each word into a numerical token. For example, “The” might be token 1, “most” token 2098, “important” token 4322, “SEO” token 4, “factor” token 697, and “is” token 6.

  - Remember, LLMs deal with number representations of words and not the words themselves.

- Step 2: Word embeddings (a.k.a. vectors)

  - Each token is transformed into a word embedding. These embeddings are multi-dimensional vectors that encapsulate the meaning of each word and its linguistic relationships.

  - Vectors sound more complicated than they are. Imagine we could represent a really simple word with three dimensions, such as [1, 9, 8]. Each number represents a relationship or feature. In GPT models, there are often 5,000 or more numbers representing each word.

- Step 3: Attention mechanism

  - Using the embeddings of each word, a lot of math is done to compare the words to one another and understand their relationships. The model employs attention weights to evaluate the context and relationships between words. In our sentence, it understands the contextual importance of words like “important” and “SEO,” and how they relate to the concept of an “SEO factor.”

- Step 4: Generation of potential next words

  - Considering the full context of the input (“The most important SEO factor is…”), the model generates a list of contextually appropriate potential next words. These might include words like “content,” “backlinks,” and “user experience,” reflecting common SEO factors. These lists are often huge, and each word has a different likelihood of following the previous word.

- Step 5: Softmax stage

  - Here, we can adjust the output by changing the settings.

  - The softmax function is then applied to these potential next words to calculate their probabilities. For instance, it might assign a 40% probability to “content,” 30% to “backlinks,” and 20% to “user experience.”

  - This probability distribution is based on the model’s training and understanding of common SEO factors following the given prompt.

- Step 6: Selection of the next word

  - The model then selects the next word based on these probabilities, making sure that the choice is relevant and contextually appropriate. For example, if “content” has the highest probability, it might be chosen as the continuation of the sentence.

Ultimately, the model outputs “The most important SEO factor is content.”

This way, the entire process, from tokenization through the softmax stage, ensures that the model’s response is coherent and contextually relevant to the input prompt.
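To make Steps 5 and 6 more concrete, here is a minimal Python sketch of a softmax over a handful of candidate words. The raw scores (logits) are invented for illustration, and the function name is my own. A real model scores tens of thousands of tokens and usually samples rather than always taking the top word, so treat this as a picture of the math and not of any model’s actual implementation.

```python
import math


def softmax(logits):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    # Subtract the largest logit first so math.exp never overflows.
    peak = max(logits.values())
    exps = {word: math.exp(score - peak) for word, score in logits.items()}
    total = sum(exps.values())
    return {word: value / total for word, value in exps.items()}


# Hypothetical logits for the word after "The most important SEO factor is".
example_logits = {
    "content": 2.0,
    "backlinks": 1.7,
    "user experience": 1.3,
    "meta tags": 0.1,
}

probabilities = softmax(example_logits)

# Step 6, in its simplest (greedy) form: take the most probable word.
next_word = max(probabilities, key=probabilities.get)
print(next_word)
```

Words with higher scores receive a larger share of the probability, but every candidate keeps a nonzero chance, which is what the Temperature and Top P settings later reshape.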

With this foundation in place, understanding how AI generates a vast array of potential words, each assigned a specific probability, we can now pivot to a crucial aspect: manipulating these hidden lists by adjusting the dials, Temperature and Top P.

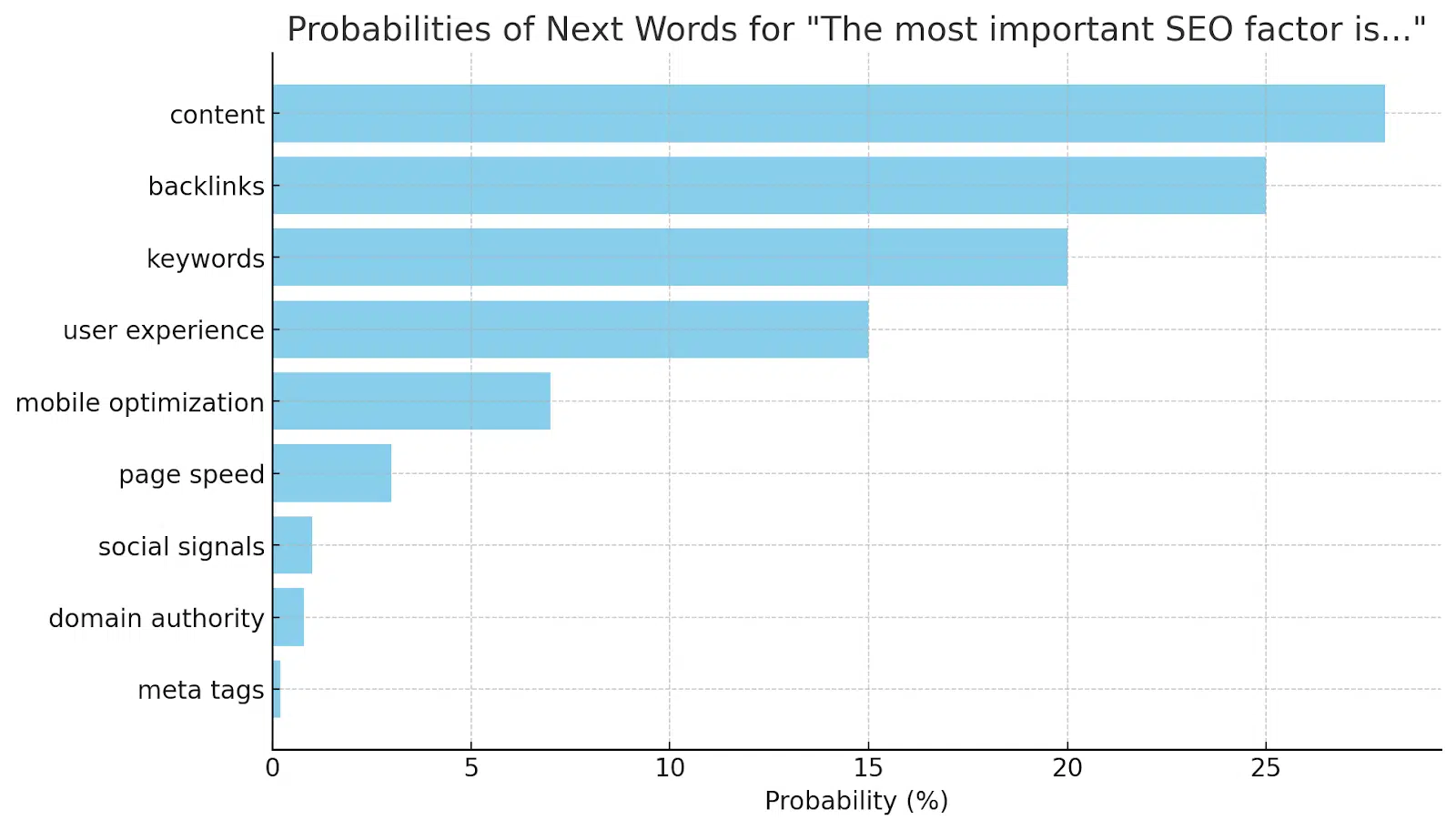

First, imagine the LLM has generated the following probabilities for the next word in the sentence “The most important SEO factor is…”:

Adjustable settings: Temperature and Top P

Impact of adjusting Temperature

The best way to understand these is to see how the selection of possible words would be affected by adjusting these settings from one extreme (1) to the other (0).

Let’s take our sentence from above and review what would happen as we adjust these settings behind the scenes.

- High Temperature (e.g., 0.9):

  - This setting creates a more even distribution of probabilities, making less probable words more likely to be chosen. The adjusted probabilities might look like this:

    - “content” – 20%

    - “backlinks” – 18%

    - “keywords” – 16%

    - “user experience” – 14%

    - “mobile optimization” – 12%

    - “page speed” – 10%

    - “social signals” – 8%

    - “domain authority” – 6%

    - “meta tags” – 6%

  - The output becomes more varied and creative with this setting.

Note: With a broader selection of potential words, there’s an increased chance that the AI might veer off course.

Picture this: if the AI selects “meta tags” from its vast pool of options, it could potentially spin an entire article around why “meta tags” are the most important SEO factor. While this stance isn’t commonly accepted among SEO experts, the article might seem convincing to an outsider.

This illustrates a key risk: with too wide a selection, the AI could create content that, while unique, might not align with established expertise, leading to outputs that are more creative but potentially less accurate or relevant to the field.

This highlights the delicate balance needed in managing the AI’s word selection process to ensure the content stays both innovative and authoritative.

- Low Temperature (e.g., 0.3):

  - Here, the model favors the most probable words, leading to a more conservative output. The probabilities might adjust to:

    - “content” – 40%

    - “backlinks” – 35%

    - “keywords” – 20%

    - “user experience” – 4%

    - Others – 1% combined

  - This results in predictable and focused outputs.
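One common way to implement Temperature is to divide the model’s raw scores by the Temperature value before the softmax. The sketch below assumes that approach and uses invented scores, so the percentages it produces will not match the illustrative lists above. The function name `apply_temperature` is hypothetical.

```python
import math


def apply_temperature(logits, temperature):
    """Return next-word probabilities after dividing logits by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = {word: score / temperature for word, score in logits.items()}
    # Standard softmax over the scaled scores (peak subtracted for stability).
    peak = max(scaled.values())
    exps = {word: math.exp(score - peak) for word, score in scaled.items()}
    total = sum(exps.values())
    return {word: value / total for word, value in exps.items()}


# Hypothetical raw scores, not taken from any real model.
seo_logits = {"content": 2.0, "backlinks": 1.8, "keywords": 1.6, "meta tags": 0.5}

high_temp = apply_temperature(seo_logits, 0.9)
low_temp = apply_temperature(seo_logits, 0.3)

for word in seo_logits:
    print(f"{word}: T=0.9 -> {high_temp[word]:.2f}, T=0.3 -> {low_temp[word]:.2f}")
```

Lowering the Temperature pushes probability toward the top-scoring word and away from long shots like “meta tags,” which is the conservative behavior described above.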

Impact of adjusting Top P

- High Top P (e.g., 0.9):

  - The model considers a wider range of words, up to a cumulative probability of 90%. It might include words up to “page speed” but exclude the less probable ones.

  - This maintains output diversity while excluding extremely unlikely options.

- Low Top P (e.g., 0.5):

  - The model focuses on the top words until their combined probability reaches 50%, possibly considering only “content,” “backlinks,” and “keywords.”

  - This creates a more focused and less varied output.
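Top P filtering can be sketched as “sort the words, keep adding them until the running total reaches the threshold, then renormalize.” The function below is a minimal illustration under that assumption. It reuses the illustrative low-Temperature percentages from earlier, and `top_p_filter` is a hypothetical name, not a library call.

```python
def top_p_filter(probabilities, top_p):
    """Keep the most probable words until their cumulative probability
    reaches top_p, then rescale the survivors so they sum to 1."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    kept = {}
    cumulative = 0.0
    for word, prob in ranked:
        kept[word] = prob
        cumulative += prob
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {word: prob / total for word, prob in kept.items()}


# Illustrative probabilities only.
word_probs = {
    "content": 0.40,
    "backlinks": 0.35,
    "keywords": 0.20,
    "user experience": 0.04,
    "others": 0.01,
}

focused = top_p_filter(word_probs, 0.5)  # keeps "content" and "backlinks"
wide = top_p_filter(word_probs, 0.9)     # also keeps "keywords"
print(list(focused), list(wide))
```

With these particular numbers, a Top P of 0.5 leaves only two candidates. How many words survive always depends on how the probabilities are spread, which is why the same Top P value can feel strict on one prompt and loose on another.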

Application in SEO contexts

So let’s discuss some of the applications of these settings:

- For diverse and creative content: A higher Temperature can be set to explore unconventional SEO factors.

- Mainstream SEO strategies: Lower Temperature and Top P are suitable for focusing on established factors like “content” and “backlinks.”

- Balanced approach: Moderate settings offer a mix of common and a few unconventional factors, ideal for general SEO articles.

By understanding and adjusting these settings, SEOs can tailor the LLM’s output to align with various content goals, from detailed technical discussions to broader, creative brainstorming in SEO strategy development.

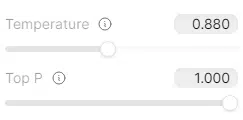

Broader recommendations

- For technical writing: Consider a lower Temperature to maintain technical accuracy, but be mindful that this might reduce the uniqueness of the content.

- For keyword research: Use a high Temperature and a high Top P if you want to explore more, and more unusual, keywords.

- For creative content: A Temperature setting around 0.88 is often optimal, offering a good mix of uniqueness and coherence. Adjust Top P according to the desired level of creativity and randomness.

- For computer programming: Where you want more reliable outputs and usually go with the most popular way of doing something, lower Temperature and Top P parameters make sense.

Prompt engineering strategies

Now that we’ve covered the foundational settings, let’s dive into the second lever we have control over: the prompts.

Prompt engineering is crucial in harnessing the full potential of LLMs. Mastering it means we can pack more instructions into a model, gaining finer control over the final output.

If you’re anything like me, you’ve been frustrated when an AI model just ignores one of your instructions. Hopefully, by understanding a few core ideas, you can reduce this occurrence.

1. The persona and audience pattern: Maximizing instructional efficiency

In AI, much like the human brain, certain words carry a network of associations. Think of the Eiffel Tower: it’s not just a structure; it brings to mind Paris, France, romance, baguettes, etc. Similarly, in AI language models, specific words or phrases can evoke a broad spectrum of related concepts, allowing us to communicate complex ideas in fewer lines.

Implementing the persona pattern

The persona pattern is an ingenious prompt engineering technique where you assign a “persona” to the AI at the beginning of your prompt. For example, saying, “You are a legal SEO writing expert for consumer readers,” packs a multitude of instructions into one sentence.

Notice that at the end of this sentence, I apply what is known as the audience pattern: “for consumer readers.”

Breaking down the persona pattern

Instead of writing out each of the sentences below and using up a large portion of the instruction space, the persona pattern allows us to convey many sentences of instructions in a single sentence.

For example (note that this is theoretical), the instruction above could imply the following.

- “Legal SEO writing expert” suggests a multitude of characteristics:

  - Precision and accuracy, as expected in legal writing.

  - An understanding of SEO principles: keyword optimization, readability, and structuring for search engine algorithms.

  - An expert or systematic approach to content creation.

- “For consumer readers” implies:

  - The content should be accessible and engaging for the general public.

  - It should avoid heavy legal jargon and instead use layman’s terms.

The persona pattern is remarkably efficient, often capturing the essence of several sentences in just one.

Getting the persona right is a game-changer. It streamlines your instruction process and provides valuable space for more detailed and specific prompts.

This approach is a smart way to maximize the impact of your prompts while navigating the character limitations inherent in AI models.
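If you assemble prompts in code, the persona and audience can be kept as separate inputs so they are easy to swap. The helper below is a hypothetical sketch, not part of any library, and the task sentence is invented for illustration.

```python
def build_persona_prompt(persona, audience, task):
    """Combine a persona, an audience, and a task into a single prompt string."""
    return f"You are {persona} for {audience}. {task}"


prompt = build_persona_prompt(
    persona="a legal SEO writing expert",
    audience="consumer readers",
    # Hypothetical task, added only to show where the instructions go.
    task="Write an introduction for a page about what to do after a car accident.",
)
print(prompt)
```

Keeping the persona in its own parameter makes it simple to test how a different persona or audience changes the output while the task stays the same.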

2. Zero shot, one shot, and many shot inference methods

Providing examples as part of your prompt engineering is a highly effective technique, especially when seeking outputs in a specific format.

You’re essentially guiding the model by including specific examples, allowing it to recognize and replicate key patterns and characteristics from those examples in its output.

This method helps the AI’s responses align closely with your desired format and style, making it an indispensable tool for achieving more targeted and relevant results.

The technique takes on three names.

- Zero shot inference learning: The AI model is given no examples of the desired output.

- One shot inference learning: Involves providing the AI model with a single example of the desired output.

- Many shot inference learning: Provides the AI model with multiple examples of the desired output.

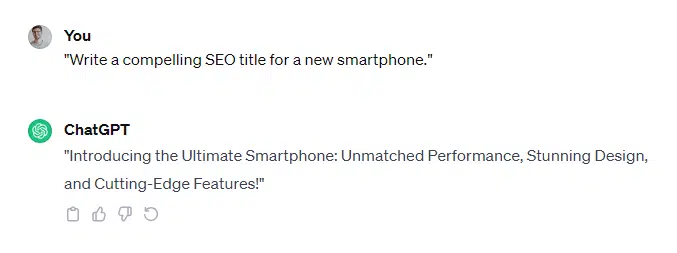

Zero shot inference

- The AI model is prompted to create a title tag without any example. The prompt directly states the task.

- Example prompt: “Create an SEO-optimized title tag for a webpage about the best chef in Cincinnati.”

Here are GPT-4’s responses.

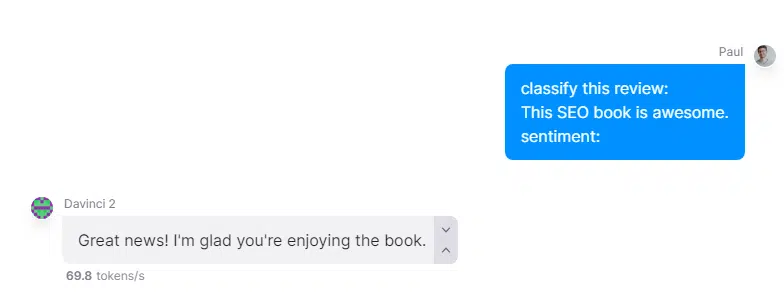

Now let’s see what happens on a smaller model (OpenAI’s Davinci 2).

As you can see, larger models can often perform zero shot prompts, but smaller models struggle.

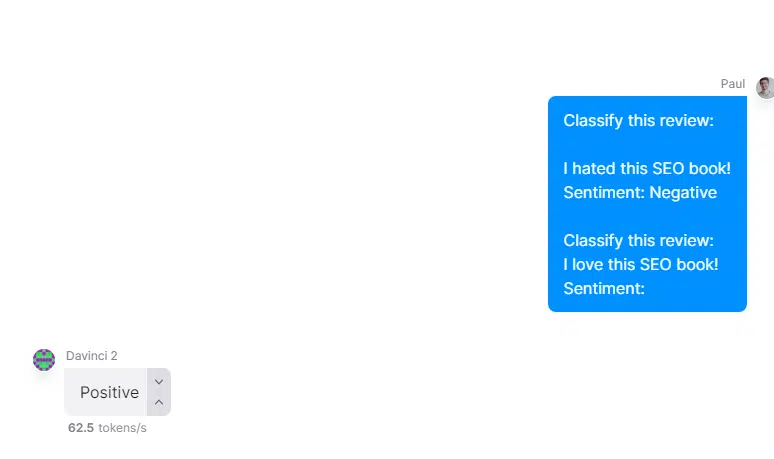

One shot inference

- Here, you have a single example of an instruction. In this case, we want a small model (OpenAI’s Davinci 2) to classify the sentiment of a review correctly.

Many shot inference

- Providing multiple examples helps the AI model understand a range of possible approaches to the task, ideal for complex or nuanced requirements.

- Example prompt: “Create an SEO-optimized title tag for a webpage about the best chef in Cincinnati, like:

  - Discover the Best Chefs in Los Angeles – Your Guide to Fine Dining

  - Atlanta’s Top Chefs: Who’s Who in the Culinary Scene

  - Explore Elite Chefs in Chicago – A Culinary Journey”

Using the zero shot, one shot, and many shot methods, AI models can be effectively guided to produce consistent outputs. These strategies are especially useful in crafting elements like title tags, where precision, relevance, and adherence to SEO best practices are crucial.

By tailoring the number of examples to the model’s capabilities and the task’s complexity, you can optimize your use of AI for content creation.

While developing our web application, we discovered that providing examples is the most impactful prompt engineering technique.

This approach is highly effective even with larger models, as the systems can accurately identify and incorporate the essential patterns needed. This helps the generated content align closely with your intended goals.
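The three variants differ only in how many examples are attached to the instruction, so one small helper can build all of them. This is a minimal sketch using the title tag prompt from above. The function name `build_few_shot_prompt` is hypothetical.

```python
def build_few_shot_prompt(instruction, examples):
    """Return the bare instruction (zero shot) or the instruction
    followed by a bulleted list of examples (one shot or many shot)."""
    if not examples:
        return instruction
    example_lines = "\n".join(f"- {example}" for example in examples)
    return f"{instruction}, like:\n{example_lines}"


instruction = (
    "Create an SEO-optimized title tag for a webpage about the best chef in Cincinnati"
)
title_examples = [
    "Discover the Best Chefs in Los Angeles – Your Guide to Fine Dining",
    "Atlanta’s Top Chefs: Who’s Who in the Culinary Scene",
    "Explore Elite Chefs in Chicago – A Culinary Journey",
]

zero_shot = build_few_shot_prompt(instruction, [])
one_shot = build_few_shot_prompt(instruction, title_examples[:1])
many_shot = build_few_shot_prompt(instruction, title_examples)
print(many_shot)
```

Storing the examples in a list makes it easy to experiment with how many a given model needs before its output settles into the format you want.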

3. ‘Follow all of my rules’ pattern

This strategy is both simple and effective in enhancing the precision of AI-generated responses. Adding a specific instruction line at the beginning of the prompt can significantly improve the likelihood of the AI adhering to all your guidelines.

It’s worth noting that instructions placed at the beginning of a prompt generally receive more attention from the AI.

So, if you include a directive like “don’t skip any steps” or “follow every instruction” right at the outset, it sets a clear expectation for the AI to meticulously follow each part of your prompt.

This technique is particularly useful in scenarios where the sequence and completeness of the steps are crucial, such as in procedural or technical content. Doing so encourages the AI to pay close attention to every detail you’ve outlined, leading to more thorough and accurate outputs.
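In code, this pattern amounts to putting the directive on the first line of every prompt you send. The helper and the sample instructions below are hypothetical, and the exact wording of the directive is something to test against your own model.

```python
DEFAULT_DIRECTIVE = "Follow every instruction below and do not skip any steps."


def with_leading_directive(prompt, directive=DEFAULT_DIRECTIVE):
    """Place the rule-following directive on the first line of the prompt."""
    return f"{directive}\n\n{prompt}"


# Hypothetical multi-step instructions.
steps_prompt = (
    "1. Write a title tag under 60 characters.\n"
    "2. Write a meta description under 155 characters.\n"
    "3. Suggest three H2 headings."
)

final_prompt = with_leading_directive(steps_prompt)
print(final_prompt)
```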

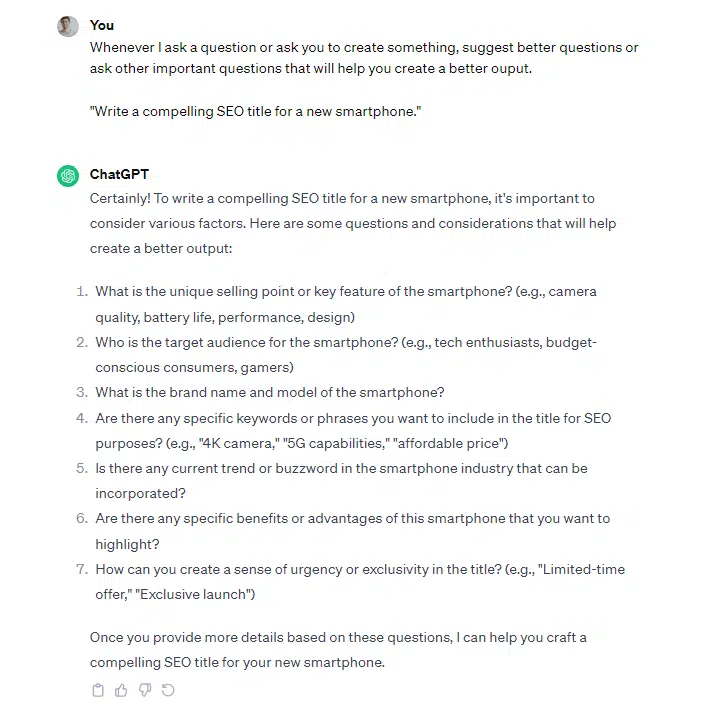

4. Question refinement pattern

This is a straightforward yet powerful approach to harnessing the AI’s existing knowledge base for better outcomes. You encourage the AI to generate additional, more refined questions. These questions, in turn, guide the AI toward crafting superior outputs that align more closely with your desired results.

This technique prompts the AI to delve deeper and question its initial understanding or response, uncovering more nuanced or specific lines of inquiry.

It’s particularly effective when aiming for a detailed or comprehensive answer, as it pushes the AI to consider aspects it might not have initially addressed.

Here’s an example to illustrate this process in action:

Before: Question refinement strategy

After: Prompt after question refinement strategy

5. ‘Make my prompt more precise’ pattern

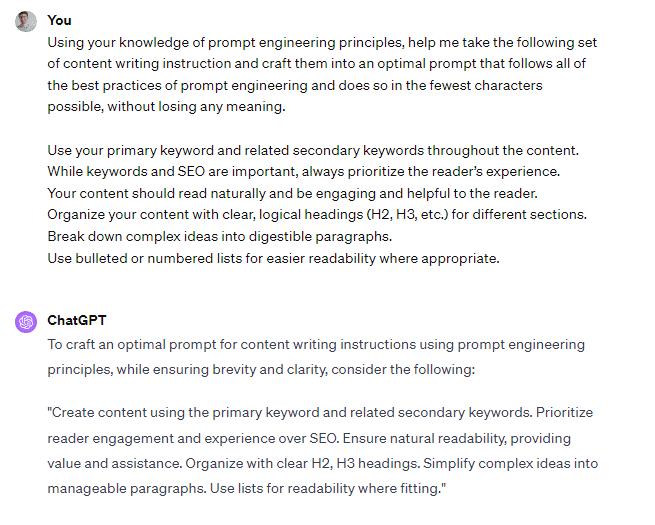

The final prompt engineering technique I’d like to introduce is a unique, recursive process where you feed your initial prompts back into GPT.

This allows GPT to act as a collaborator in refining your prompts, helping you to pinpoint more descriptive, precise, and effective language. It’s a reassuring reminder that you’re not alone in the art of prompt crafting.

This method involves a bit of a feedback loop. You start with your original prompt, let GPT process it, and then examine the output to identify areas for improvement. You can then rephrase or refine your prompt based on these insights and feed it back into the system.

This iterative process can lead to more polished and concise instructions, optimizing the effectiveness of your prompts.

Much like the other techniques we’ve discussed, this one may require fine-tuning. However, the effort is often rewarded with more streamlined prompts that communicate your intentions clearly and succinctly to the AI, leading to better-aligned and more efficient outputs.

After implementing your refined prompts, you can engage GPT in a meta-analysis by asking it to identify the patterns it followed in generating its responses.
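The feedback loop can be sketched as a function that repeatedly asks a model to rewrite the latest version of a prompt. Everything here is an assumption made for illustration: `ask_model` stands in for whatever function sends text to your LLM and returns its reply, the wording of `REFINE_TEMPLATE` is my own, and `fake_model` is a stub so the example runs without any API. In practice you would still review each version yourself, as described above.

```python
from typing import Callable, List

# Hypothetical wording for the rewrite request.
REFINE_TEMPLATE = (
    "Rewrite the following prompt so it is more descriptive, precise, and concise. "
    "Return only the rewritten prompt.\n\nPrompt:\n{prompt}"
)


def refine_prompt(prompt: str, ask_model: Callable[[str], str], rounds: int = 2) -> List[str]:
    """Feed a prompt back to the model several times and return every version."""
    versions = [prompt]
    for _ in range(rounds):
        request = REFINE_TEMPLATE.format(prompt=versions[-1])
        versions.append(ask_model(request).strip())
    return versions


def fake_model(request: str) -> str:
    """Stand-in for a real LLM call: echoes the prompt with a small addition."""
    return request.splitlines()[-1] + " Be specific."


history = refine_prompt("Write a title tag for a chef page.", fake_model)
for version in history:
    print(version)
```

Returning every version, not just the last one, lets you compare rounds and stop as soon as the rewrites stop improving.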

Crafting effective AI prompts for better outputs

The world of AI-assisted content creation doesn’t end here.

Numerous other patterns, like “chain of thought,” “cognitive verifier,” “template,” and “tree of thoughts,” can push AI to tackle more complex problems and improve question-answering accuracy.

In future articles, we’ll explore these patterns and the intricate practice of splitting prompts between system and user inputs.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.