Image by Author

Before we get into the amazing things about Phi-2: if you haven't already learnt about phi-1.5, I'd advise you to have a quick skim over what Microsoft had in the works a few months ago in Efficient Small Language Models: Microsoft's 1.3 Billion Parameter phi-1.5.

Now that you have the foundations, we can move on to learning more about Phi-2. Microsoft has been working hard to release a range of small language models (SLMs) called 'Phi'. This series of models has been shown to achieve remarkable performance, just as if it were a large language model.

Microsoft's first model was Phi-1, with 1.3 billion parameters, and then came Phi-1.5.

We've seen Phi-1, Phi-1.5, and now we have Phi-2.

Phi-2 has become bigger and better. It's a 2.7 billion-parameter language model that has been shown to demonstrate outstanding reasoning and language understanding capabilities.

Amazing for a language model so small, right?

Phi-2 has been shown to outperform models that are up to 25x larger. And that's all thanks to model scaling and training data curation. Small, compact, and highly performant. Due to its size, Phi-2 is well suited for researchers to explore interpretability, run fine-tuning experiments, and delve into safety improvements. It's available in the Azure AI Studio model catalogue.
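To put the "25x" figure in context, here is a small sketch that compares nominal parameter counts. It assumes the sizes implied by the model names used in this article (2.7 billion, 7 billion, and 70 billion); the dictionary and the `size_ratio` helper are illustrative names, not part of any Microsoft tooling. Reading the 25x claim as referring to the 70 billion parameter Llama-2 is also an assumption.

```python
# Nominal parameter counts in billions, taken from the model names
# used in this article (assumed round figures, not exact counts).
PARAMS_BILLIONS = {
    "Phi-2": 2.7,
    "Mistral-7B": 7.0,
    "Llama-2-70B": 70.0,
}


def size_ratio(model: str, baseline: str = "Phi-2") -> float:
    """Return how many times larger `model` is than `baseline`."""
    return PARAMS_BILLIONS[model] / PARAMS_BILLIONS[baseline]


if __name__ == "__main__":
    for name in ("Mistral-7B", "Llama-2-70B"):
        print(f"{name} is about {size_ratio(name):.1f}x the size of Phi-2")
```

On these nominal numbers, the 70 billion parameter model works out to roughly 26 times the size of Phi-2, which is in line with the "25x" claim.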

The Creation of Phi-2

Microsoft's training data is a mixture of synthetic datasets, used to teach the model common sense reasoning and general knowledge, covering topics such as science, theory of mind, and daily activities.

The training data was selected carefully to ensure that it was filtered for quality content that has educational value. That, together with the ability to scale, has taken their 1.3 billion parameter model, Phi-1.5, to the 2.7 billion parameter Phi-2.

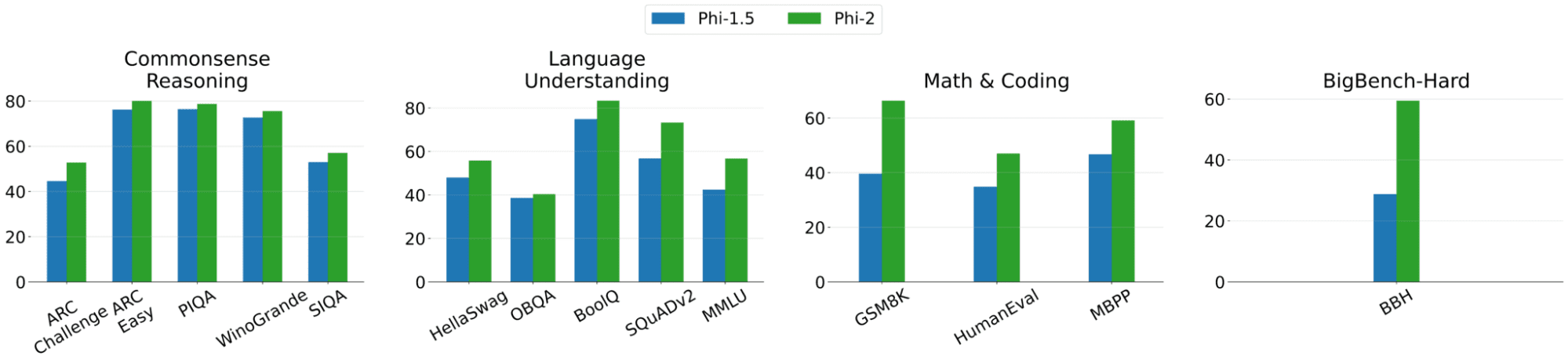

Image from Microsoft Phi-2

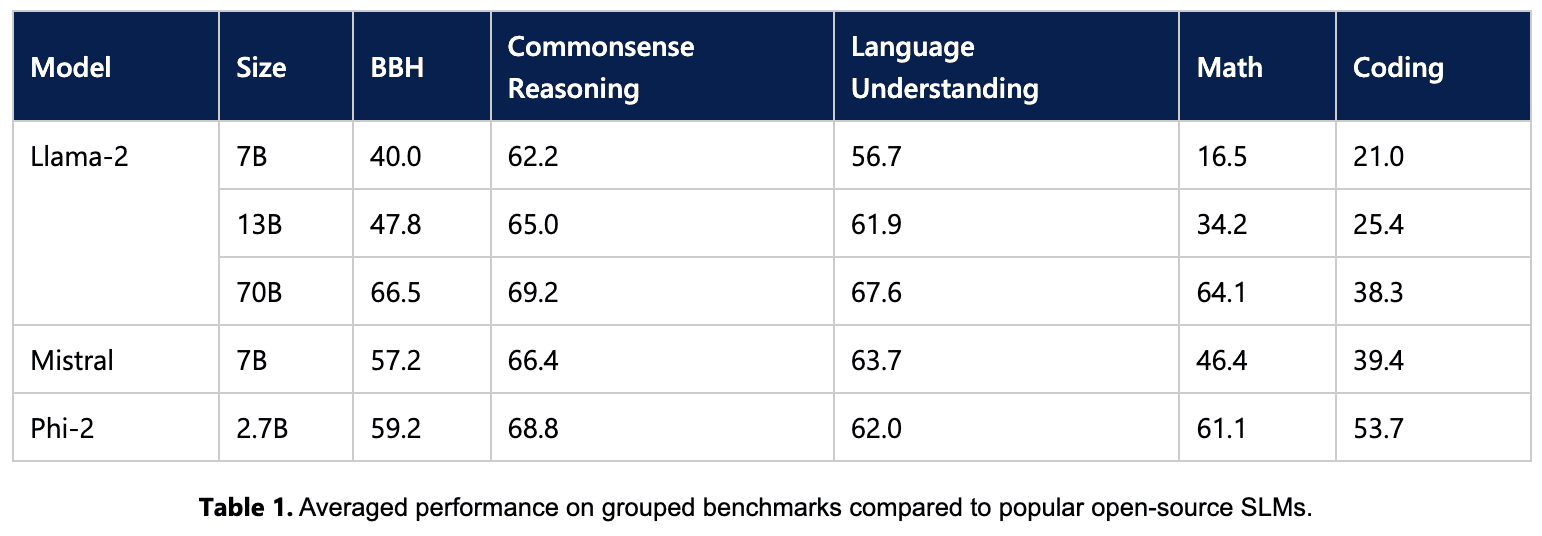

Microsoft put Phi-2 to the take a look at, as they acknowledge the present challenges with mannequin analysis. Assessments had been completed on use circumstances through which they in contrast it to Mistral and Llama-2. The outcomes confirmed that Phi-2 outperformed Mistral-7B and the 70 billion Llama-2 mannequin outperformed Phi-2 in some circumstances as proven under:

Picture from Microsoft Phi-2

However, with that being said, Phi-2 still has its limitations. For example:

- Inaccuracy: the model can produce incorrect code and facts, which users should take with a pinch of salt, treating these outputs as a starting point.

- Limited Code Knowledge: Phi-2's training data was primarily based on Python using common packages, so code generated in other languages and scripts will need verification.

- Instructions: The model has yet to undergo instruction fine-tuning, so it may struggle to really understand the instructions that the user provides.

There are also other limitations of Phi-2, such as language limitations, societal biases, toxicity, and verbosity.
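Since generated code should be treated as a starting point, one cheap first check is to confirm that a generated Python snippet at least parses before you look at it any further. The sketch below assumes you already have the model output as a string; `parses_as_python` and the two sample strings are hypothetical, made up for illustration. It only catches syntax errors, so it says nothing about whether the code is actually correct.

```python
import ast


def parses_as_python(source: str) -> bool:
    """Return True if `source` is syntactically valid Python."""
    try:
        ast.parse(source)
    except (SyntaxError, ValueError):
        # SyntaxError covers malformed code; ValueError covers inputs
        # such as strings containing null bytes on some Python versions.
        return False
    return True


# Hypothetical model outputs, hard-coded here for illustration.
good_output = "def add(a, b):\n    return a + b\n"
bad_output = "def add(a, b)\n    return a + b\n"  # missing colon

if __name__ == "__main__":
    for label, text in (("good", good_output), ("bad", bad_output)):
        print(label, "parses:", parses_as_python(text))
```

A check like this is only a filter. Anything that passes still needs tests and a human read before you rely on it.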

With that being said, every new product or service has its limitations, and Phi-2 has only been out for a week or so. Therefore, Microsoft will need Phi-2 to get into the hands of the public to help them improve the service and overcome these current limitations.

Microsoft has ended the year with a small language model that could potentially grow to be the most talked about model of 2024. With this being said, to close the year: what should we expect from the language model world in 2024?

Nisha Arya is a Data Scientist and Freelance Technical Writer. She is particularly interested in providing Data Science career advice or tutorials and theory-based knowledge around Data Science. She also wishes to explore the different ways Artificial Intelligence is/can benefit the longevity of human life. A keen learner, seeking to broaden her tech knowledge and writing skills, whilst helping guide others.