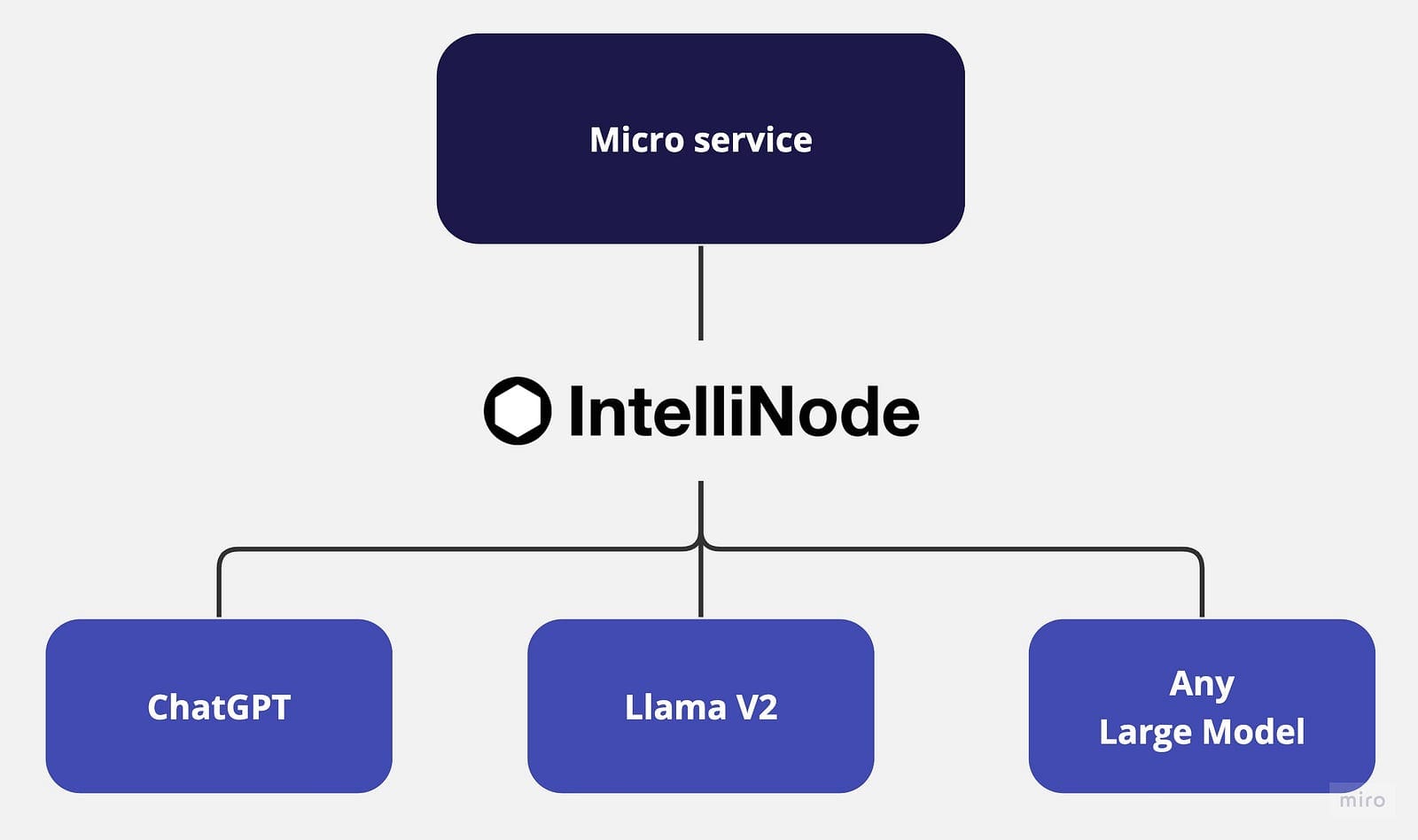

Microservices architecture promotes the creation of flexible, independent services with well-defined boundaries. This scalable approach enables developers to maintain and evolve services individually without affecting the entire application. However, realizing the full potential of microservices architecture, particularly for AI-powered chat applications, requires robust integration with the latest Large Language Models (LLMs), such as Meta Llama V2 and OpenAI's ChatGPT, as well as other fine-tuned models released for each application use case, to provide a multi-model approach for a diversified solution.

LLMs are large-scale models that generate human-like text based on their training on diverse data. By learning from billions of words on the internet, LLMs understand context and generate tuned content across various domains. However, integrating several LLMs into a single application often poses challenges, because each model requires its own interface, access endpoint, and payload format. A single integration service that can handle a variety of models therefore improves the architecture design and makes it easier to scale independent services.
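To make the payload problem concrete, here is a minimal sketch, in JavaScript to match the tutorial's Node.js stack, of one chat history rendered for two backends: the OpenAI Chat Completions body (a `messages` array of role/content objects) and the single-string prompt template that Llama 2 chat models were trained on (`[INST]` and `<<SYS>>` tags). The helper names `toOpenAIBody` and `toLlama2Prompt` are hypothetical, and some hosted Llama endpoints apply the template themselves, so treat this as an illustration rather than what IntelliNode does internally.

```javascript
// Hypothetical helpers: render one provider-neutral chat history into the
// request shapes two different backends expect.

// OpenAI Chat Completions: a list of {role, content} messages.
function toOpenAIBody(model, system, turns) {
  return {
    model,
    messages: [{ role: "system", content: system }, ...turns],
  };
}

// Llama 2 chat models: a single prompt string using [INST] and <<SYS>> tags.
// Assumes turns alternate user/assistant and end with a user turn.
function toLlama2Prompt(system, turns) {
  let prompt = "";
  for (let i = 0; i < turns.length; i += 2) {
    let user = turns[i].content;
    if (i === 0) {
      // The system prompt is folded into the first user instruction.
      user = `<<SYS>>\n${system}\n<</SYS>>\n\n${user}`;
    }
    prompt += `<s>[INST] ${user} [/INST]`;
    const reply = turns[i + 1];
    if (reply) {
      // Completed exchanges are closed with the end-of-sequence marker.
      prompt += ` ${reply.content} </s>`;
    }
  }
  return prompt;
}
```

A single integration service exists so that application code does not have to carry translation logic like this for every model it talks to.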

This tutorial will introduce you to IntelliNode integrations for ChatGPT and LLaMA V2 in a microservice architecture using Node.js and Express.

Here are several chat integration options provided by IntelliNode:

- LLaMA V2: You can integrate the LLaMA V2 model either through Replicate's API for a straightforward setup or through your own AWS SageMaker host for additional control.

LLaMA V2 is a powerful open-source Large Language Model (LLM) that has been pre-trained and fine-tuned in sizes of up to 70B parameters. It excels at complex reasoning tasks across various domains, including specialized fields such as programming and creative writing. Its training methodology combines self-supervised pre-training with alignment to human preferences through Reinforcement Learning from Human Feedback (RLHF). According to Meta's evaluations, LLaMA V2 outperforms existing open-source models and is comparable to closed-source models such as ChatGPT and Bard in usability and safety.

- ChatGPT: By simply providing your OpenAI API key, the IntelliNode module lets you integrate with the model through a simple chat interface. You can access ChatGPT through the GPT-3.5 or GPT-4 models. These models have been trained on vast amounts of data and fine-tuned to provide highly contextual and accurate responses.

Let's start by initializing a new Node.js project. Open your terminal, navigate to your project's directory, and run `npm init -y`.

This command will create a new `package.json` file for your application.

Next, install Express.js, which will be used to handle HTTP requests and responses, and intellinode, which handles the connection to the LLM models:

`npm install express`

`npm install intellinode`

Once the installation completes, create a new file named `app.js` in your project's root directory. Then, add the Express initialization code to `app.js`.


Replicate provides a quick integration path with Llama V2 through an API key, and IntelliNode provides the chatbot interface that decouples your business logic from the Replicate backend, allowing you to switch between different chat models.
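The decoupling idea can be sketched without any particular library. In the hypothetical `ChatRouter` below (not IntelliNode's API), business logic calls one `chat(provider, messages)` method, and each backend is an adapter registered under a name. The adapters here are stubs standing in for real Replicate, SageMaker, or OpenAI calls.

```javascript
// Hypothetical sketch (not IntelliNode's API): business logic calls one
// chat(provider, messages) method; each backend is a named adapter.
class ChatRouter {
  constructor() {
    // provider name -> async (messages) => reply text
    this.providers = new Map();
  }

  register(name, adapter) {
    this.providers.set(name, adapter);
    return this; // allow chained registration
  }

  async chat(name, messages) {
    const adapter = this.providers.get(name);
    if (!adapter) {
      throw new Error(`Unknown chat provider: ${name}`);
    }
    return adapter(messages);
  }
}

// Stub adapters standing in for real Replicate, SageMaker, or OpenAI calls.
const lastText = (messages) => messages[messages.length - 1].content;
const router = new ChatRouter()
  .register("llama-replicate", async (messages) => `llama: ${lastText(messages)}`)
  .register("chatgpt", async (messages) => `gpt: ${lastText(messages)}`);
```

Switching models then becomes a matter of passing a different provider name, for example from configuration or a query parameter, rather than rewriting the calling code.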

Let's start by integrating with Llama hosted on Replicate's backend.

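Each model route in `app.js` can share the same request and response shape. The sketch below assumes a hypothetical `makeChatRoute` factory that turns any async `chatFn(message)`, such as a function wrapping your IntelliNode Replicate call, into an Express-style `(req, res)` handler. It does not import Express, which keeps it testable with mock objects.

```javascript
// Hypothetical factory: wraps any async chatFn(message) => reply string
// in an Express-style (req, res) handler. Nothing here imports Express.
function makeChatRoute(chatFn, defaultMessage = "hello") {
  return async (req, res) => {
    // Read ?message=... from the query string, falling back to a default.
    const message = (req.query && req.query.message) || defaultMessage;
    try {
      const reply = await chatFn(message);
      res.json({ reply });
    } catch (err) {
      // Surface backend failures as a 502 instead of crashing the service.
      res.status(502).json({ error: err.message });
    }
  };
}
```

In `app.js` you would then register it with something like `app.get('/llama/replicate', makeChatRoute(askLlama))`, where `askLlama` is your own function; using `GET` lets you test the route directly from a browser.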

Get your trial key from replicate.com to activate the integration.

Now, let's cover the Llama V2 integration via AWS SageMaker, which provides privacy and an extra layer of control.

The integration requires generating an API endpoint from your AWS account. First, we will set up the integration code in our microservice app.

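On the microservice side, the `/llama/aws` route only needs to forward messages to the API Gateway URL created in the steps below. Here is a minimal sketch assuming Node.js 18+ (for the built-in `fetch`) and a hypothetical JSON contract of `{"message": ...}` in and `{"reply": ...}` out; the real contract is whatever your Lambda function implements. `askLlamaOnAws` is an illustrative name, and `fetchImpl` is injectable so the function can be tested offline.

```javascript
// Hypothetical client for the API Gateway URL that fronts the Lambda.
// Assumed contract (not from the article): POST {"message": "..."} and
// receive {"reply": "..."} back.
async function askLlamaOnAws(gatewayUrl, message, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(gatewayUrl, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ message }),
  });
  if (!response.ok) {
    // Gateway or Lambda errors (including timeouts) arrive as non-2xx codes.
    throw new Error(`Gateway returned HTTP ${response.status}`);
  }
  const data = await response.json();
  return data.reply;
}
```

For example, `await askLlamaOnAws(process.env.AWS_API_URL, 'hello')`, where `AWS_API_URL` is a hypothetical environment variable holding the copied gateway URL.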

The next step is to create a Llama endpoint in your account. Once you set up the API Gateway, copy its URL to use for running the `/llama/aws` service.

To set up a Llama V2 endpoint in your AWS account:

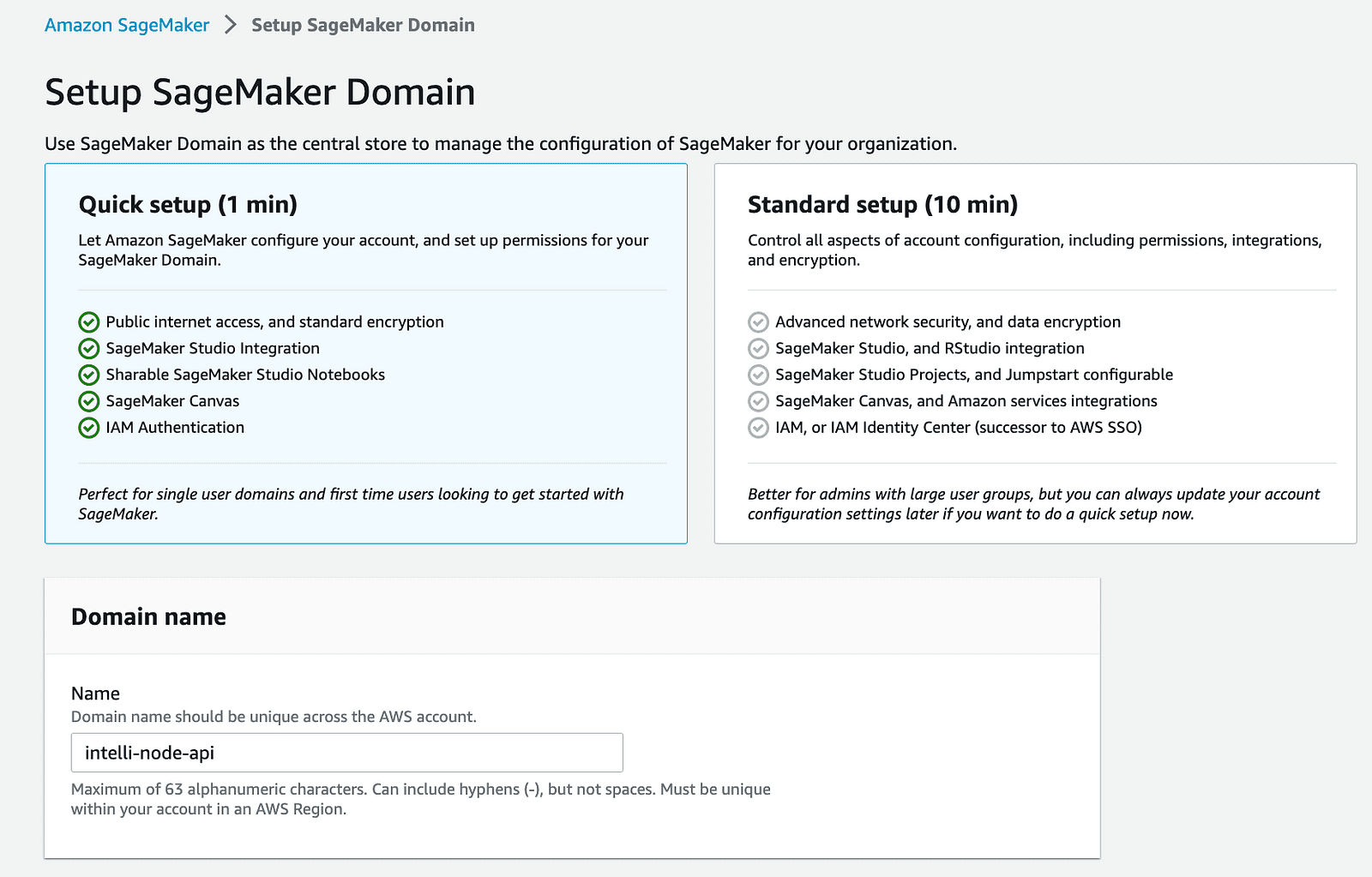

1- SageMaker Service: select the SageMaker service from your AWS account and click on Domains.

AWS account: select SageMaker

2- Create a SageMaker Domain: Begin by creating a new domain in AWS SageMaker. This step establishes a managed space for your SageMaker operations.

AWS account: SageMaker domain

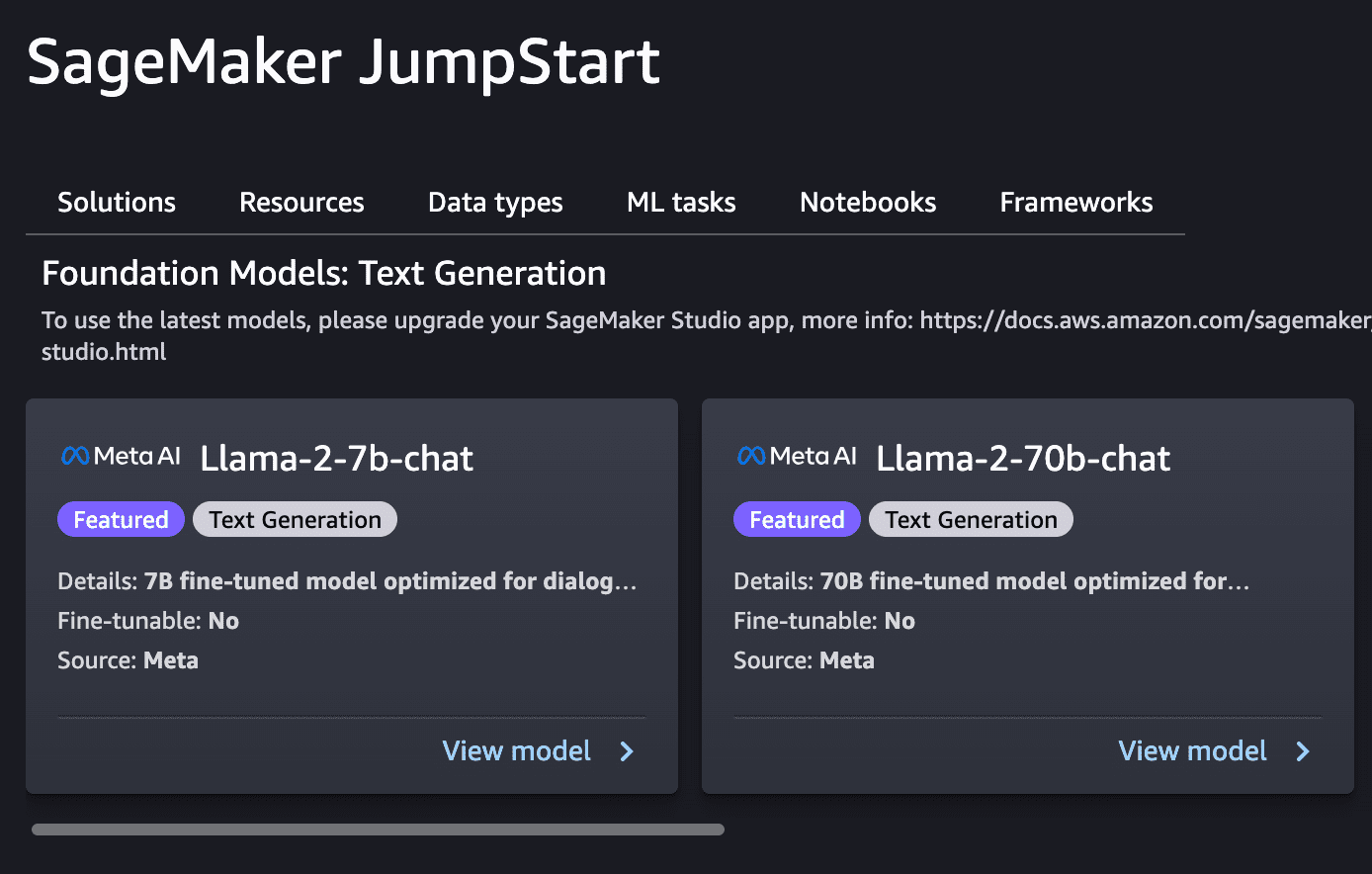

3- Deploy the Llama Model: Use SageMaker JumpStart to deploy the Llama model you plan to integrate. It is recommended to start with the 7B model because of the higher monthly cost of running the 70B model.

AWS account: SageMaker JumpStart

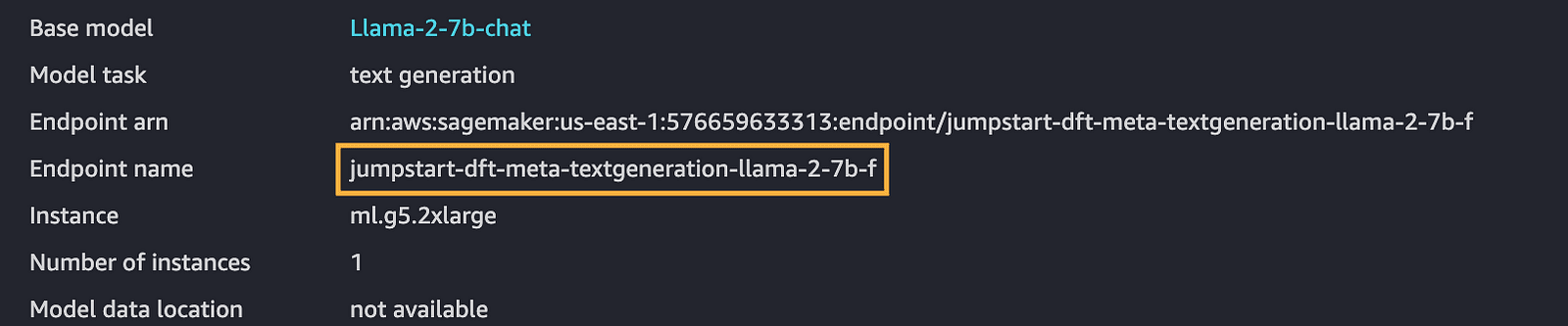

4- Copy the Endpoint Name: Once you have a model deployed, make sure to note the endpoint name, which is needed in later steps.

AWS account: SageMaker endpoint

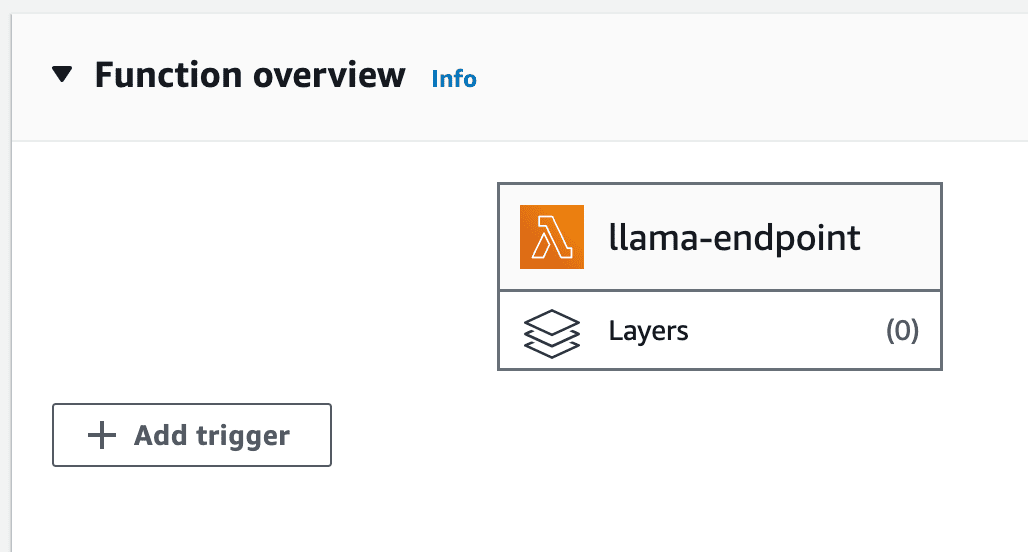

5- Create Lambda Function: AWS Lambda lets you run back-end code without managing servers. Create a Node.js Lambda function to use for integrating the deployed model.

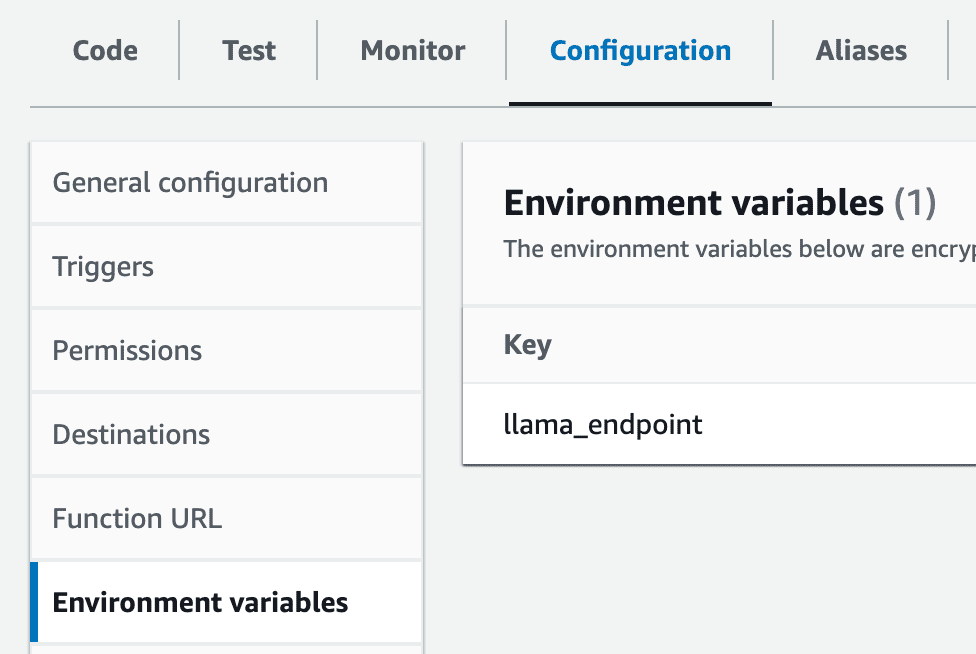

6- Set Up Environment Variable: Create an environment variable inside your Lambda function named `llama_endpoint`, with the SageMaker endpoint name as its value.

AWS account: Lambda settings

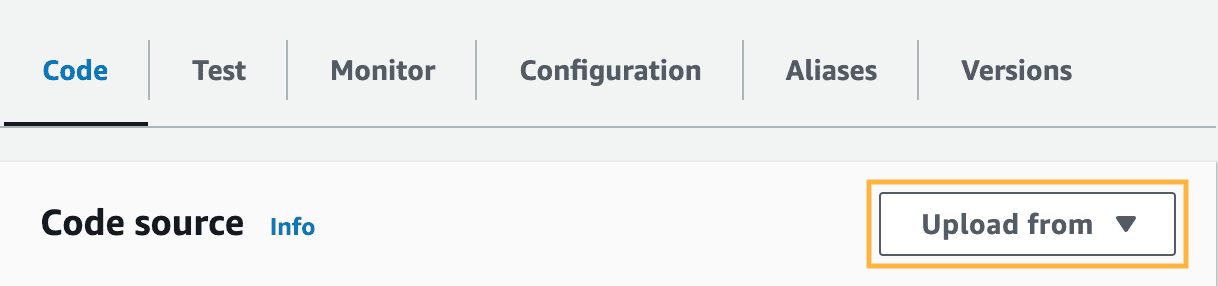

7- IntelliNode Lambda Import: Import the prepared Lambda zip file that establishes a connection to your SageMaker Llama deployment. This export is a zip file, and it can be found in the `lambda_llama_sagemaker` directory.

AWS account: Lambda upload from zip file

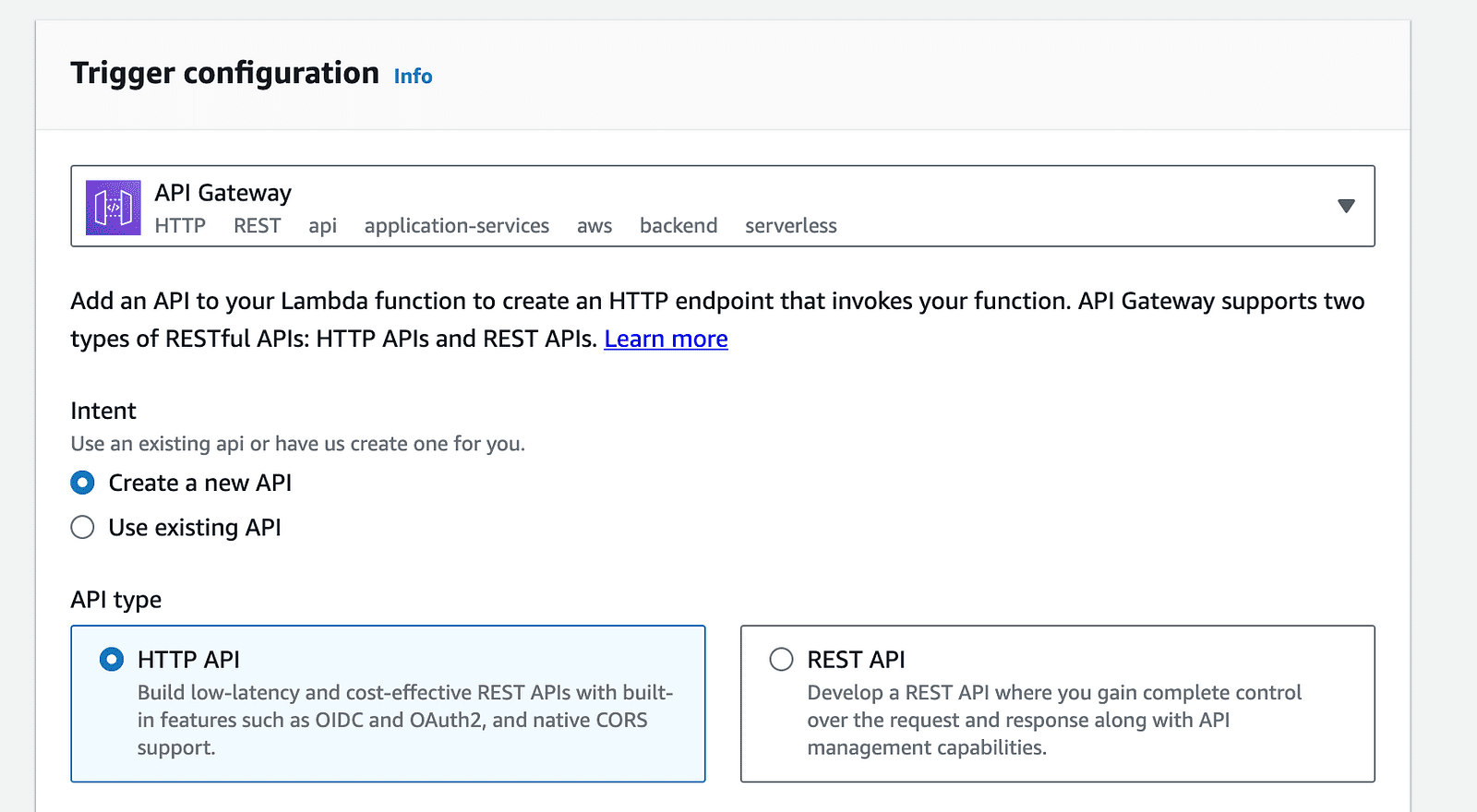

8- API Gateway Configuration: Click on the "Add trigger" option on the Lambda function page, and select "API Gateway" from the list of available triggers.

AWS account: Lambda trigger

AWS account: API Gateway trigger

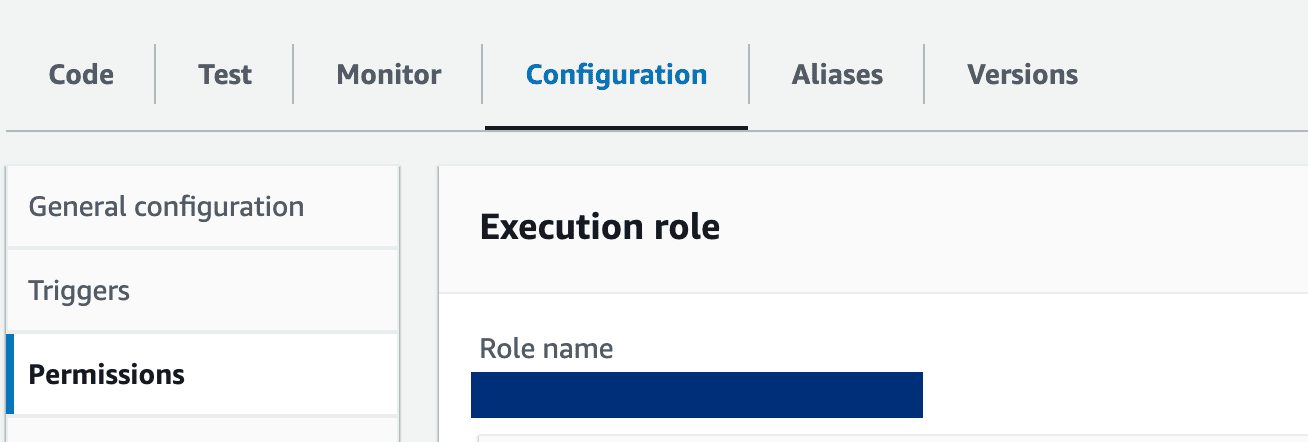

9- Lambda Function Settings: Update the Lambda role to grant the necessary permissions to access SageMaker endpoints. Additionally, extend the function's timeout (the default is 3 seconds) to accommodate the model's processing time. Make these adjustments in the "Configuration" tab of your Lambda function.

Click on the role name to update its permissions and grant access to SageMaker.

AWS account: Lambda role

Finally, we'll illustrate the steps to integrate OpenAI ChatGPT as another option in the microservice architecture.

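For comparison, this is roughly the raw HTTP request that a chat wrapper such as IntelliNode sends to OpenAI on your behalf: a `POST` to the Chat Completions endpoint with a bearer token and a `messages` array. It is a sketch assuming Node.js 18+ for `fetch`; `askChatGPT` is a hypothetical name, and the model name is only an example.

```javascript
// Sketch of a direct OpenAI Chat Completions call (Node.js 18+ for fetch).
// `fetchImpl` is injectable so the function can be tested without network.
async function askChatGPT(apiKey, message, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify({
      model: "gpt-3.5-turbo", // example; use a GPT-4 model if your key allows
      messages: [
        { role: "system", content: "You are a helpful assistant." },
        { role: "user", content: message },
      ],
    }),
  });
  if (!response.ok) {
    throw new Error(`OpenAI returned HTTP ${response.status}`);
  }
  const data = await response.json();
  // The reply text is in the first choice's message.
  return data.choices[0].message.content;
}
```

Going through a wrapper instead of hand-writing this request is what lets the same `/chatgpt` code path be swapped for a Llama backend later.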

Get your trial key from platform.openai.com.

First, export your OpenAI API key as an environment variable in your terminal.

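A small, optional safeguard is to read the key once at startup and fail with a clear error if it was not exported. The sketch below assumes the conventional variable name `OPENAI_API_KEY` (for example, `export OPENAI_API_KEY=<your key>` in bash); the tutorial's own code may read a different name, and `requireEnv` is a hypothetical helper.

```javascript
// Hypothetical helper: read a required setting such as OPENAI_API_KEY from
// the environment and fail fast with a clear message if it is missing.
function requireEnv(name, env = process.env) {
  const value = env[name];
  if (!value || !value.trim()) {
    throw new Error(`Missing environment variable ${name}; export it before starting the app.`);
  }
  // Trim stray whitespace that often sneaks in when pasting keys.
  return value.trim();
}
```

Calling `requireEnv('OPENAI_API_KEY')` near the top of `app.js` surfaces a missing key immediately rather than on the first chat request.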

Then run the Node app with `node app.js`.

Type the following URL in the browser to test the ChatGPT service:

http://localhost:3000/chatgpt?message=hello
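If the message contains spaces or symbols, it must be URL-encoded. Here is a minimal sketch using the standard WHATWG `URL` API available in Node.js; `chatUrl` is a hypothetical helper and assumes the app listens on port 3000, as in the URL above.

```javascript
// Build a test URL for a chat route with the message safely encoded.
// Assumes the app from this tutorial listens on localhost:3000.
function chatUrl(route, message, base = "http://localhost:3000") {
  const url = new URL(route, base);
  // searchParams encodes spaces as "+" and escapes reserved characters.
  url.searchParams.set("message", message);
  return url.toString();
}
```

The resulting string can be pasted into the browser, or fetched from Node.js 18+ with `await (await fetch(chatUrl('/chatgpt', 'hello'))).json()`.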

We built a microservice empowered by the capabilities of Large Language Models such as Llama V2 and OpenAI's ChatGPT. This integration opens the door to a wide range of business scenarios powered by advanced AI.

By translating your machine learning requirements into decoupled microservices, your application gains flexibility and scalability. Instead of shaping your operations around the constraints of a monolithic model, each language model function can now be managed and developed independently, which promises better efficiency, easier troubleshooting, and simpler upgrade management.


Ahmad Albarqawi is an engineer and data science master's student at Illinois Urbana-Champaign.