At its core, LangChain is an innovative framework tailor-made for building applications that leverage the capabilities of language models. It is a toolkit designed for developers to create applications that are context-aware and capable of sophisticated reasoning.

This means LangChain applications can understand context, such as prompt instructions or content that grounds responses, and use language models for complex reasoning tasks, like deciding how to respond or what actions to take. LangChain represents a unified approach to developing intelligent applications, simplifying the journey from concept to execution with its diverse components.

Understanding LangChain

LangChain is much more than just a framework; it is a full-fledged ecosystem comprising several integral parts.

- Firstly, there are the LangChain Libraries, available in both Python and JavaScript. These libraries are the backbone of LangChain, offering interfaces and integrations for various components. They provide a basic runtime for combining these components into cohesive chains and agents, along with ready-made implementations for immediate use.

- Next, we have LangChain Templates. These are a collection of deployable reference architectures tailored for a wide array of tasks. Whether you are building a chatbot or a complex analytical tool, these templates offer a solid starting point.

- LangServe steps in as a versatile library for deploying LangChain chains as REST APIs. This tool is essential for turning your LangChain projects into accessible and scalable web services.

- Lastly, LangSmith serves as a developer platform. It is designed to debug, test, evaluate, and monitor chains built on any LLM framework. The seamless integration with LangChain makes it an indispensable tool for developers aiming to refine and perfect their applications.

Together, these components empower you to develop, productionize, and deploy applications with ease. With LangChain, you start by writing your applications using the libraries, referencing templates for guidance. LangSmith then helps you inspect, test, and monitor your chains, ensuring that your applications are constantly improving and ready for deployment. Lastly, with LangServe, you can easily transform any chain into an API, making deployment a breeze.

In the next sections, we will delve deeper into how to set up LangChain and begin your journey in creating intelligent, language model-powered applications.


Installation and Setup

Are you ready to dive into the world of LangChain? Setting it up is straightforward, and this guide will walk you through the process step by step.

The first step in your LangChain journey is to install it. You can do this easily using pip or conda. Run the following command in your terminal:

pip install langchain

For those who prefer the latest features and are comfortable with a bit more adventure, you can install LangChain directly from the source. Clone the repository and navigate to the langchain/libs/langchain directory. Then, run:

pip install -e .

For experimental features, consider installing langchain-experimental. It is a package that contains cutting-edge code and is intended for research and experimental purposes. Install it using:

pip install langchain-experimental

LangChain CLI is a handy tool for working with LangChain templates and LangServe projects. To install the LangChain CLI, use:

pip install langchain-cli

LangServe is essential for deploying your LangChain chains as a REST API. It gets installed alongside the LangChain CLI.

LangChain often requires integrations with model providers, data stores, APIs, etc. For this example, we will use OpenAI’s model APIs. Install the OpenAI Python package using:

pip install openai

To access the API, set your OpenAI API key as an environment variable:

export OPENAI_API_KEY="your_api_key"

Alternatively, pass the key directly in your Python environment:

import os
os.environ['OPENAI_API_KEY'] = 'your_api_key'

LangChain allows for the creation of language model applications through modules. These modules can either stand alone or be composed for complex use cases. The modules are:

- Model I/O: Facilitates interaction with various language models, handling their inputs and outputs efficiently.

- Retrieval: Enables access to and interaction with application-specific data, crucial for dynamic data utilization.

- Agents: Empower applications to select appropriate tools based on high-level directives, enhancing decision-making capabilities.

- Chains: Provides pre-defined, reusable compositions that serve as building blocks for application development.

- Memory: Maintains application state across multiple chain executions, essential for context-aware interactions.

Each module targets specific development needs, making LangChain a comprehensive toolkit for creating advanced language model applications.

Along with the above components, we also have the LangChain Expression Language (LCEL), a declarative way to compose modules together. It enables the chaining of components through a universal Runnable interface.

LCEL looks something like this:

from langchain.chat_models import ChatOpenAI
from langchain.prompts import ChatPromptTemplate
from langchain.schema import StrOutputParser

# Example chain: prompt -> chat model -> output parser
prompt = ChatPromptTemplate.from_template("Tell me a fact about {topic}.")
chain = prompt | ChatOpenAI() | StrOutputParser()
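To make the pipe syntax less mysterious, here is a minimal plain-Python sketch of how `|` composition can work. It is not LangChain's implementation: the `Step` class and the three toy steps are hypothetical stand-ins, and the only assumption is that each component exposes an `invoke` method and overloads `__or__`.

```python
class Step:
    """Toy stand-in for a runnable component (hypothetical, not a LangChain class)."""

    def __init__(self, func):
        self.func = func

    def invoke(self, value):
        return self.func(value)

    def __or__(self, other):
        # `a | b` builds a new step that feeds a's output into b.
        return Step(lambda value: other.invoke(self.invoke(value)))


# Three pretend components: a prompt, a "model", and an output parser.
prompt_step = Step(lambda topic: f"Tell me a joke about {topic}.")
fake_model = Step(lambda text: text.upper())
parser_step = Step(lambda text: text.rstrip(".").split())

toy_chain = prompt_step | fake_model | parser_step
result = toy_chain.invoke("robots")
print(result)
```

Each `|` returns another `Step`, so chains of any length expose the same `invoke` entry point as a single component.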

Now that we have covered the basics, we will continue on to:

- Dig deeper into each LangChain module in detail.

- Learn how to use the LangChain Expression Language.

- Explore common use cases and implement them.

- Deploy an end-to-end application with LangServe.

- Check out LangSmith for debugging, testing, and monitoring.

Let’s get started!

Module I : Model I/O

In LangChain, the core element of any application revolves around the language model. This module provides the essential building blocks to interface effectively with any language model, ensuring seamless integration and communication.

Key Components of Model I/O

- LLMs and Chat Models (used interchangeably):

  - LLMs:

    - Definition: Pure text completion models.

    - Input/Output: Take a text string as input and return a text string as output.

  - Chat Models:

    - Definition: Models that use a language model as a base but differ in input and output formats.

    - Input/Output: Accept a list of chat messages as input and return a chat message.

- Prompts: Templatize, dynamically select, and manage model inputs. Allows for the creation of flexible and context-specific prompts that guide the language model’s responses.

- Output Parsers: Extract and format information from model outputs. Useful for converting the raw output of language models into structured data or specific formats needed by the application.

LLMs

LangChain’s integration with Large Language Models (LLMs) from providers like OpenAI, Cohere, and Hugging Face is a fundamental aspect of its functionality. LangChain itself does not host LLMs but offers a uniform interface to interact with various LLMs.

This section provides an overview of using the OpenAI LLM wrapper in LangChain, which applies to other LLM types as well. We have already installed the required package during installation and setup. Let us initialize the LLM.

from langchain.llms import OpenAI
llm = OpenAI()

- LLMs implement the Runnable interface, the basic building block of the LangChain Expression Language (LCEL). This means they support invoke, ainvoke, stream, astream, batch, abatch, and astream_log calls.

- LLMs accept strings as inputs, or objects which can be coerced to string prompts, including List[BaseMessage] and PromptValue (more on these later).

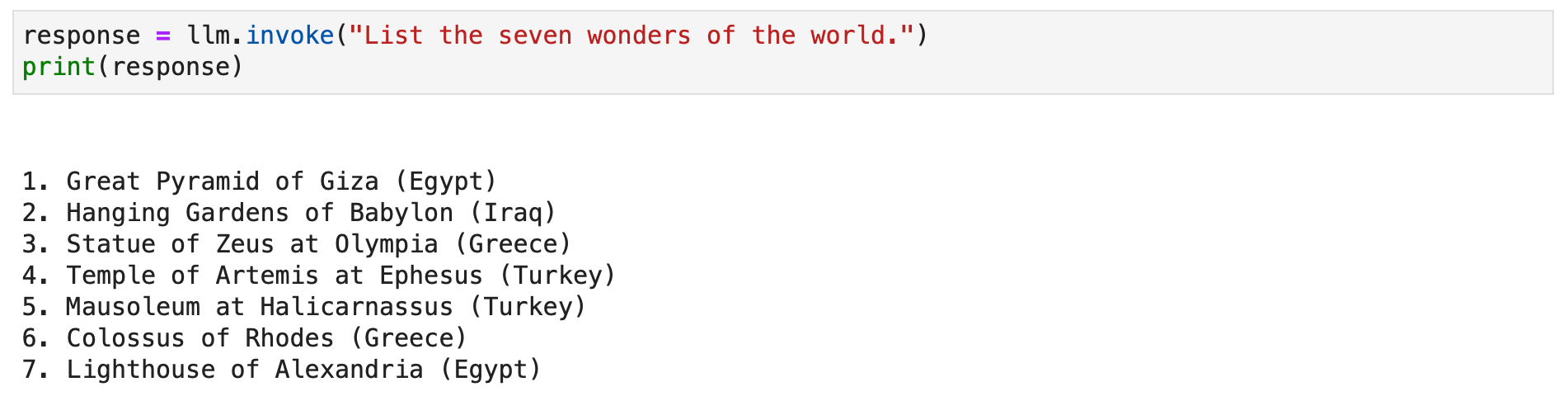

Let us look at some examples.

response = llm.invoke("List the seven wonders of the world.")
print(response)

You can alternatively call the stream method to stream the text response.

for chunk in llm.stream("Where were the 2012 Olympics held?"):
    print(chunk, end="", flush=True)
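The difference between invoke, stream, and batch can be seen without an API key. The sketch below uses a hypothetical `EchoLLM` class that simply echoes its prompt; it only mirrors the shape of the three calls and makes no claim about how LangChain or OpenAI produce or chunk real responses.

```python
from typing import Iterator, List


class EchoLLM:
    """Hypothetical stand-in for an LLM; it only echoes the prompt back."""

    def invoke(self, prompt: str) -> str:
        # One prompt in, one complete string out.
        return f"Echo: {prompt}"

    def stream(self, prompt: str) -> Iterator[str]:
        # Yield the same response piece by piece (here: word by word).
        for word in self.invoke(prompt).split(" "):
            yield word + " "

    def batch(self, prompts: List[str]) -> List[str]:
        # Several prompts in, one response per prompt out.
        return [self.invoke(p) for p in prompts]


llm_sketch = EchoLLM()
full = llm_sketch.invoke("hello world")
chunks = list(llm_sketch.stream("hello world"))
answers = llm_sketch.batch(["a", "b"])
print(full, chunks, answers)
```

Joining the streamed chunks reproduces the full response, which is why streaming suits chat interfaces that display text as it arrives.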

Chat Models

LangChain’s integration with chat models, a specialized variation of language models, is essential for creating interactive chat applications. While they use language models internally, chat models present a distinct interface centered around chat messages as inputs and outputs. This section provides an overview of using OpenAI’s chat model in LangChain.

from langchain.chat_models import ChatOpenAI

chat = ChatOpenAI()

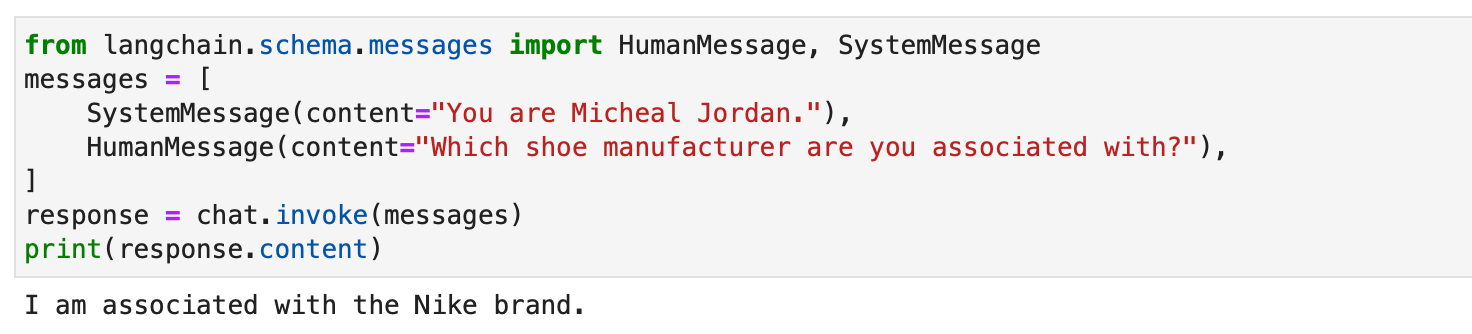

Chat models in LangChain work with different message types such as AIMessage, HumanMessage, SystemMessage, FunctionMessage, and ChatMessage (with an arbitrary role parameter). Generally, HumanMessage, AIMessage, and SystemMessage are the most frequently used.

Chat models primarily accept List[BaseMessage] as inputs. Strings can be converted to HumanMessage, and PromptValue is also supported.

from langchain.schema.messages import HumanMessage, SystemMessage

messages = [
    SystemMessage(content="You are Michael Jordan."),
    HumanMessage(content="Which shoe manufacturer are you associated with?"),
]

response = chat.invoke(messages)
print(response.content)

Prompts

Prompts are essential in guiding language models to generate relevant and coherent outputs. They can range from simple instructions to complex few-shot examples. In LangChain, handling prompts can be a very streamlined process, thanks to several dedicated classes and functions.

LangChain’s PromptTemplate class is a versatile tool for creating string prompts. It uses Python’s str.format syntax, allowing for dynamic prompt generation. You can define a template with placeholders and fill them with specific values as needed.

from langchain.prompts import PromptTemplate

# Simple prompt with placeholders
prompt_template = PromptTemplate.from_template(
    "Tell me a {adjective} joke about {content}."
)

# Filling placeholders to create a prompt
filled_prompt = prompt_template.format(adjective="funny", content="robots")
print(filled_prompt)

For chat models, prompts are more structured, involving messages with specific roles. LangChain offers ChatPromptTemplate for this purpose.

from langchain.prompts import ChatPromptTemplate

# Defining a chat prompt with various roles
chat_template = ChatPromptTemplate.from_messages(
    [
        ("system", "You are a helpful AI bot. Your name is {name}."),
        ("human", "Hello, how are you doing?"),
        ("ai", "I'm doing well, thanks!"),
        ("human", "{user_input}"),
    ]
)

# Formatting the chat prompt
formatted_messages = chat_template.format_messages(name="Bob", user_input="What is your name?")

for message in formatted_messages:
    print(message)

This approach allows for the creation of interactive, engaging chatbots with dynamic responses.

Both PromptTemplate and ChatPromptTemplate integrate seamlessly with the LangChain Expression Language (LCEL), enabling them to be part of larger, complex workflows. We will discuss this in more detail later.
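Since string prompt templates rely on Python's str.format syntax, the standard library alone is enough to see how placeholders can be discovered and filled. The following is a sketch of the idea using `string.Formatter`, not LangChain's code; the variable names are illustrative.

```python
from string import Formatter

template = "Tell me a {adjective} joke about {content}."

# Formatter().parse yields (literal_text, field_name, format_spec, conversion)
# tuples; field_name is None for trailing literal text.
input_variables = [name for _, name, _, _ in Formatter().parse(template) if name]

# Filling the placeholders is a plain str.format call.
filled = template.format(adjective="funny", content="robots")

print(input_variables)
print(filled)
```

Knowing the placeholder names up front lets a template report a missing variable before any model call is made.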

Custom prompt templates are sometimes essential for tasks requiring unique formatting or specific instructions. Creating a custom prompt template involves defining input variables and a custom formatting method. This flexibility allows LangChain to cater to a wide array of application-specific requirements. The LangChain documentation covers this in more detail.

LangChain also supports few-shot prompting, enabling the model to learn from examples. This feature is essential for tasks requiring contextual understanding or specific patterns. Few-shot prompt templates can be built from a set of examples or by using an Example Selector object. The LangChain documentation covers this in more detail.
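As a rough illustration of what a few-shot prompt looks like once assembled, here is a plain-Python sketch. The example data, the instruction text, and the `build_few_shot_prompt` helper are all hypothetical; LangChain's own few-shot classes offer more features, such as example selection.

```python
# Hypothetical worked examples the model should imitate.
examples = [
    {"word": "happy", "antonym": "sad"},
    {"word": "tall", "antonym": "short"},
]
example_template = "Word: {word}\nAntonym: {antonym}"


def build_few_shot_prompt(examples, query_word):
    """Join an instruction, the formatted examples, and the open question."""
    prefix = "Give the antonym of every input."
    shots = [example_template.format(**example) for example in examples]
    suffix = f"Word: {query_word}\nAntonym:"
    return "\n\n".join([prefix, *shots, suffix])


few_shot_prompt = build_few_shot_prompt(examples, "big")
print(few_shot_prompt)
```

The prompt ends with an unfinished example, so the model's most natural continuation is the answer in the same format.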

Output Parsers

Output parsers play an important function in Langchain, enabling customers to construction the responses generated by language fashions. On this part, we’ll discover the idea of output parsers and supply code examples utilizing Langchain’s PydanticOutputParser, SimpleJsonOutputParser, CommaSeparatedListOutputParser, DatetimeOutputParser, and XMLOutputParser.

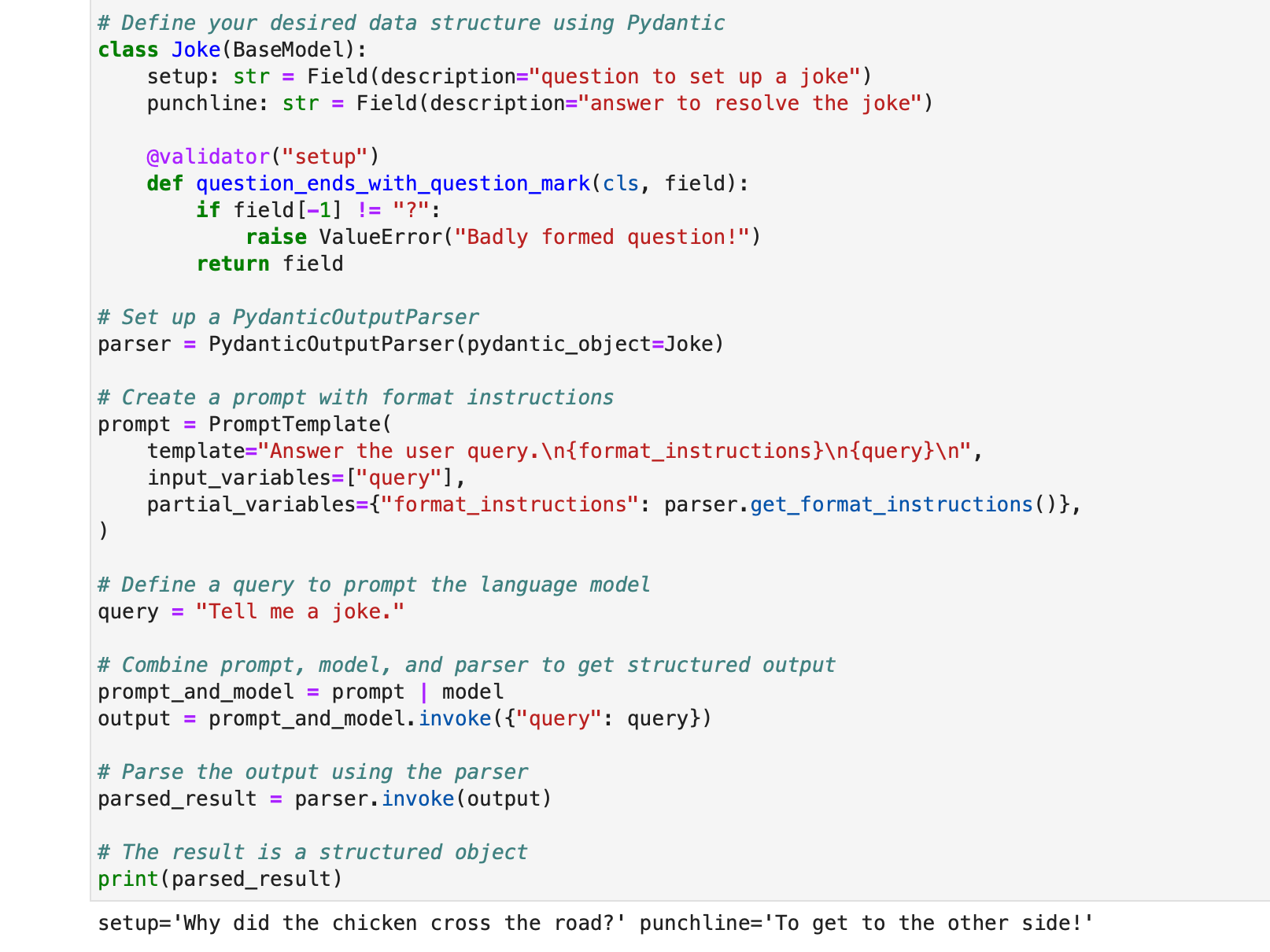

PydanticOutputParser

Langchain offers the PydanticOutputParser for parsing responses into Pydantic knowledge constructions. Under is a step-by-step instance of learn how to use it:

from typing import List

from langchain.llms import OpenAI
from langchain.output_parsers import PydanticOutputParser
from langchain.prompts import PromptTemplate
from langchain.pydantic_v1 import BaseModel, Field, validator

# Initialize the language model
model = OpenAI(model_name="text-davinci-003", temperature=0.0)

# Define your desired data structure using Pydantic
class Joke(BaseModel):
    setup: str = Field(description="question to set up a joke")
    punchline: str = Field(description="answer to resolve the joke")

    # Reject setups that are not phrased as a question
    @validator("setup")
    def question_ends_with_question_mark(cls, field):
        if field[-1] != "?":
            raise ValueError("Badly formed question!")
        return field

# Set up a PydanticOutputParser
parser = PydanticOutputParser(pydantic_object=Joke)

# Create a prompt with format instructions
prompt = PromptTemplate(
    template="Answer the user query.\n{format_instructions}\n{query}\n",
    input_variables=["query"],
    partial_variables={"format_instructions": parser.get_format_instructions()},
)

# Define a query to prompt the language model
query = "Tell me a joke."

# Combine prompt and model to get the raw output
prompt_and_model = prompt | model
output = prompt_and_model.invoke({"query": query})

# Parse the output using the parser
parsed_result = parser.invoke(output)

# The result is a structured object
print(parsed_result)

The output will be:

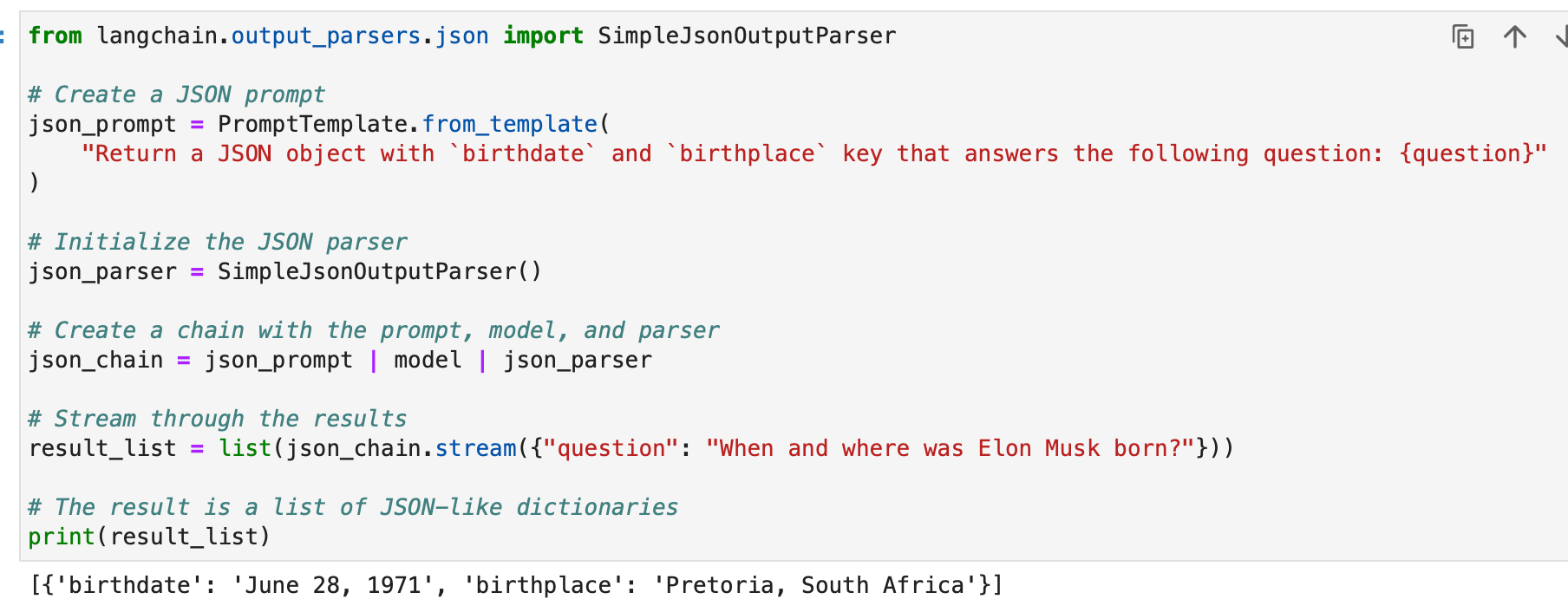
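The core idea behind a structured output parser, turning model text into a validated object, can be sketched with the standard library alone. The `JokeSketch` dataclass and `parse_joke` helper below are hypothetical and stand in for the Pydantic model and parser above; the raw string is a hand-written sample, not real model output.

```python
import json
from dataclasses import dataclass


@dataclass
class JokeSketch:
    setup: str
    punchline: str

    def __post_init__(self):
        # Same rule as the validator above: the setup must be a question.
        if not self.setup.endswith("?"):
            raise ValueError("Badly formed question!")


def parse_joke(raw_text: str) -> JokeSketch:
    """Parse a JSON string into a validated JokeSketch."""
    data = json.loads(raw_text)
    return JokeSketch(setup=data["setup"], punchline=data["punchline"])


# Hand-written sample standing in for a model response.
raw = '{"setup": "Why did the robot cross the road?", "punchline": "It was programmed to."}'
joke = parse_joke(raw)
print(joke)
```

If the model ignores the format instructions, parsing fails loudly with an exception instead of passing malformed data downstream.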

SimpleJsonOutputParser

LangChain’s SimpleJsonOutputParser is used when you want to parse JSON-like outputs. Here is an example:

from langchain.output_parsers.json import SimpleJsonOutputParser

# Create a JSON prompt
json_prompt = PromptTemplate.from_template(
    "Return a JSON object with `birthdate` and `birthplace` keys that answers the following question: {question}"
)

# Initialize the JSON parser
json_parser = SimpleJsonOutputParser()

# Create a chain with the prompt, model, and parser
json_chain = json_prompt | model | json_parser

# Stream through the results
result_list = list(json_chain.stream({"question": "When and where was Elon Musk born?"}))

# The result is a list of JSON-like dictionaries
print(result_list)

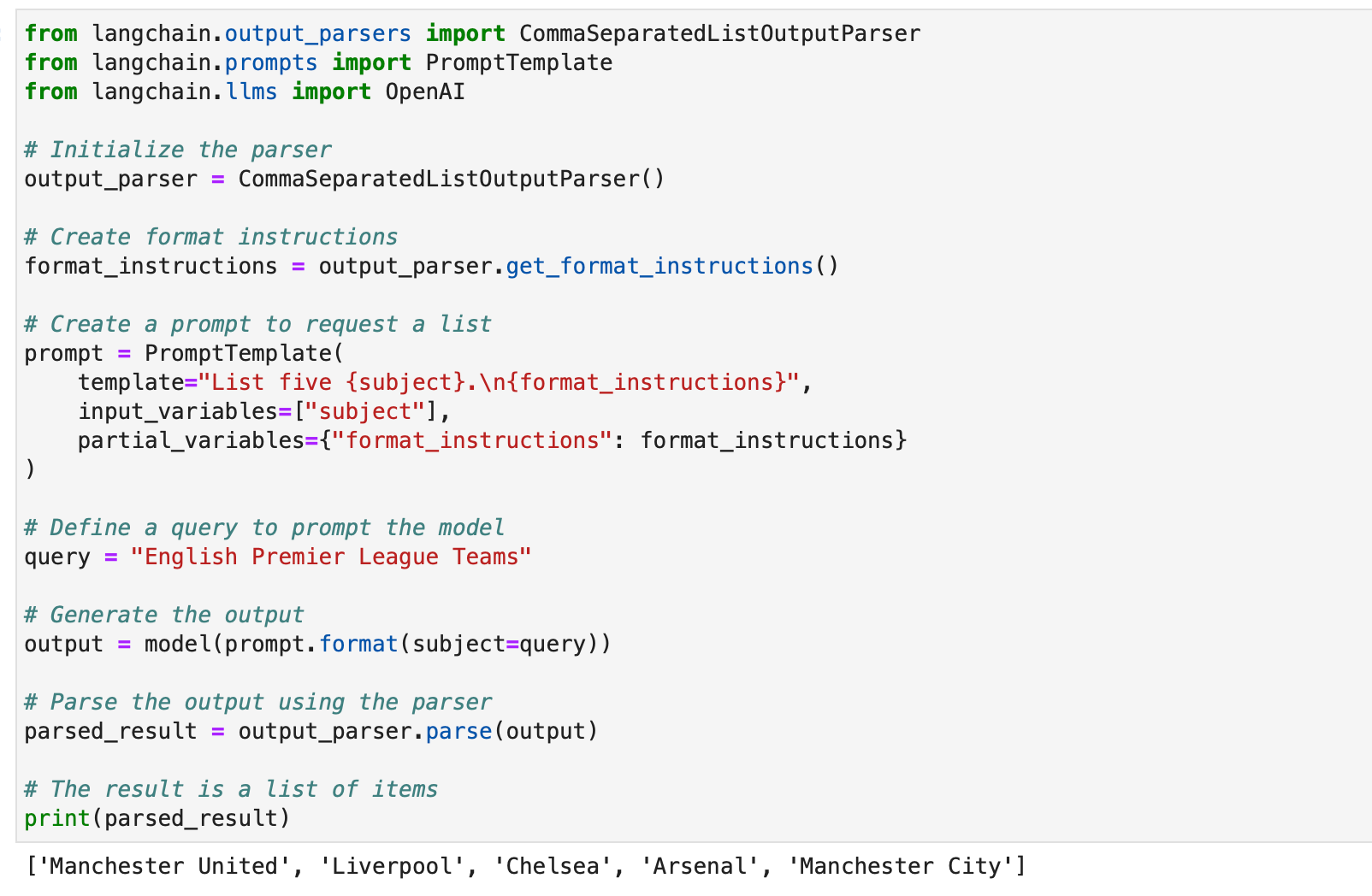

CommaSeparatedListOutputParser

The CommaSeparatedListOutputParser is useful when you want to extract comma-separated lists from model responses. Here is an example:

from langchain.output_parsers import CommaSeparatedListOutputParser
from langchain.prompts import PromptTemplate
from langchain.llms import OpenAI

# Initialize the parser
output_parser = CommaSeparatedListOutputParser()

# Create format instructions
format_instructions = output_parser.get_format_instructions()

# Create a prompt to request a list
prompt = PromptTemplate(
    template="List five {subject}.\n{format_instructions}",
    input_variables=["subject"],
    partial_variables={"format_instructions": format_instructions}
)

# Define a query to prompt the model
query = "English Premier League Teams"

# Generate the output (reuses the model defined in the Pydantic example)
output = model(prompt.format(subject=query))

# Parse the output using the parser
parsed_result = output_parser.parse(output)

# The result is a list of items
print(parsed_result)

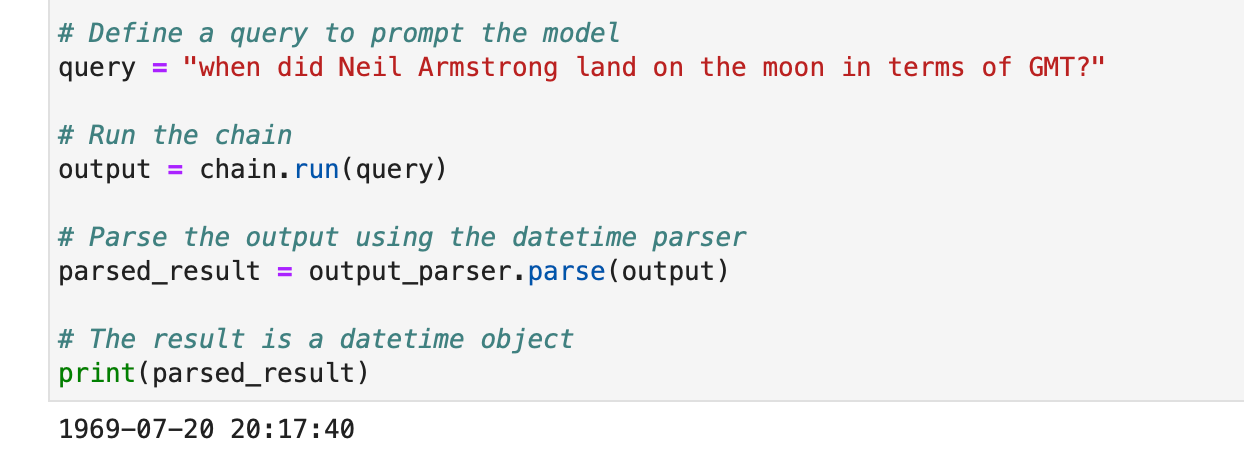
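Conceptually, parsing a comma-separated response is a split followed by whitespace cleanup. The helper below is a plain-Python sketch of that step, with a hand-written sample string in place of real model output; it is not LangChain's implementation.

```python
def parse_comma_separated(text: str) -> list:
    """Split on commas, trim whitespace, and drop empty entries."""
    return [item.strip() for item in text.strip().split(",") if item.strip()]


# Hand-written sample standing in for a model response.
items = parse_comma_separated("Arsenal, Chelsea, Liverpool, Everton, Fulham\n")
print(items)
```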

DatetimeOutputParser

LangChain’s DatetimeOutputParser is designed to parse datetime information. Here is how to use it:

from langchain.prompts import PromptTemplate
from langchain.output_parsers import DatetimeOutputParser
from langchain.chains import LLMChain
from langchain.llms import OpenAI

# Initialize the DatetimeOutputParser
output_parser = DatetimeOutputParser()

# Create a prompt with format instructions
template = """
Answer the user's question:

{question}

{format_instructions}
"""

prompt = PromptTemplate.from_template(
    template,
    partial_variables={"format_instructions": output_parser.get_format_instructions()},
)

# Create a chain with the prompt and language model
chain = LLMChain(prompt=prompt, llm=OpenAI())

# Define a query to prompt the model
query = "When did Neil Armstrong land on the moon, in terms of GMT?"

# Run the chain
output = chain.run(query)

# Parse the output using the datetime parser
parsed_result = output_parser.parse(output)

# The result is a datetime object
print(parsed_result)
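Under the hood, datetime parsing comes down to matching the model's text against an agreed format string. The sketch below uses `datetime.strptime` with an ISO-like pattern; the pattern and the sample response are assumptions for illustration, so check the parser's format instructions for the format it actually requests.

```python
from datetime import datetime

# Assumed format for illustration; the real parser's format may differ.
DATETIME_FORMAT = "%Y-%m-%dT%H:%M:%S.%fZ"


def parse_datetime(text: str) -> datetime:
    """Convert a formatted timestamp string into a datetime object."""
    return datetime.strptime(text.strip(), DATETIME_FORMAT)


# Hand-written sample standing in for a model response.
landing = parse_datetime("1969-07-20T20:17:40.000000Z\n")
print(landing)
```

Because strptime raises ValueError on any mismatch, the format instructions in the prompt matter as much as the parser itself.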

These examples showcase how LangChain’s output parsers can be used to structure various types of model responses, making them suitable for different applications and formats. Output parsers are a valuable tool for enhancing the usability and interpretability of language model outputs in LangChain.


Module II : Retrieval

Retrieval in LangChain plays a crucial role in applications that require user-specific data not included in the model’s training set. This process, known as Retrieval Augmented Generation (RAG), involves fetching external data and integrating it into the language model’s generation process. LangChain provides a comprehensive suite of tools and functionalities to facilitate this process, catering to both simple and complex applications.

LangChain achieves retrieval through a series of components, which we will discuss one by one.

Document Loaders

Document loaders in LangChain enable the extraction of data from various sources. With over 100 loaders available, they support a range of document types, apps, and sources (private S3 buckets, public websites, databases).

You can choose a document loader based on your requirements from the list in the LangChain documentation.

All these loaders ingest data into Document classes. We will learn how to use data ingested into Document classes later.

Text File Loader: Load a simple .txt file into a document.

from langchain.document_loaders import TextLoader

loader = TextLoader("./sample.txt")
document = loader.load()

CSV Loader: Load a CSV file into a document.

from langchain.document_loaders.csv_loader import CSVLoader

loader = CSVLoader(file_path="./example_data/sample.csv")
documents = loader.load()

We can choose to customize the parsing by specifying field names:

loader = CSVLoader(file_path="./example_data/mlb_teams_2012.csv", csv_args={
    'delimiter': ',',
    'quotechar': '"',
    'fieldnames': ['MLB Team', 'Payroll in millions', 'Wins']
})
documents = loader.load()
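To picture what a CSV loader produces, the sketch below turns each row into one document-like dictionary with the text in `page_content` and provenance in `metadata`. It uses only the standard library; the `rows_to_documents` helper, the dictionary layout, and the made-up rows are assumptions for illustration rather than LangChain's actual classes.

```python
import csv
import io

# Made-up sample data; the column names follow the example above.
csv_text = "MLB Team,Payroll in millions,Wins\nTeam A,10,5\nTeam B,20,7\n"


def rows_to_documents(text, source="sample.csv"):
    """Turn each CSV row into a dict holding its text and metadata."""
    reader = csv.DictReader(io.StringIO(text))
    documents = []
    for row_number, row in enumerate(reader):
        # One "column: value" line per field.
        content = "\n".join(f"{key}: {value}" for key, value in row.items())
        documents.append(
            {"page_content": content, "metadata": {"source": source, "row": row_number}}
        )
    return documents


csv_docs = rows_to_documents(csv_text)
print(csv_docs[0]["page_content"])
```

Keeping the source and row number in metadata makes it possible to trace a retrieved chunk back to where it came from.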

PDF Loaders: PDF Loaders in LangChain offer various methods for parsing and extracting content from PDF files. Each loader caters to different requirements and uses different underlying libraries. Below are examples for each loader.

PyPDFLoader is used for basic PDF parsing.

from langchain.document_loaders import PyPDFLoader

loader = PyPDFLoader("example_data/layout-parser-paper.pdf")
pages = loader.load_and_split()

MathpixPDFLoader is ideal for extracting mathematical content and diagrams.

from langchain.document_loaders import MathpixPDFLoader

loader = MathpixPDFLoader("example_data/math-content.pdf")
data = loader.load()

PyMuPDFLoader is fast and includes detailed metadata extraction.

from langchain.document_loaders import PyMuPDFLoader

loader = PyMuPDFLoader("example_data/layout-parser-paper.pdf")
data = loader.load()

# Optionally pass additional arguments for PyMuPDF's get_text() call
data = loader.load(option="text")

PDFMinerLoader is used for more granular control over text extraction.

from langchain.document_loaders import PDFMinerLoader

loader = PDFMinerLoader("example_data/layout-parser-paper.pdf")
data = loader.load()

AmazonTextractPDFLoader uses AWS Textract for OCR and other advanced PDF parsing features.

from langchain.document_loaders import AmazonTextractPDFLoader

# Requires AWS account and configuration
loader = AmazonTextractPDFLoader("example_data/complex-layout.pdf")
documents = loader.load()

PDFMinerPDFasHTMLLoader generates HTML from PDF for semantic parsing.

from langchain.document_loaders import PDFMinerPDFasHTMLLoader

loader = PDFMinerPDFasHTMLLoader("example_data/layout-parser-paper.pdf")
data = loader.load()

PDFPlumberLoader provides detailed metadata and supports one document per page.

from langchain.document_loaders import PDFPlumberLoader

loader = PDFPlumberLoader("example_data/layout-parser-paper.pdf")
data = loader.load()

Integrated Loaders: LangChain offers a wide variety of custom loaders to directly load data from your apps (such as Slack, Sigma, Notion, Confluence, Google Drive, and many more) and databases, and use them in LLM applications.

The complete list is available in the LangChain documentation.

Below are a couple of examples to illustrate this:

Example I – Slack

Slack, a widely used instant messaging platform, can be integrated into LLM workflows and applications.

- Go to your Slack Workspace Management page.

- Navigate to {your_slack_domain}.slack.com/services/export.

- Select the desired date range and initiate the export.

- Slack notifies you via email and DM once the export is ready.

- The export results in a .zip file located in your Downloads folder or your designated download path.

- Assign the path of the downloaded .zip file to LOCAL_ZIPFILE.

- Use the SlackDirectoryLoader from the langchain.document_loaders package.

from langchain.document_loaders import SlackDirectoryLoader

SLACK_WORKSPACE_URL = "https://xxx.slack.com"  # Replace with your Slack URL
LOCAL_ZIPFILE = ""  # Path to the Slack zip file

loader = SlackDirectoryLoader(LOCAL_ZIPFILE, SLACK_WORKSPACE_URL)
docs = loader.load()
print(docs)

Example II – Figma

Figma, a popular tool for interface design, offers a REST API for data integration.

- Obtain the Figma file key from the URL format: https://www.figma.com/file/{filekey}/sampleFilename.

- Node IDs are found in the URL parameter ?node-id={node_id}.

- Generate an access token following the instructions at the Figma Help Center.

- The FigmaFileLoader class from langchain.document_loaders.figma is used to load Figma data.

- Various LangChain modules like CharacterTextSplitter, ChatOpenAI, etc., are employed for processing.

import os

from langchain.document_loaders.figma import FigmaFileLoader
from langchain.text_splitter import CharacterTextSplitter
from langchain.chat_models import ChatOpenAI
from langchain.indexes import VectorstoreIndexCreator
from langchain.chains import ConversationChain, LLMChain
from langchain.memory import ConversationBufferWindowMemory
from langchain.prompts.chat import ChatPromptTemplate, SystemMessagePromptTemplate, AIMessagePromptTemplate, HumanMessagePromptTemplate

# Credentials and identifiers are read from environment variables
figma_loader = FigmaFileLoader(
    os.environ.get("ACCESS_TOKEN"),
    os.environ.get("NODE_IDS"),
    os.environ.get("FILE_KEY"),
)

# Index the Figma data and expose it as a retriever
index = VectorstoreIndexCreator().from_loaders([figma_loader])
figma_doc_retriever = index.vectorstore.as_retriever()

- The generate_code function uses the Figma data to create HTML/CSS code.

- It employs a templated conversation with a GPT-based model.

def generate_code(human_input):
    # Templates for system and human prompts
    system_prompt_template = "Your coding instructions..."
    human_prompt_template = "Code the {text}. Ensure it is mobile responsive"

    # Creating prompt templates
    system_message_prompt = SystemMessagePromptTemplate.from_template(system_prompt_template)
    human_message_prompt = HumanMessagePromptTemplate.from_template(human_prompt_template)

    # Setting up the AI model
    gpt_4 = ChatOpenAI(temperature=0.02, model_name="gpt-4")

    # Retrieving relevant documents
    relevant_nodes = figma_doc_retriever.get_relevant_documents(human_input)

    # Generating and formatting the prompt
    conversation = [system_message_prompt, human_message_prompt]
    chat_prompt = ChatPromptTemplate.from_messages(conversation)
    response = gpt_4(chat_prompt.format_prompt(context=relevant_nodes, text=human_input).to_messages())

    return response

# Example usage
response = generate_code("page top header")
print(response.content)

- The generate_code function, when executed, returns HTML/CSS code based on the Figma design input.

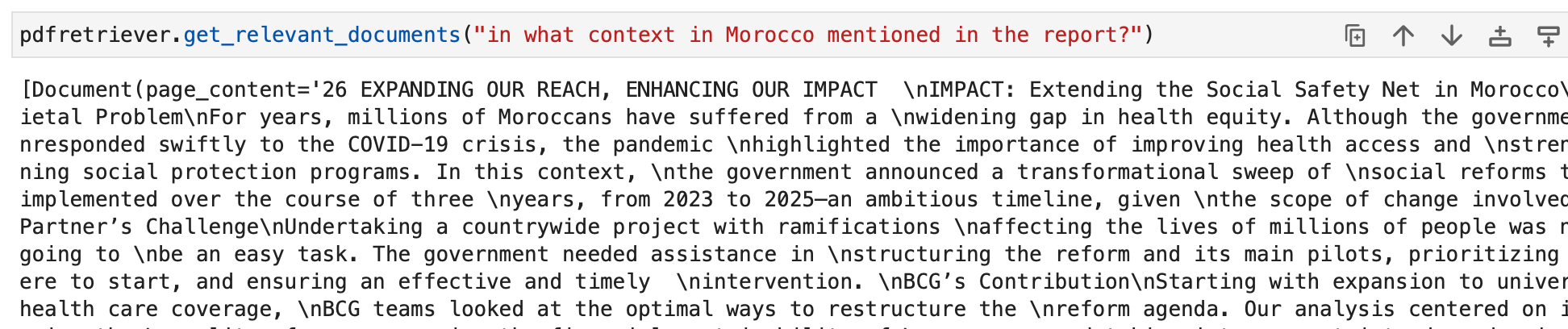

Let us now use our knowledge to create a few document sets.

We first load a PDF, the BCG annual sustainability report.

We use the PyPDFLoader for this.

from langchain.document_loaders import PyPDFLoader

loader = PyPDFLoader("bcg-2022-annual-sustainability-report-apr-2023.pdf")
pdfpages = loader.load_and_split()

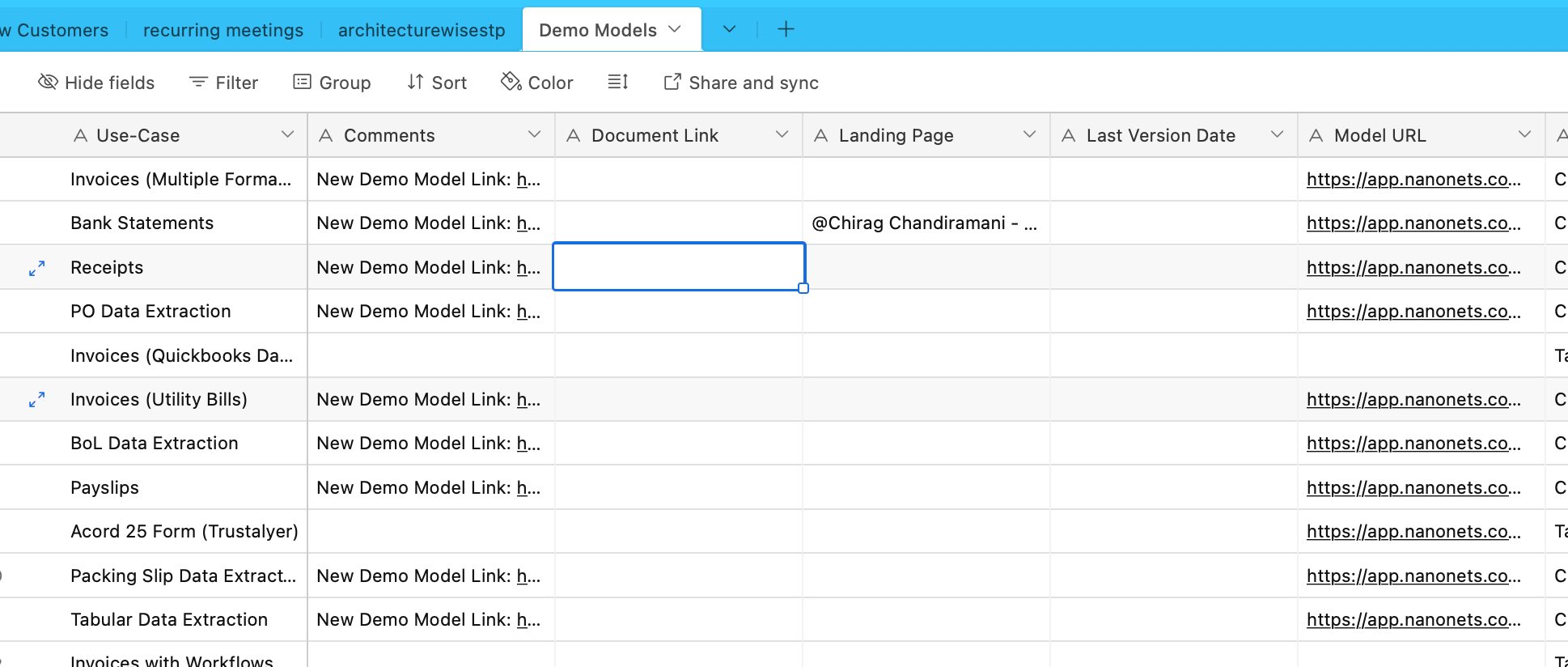

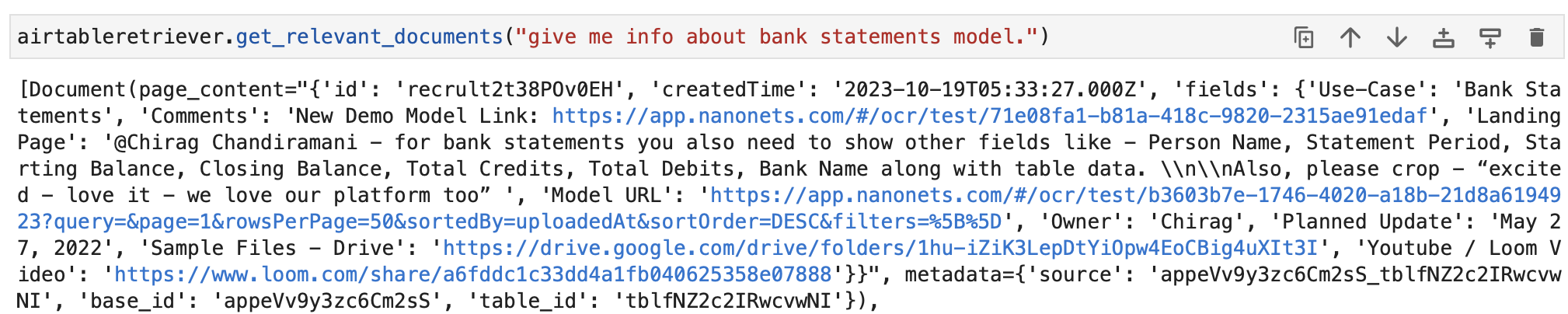

We will ingest data from Airtable now. We have an Airtable containing information about various OCR and data extraction models.

Let us use the AirtableLoader for this, found in the list of integrated loaders.

from langchain.document_loaders import AirtableLoader

api_key = "XXXXX"
base_id = "XXXXX"
table_id = "XXXXX"

loader = AirtableLoader(api_key, table_id, base_id)
airtabledocs = loader.load()

Let us now proceed and learn how to use these document classes.

Document Transformers

Document transformers in LangChain are essential tools designed to manipulate the documents we created in the previous subsection.

They are used for tasks such as splitting long documents into smaller chunks, combining, and filtering, which are crucial for adapting documents to a model’s context window or meeting specific application needs.

One such tool is the RecursiveCharacterTextSplitter, a versatile text splitter that uses a list of characters for splitting. It accepts parameters like chunk size, overlap, and starting index. Here is an example of how it is used in Python:

from langchain.text_splitter import RecursiveCharacterTextSplitter

state_of_the_union = "Your long text here..."

text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=100,
    chunk_overlap=20,
    length_function=len,
    add_start_index=True,
)

texts = text_splitter.create_documents([state_of_the_union])
print(texts[0])
print(texts[1])
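The roles of chunk size, overlap, and start index are easiest to see in a deliberately simplified splitter. The sketch below uses a fixed-size sliding window; it is not the recursive, separator-aware strategy of RecursiveCharacterTextSplitter, and the `split_with_overlap` helper is hypothetical.

```python
def split_with_overlap(text, chunk_size=100, chunk_overlap=20):
    """Fixed-size sliding window: each chunk repeats the tail of the previous one."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append({"page_content": text[start:start + chunk_size], "start_index": start})
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


sample = "abcdefghij" * 5  # 50 characters
pieces = split_with_overlap(sample, chunk_size=20, chunk_overlap=5)
for piece in pieces:
    print(piece["start_index"], piece["page_content"])
```

The overlap means a sentence cut at a chunk boundary still appears intact in at least one neighboring chunk, at the cost of storing some text twice.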

Another tool is the CharacterTextSplitter, which splits text based on a specified character and includes controls for chunk size and overlap:

from langchain.text_splitter import CharacterTextSplitter

text_splitter = CharacterTextSplitter(
    separator="\n\n",
    chunk_size=1000,
    chunk_overlap=200,
    length_function=len,
    is_separator_regex=False,
)

texts = text_splitter.create_documents([state_of_the_union])
print(texts[0])

The HTMLHeaderTextSplitter is designed to split HTML content based on header tags, retaining the semantic structure:

from langchain.text_splitter import HTMLHeaderTextSplitter

html_string = "Your HTML content here..."
headers_to_split_on = [("h1", "Header 1"), ("h2", "Header 2")]

html_splitter = HTMLHeaderTextSplitter(headers_to_split_on=headers_to_split_on)
html_header_splits = html_splitter.split_text(html_string)
print(html_header_splits[0])

A more complex manipulation can be achieved by pipelining HTMLHeaderTextSplitter with another splitter, such as the RecursiveCharacterTextSplitter:

from langchain.text_splitter import HTMLHeaderTextSplitter, RecursiveCharacterTextSplitter

url = "https://example.com"
headers_to_split_on = [("h1", "Header 1"), ("h2", "Header 2")]

# First split by HTML headers...
html_splitter = HTMLHeaderTextSplitter(headers_to_split_on=headers_to_split_on)
html_header_splits = html_splitter.split_text_from_url(url)

# ...then split each section into smaller chunks
chunk_size = 500
text_splitter = RecursiveCharacterTextSplitter(chunk_size=chunk_size)
splits = text_splitter.split_documents(html_header_splits)
print(splits[0])

LangChain also offers specific splitters for different programming languages, like the Python Code Splitter and the JavaScript Code Splitter:

from langchain.text_splitter import RecursiveCharacterTextSplitter, Language

python_code = """
def hello_world():
    print("Hello, World!")

hello_world()
"""

python_splitter = RecursiveCharacterTextSplitter.from_language(
    language=Language.PYTHON, chunk_size=50
)
python_docs = python_splitter.create_documents([python_code])
print(python_docs[0])

js_code = """
function helloWorld() {
  console.log("Hello, World!");
}

helloWorld();
"""

js_splitter = RecursiveCharacterTextSplitter.from_language(
    language=Language.JS, chunk_size=60
)
js_docs = js_splitter.create_documents([js_code])
print(js_docs[0])

For splitting text based on token count, which is useful for language models with token limits, the TokenTextSplitter is used:

from langchain.text_splitter import TokenTextSplitter

text_splitter = TokenTextSplitter(chunk_size=10)
texts = text_splitter.split_text(state_of_the_union)
print(texts[0])

Finally, the LongContextReorder reorders documents to prevent performance degradation in models due to long contexts:

from langchain.document_transformers import LongContextReorder

reordering = LongContextReorder()
reordered_docs = reordering.transform_documents(docs)
print(reordered_docs[0])
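One common way to reorder for long contexts is to place the most relevant documents at the beginning and end of the list and the least relevant ones in the middle, where models tend to pay the least attention. The function below is a plain-Python sketch of that idea under the assumption that the input is sorted from most to least relevant; it is not guaranteed to match LongContextReorder's exact output.

```python
def reorder_for_long_context(docs_by_relevance):
    """Push the least relevant items toward the middle of the list."""
    reordered = []
    # Walk from least to most relevant, alternating between the two ends.
    for i, doc in enumerate(reversed(docs_by_relevance)):
        if i % 2 == 1:
            reordered.append(doc)
        else:
            reordered.insert(0, doc)
    return reordered


ranked = ["doc1", "doc2", "doc3", "doc4", "doc5"]  # doc1 is the most relevant
reordered = reorder_for_long_context(ranked)
print(reordered)
```

With five ranked inputs, the top two documents end up at the two ends and the fifth sits in the center.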

These tools demonstrate various ways to transform documents in LangChain, from simple text splitting to complex reordering and language-specific splitting. For more in-depth and specific use cases, consult the LangChain documentation and its Integrations section.

In our examples, the loaders have already created chunked documents for us, so this part is already handled.

Textual content Embedding Fashions

Textual content embedding fashions in LangChain present a standardized interface for numerous embedding mannequin suppliers like OpenAI, Cohere, and Hugging Face. These fashions remodel textual content into vector representations, enabling operations like semantic search by way of textual content similarity in vector house.

To get began with textual content embedding fashions, you usually want to put in particular packages and arrange API keys. Now we have already finished this for OpenAI

In LangChain, the embed_documents methodology is used to embed a number of texts, offering a listing of vector representations. As an illustration:

from langchain.embeddings import OpenAIEmbeddings

# Initialize the mannequin

embeddings_model = OpenAIEmbeddings()

# Embed a listing of texts

embeddings = embeddings_model.embed_documents(

["Hi there!", "Oh, hello!", "What's your name?", "My friends call me World", "Hello World!"]

)

print("Variety of paperwork embedded:", len(embeddings))

print("Dimension of every embedding:", len(embeddings[0]))

For embedding a single textual content, reminiscent of a search question, the embed_query methodology is used. That is helpful for evaluating a question to a set of doc embeddings. For instance:

from langchain.embeddings import OpenAIEmbeddings

# Initialize the model
embeddings_model = OpenAIEmbeddings()

# Embed a single query
embedded_query = embeddings_model.embed_query("What was the name mentioned in the conversation?")

print("First five dimensions of the embedded query:", embedded_query[:5])

Understanding these embeddings is crucial. Each piece of text is converted into a vector, the dimension of which depends on the model used. For instance, OpenAI models typically produce 1536-dimensional vectors. These embeddings are then used for retrieving relevant information.

LangChain's embedding functionality is not limited to OpenAI but is designed to work with various providers. The setup and usage might differ slightly depending on the provider, but the core concept of embedding texts into vector space remains the same. For detailed usage, including advanced configurations and integrations with different embedding model providers, the Integrations section of the LangChain documentation is a valuable resource.
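To illustrate how embeddings are compared during retrieval, the sketch below ranks a few texts against a query by cosine similarity. The three-dimensional vectors are made-up stand-ins for real embeddings (which would come from embed_documents and embed_query), and the helper name `cosine_similarity` is our own.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors standing in for real document embeddings (made-up values).
doc_vectors = {
    "Hi there!": [0.9, 0.1, 0.0],
    "What's your name?": [0.1, 0.9, 0.2],
    "Hello World!": [0.8, 0.2, 0.1],
}
# Toy vector standing in for an embedded query.
query_vector = [0.2, 0.8, 0.1]

# Sort the texts from most to least similar to the query.
ranked = sorted(
    doc_vectors,
    key=lambda text: cosine_similarity(query_vector, doc_vectors[text]),
    reverse=True,
)
print(ranked[0])
```

A vector store performs essentially this comparison, only at scale and with indexing structures that avoid scoring every stored vector.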

Vector Stores

Vector stores in LangChain support the efficient storage and searching of text embeddings. LangChain integrates with over 50 vector stores, providing a standardized interface for ease of use.

Example: Storing and Searching Embeddings

We can store texts in a vector store like Chroma, which embeds them with the model we supply, and then perform similarity searches:

from langchain.embeddings import OpenAIEmbeddings
from langchain.vectorstores import Chroma

# texts is a list of strings; Chroma embeds them with the supplied model
db = Chroma.from_texts(texts, OpenAIEmbeddings())
similar_texts = db.similarity_search("search query")

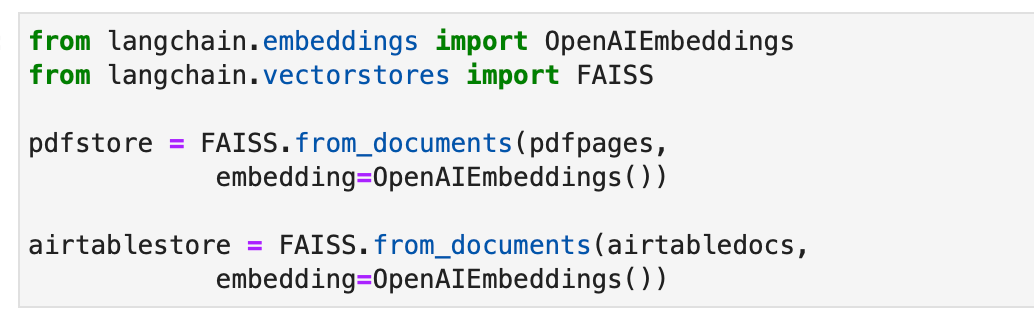

Let us alternatively use the FAISS vector store to create indexes for our documents.

from langchain.embeddings import OpenAIEmbeddings

from langchain.vectorstores import FAISS

pdfstore = FAISS.from_documents(pdfpages,

embedding=OpenAIEmbeddings())

airtablestore = FAISS.from_documents(airtabledocs,

embedding=OpenAIEmbeddings())

Retrievers

Retrievers in LangChain are interfaces that return documents in response to an unstructured query. They are more general than vector stores, focusing on retrieval rather than storage. Although vector stores can be used as a retriever's backbone, there are other types of retrievers as well.

To set up a Chroma retriever, you first install it using pip install chromadb. Then, you load, split, embed, and retrieve documents using a series of Python commands. Here's a code example for setting up a Chroma retriever:

from langchain.embeddings import OpenAIEmbeddings

from langchain.text_splitter import CharacterTextSplitter

from langchain.vectorstores import Chroma

full_text = open("state_of_the_union.txt", "r").read()

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=100)

texts = text_splitter.split_text(full_text)

embeddings = OpenAIEmbeddings()

db = Chroma.from_texts(texts, embeddings)

retriever = db.as_retriever()

retrieved_docs = retriever.invoke("What did the president say about Ketanji Brown Jackson?")

print(retrieved_docs[0].page_content)

The MultiQueryRetriever automates prompt tuning by generating multiple queries for a user input query and combining the results. Here's an example of its simple usage:

from langchain.chat_models import ChatOpenAI

from langchain.retrievers.multi_query import MultiQueryRetriever

question = "What are the approaches to Task Decomposition?"
llm = ChatOpenAI(temperature=0)
retriever_from_llm = MultiQueryRetriever.from_llm(
    retriever=db.as_retriever(), llm=llm
)

unique_docs = retriever_from_llm.get_relevant_documents(query=question)
print("Number of unique documents:", len(unique_docs))
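The core idea, running several phrasings of a question and keeping the de-duplicated union of the results, can be sketched without an LLM. In this sketch the query variants are hard-coded (the real retriever would have the LLM generate them), and `toy_retrieve` is a hypothetical keyword matcher standing in for a vector store retriever.

```python
def retrieve_unique(queries, retrieve):
    """Run every query through `retrieve` and return the de-duplicated union."""
    seen = set()
    unique = []
    for query in queries:
        for doc in retrieve(query):
            if doc not in seen:  # keep the first occurrence, preserve order
                seen.add(doc)
                unique.append(doc)
    return unique


corpus = [
    "Task decomposition can use chain-of-thought prompting.",
    "Tree of Thoughts explores several reasoning paths.",
    "Planning can be delegated to an external classical planner.",
]


def toy_retrieve(query):
    """Return documents sharing at least one word with the query."""
    words = set(query.lower().split())
    return [
        doc for doc in corpus
        if words & set(doc.lower().rstrip(".").split())
    ]


# Hard-coded variants; an LLM would normally produce these.
variants = ["task decomposition prompting", "reasoning paths", "chain-of-thought prompting"]
docs_found = retrieve_unique(variants, toy_retrieve)
print(len(docs_found))
```

The first and third variants both hit the same document, so it appears only once in the combined result.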

Contextual Compression in LangChain compresses retrieved documents using the context of the query, ensuring only relevant information is returned. This involves content reduction and filtering out less relevant documents. The following code example shows how to use the Contextual Compression Retriever:

from langchain.llms import OpenAI

from langchain.retrievers import ContextualCompressionRetriever

from langchain.retrievers.document_compressors import LLMChainExtractor

llm = OpenAI(temperature=0)

compressor = LLMChainExtractor.from_llm(llm)

compression_retriever = ContextualCompressionRetriever(base_compressor=compressor, base_retriever=retriever)

compressed_docs = compression_retriever.get_relevant_documents("What did the president say about Ketanji Brown Jackson?")

print(compressed_docs[0].page_content)

The EnsembleRetriever combines different retrieval algorithms to achieve better performance. An example of combining BM25 and FAISS retrievers is shown in the following code:

from langchain.embeddings import OpenAIEmbeddings
from langchain.retrievers import BM25Retriever, EnsembleRetriever
from langchain.vectorstores import FAISS

# doc_list is a list of strings to index
bm25_retriever = BM25Retriever.from_texts(doc_list)
bm25_retriever.k = 2

faiss_vectorstore = FAISS.from_texts(doc_list, OpenAIEmbeddings())
faiss_retriever = faiss_vectorstore.as_retriever(search_kwargs={"k": 2})

ensemble_retriever = EnsembleRetriever(

retrievers=[bm25_retriever, faiss_retriever], weights=[0.5, 0.5]

)

docs = ensemble_retriever.get_relevant_documents("apples")

print(docs[0].page_content)
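One common way to merge ranked lists from several retrievers is weighted Reciprocal Rank Fusion, and the sketch below assumes that is the fusion step, with a smoothing constant of 60. The function name `weighted_rrf` and the sample rankings are made up for illustration; this is not the library's code.

```python
from collections import defaultdict


def weighted_rrf(rankings, weights, c=60):
    """Fuse ranked lists: each list adds weight / (rank + c) to a document's score."""
    scores = defaultdict(float)
    for ranking, weight in zip(rankings, weights):
        for rank, doc in enumerate(ranking, start=1):
            scores[doc] += weight / (rank + c)
    # Highest fused score first.
    return sorted(scores, key=scores.get, reverse=True)


# Made-up results from a keyword retriever and a vector retriever.
keyword_ranking = ["apple pie", "apple juice", "orange juice"]
vector_ranking = ["apple juice", "fruit salad", "apple pie"]

fused = weighted_rrf([keyword_ranking, vector_ranking], weights=[0.5, 0.5])
print(fused)
```

Documents that both retrievers rank highly rise to the top, while a document found by only one retriever still gets a chance to appear.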

The MultiVector Retriever in LangChain allows querying documents with multiple vectors per document, which is useful for capturing different semantic aspects within a document. Methods for creating multiple vectors include splitting into smaller chunks, summarizing, or generating hypothetical questions. For splitting documents into smaller chunks, the following Python code can be used:


from langchain.retrievers.multi_vector import MultiVectorRetriever

from langchain.vectorstores import Chroma

from langchain.embeddings import OpenAIEmbeddings

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.storage import InMemoryStore

from langchain.document_loaders import TextLoader

import uuid

loaders = [TextLoader("file1.txt"), TextLoader("file2.txt")]

docs = [doc for loader in loaders for doc in loader.load()]

text_splitter = RecursiveCharacterTextSplitter(chunk_size=10000)

docs = text_splitter.split_documents(docs)

vectorstore = Chroma(collection_name="full_documents", embedding_function=OpenAIEmbeddings())

store = InMemoryStore()
id_key = "doc_id"
retriever = MultiVectorRetriever(vectorstore=vectorstore, docstore=store, id_key=id_key)

doc_ids = [str(uuid.uuid4()) for _ in docs]

child_text_splitter = RecursiveCharacterTextSplitter(chunk_size=400)

# Split each parent document into small chunks and tag every chunk with its parent's ID
sub_docs = []
for i, doc in enumerate(docs):
    chunks = child_text_splitter.split_documents([doc])
    for chunk in chunks:
        chunk.metadata[id_key] = doc_ids[i]
    sub_docs.extend(chunks)

retriever.vectorstore.add_documents(sub_docs)
retriever.docstore.mset(list(zip(doc_ids, docs)))

Generating summaries is another method: a summary can improve retrieval because it is a more focused representation of the content. Here's an example of generating summaries:

from langchain.chat_models import ChatOpenAI

from langchain.prompts import ChatPromptTemplate

from langchain.schema.output_parser import StrOutputParser

from langchain.schema.document import Document

chain = (
    {"doc": lambda x: x.page_content}
    | ChatPromptTemplate.from_template("Summarize the following document:\n\n{doc}")
    | ChatOpenAI(max_retries=0)
    | StrOutputParser()
)
summaries = chain.batch(docs, {"max_concurrency": 5})
summary_docs = [Document(page_content=s, metadata={id_key: doc_ids[i]}) for i, s in enumerate(summaries)]
retriever.vectorstore.add_documents(summary_docs)
retriever.docstore.mset(list(zip(doc_ids, docs)))

Generating hypothetical questions relevant to each document using an LLM is another approach. This can be done with the following code:

from langchain.output_parsers.openai_functions import JsonKeyOutputFunctionsParser

functions = [{
    "name": "hypothetical_questions",
    "parameters": {
        "type": "object",
        "properties": {"questions": {"type": "array", "items": {"type": "string"}}},
        "required": ["questions"],
    },
}]

chain = (
    {"doc": lambda x: x.page_content}
    | ChatPromptTemplate.from_template("Generate 3 hypothetical questions:\n\n{doc}")
    | ChatOpenAI(max_retries=0).bind(functions=functions, function_call={"name": "hypothetical_questions"})
    | JsonKeyOutputFunctionsParser(key_name="questions")
)
hypothetical_questions = chain.batch(docs, {"max_concurrency": 5})
question_docs = [Document(page_content=q, metadata={id_key: doc_ids[i]}) for i, questions in enumerate(hypothetical_questions) for q in questions]
retriever.vectorstore.add_documents(question_docs)
retriever.docstore.mset(list(zip(doc_ids, docs)))

The Parent Document Retriever is another retriever that strikes a balance between embedding accuracy and context retention by storing small chunks and retrieving their larger parent documents. Its implementation is as follows:

from langchain.retrievers import ParentDocumentRetriever

loaders = [TextLoader("file1.txt"), TextLoader("file2.txt")]

docs = [doc for loader in loaders for doc in loader.load()]

child_splitter = RecursiveCharacterTextSplitter(chunk_size=400)

vectorstore = Chroma(collection_name="full_documents", embedding_function=OpenAIEmbeddings())

store = InMemoryStore()
retriever = ParentDocumentRetriever(vectorstore=vectorstore, docstore=store, child_splitter=child_splitter)
retriever.add_documents(docs, ids=None)
retrieved_docs = retriever.get_relevant_documents("query")
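The mechanics behind this pattern can be sketched in plain Python: index small chunks, remember which parent each chunk came from, and return the full parent when a chunk matches. Everything here is hypothetical scaffolding; in particular, a simple substring match stands in for the embedding-based similarity search a real vector store would perform.

```python
def split_into_sentences(text):
    """Naive splitter: one chunk per sentence."""
    return [s for s in text.split(". ") if s]


# Parent documents keyed by a made-up ID.
parents = {
    "doc-a": "LangChain retrievers return documents. Vector stores hold embeddings.",
    "doc-b": "Agents choose tools. Tools let agents act on the outside world.",
}

# Index the small chunks, each one remembering its parent's ID.
child_index = [
    (chunk, parent_id)
    for parent_id, text in parents.items()
    for chunk in split_into_sentences(text)
]


def retrieve_parents(query):
    """Match against small chunks, then return the full parent documents."""
    parent_ids = []
    for chunk, parent_id in child_index:
        if query.lower() in chunk.lower() and parent_id not in parent_ids:
            parent_ids.append(parent_id)
    return [parents[pid] for pid in parent_ids]


print(retrieve_parents("embeddings"))
```

The query matches only one short chunk, yet the caller receives the whole parent document, which is the balance between precise matching and retained context described above.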

A self-querying retriever constructs structured queries from natural language inputs and applies them to its underlying VectorStore. Its implementation is shown in the following code:

from langchain.chat_models import ChatOpenAI
from langchain.chains.query_constructor.base import AttributeInfo
from langchain.retrievers.self_query.base import SelfQueryRetriever

metadata_field_info = [AttributeInfo(name="genre", description="...", type="string"), ...]
document_content_description = "Brief summary of a movie"
llm = ChatOpenAI(temperature=0)
retriever = SelfQueryRetriever.from_llm(llm, vectorstore, document_content_description, metadata_field_info)
retrieved_docs = retriever.invoke("query")

The WebResearchRetriever performs web research based on a given query:

from langchain.chat_models import ChatOpenAI
from langchain.embeddings import OpenAIEmbeddings
from langchain.retrievers.web_research import WebResearchRetriever
from langchain.utilities import GoogleSearchAPIWrapper
from langchain.vectorstores import Chroma

# Initialize components
llm = ChatOpenAI(temperature=0)
search = GoogleSearchAPIWrapper()
vectorstore = Chroma(embedding_function=OpenAIEmbeddings())

# Instantiate WebResearchRetriever
web_research_retriever = WebResearchRetriever.from_llm(vectorstore=vectorstore, llm=llm, search=search)

# Retrieve documents
docs = web_research_retriever.get_relevant_documents("query")

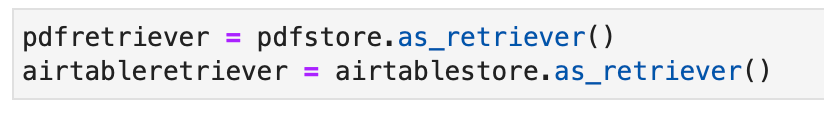

For our examples, we can also use the standard retriever that each vector store object already exposes through its as_retriever() method.

We can now query the retrievers. The output of a query will be document objects relevant to that query. These will ultimately be used to create relevant responses in later sections.

Module III : Agents

LangChain introduces a powerful concept called “Agents” that takes the idea of chains to a whole new level. Agents leverage language models to dynamically determine sequences of actions to perform, making them highly versatile and adaptive. Unlike traditional chains, where actions are hardcoded, agents employ language models as reasoning engines to decide which actions to take and in what order.

The Agent is the core component responsible for decision-making. It harnesses the power of a language model and a prompt to determine the next steps to achieve a specific objective. The inputs to an agent typically include:

- Tools: Descriptions of available tools (more on this later).

- User Input: The high-level objective or query from the user.

- Intermediate Steps: A history of (action, tool output) pairs executed so far in order to address the current user input.

The output of an agent can be the next action(s) to take (AgentActions) or the final response to send to the user (AgentFinish). An action specifies a tool and the input for that tool.

Tools

Tools are interfaces that an agent can use to interact with the world. They enable agents to perform various tasks, such as searching the web, running shell commands, or accessing external APIs. In LangChain, tools are essential for extending the capabilities of agents and enabling them to accomplish diverse tasks.

To use tools in LangChain, you can load them using the following snippet:

from langchain.agents import load_tools

tool_names = [...]
tools = load_tools(tool_names)

Some tools may require a base language model (LLM) to initialize. In such cases, you can pass an LLM as well:

from langchain.agents import load_tools

tool_names = [...]
llm = ...
tools = load_tools(tool_names, llm=llm)

This setup allows you to access a variety of tools and integrate them into your agent's workflows. The complete list of tools, with usage documentation, is available in the LangChain documentation.

Let us look at some examples of tools.

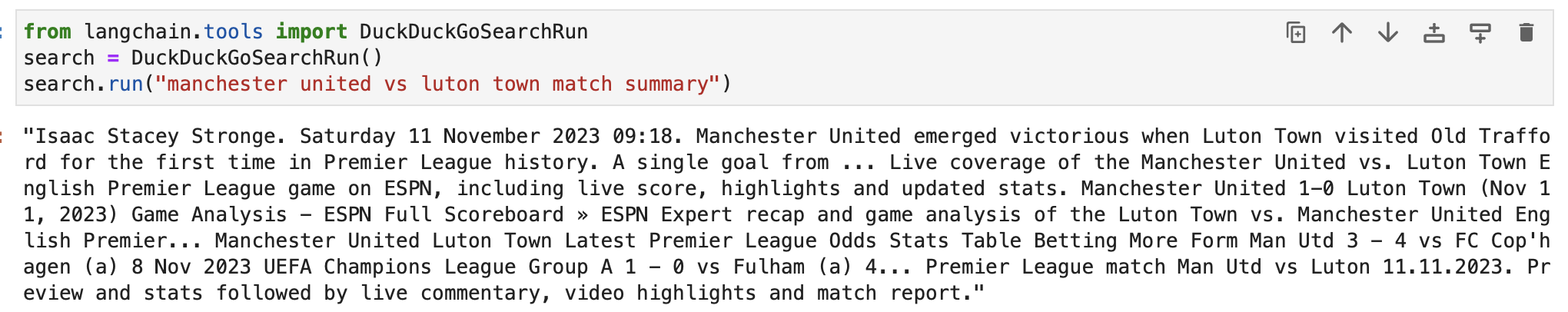

DuckDuckGo

The DuckDuckGo tool lets you perform web searches using its search engine. Here's how to use it:

from langchain.tools import DuckDuckGoSearchRun

search = DuckDuckGoSearchRun()
search.run("manchester united vs luton town match summary")

DataForSeo

The DataForSeo toolkit allows you to obtain search engine results using the DataForSeo API. To use this toolkit, you'll need to set up your API credentials. Here's how to configure the credentials:

import os

os.environ["DATAFORSEO_LOGIN"] = "<your_api_access_username>"

os.environ["DATAFORSEO_PASSWORD"] = "<your_api_access_password>"

Once your credentials are set, you can create a DataForSeoAPIWrapper tool to access the API:

from langchain.utilities.dataforseo_api_search import DataForSeoAPIWrapper

wrapper = DataForSeoAPIWrapper()
result = wrapper.run("Weather in Los Angeles")

The DataForSeoAPIWrapper tool retrieves search engine results from various sources.

You can customize the type of results and fields returned in the JSON response. For example, you can specify the result types and fields, and set a maximum count for the number of top results to return:

json_wrapper = DataForSeoAPIWrapper(

json_result_types=["organic", "knowledge_graph", "answer_box"],

json_result_fields=["type", "title", "description", "text"],

top_count=3,

)

json_result = json_wrapper.results("Bill Gates")

This example customizes the JSON response by specifying result types and fields, and by limiting the number of results.

You can also specify the location and language for your search results by passing additional parameters to the API wrapper:

customized_wrapper = DataForSeoAPIWrapper(

top_count=10,

json_result_types=["organic", "local_pack"],

json_result_fields=["title", "description", "type"],

params={"location_name": "Germany", "language_code": "en"},

)

customized_result = customized_wrapper.results("coffee near me")

By providing location and language parameters, you can tailor your search results to specific regions and languages.

You have the flexibility to choose the search engine you want to use. Simply specify the desired search engine:

customized_wrapper = DataForSeoAPIWrapper(

top_count=10,

json_result_types=["organic", "local_pack"],

json_result_fields=["title", "description", "type"],

params={"location_name": "Germany", "language_code": "en", "se_name": "bing"},

)

customized_result = customized_wrapper.results("coffee near me")

In this example, the search is customized to use Bing as the search engine.

The API wrapper also allows you to specify the type of search you want to perform. For instance, you can perform a maps search:

maps_search = DataForSeoAPIWrapper(

top_count=10,

json_result_fields=["title", "value", "address", "rating", "type"],

params={

"location_coordinate": "52.512,13.36,12z",

"language_code": "en",

"se_type": "maps",

},

)

maps_search_result = maps_search.results("coffee near me")

This customizes the search to retrieve maps-related information.

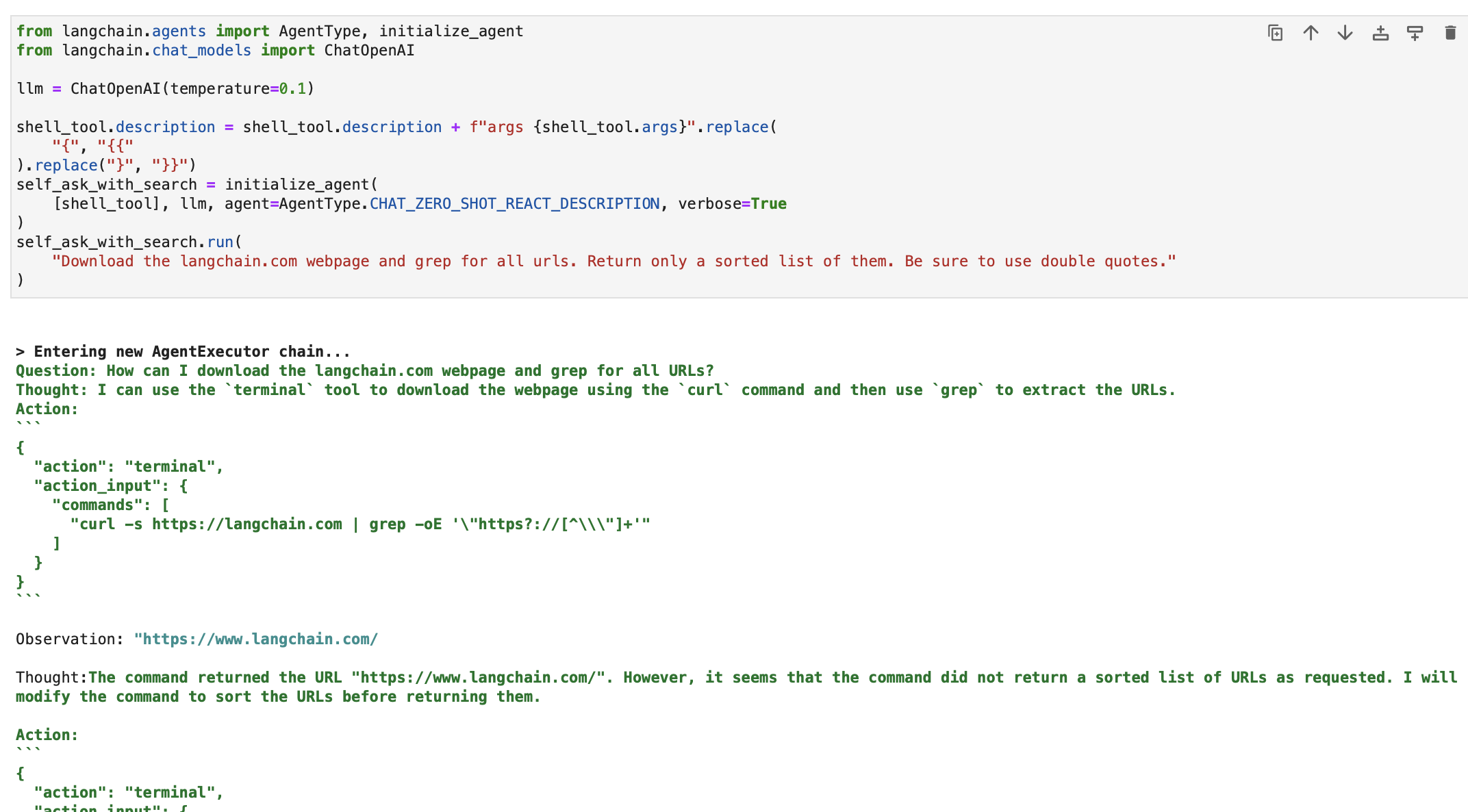

Shell (bash)

The Shell toolkit provides agents with access to the shell environment, allowing them to execute shell commands. This feature is powerful but should be used with caution, especially outside sandboxed environments. Here's how you can use the Shell tool:

from langchain.tools import ShellTool

shell_tool = ShellTool()
result = shell_tool.run({"commands": ["echo 'Hello World!'", "time"]})

In this example, the Shell tool runs two shell commands: echoing “Hello World!” and running the shell's time command.

You can provide the Shell tool to an agent to perform more complex tasks. Here's an example of an agent fetching links from a web page using the Shell tool:

from langchain.agents import AgentType, initialize_agent
from langchain.chat_models import ChatOpenAI

llm = ChatOpenAI(temperature=0.1)

# Escape braces in the args description so the prompt template does not treat them as variables
shell_tool.description = shell_tool.description + f"args {shell_tool.args}".replace(
    "{", "{{"
).replace("}", "}}")

self_ask_with_search = initialize_agent(
    [shell_tool], llm, agent=AgentType.CHAT_ZERO_SHOT_REACT_DESCRIPTION, verbose=True
)
self_ask_with_search.run(
    "Download the langchain.com webpage and grep for all urls. Return only a sorted list of them. Be sure to use double quotes."
)

In this scenario, the agent uses the Shell tool to execute a sequence of commands to fetch, filter, and sort URLs from a web page.
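The brace doubling in the snippet above is easy to overlook. A plausible reason for it, assumed here, is that the tool description ends up inside a format-style prompt template, where single braces would be read as placeholders. The sketch below shows the effect with plain str.format; the `args_schema` string and the template text are made-up examples.

```python
def escape_braces(text):
    """Double every brace so str.format treats it as a literal character."""
    return text.replace("{", "{{").replace("}", "}}")


# Made-up example of what a tool's args description might look like.
args_schema = "{'commands': {'type': 'array'}}"

# A template that mixes literal braces (escaped) with one real placeholder.
template = "Run shell commands. args " + escape_braces(args_schema) + "\nQuestion: {input}"
rendered = template.format(input="list files")
print(rendered)
```

After formatting, the doubled braces collapse back to single ones, so the rendered prompt shows the schema exactly as written while {input} is still substituted.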

The examples provided demonstrate some of the tools available in LangChain. These tools ultimately extend the capabilities of agents (explored in the next subsection) and empower them to perform various tasks efficiently. Depending on your requirements, you can choose the tools and toolkits that best suit your project's needs and integrate them into your agent's workflows.

Back to Agents

Let's move on to agents now.

The AgentExecutor is the runtime environment for an agent. It is responsible for calling the agent, executing the actions it selects, passing the action outputs back to the agent, and repeating the process until the agent finishes. In pseudocode, the AgentExecutor might look something like this:

next_action = agent.get_action(...)
while next_action != AgentFinish:
    observation = run(next_action)
    next_action = agent.get_action(..., next_action, observation)
return next_action

The AgentExecutor handles various complexities, such as dealing with cases where the agent selects a non-existent tool, handling tool errors, handling agent output that cannot be parsed into a tool invocation, and providing logging and observability at all levels.

While the AgentExecutor class is the primary agent runtime in LangChain, there are other, more experimental runtimes supported, including:

- Plan-and-execute Agent

- Baby AGI

- Auto GPT

To gain a better understanding of the agent framework, let's build a basic agent from scratch, and then move on to explore pre-built agents.

Before we dive into building the agent, it's essential to revisit some key terminology and schema:

- AgentAction: This is a data class representing the action an agent should take. It consists of a tool property (the name of the tool to invoke) and a tool_input property (the input for that tool).

- AgentFinish: This data class indicates that the agent has finished its task and should return a response to the user. It typically includes a dictionary of return values, often with a key “output” containing the response text.

- Intermediate Steps: These are the records of previous agent actions and corresponding outputs. They are crucial for passing context to future iterations of the agent.
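The three terms above fit together as shown in this small, self-contained sketch. The two data classes are simplified stand-ins that only mirror the fields described in the list; they are not LangChain's own classes. The `stub_agent` function is a hard-coded substitute for an LLM, and `count_letters` is a hypothetical tool.

```python
from dataclasses import dataclass


@dataclass
class AgentAction:
    tool: str        # name of the tool to invoke
    tool_input: str  # input for that tool


@dataclass
class AgentFinish:
    return_values: dict  # usually holds an "output" key


def count_letters(word):
    return len(word)


TOOLS = {"count_letters": count_letters}


def stub_agent(user_input, intermediate_steps):
    """Stand-in for an LLM: call the tool once, then finish."""
    if not intermediate_steps:
        return AgentAction(tool="count_letters", tool_input=user_input)
    _, observation = intermediate_steps[-1]
    return AgentFinish(return_values={"output": f"The word has {observation} letters."})


def run_agent(user_input):
    intermediate_steps = []  # list of (action, observation) pairs
    while True:
        step = stub_agent(user_input, intermediate_steps)
        if isinstance(step, AgentFinish):
            return step.return_values["output"]
        observation = TOOLS[step.tool](step.tool_input)
        intermediate_steps.append((step, observation))


print(run_agent("educa"))
```

The loop keeps feeding the growing list of intermediate steps back to the agent, which is how a real agent learns what its earlier tool calls returned.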

In our example, we'll use OpenAI Function Calling to create our agent. This approach is reliable for agent creation. We'll start by creating a simple tool that calculates the length of a word. This tool is useful because language models can sometimes make mistakes due to tokenization when counting word lengths.

First, let's load the language model we'll use to control the agent:

from langchain.chat_models import ChatOpenAI

llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)

Let's test the model with a word length calculation:

llm.invoke("how many letters in the word educa?")

The response should indicate the number of letters in the word “educa.”

Next, we'll define a simple Python function to calculate the length of a word:

from langchain.agents import tool

@tool
def get_word_length(word: str) -> int:
    """Returns the length of a word."""
    return len(word)

# The list of tools the agent can use
tools = [get_word_length]

We've created a tool named get_word_length that takes a word as input and returns its length.

Now, let's create the prompt for the agent. The prompt instructs the agent on how to reason and format the output. In our case, we're using OpenAI Function Calling, which requires minimal instructions. We'll define the prompt with placeholders for user input and agent scratchpad:

from langchain.prompts import ChatPromptTemplate, MessagesPlaceholder

prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a very powerful assistant but not great at calculating word lengths.",
        ),
        ("user", "{input}"),
        MessagesPlaceholder(variable_name="agent_scratchpad"),
    ]
)

Now, how does the agent know which tools it can use? We're relying on OpenAI function calling language models, which require functions to be passed separately. To provide our tools to the agent, we'll format them as OpenAI function calls:

from langchain.tools.render import format_tool_to_openai_function

llm_with_tools = llm.bind(functions=[format_tool_to_openai_function(t) for t in tools])

Now, we can create the agent by defining input mappings and connecting the components. This uses the LangChain Expression Language (LCEL), which we will discuss in detail later.

from langchain.agents.format_scratchpad import format_to_openai_function_messages
from langchain.agents.output_parsers import OpenAIFunctionsAgentOutputParser

agent = (
    {
        "input": lambda x: x["input"],
        "agent_scratchpad": lambda x: format_to_openai_function_messages(
            x["intermediate_steps"]
        ),
    }
    | prompt
    | llm_with_tools
    | OpenAIFunctionsAgentOutputParser()
)

We've created our agent, which understands user input, uses available tools, and formats output. Now, let's interact with it:

agent.invoke({"input": "how many letters in the word educa?", "intermediate_steps": []})

The agent should respond with an AgentAction, indicating the next action to take.

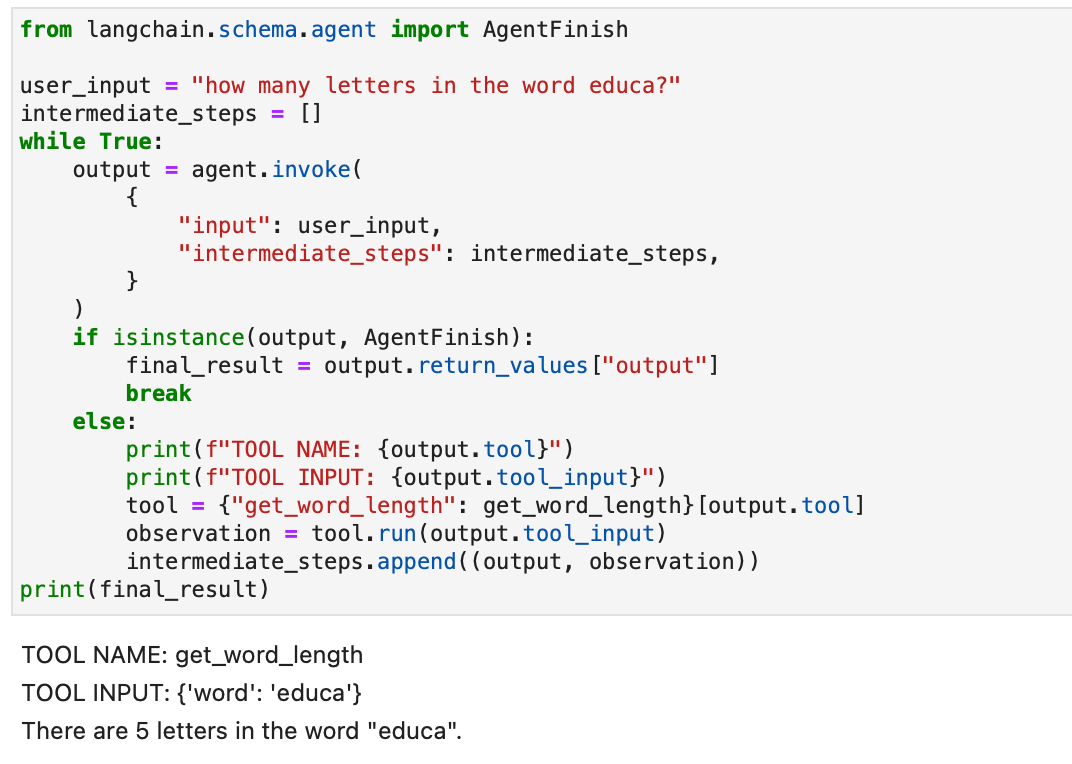

We've created the agent, but now we need to write a runtime for it. The simplest runtime is one that continuously calls the agent, executes actions, and repeats until the agent finishes. Here's an example:

from langchain.schema.agent import AgentFinish

user_input = "how many letters in the word educa?"
intermediate_steps = []

while True:
    output = agent.invoke(
        {
            "input": user_input,
            "intermediate_steps": intermediate_steps,
        }
    )
    if isinstance(output, AgentFinish):
        final_result = output.return_values["output"]
        break
    else:
        print(f"TOOL NAME: {output.tool}")
        print(f"TOOL INPUT: {output.tool_input}")
        # Look up the tool the agent asked for and run it
        selected_tool = {"get_word_length": get_word_length}[output.tool]
        observation = selected_tool.run(output.tool_input)
        intermediate_steps.append((output, observation))

print(final_result)

In this loop, we repeatedly call the agent, execute actions, and update the intermediate steps until the agent finishes. We also handle tool interactions within the loop.

To simplify this process, LangChain provides the AgentExecutor class, which encapsulates agent execution and offers error handling, early stopping, tracing, and other improvements. Let's use AgentExecutor to interact with the agent:

from langchain.agents import AgentExecutor

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)
agent_executor.invoke({"input": "how many letters in the word educa?"})

AgentExecutor simplifies the execution process and provides a convenient way to interact with the agent.

Memory will be discussed in detail later.

The agent we've created so far is stateless, meaning it doesn't remember previous interactions. To enable follow-up questions and conversations, we need to add memory to the agent. This involves two steps:

- Add a memory variable in the prompt to store chat history.

- Keep track of the chat history across interactions.

Let's start by adding a memory placeholder in the prompt:

from langchain.prompts import MessagesPlaceholder

MEMORY_KEY = "chat_history"

prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a very powerful assistant but not great at calculating word lengths.",
        ),
        MessagesPlaceholder(variable_name=MEMORY_KEY),
        ("user", "{input}"),
        MessagesPlaceholder(variable_name="agent_scratchpad"),
    ]
)

Now, create a list to track the chat history:

from langchain.schema.messages import HumanMessage, AIMessage

chat_history = []

In the agent creation step, we'll include the memory as well:

agent = (
    {
        "input": lambda x: x["input"],
        "agent_scratchpad": lambda x: format_to_openai_function_messages(
            x["intermediate_steps"]
        ),
        "chat_history": lambda x: x["chat_history"],
    }
    | prompt
    | llm_with_tools
    | OpenAIFunctionsAgentOutputParser()
)

# Rebuild the executor so it wraps the new, memory-aware agent
agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

Now, when running the agent, make sure to update the chat history:

input1 = "how many letters in the word educa?"
result = agent_executor.invoke({"input": input1, "chat_history": chat_history})
chat_history.extend([
    HumanMessage(content=input1),
    AIMessage(content=result["output"]),
])
agent_executor.invoke({"input": "is that a real word?", "chat_history": chat_history})

This enables the agent to maintain a conversation history and answer follow-up questions based on previous interactions.

Congratulations! You've successfully created and executed your first end-to-end agent in LangChain. To delve deeper into LangChain's capabilities, you can explore:

- The different agent types supported.

- Pre-built agents.

- How to work with tools and tool integrations.

Agent Types

LangChain offers various agent types, each suited to specific use cases. Here are some of the available agents:

- Zero-shot ReAct: This agent uses the ReAct framework to choose tools based solely on their descriptions. It requires a description for each tool and is highly versatile.

- Structured input ReAct: This agent handles multi-input tools and is suitable for complex tasks like navigating a web browser. It uses a tool's argument schema for structured input.

- OpenAI Functions: Specifically designed for models fine-tuned for function calling, this agent is compatible with models like gpt-3.5-turbo-0613 and gpt-4-0613. We used this to create our first agent above.

- Conversational: Designed for conversational settings, this agent uses ReAct for tool selection and uses memory to remember previous interactions.

- Self-ask with search: This agent relies on a single tool, “Intermediate Answer,” which looks up factual answers to questions. It's equivalent to the original self-ask with search paper.

- ReAct document store: This agent interacts with a document store using the ReAct framework. It requires “Search” and “Lookup” tools and is similar to the original ReAct paper's Wikipedia example.

Explore these agent types to find the one that best suits your needs in LangChain. These agents let you bind a set of tools to them to handle actions and generate responses. To learn more about building your own agent with tools, see the LangChain documentation.

Prebuilt Brokers

Let's continue our exploration of agents, focusing on the prebuilt agents available in LangChain.

Gmail

LangChain offers a Gmail toolkit that allows you to connect your LangChain email to the Gmail API. To get started, you'll need to set up your credentials, which are explained in the Gmail API documentation. Once you have downloaded the credentials.json file, you can proceed with using the Gmail API. Additionally, you'll need to install some required libraries using the following commands:

pip install --upgrade google-api-python-client > /dev/null
pip install --upgrade google-auth-oauthlib > /dev/null
pip install --upgrade google-auth-httplib2 > /dev/null
pip install beautifulsoup4 > /dev/null # Optional for parsing HTML messages

You can create the Gmail toolkit as follows:

from langchain.agents.agent_toolkits import GmailToolkit

toolkit = GmailToolkit()

You can also customize authentication as per your needs. Behind the scenes, a googleapi resource is created using the following methods:

from langchain.tools.gmail.utils import build_resource_service, get_gmail_credentials

credentials = get_gmail_credentials(

token_file="token.json",

scopes=["https://mail.google.com/"],

client_secrets_file="credentials.json",

)

api_resource = build_resource_service(credentials=credentials)

toolkit = GmailToolkit(api_resource=api_resource)

The toolkit offers various tools that can be used within an agent, including:

- GmailCreateDraft: Create a draft email with specified message fields.

- GmailSendMessage: Send email messages.

- GmailSearch: Search for email messages or threads.

- GmailGetMessage: Fetch an email by message ID.

- GmailGetThread: Fetch an email thread by thread ID.

To use these tools within an agent, you can initialize the agent as follows:

from langchain.llms import OpenAI
from langchain.agents import initialize_agent, AgentType

llm = OpenAI(temperature=0)
agent = initialize_agent(
    tools=toolkit.get_tools(),
    llm=llm,
    agent=AgentType.STRUCTURED_CHAT_ZERO_SHOT_REACT_DESCRIPTION,
)

Here are a couple of examples of how these tools can be used:

- Create a Gmail draft for editing:

agent.run(
    "Create a gmail draft for me to edit of a letter from the perspective of a sentient parrot "
    "who is looking to collaborate on some research with her estranged friend, a cat. "
    "Under no circumstances may you send the message, however."
)

- Search for the latest email in your drafts:

agent.run("Could you search in my drafts for the latest email?")

These examples demonstrate the capabilities of LangChain's Gmail toolkit within an agent, enabling you to interact with Gmail programmatically.

SQL Database Agent

This section provides an overview of an agent designed to interact with SQL databases, using the Chinook database as the example. This agent can answer general questions about a database and recover from errors. Please note that it is still in active development, and not all answers may be correct. Be cautious when running it on sensitive data, as it may perform DML statements on your database.

To use this agent, you can initialize it as follows:

from langchain.agents import create_sql_agent
from langchain.agents.agent_toolkits import SQLDatabaseToolkit
from langchain.sql_database import SQLDatabase
from langchain.llms.openai import OpenAI
from langchain.agents import AgentExecutor
from langchain.agents.agent_types import AgentType
from langchain.chat_models import ChatOpenAI

db = SQLDatabase.from_uri("sqlite:///../../../../../notebooks/Chinook.db")

toolkit = SQLDatabaseToolkit(db=db, llm=OpenAI(temperature=0))

agent_executor = create_sql_agent(

llm=OpenAI(temperature=0),

toolkit=toolkit,

verbose=True,

agent_type=AgentType.ZERO_SHOT_REACT_DESCRIPTION,

)

This agent can be initialized using the ZERO_SHOT_REACT_DESCRIPTION agent type, as shown here. It’s designed to answer questions and provide descriptions. Alternatively, you can initialize the agent using the OPENAI_FUNCTIONS agent type with OpenAI’s GPT-3.5-turbo model, which we used earlier.

Disclaimer

- The query chain may generate insert/update/delete queries. Be cautious, and use a custom prompt or create a SQL user without write permissions if needed.

- Be aware that running certain queries, such as “run the biggest query possible,” may overload your SQL database, especially if it contains millions of rows.

- Data warehouse-oriented databases often support user-level quotas to limit resource usage.

You can ask the agent to describe a table, such as the “playlisttrack” table. Here's an example of how to do it:

agent_executor.run("Describe the playlisttrack table")

The agent will provide information about the table’s schema and sample rows.

If you mistakenly ask about a table that doesn't exist, the agent can recover and provide information about the closest matching table. For example:

agent_executor.run("Describe the playlistsong table")

The agent will find the closest matching table and provide information about it.

You can also ask the agent to run queries on the database. For instance:

agent_executor.run("List the total sales per country. Which country's customers spent the most?")

The agent will execute the query and provide the result, such as the country with the highest total sales.

To get the total number of tracks in each playlist, you can use the following query:

agent_executor.run("Show the total number of tracks in each playlist. The Playlist name should be included in the result.")

The agent will return the playlist names along with the corresponding total track counts.
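For a request like this, the agent generally needs to write a join with a GROUP BY. The sketch below runs one such query against a tiny in-memory SQLite database whose two tables mirror Chinook's Playlist and PlaylistTrack tables. The sample rows are invented for illustration, and the SQL the agent actually generates may differ.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Playlist (PlaylistId INTEGER PRIMARY KEY, Name TEXT);
    CREATE TABLE PlaylistTrack (PlaylistId INTEGER, TrackId INTEGER);
    INSERT INTO Playlist VALUES (1, 'Music'), (2, 'Movies');
    INSERT INTO PlaylistTrack VALUES (1, 10), (1, 11), (1, 12), (2, 20);
""")

# Count tracks per playlist and include the playlist name in the result.
query = """
    SELECT p.Name, COUNT(pt.TrackId) AS TotalTracks
    FROM Playlist AS p
    JOIN PlaylistTrack AS pt ON pt.PlaylistId = p.PlaylistId
    GROUP BY p.PlaylistId, p.Name
    ORDER BY TotalTracks DESC
"""
track_counts = conn.execute(query).fetchall()
conn.close()

print(track_counts)
```

Running a query like this by hand is a quick way to check the agent's answer when the numbers matter.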

In cases where the agent encounters errors, it can recover and provide accurate responses. For instance:

agent_executor.run("Who are the top 3 best selling artists?")

Even after encountering an initial error, the agent will adjust and provide the correct answer, which, in this case, is the top 3 best-selling artists.

Pandas DataFrame Agent

This section introduces an agent designed to interact with Pandas DataFrames for question-answering purposes. Please note that this agent uses the Python agent under the hood to execute Python code generated by a language model (LLM). Exercise caution when using this agent to prevent potential harm from malicious Python code generated by the LLM.

You can initialize the Pandas DataFrame agent as follows:

from langchain_experimental.agents.agent_toolkits import create_pandas_dataframe_agent
from langchain.chat_models import ChatOpenAI
from langchain.agents.agent_types import AgentType
from langchain.llms import OpenAI
import pandas as pd

df = pd.read_csv("titanic.csv")

# Using the ZERO_SHOT_REACT_DESCRIPTION agent type
agent = create_pandas_dataframe_agent(OpenAI(temperature=0), df, verbose=True)

# Alternatively, using the OPENAI_FUNCTIONS agent type
# agent = create_pandas_dataframe_agent(
#     ChatOpenAI(temperature=0, model="gpt-3.5-turbo-0613"),
#     df,
#     verbose=True,
#     agent_type=AgentType.OPENAI_FUNCTIONS,
# )

You can ask the agent to count the number of rows in the DataFrame:

agent.run("how many rows are there?")

The agent will execute the code df.shape[0] and provide the answer, such as “There are 891 rows in the dataframe.”

You can also ask the agent to filter rows based on specific criteria, such as finding the number of people with more than 3 siblings:

agent.run("how many people have more than 3 siblings")

The agent will execute the code df[df['SibSp'] > 3].shape[0] and provide the answer, such as “30 people have more than 3 siblings.”

If you want to calculate the square root of the average age, you can ask the agent:

agent.run("whats the square root of the average age?")

The agent will calculate the average age using df['Age'].mean() and then calculate the square root using math.sqrt(). It will provide the answer, such as “The square root of the average age is 5.449689683556195.”

Next, create a copy of the DataFrame in which missing age values are filled with the mean age:

df1 = df.copy()
df1["Age"] = df1["Age"].fillna(df1["Age"].mean())

Then, you can initialize the agent with both DataFrames and ask it a question:

agent = create_pandas_dataframe_agent(OpenAI(temperature=0), [df, df1], verbose=True)
agent.run("how many rows in the age column are different?")

The agent will compare the age columns in both DataFrames and provide the answer, such as “177 rows in the age column are different.”
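The pandas operations the agent runs in these examples can also be executed directly, which is useful for checking its answers. The sketch below uses a small made-up DataFrame rather than titanic.csv, so the numbers are not those quoted above; only the `SibSp` and `Age` column names are taken from the examples.

```python
import math

import pandas as pd

# Made-up sample data (assumption); None becomes NaN in the float column.
df = pd.DataFrame({
    "SibSp": [0, 4, 1, 5, 0],
    "Age": [22.0, None, 30.0, 38.0, None],
})

row_count = df.shape[0]                       # number of rows
many_siblings = df[df["SibSp"] > 3].shape[0]  # rows with more than 3 siblings
sqrt_mean_age = math.sqrt(df["Age"].mean())   # mean() skips missing values

# Fill missing ages with the mean, then count rows where the columns differ.
df1 = df.copy()
df1["Age"] = df1["Age"].fillna(df1["Age"].mean())
changed_rows = int((df["Age"] != df1["Age"]).sum())  # NaN != value is True

print(row_count, many_siblings, sqrt_mean_age, changed_rows)
```

The last comparison works because a missing value never compares equal to a number, so every filled row counts as different.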

Jira Toolkit

This section explains how to use the Jira toolkit, which allows agents to interact with a Jira instance. You can perform various actions, such as searching for issues and creating issues, using this toolkit. It uses the atlassian-python-api library. To use this toolkit, you need to set environment variables for your Jira instance, including JIRA_API_TOKEN, JIRA_USERNAME, and JIRA_INSTANCE_URL. Additionally, you may need to set your OpenAI API key as an environment variable.
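Since the toolkit depends on several environment variables, a small pre-flight check can make a missing setting easier to spot. The sketch below uses only the standard library; `missing_jira_vars` is a hypothetical helper written for illustration, not part of LangChain or atlassian-python-api.

```python
import os

REQUIRED_JIRA_VARS = ("JIRA_API_TOKEN", "JIRA_USERNAME", "JIRA_INSTANCE_URL")


def missing_jira_vars(env=os.environ):
    """Return the required variable names that are unset or empty in `env`."""
    return [name for name in REQUIRED_JIRA_VARS if not env.get(name)]


# Example with an explicit mapping instead of the real environment.
example_env = {"JIRA_API_TOKEN": "abc", "JIRA_USERNAME": ""}
print(missing_jira_vars(example_env))
```

Calling `missing_jira_vars()` with no argument checks the real process environment.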

To get started, install the atlassian-python-api library and set the required environment variables:

%pip install atlassian-python-api

import os
from langchain.agents import AgentType
from langchain.agents import initialize_agent
from langchain.agents.agent_toolkits.jira.toolkit import JiraToolkit
from langchain.llms import OpenAI
from langchain.utilities.jira import JiraAPIWrapper

# Placeholder values; replace them with your own credentials.
os.environ["JIRA_API_TOKEN"] = "abc"
os.environ["JIRA_USERNAME"] = "123"
os.environ["JIRA_INSTANCE_URL"] = "https://jira.atlassian.com"
os.environ["OPENAI_API_KEY"] = "xyz"

llm = OpenAI(temperature=0)
jira = JiraAPIWrapper()
toolkit = JiraToolkit.from_jira_api_wrapper(jira)
agent = initialize_agent(
    toolkit.get_tools(), llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True
)

You can instruct the agent to create a new issue in a specific project with a summary and description:

agent.run("make a new issue in project PW to remind me to make more fried rice")

The agent will execute the necessary actions to create the issue and provide a response, such as “A new issue has been created in project PW with the summary ‘Make more fried rice’ and description ‘Reminder to make more fried rice’.”

This allows you to interact with your Jira instance using natural language instructions and the Jira toolkit.


Module IV : Chains

LangChain is a tool designed for using Large Language Models (LLMs) in complex applications. It provides frameworks for creating chains of components, including LLMs and other types of components. There are two primary frameworks:

- The LangChain Expression Language (LCEL)

- Legacy Chain interface

The LangChain Expression Language (LCEL) is a syntax that allows for intuitive composition of chains. It supports advanced features like streaming, asynchronous calls, batching, parallelization, retries, fallbacks, and tracing. For example, you can compose a prompt, model, and output parser in LCEL as shown in the following code:

from langchain.chat_models import ChatOpenAI
from langchain.prompts import ChatPromptTemplate
from langchain.schema import StrOutputParser

model = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)
prompt = ChatPromptTemplate.from_messages([
    ("system", "You're a very knowledgeable historian who provides accurate and eloquent answers to historical questions."),
    ("human", "{question}"),
])
runnable = prompt | model | StrOutputParser()

# The input key must match the {question} placeholder in the prompt.
for chunk in runnable.stream({"question": "What are the seven wonders of the world"}):
    print(chunk, end="", flush=True)
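To make the `|` syntax less mysterious, here is a toy sketch of how pipe-style composition can be built in plain Python by overloading `__or__`. The `Step` class and the three stand-in components are hypothetical and greatly simplified. This is not LangChain's implementation; it only illustrates the idea of feeding each component's output into the next.

```python
class Step:
    """Toy runnable: wraps a function and supports `|` composition."""

    def __init__(self, func):
        self.func = func

    def __or__(self, other):
        # `a | b` builds a new Step that feeds a's output into b.
        return Step(lambda value: other.invoke(self.invoke(value)))

    def invoke(self, value):
        return self.func(value)

    def batch(self, values):
        # Run the same pipeline over several inputs.
        return [self.invoke(value) for value in values]


# Stand-ins for a prompt template, a model, and an output parser.
prompt = Step(lambda inputs: f"Question: {inputs['question']}")
model = Step(lambda text: {"content": text.upper()})
parser = Step(lambda message: message["content"])

chain = prompt | model | parser
print(chain.invoke({"question": "What is LCEL?"}))
```

Because every composed object exposes the same methods, features such as batching can be defined once and then work for any pipeline, which is the main design benefit of a uniform interface.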

Alternatively, LLMChain is a legacy option, similar to LCEL, for composing components. An LLMChain example is as follows:

from langchain.chains import LLMChain

chain = LLMChain(llm=model, prompt=prompt, output_parser=StrOutputParser())
chain.run(question="What are the seven wonders of the world")

Chains in LangChain can also be stateful by incorporating a Memory object. This allows for data persistence across calls, as shown in this example:

from langchain.chains import ConversationChain
from langchain.memory import ConversationBufferMemory

# `chat` is a chat model instance, for example ChatOpenAI(temperature=0).
conversation = ConversationChain(llm=chat, memory=ConversationBufferMemory())
conversation.run("Answer briefly. What are the first 3 colors of a rainbow?")
conversation.run("And the next 4?")
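The follow-up question only makes sense because earlier turns are replayed to the model on each call. The toy sketch below illustrates that idea with a plain Python buffer; `BufferMemory` and `build_prompt` are hypothetical names, and the real memory classes are more elaborate than this.

```python
class BufferMemory:
    """Toy conversation buffer: stores every turn and replays it as context."""

    def __init__(self):
        self.turns = []

    def save(self, human, ai):
        self.turns.append((human, ai))

    def as_context(self):
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)


def build_prompt(memory, user_input):
    """Prepend the stored history so a follow-up can refer back to earlier turns."""
    history = memory.as_context()
    prefix = f"{history}\n" if history else ""
    return f"{prefix}Human: {user_input}\nAI:"


memory = BufferMemory()
memory.save("What are the first 3 colors of a rainbow?", "Red, orange, yellow.")
print(build_prompt(memory, "And the next 4?"))
```

A buffer like this grows with every turn, so long conversations eventually need trimming or summarizing to stay within the model's context window.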

LangChain also supports integration with OpenAI’s function-calling APIs, which is useful for obtaining structured outputs and executing functions within a chain. For getting structured outputs, you can specify them using Pydantic classes or JsonSchema, as illustrated below:

from typing import Optional

from langchain.pydantic_v1 import BaseModel, Field
from langchain.chains.openai_functions import create_structured_output_runnable
from langchain.chat_models import ChatOpenAI
from langchain.prompts import ChatPromptTemplate

class Person(BaseModel):
    name: str = Field(..., description="The person's name")
    age: int = Field(..., description="The person's age")
    fav_food: Optional[str] = Field(None, description="The person's favorite food")

llm = ChatOpenAI(model="gpt-4", temperature=0)
prompt = ChatPromptTemplate.from_messages([
    # Prompt messages here
])
runnable = create_structured_output_runnable(Person, llm, prompt)
runnable.invoke({"input": "Sally is 13"})

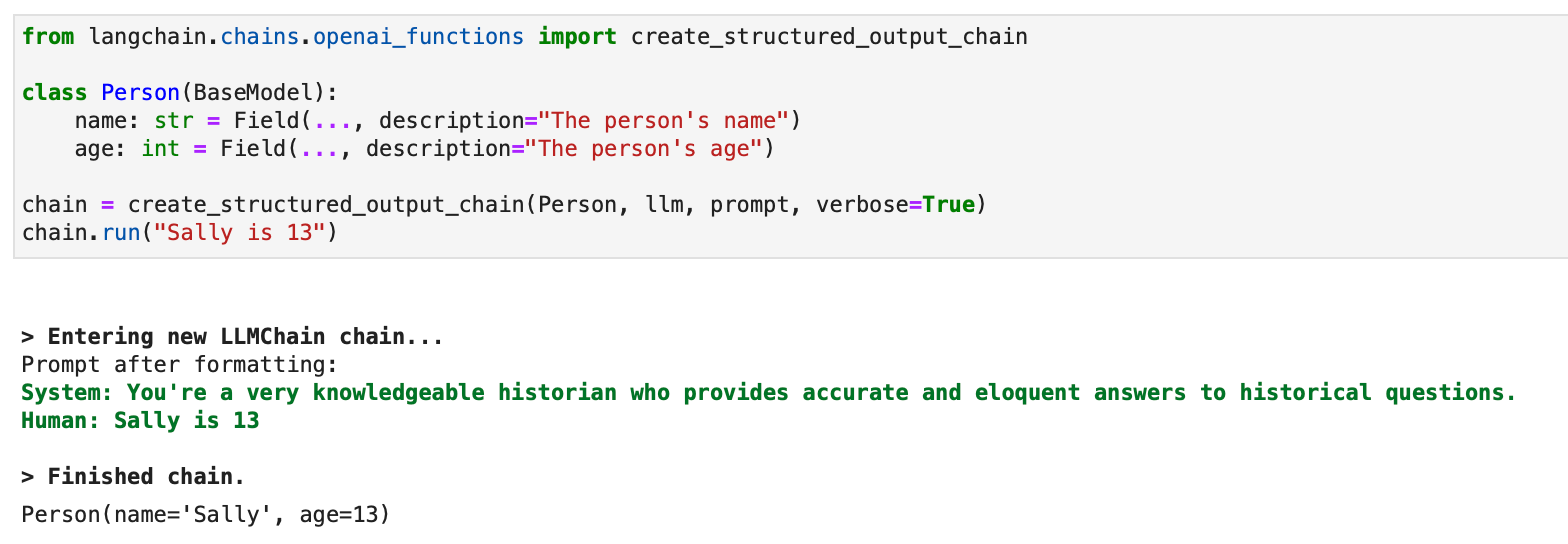
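The text mentions JsonSchema as an alternative to Pydantic classes. As a sketch, the structure defined above could be written as a JSON Schema dictionary like the one below. The schema is hand-written for illustration and `missing_required` is a hypothetical helper. Whether a particular LangChain function accepts such a dictionary in place of the class should be checked against the version in use.

```python
# JSON Schema description of the same structure (a hand-written sketch).
person_schema = {
    "title": "Person",
    "type": "object",
    "properties": {
        "name": {"type": "string", "description": "The person's name"},
        "age": {"type": "integer", "description": "The person's age"},
        "fav_food": {"type": "string", "description": "The person's favorite food"},
    },
    # fav_food is optional, so it is left out of the required list.
    "required": ["name", "age"],
}


def missing_required(schema, candidate):
    """Return required keys absent from `candidate` (a minimal check, not full validation)."""
    return [key for key in schema["required"] if key not in candidate]


print(missing_required(person_schema, {"name": "Sally", "age": 13}))
print(missing_required(person_schema, {"name": "Sally"}))
```

A check like this is handy for spotting incomplete model output before passing it further down a pipeline.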

For structured outputs, a legacy approach using LLMChain is also available:

from langchain.chains.openai_functions import create_structured_output_chain

class Person(BaseModel):
    name: str = Field(..., description="The person's name")
    age: int = Field(..., description="The person's age")

chain = create_structured_output_chain(Person, llm, prompt, verbose=True)
chain.run("Sally is 13")

LangChain leverages OpenAI functions to create various specific chains for different purposes. These include chains for extraction, tagging, OpenAPI, and QA with citations.