Picture by Freepik

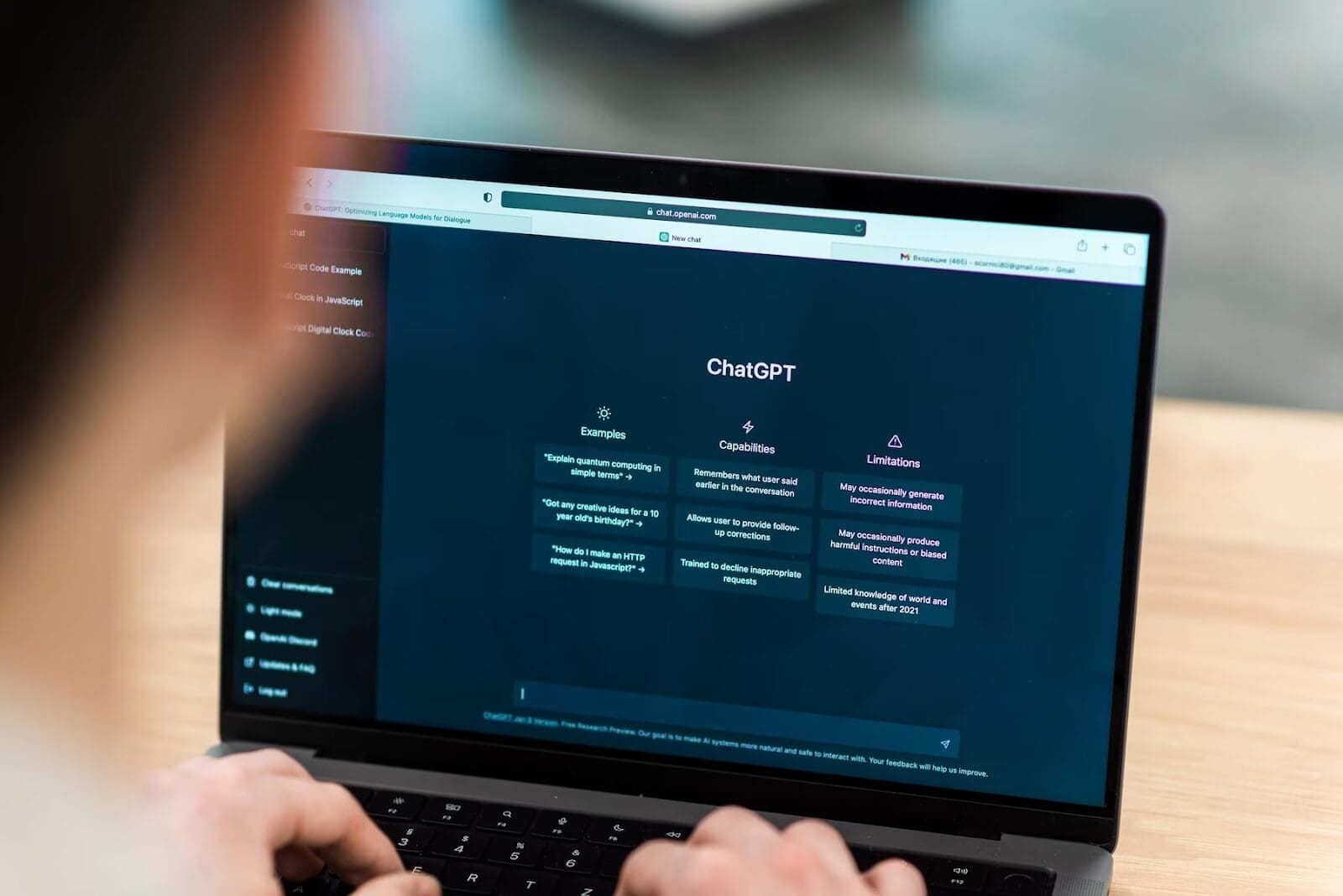

OpenAI, ChatGPT, the GPT series, and Large Language Models (LLMs) in general: if you are even remotely associated with the AI profession or are a technologist, chances are high that you hear these words in almost all your business conversations these days.

And the hype is real. We can't call it a bubble anymore. After all, this time, the hype is living up to its promises.

Who would have thought that machines could understand and respond with human-like intelligence, and do almost all the tasks previously considered a human forte, including creative work such as composing music, writing poetry, and even writing programs?

The ubiquitous proliferation of LLMs in our lives has made us all curious about what lies beneath this powerful technology.

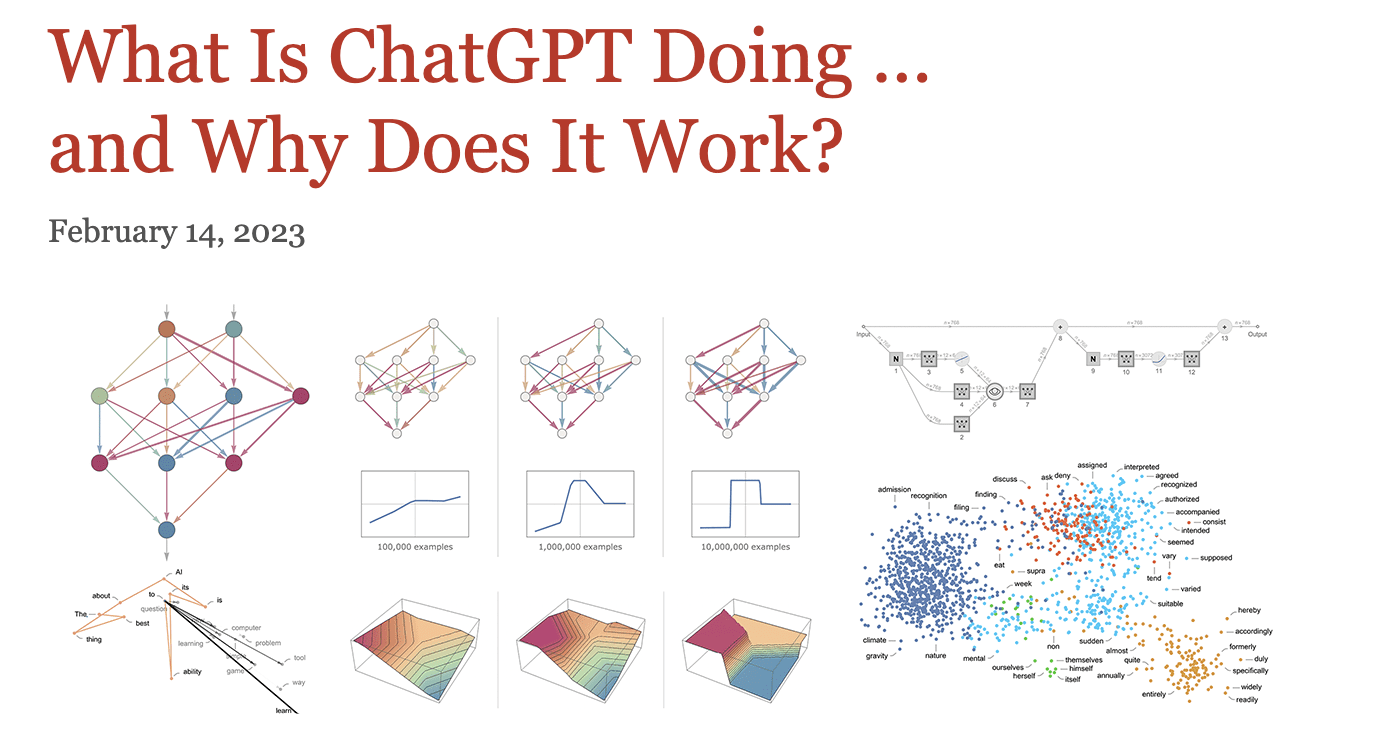

So, if you are holding yourself back because of the gory-looking details of algorithms and the complexities of the AI domain, I highly recommend this resource to learn all about "What Is ChatGPT Doing … and Why Does It Work?"

Picture from Stephen Wolfram Writings

Yes, that is the title of the article by Wolfram.

Why am I recommending this? Because it is essential to know the absolute basics of machine learning, and how deep neural networks relate to human brains, before learning about Transformers, LLMs, or even Generative AI.

It reads like a mini-book that is a piece of literature in its own right, so take your time working through its length.

In this article, I will share how to start reading it so that the concepts are easier to grasp.

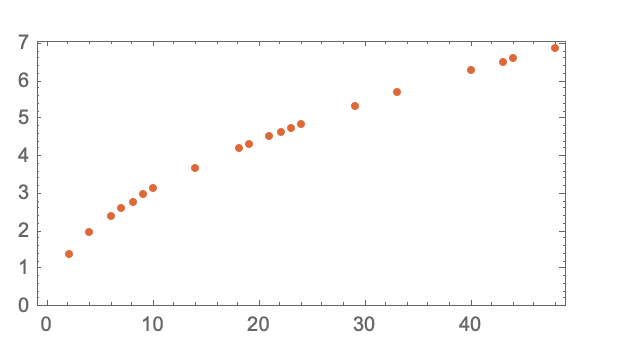

Its key highlight is the focus on the 'model' part of "Large Language Models", illustrated by an example: the time it takes a ball dropped from each floor of a tower to reach the ground.

Picture from Stephen Wolfram Writings

There are two ways to achieve this: repeat the experiment from every floor, or build a model that can compute the answer.

In this example, there is an underlying mathematical formula that makes the calculation easy, but how would one estimate such a phenomenon using a 'model'?

A first guess would be to fit a straight line to estimate the variable of interest, in this case, time.
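To make the straight-line 'model' concrete, here is a minimal sketch that fits a line to fall times by ordinary least squares. The "measurements" are generated from the free-fall formula t = sqrt(2h/g) (ignoring air resistance), and the 3-meter floor height and the choice of eight floors are illustrative assumptions, not data from Wolfram's post.

```python
import math

G = 9.81              # gravitational acceleration, m/s^2
FLOOR_HEIGHT_M = 3.0  # hypothetical height of one floor

def fall_time(height_m: float) -> float:
    """Exact free-fall time from rest, ignoring air resistance: t = sqrt(2h / g)."""
    return math.sqrt(2 * height_m / G)

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b

# "Measure" the fall time from floors 1 through 8.
floors = list(range(1, 9))
times = [fall_time(f * FLOOR_HEIGHT_M) for f in floors]

# The model: a straight line relating floor number to fall time.
intercept, slope = fit_line(floors, times)
for f, t in zip(floors, times):
    predicted = intercept + slope * f
    print(f"floor {f}: actual {t:.3f}s, line predicts {predicted:.3f}s")
```

The line cannot match every floor exactly, because the true relationship is a square root rather than a straight line. Choosing a different functional form is exactly the kind of modeling decision the post is getting at.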

A closer reading of this section explains that there is never a "model-less model", which takes you seamlessly into various deep learning concepts.

You will learn that a model is a complex function that takes certain variables as input and produces an output, say, the predicted digit in digit recognition tasks.
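As a rough illustration of "a model is a function", here is a sketch of a tiny neural network with one hidden layer: numbers go in, a number comes out. The ReLU activation, the layer sizes, and the hand-picked weights are all hypothetical choices for illustration; in practice training chooses the weights.

```python
def relu(x: float) -> float:
    """Rectified linear unit: keeps positive values and zeroes out negative ones."""
    return max(0.0, x)

def tiny_network(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    """One hidden layer: output = sum_j v_j * relu(w_j . inputs + b_j) + c."""
    hidden = [
        relu(sum(w * x for w, x in zip(weights, inputs)) + bias)
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]
    return sum(v * h for v, h in zip(output_weights, hidden)) + output_bias

# Hypothetical, hand-picked weights; training would normally choose these.
print(tiny_network(
    inputs=[1.0, 2.0],
    hidden_weights=[[0.5, -1.0], [1.0, 1.0]],
    hidden_biases=[0.0, -1.0],
    output_weights=[2.0, 0.5],
    output_bias=0.1,
))
```

Stacking more layers of this same "weighted sum, then nonlinearity" pattern is what turns it into the complex function the post describes.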

The article moves from digit recognition to a typical cat vs. dog classifier to lucidly explain which features each layer picks up, starting with the outline of the cat. Notably, the first few layers of a neural network pick out certain aspects of images, like the edges of objects.
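To get a feel for what "picking out edges" means, here is a sketch of a single convolution filter applied to a tiny grayscale image. The Sobel-style kernel below is hand-crafted, not learned; the commonly reported observation is that first-layer filters in trained image networks often end up resembling edge detectors like it. The image values are made up for illustration.

```python
def convolve2d(image, kernel):
    """'Valid' 2D cross-correlation (what deep-learning libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# A 5x6 grayscale "image": dark on the left, bright on the right.
image = [[0, 0, 0, 9, 9, 9] for _ in range(5)]

# Hand-crafted vertical-edge detector (Sobel-style).
vertical_edge = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

response = convolve2d(image, vertical_edge)
for row in response:
    print(row)
```

The response is zero over the flat regions and large only in the columns where dark meets bright, which is the "edge" signal later layers can build on.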

Picture by Freepik

Key Terminology

Besides explaining the role of multiple layers, the post also covers several facets of deep learning algorithms, such as:

Architecture Of Neural Networks

It is a mix of art and science, says the post: "But mostly things have been discovered by trial and error, adding ideas and tricks that have progressively built a significant lore about how to work with neural nets."

Epochs

An epoch is one full pass over the training data. Training for multiple epochs shows the model each example repeatedly, an effective way to get it to "remember that example".

Since repeating the same example multiple times is not enough, it is also important to show the neural net different variations of the examples.
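Here is a minimal sketch of both ideas together: several epochs over the same data, with each pass showing a slightly varied copy of every example. The model (y ≈ w * x), the learning rate, and the random input jitter are toy assumptions; jitter is only a stand-in for the image augmentations (shifts, rotations, and so on) used in practice.

```python
import random

def train_with_epochs(examples, epochs, learning_rate=0.01, jitter=0.05, seed=0):
    """Fit y ≈ w * x by stochastic gradient descent.

    Each epoch is one full pass over `examples`. On every pass, each input is
    perturbed with small random noise (a toy stand-in for data augmentation),
    so the model sees a slightly different variation of each example.
    """
    rng = random.Random(seed)
    w = 0.0
    for _ in range(epochs):
        shuffled = examples[:]
        rng.shuffle(shuffled)
        for x, y in shuffled:
            x_variant = x + rng.uniform(-jitter, jitter)  # a varied copy of the example
            error = w * x_variant - y
            w -= learning_rate * 2 * error * x_variant    # gradient of error**2 w.r.t. w
    return w

examples = [(x, 2.0 * x) for x in [0.5, 1.0, 1.5, 2.0, 2.5]]
print(train_with_epochs(examples, epochs=1))   # one pass: still far from 2
print(train_with_epochs(examples, epochs=50))  # many passes: close to 2
```

A single pass leaves the weight well short of the true slope of 2; repeated epochs let the model settle near it despite the variations.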

Weights (Parameters)

You must have heard that one of the LLMs has a whopping 175B parameters. Well, each of those parameters is a knob, and the model's behavior varies based on how the knobs are adjusted.

Essentially, parameters are the "knobs you can turn" to fit the data. The post highlights that the actual learning process of neural networks is all about finding the right weights: "In the end, it's all about determining what weights will best capture the training examples that have been given."
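To see how quickly the knobs add up, here is a small sketch that counts the weights and biases of a fully connected network. The layer sizes are a hypothetical digit classifier; real LLMs are built from Transformer layers, so this does not reproduce the 175B figure, only the arithmetic behind such counts.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    Each layer mapping n_in inputs to n_out outputs has an n_in x n_out weight
    matrix plus n_out biases, i.e. n_in * n_out + n_out adjustable "knobs".
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# Hypothetical digit classifier: 28x28 = 784 pixel inputs, one hidden layer
# of 30 units, and 10 outputs (one per digit).
print(count_parameters([784, 30, 10]))  # 23860
```

Even this toy network has 784 * 30 + 30 + 30 * 10 + 10 = 23,860 knobs, and the count grows roughly with the product of adjacent layer widths.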

Generalization

The neural networks study to “interpolate between the proven examples in an affordable approach”.

This generalization lets the network predict outputs for unseen inputs after learning from many input-output examples.
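The simplest possible version of "interpolating between shown examples" is plain linear interpolation. The sketch below is a deliberately simple stand-in, not how a neural network works internally, and the sample points (taken from y = x**2) are an arbitrary choice. It shows a prediction for an input that was never in the examples.

```python
from bisect import bisect_right

def interpolate(xs, ys, x):
    """Predict y for an unseen x by linear interpolation between the two nearest
    shown examples. `xs` must be sorted in ascending order."""
    if not xs[0] <= x <= xs[-1]:
        raise ValueError("x is outside the range of the shown examples")
    i = bisect_right(xs, x)
    if i == len(xs):  # x equals the last example exactly
        return ys[-1]
    x0, x1 = xs[i - 1], xs[i]
    y0, y1 = ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)

# Shown examples sampled from y = x**2.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [x ** 2 for x in xs]
print(interpolate(xs, ys, 2.5))  # 6.5, close to the true value 6.25
```

The prediction is reasonable but not exact, and outside the range of the examples this approach refuses to guess, a hint of why generalization beyond the training data is the hard part.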

Loss Function

But how do we know what is reasonable? It is defined by how far the output values are from the expected values, which is captured by the loss function.

It gives us a "distance between the values we've got and the true values". To reduce this distance, the weights are adjusted iteratively, but there must be a way to adjust them systematically, in the direction that reduces the loss most quickly.
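A common choice of loss is the sum of squared differences (the L2 loss). The sketch below computes it for a toy model y = w * x at a few weight values; the data and weights are made up for illustration.

```python
def l2_loss(predicted, actual):
    """Sum of squared differences: the "distance" between outputs and true values."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    return sum((p - a) ** 2 for p, a in zip(predicted, actual))

# Toy data that the model y = w * x fits perfectly when w = 2.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]
for w in [1.0, 1.5, 2.0, 2.5]:
    predictions = [w * x for x in xs]
    print(f"w = {w}: loss = {l2_loss(predictions, ys)}")
```

The loss is zero at w = 2 and grows as the weight moves away in either direction, which is what gives the search for good weights a direction to go.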

Gradient Descent

Following the path of steepest descent on the weight landscape is called gradient descent.

It is all about finding the weights that best represent the ground truth by navigating the weight landscape.
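Here is a minimal sketch of gradient descent on a two-weight landscape: fitting y ≈ w * x + b by repeatedly stepping opposite to the gradient of the mean squared error (the L2 loss divided by the number of examples, which has the same minimum). The data, learning rate, and step count are illustrative assumptions.

```python
def gradient_descent(xs, ys, learning_rate=0.05, steps=2000):
    """Minimize the mean squared error of y ≈ w * x + b by full-batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Partial derivatives of mean((w*x + b - y)**2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # The gradient points uphill, so step the opposite way (steepest descent).
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # data generated with w = 2, b = 1
w, b = gradient_descent(xs, ys)
print(f"w = {w:.4f}, b = {b:.4f}")
```

The learning rate controls the step size: too large and the steps overshoot the valley, too small and the descent crawls.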

Backpropagation

Continue reading to learn about backpropagation, which starts from the loss function and works backward through the layers to compute how each weight should change, progressively finding weights that minimize the associated loss.
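To show what "working backward" means, here is a sketch of backpropagation through a tiny network with one tanh hidden unit and a squared-error loss. The chain rule is applied from the loss back to each weight, and the result is checked against finite differences. The network shape and the starting weights are hypothetical.

```python
import math

def forward(params, x):
    """Tiny network: h = tanh(w1 * x + b1), y_hat = w2 * h + b2."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    return h, w2 * h + b2

def loss(params, x, y):
    """Squared error for a single example."""
    _, y_hat = forward(params, x)
    return (y_hat - y) ** 2

def backprop(params, x, y):
    """Gradient of the loss w.r.t. (w1, b1, w2, b2), via the chain rule applied backward."""
    _, _, w2, _ = params
    h, y_hat = forward(params, x)
    d_yhat = 2 * (y_hat - y)   # dL/dy_hat for L = (y_hat - y)**2
    d_w2 = d_yhat * h          # since y_hat = w2 * h + b2
    d_b2 = d_yhat
    d_h = d_yhat * w2          # pass the error back to the hidden unit
    d_z = d_h * (1 - h ** 2)   # tanh'(z) = 1 - tanh(z)**2, with z = w1 * x + b1
    d_w1 = d_z * x
    d_b1 = d_z
    return [d_w1, d_b1, d_w2, d_b2]

def numerical_gradient(params, x, y, eps=1e-6):
    """Central finite differences, used here only to check backprop."""
    grads = []
    for i in range(len(params)):
        up = list(params)
        down = list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss(up, x, y) - loss(down, x, y)) / (2 * eps))
    return grads

params = [0.5, -0.2, 1.5, 0.1]  # hypothetical weights
print(backprop(params, x=1.2, y=0.3))
print(numerical_gradient(params, x=1.2, y=0.3))
```

Backpropagation gets every gradient from one backward sweep, whereas the finite-difference check needs two extra loss evaluations per weight. That difference is why backpropagation is used for networks with millions or billions of weights.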

Hyperparameters

In addition to weights (aka the parameters), there are hyperparameters, which include different choices of the loss function, the loss-minimization method, and even how big a "batch" of examples should be.
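The sketch below separates the two kinds of settings: a `Hyperparameters` object (a hypothetical name) holds choices made before training, such as learning rate, batch size, and number of epochs, while `w` and `b` are the weights that training learns. The toy model and data are illustrative assumptions, and mini-batch gradient descent on mean squared error is just one possible choice of minimization method.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class Hyperparameters:
    """Choices made before training; unlike weights, these are not learned."""
    learning_rate: float = 0.05
    batch_size: int = 2
    epochs: int = 500
    seed: int = 0

def minibatches(examples, batch_size, rng):
    """Shuffle the examples and yield them in chunks of `batch_size`."""
    shuffled = examples[:]
    rng.shuffle(shuffled)
    for start in range(0, len(shuffled), batch_size):
        yield shuffled[start:start + batch_size]

def train(examples, hp):
    """Fit y ≈ w * x + b with mini-batch gradient descent on mean squared error."""
    rng = random.Random(hp.seed)
    w, b = 0.0, 0.0  # the learned parameters
    for _ in range(hp.epochs):
        for batch in minibatches(examples, hp.batch_size, rng):
            n = len(batch)
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / n
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / n
            w -= hp.learning_rate * grad_w
            b -= hp.learning_rate * grad_b
    return w, b

examples = [(x, 2 * x + 1) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]
print(train(examples, Hyperparameters()))                           # mini-batches of 2
print(train(examples, Hyperparameters(batch_size=5, epochs=1000)))  # full batch
```

Both runs reach the same weights here because the toy data is noise-free. On real data, batch size and learning rate change how noisy and how fast the descent is, which is why they get tuned.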

Neural Networks For Complex Problems

The use of neural networks for complex problems is widely discussed. However, the logic behind why this works is rarely spelled out. This post explains it: with many weight variables in a high-dimensional space, there are many different directions that can lead to the minimum.

Now compare this with having fewer variables, which raises the possibility of getting stuck in a local minimum with no direction to get out.
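The following sketch shows what getting stuck looks like with a single weight. The function f(w) = w**4 - 3*w**2 + w is an arbitrary toy loss with two valleys. Which valley plain gradient descent ends up in depends only on where it starts. This illustrates the low-dimensional case only; it does not demonstrate the high-dimensional argument.

```python
def f(w):
    """A toy one-dimensional loss with two valleys (an arbitrary example)."""
    return w ** 4 - 3 * w ** 2 + w

def df(w):
    """Derivative of f."""
    return 4 * w ** 3 - 6 * w + 1

def descend(w, learning_rate=0.01, steps=1000):
    """Plain gradient descent from a given starting point."""
    for _ in range(steps):
        w -= learning_rate * df(w)
    return w

for start in [-2.0, 2.0]:
    end = descend(start)
    print(f"start {start:+.1f} -> w = {end:.3f}, loss = {f(end):.3f}")
```

Starting at w = 2 the descent settles in the shallower valley near w ≈ 1.13. The slope there is zero, so with only one weight there is no other direction to try, even though a deeper valley exists near w ≈ -1.30.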

Stay tuned for a follow-up post on how to build upon this knowledge to understand how ChatGPT works.

Vidhi Chugh is an AI strategist and a digital transformation leader working at the intersection of product, sciences, and engineering to build scalable machine learning systems. She is an award-winning innovation leader, an author, and an international speaker. She is on a mission to democratize machine learning and break the jargon for everyone to be a part of this transformation.