Since the introduction of generative AI, large language models (LLMs) have conquered the world and found their way into search engines.

But is it possible to proactively influence AI performance via large language model optimization (LLMO) or generative AI optimization (GAIO)?

This article discusses the evolving landscape of SEO and the uncertain future of LLM optimization in AI-powered search engines, with insights from data science experts.

What is LLM optimization or generative AI optimization (GAIO)?

GAIO aims to help companies position their brands and products prominently in the outputs of leading LLMs, such as GPT and Google Bard, because these models can influence many future purchase decisions.

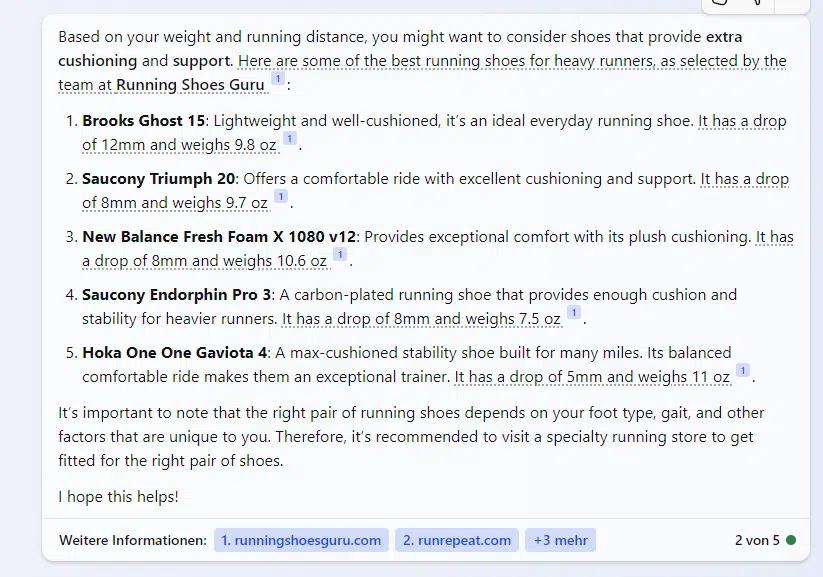

For example, if you ask Bing Chat for the best running shoes for a 96-kilogram runner who runs 20 kilometers per week, it suggests Brooks, Saucony, Hoka and New Balance shoes.

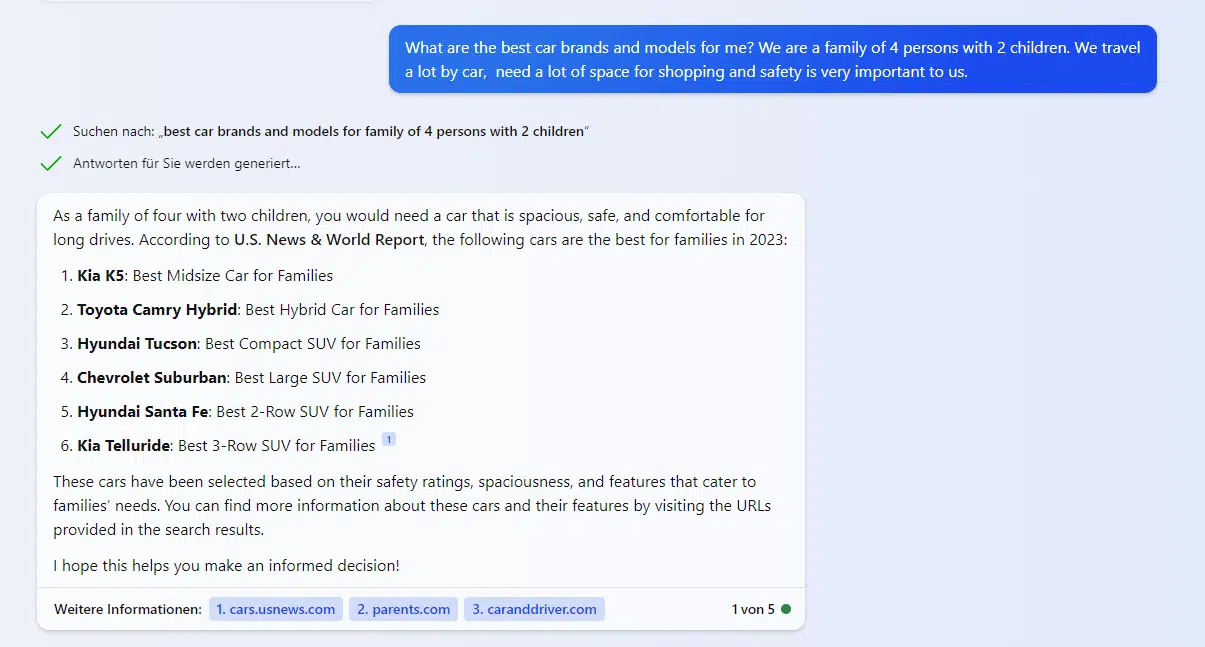

When you ask Bing Chat for safe, family-friendly cars that are big enough for shopping and travel, it suggests Kia, Toyota, Hyundai and Chevrolet models.

The aim of potential methods such as LLM optimization is to get certain brands and products preferred in the answers to such transaction-oriented questions.

How are these recommendations made?

Suggestions from Bing Chat and other generative AI tools are always contextual. The AI mostly uses neutral secondary sources such as trade magazines, news sites, association and public institution websites, and blogs as a source for recommendations.

The output of generative AI is based on the determination of statistical frequencies. The more often words appear in sequence in the source data, the more likely it is that the desired word is the correct one in the output.

Words that are frequently mentioned together in the training data are statistically more similar or semantically more closely related.
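To make the frequency idea tangible, here is a minimal sketch in Python. It is only a toy bigram counter over a made-up three-sentence corpus, not how a transformer actually works (real LLMs learn billions of parameters over subword tokens). The function names `bigram_model` and `next_word_probabilities` and the sample sentences are assumptions for illustration.

```python
from collections import Counter, defaultdict


def bigram_model(corpus):
    """Count how often each word directly follows another word."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def next_word_probabilities(follows, word):
    """Turn the raw follow-up counts for `word` into relative frequencies."""
    counts = follows.get(word, Counter())
    total = sum(counts.values())
    if total == 0:
        return {}
    return {w: c / total for w, c in counts.items()}


# Hypothetical mini corpus; a real model is trained on vastly more text.
corpus = [
    "brooks shoes are cushioned",
    "brooks shoes are durable",
    "brooks shoes feel cushioned",
]

model = bigram_model(corpus)
probs = next_word_probabilities(model, "shoes")
most_likely = max(probs, key=probs.get)
print(most_likely, probs)
```

In this toy corpus, "are" follows "shoes" more often than "feel" does, so it gets the higher relative frequency. The more often a sequence appears in the source data, the more likely it is to be reproduced.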

Which brands and products are mentioned in a certain context can be explained by the way LLMs work.

LLMs in action

Modern transformer-based LLMs such as GPT or Bard are based on a statistical analysis of the co-occurrence of tokens or words.

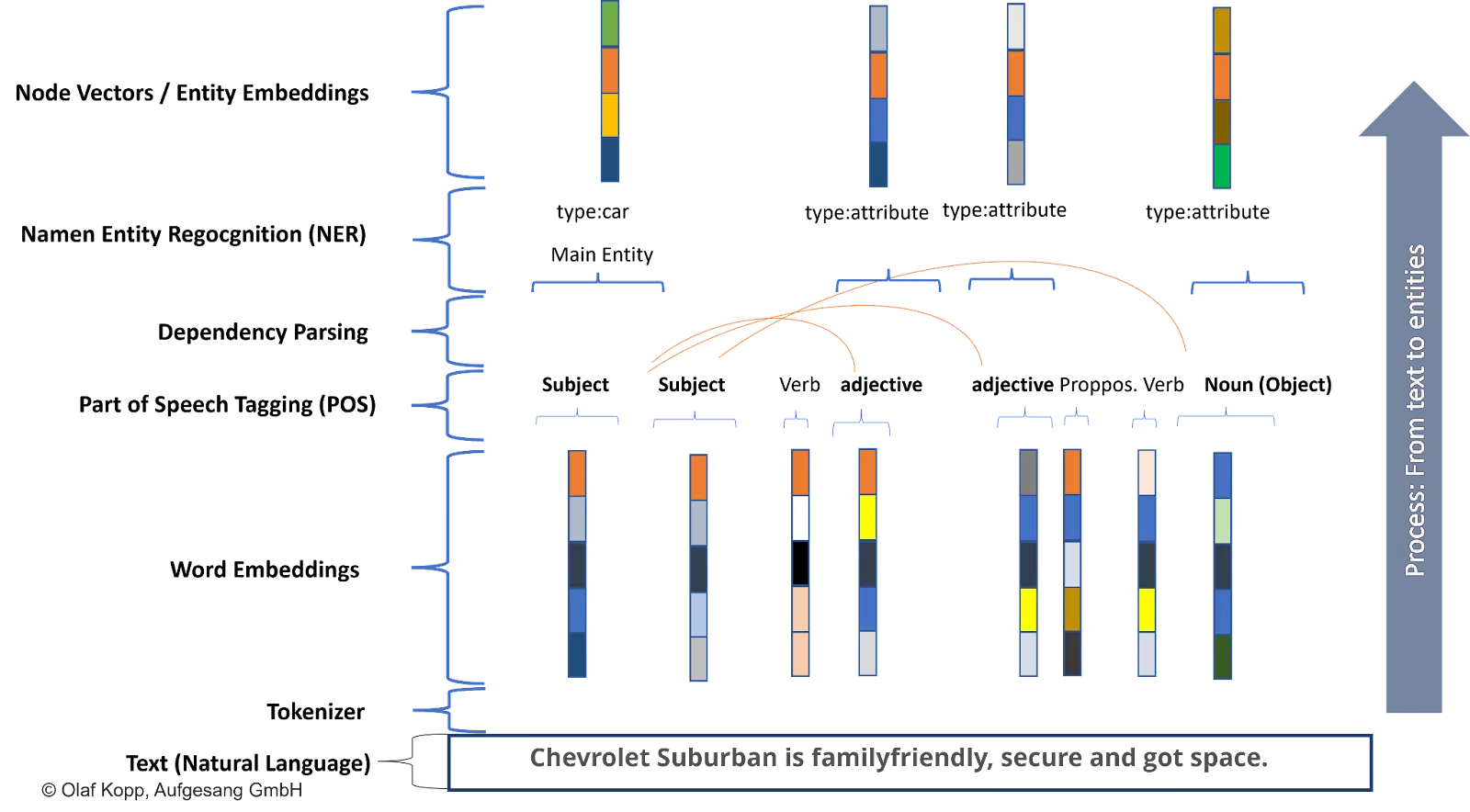

To do this, texts and data are broken down into tokens for machine processing and positioned in semantic spaces using vectors. Vectors can also represent whole words (Word2Vec), entities (Node2Vec), and attributes.

In semantics, the semantic space is also described as an ontology. Since LLMs rely more on statistics than semantics, they are not ontologies. However, the AI gets closer to semantic understanding due to the sheer amount of data.

Semantic proximity can be determined by Euclidean distance or by cosine similarity (the angle between two vectors) in semantic space.
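Both measures can be sketched in a few lines of Python. The three-dimensional vectors below are invented for illustration; real embeddings have hundreds or thousands of dimensions and come from a trained model, and the keys `suburban`, `family_friendly` and `sports_car` are hypothetical.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two vectors (smaller = closer)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (closer to 1 = more similar)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional embeddings, made up for this example.
vectors = {
    "suburban": [0.9, 0.8, 0.1],
    "family_friendly": [0.8, 0.9, 0.2],
    "sports_car": [0.1, 0.2, 0.9],
}

sim_family = cosine_similarity(vectors["suburban"], vectors["family_friendly"])
sim_sports = cosine_similarity(vectors["suburban"], vectors["sports_car"])
dist_family = euclidean_distance(vectors["suburban"], vectors["family_friendly"])
dist_sports = euclidean_distance(vectors["suburban"], vectors["sports_car"])

print(f"cosine:    family={sim_family:.3f}  sports={sim_sports:.3f}")
print(f"euclidean: family={dist_family:.3f}  sports={dist_sports:.3f}")
```

With these made-up numbers, the entity vector sits closer to the "family-friendly" attribute than to "sports car" under both measures, which is the kind of proximity the text describes.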

If an entity is frequently mentioned in connection with certain other entities or properties in the training data, there is a high statistical probability of a semantic relationship.

This method of processing is called transformer-based natural language processing.

NLP describes the process of transforming natural language into a machine-understandable form that enables communication between humans and machines.

NLP comprises natural language understanding (NLU) and natural language generation (NLG).

When training LLMs, the focus is on NLU, and when outputting AI-generated results, the focus is on NLG.

Identifying entities via named entity extraction plays a special role in semantic understanding and in determining an entity's meaning within a thematic ontology.

Due to the frequent co-occurrence of certain words, their vectors move closer together in the semantic space: the semantic proximity increases, and the probability of membership increases.

The results are output via NLG according to statistical probability.

For example, suppose the Chevrolet Suburban is often mentioned in the context of family and safety.

In that case, the LLM can associate this entity with certain attributes such as safe or family-friendly. There is a high statistical probability that this car model is associated with these attributes.
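The Suburban example can be illustrated with a simple co-occurrence count. This is a rough sketch under stated assumptions: the four documents are invented, terms are matched by naive substring search, and the helper `cooccurrence_counts` is hypothetical. An LLM does not store such a table explicitly, but counts like these are the kind of signal that pulls vectors closer together.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(documents, vocabulary):
    """Count in how many documents each pair of vocabulary terms appears together."""
    counts = Counter()
    for doc in documents:
        text = doc.lower()
        # Naive substring matching; sorted so each pair has one canonical order.
        present = sorted(term for term in vocabulary if term in text)
        for pair in combinations(present, 2):
            counts[pair] += 1
    return counts


# Invented example documents standing in for training data.
documents = [
    "The Chevrolet Suburban is a safe choice for a large family.",
    "Reviewers call the Suburban family-friendly and safe.",
    "The Suburban has a large cargo area.",
    "This coupe is fast but not family-friendly.",
]
vocabulary = {"suburban", "safe", "family", "fast"}

counts = cooccurrence_counts(documents, vocabulary)
for pair, n in counts.most_common():
    print(pair, n)
```

Here "suburban" co-occurs with "safe" and "family" in two documents each and never with "fast", so a statistical model would have more reason to associate the entity with the first two attributes.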

Can the outputs of generative AI be influenced proactively?

I have not heard conclusive answers to this question, only unfounded speculation.

To get closer to an answer, it makes sense to approach it from a data science perspective, that is, to ask people who know how large language models work.

I asked three data science experts from my network. Here's what they said.

Kai Spriestersbach, applied AI researcher and SEO veteran:

- “Theoretically, it's certainly possible, and it cannot be ruled out that political actors or states might go to such lengths. Frankly, I actually think some do. However, from a purely practical standpoint, for business marketing, I don't see this as a viable way to intentionally influence the “opinion” or perception of an AI, unless it's also influencing public opinion at the same time, for instance, through traditional PR or branding.

- “With commercial large language models, it is not publicly disclosed what training data is used, nor how it is filtered and weighted. Moreover, commercial providers utilize alignment strategies to ensure the AI's responses are as neutral and uncontroversial as possible, irrespective of the training data.

- “Ultimately, one would have to ensure that over 50% of the statements in the training data reflect the desired sentiment, which in the extreme case means flooding the net with posts and texts, hoping they get incorporated into the training data.”

Barbara Lampl, behavioral mathematician and COO at Genki:

- “While it's theoretically possible to influence an LLM through a synchronized effort of content, PR, and mentions, the data science mechanics will underscore the increasing challenges and diminishing rewards of such an approach.

- “The endeavor's complexity, when analyzed through the lens of data science, becomes even more pronounced and arguably unfeasible.”

Philip Ehring, Head of Business Intelligence at Reverse-Retail:

- “The dynamics between LLMs and systems like ChatGPT and SEO ultimately remain the same at the end of the equation. Only the perspective of optimization will switch to another tool, which is in fact nothing more than a better interface for classical information retrieval systems…

- “In the end, it's an optimization for a hybrid metasearch engine with a natural language user interface that summarizes the results for you.”

The following points can be made from a data science perspective:

- For large commercial language models, the training database is not public, and tuning strategies are used to ensure neutral and uncontroversial responses. To embed the desired opinion in the AI, more than 50% of the training data would have to reflect that opinion, which would be extremely difficult to achieve.

- It is difficult to make a meaningful impact due to the huge amount of data and the statistical significance required.

- The dynamics of network proliferation, time factors, model regularization, feedback loops, and economic costs are obstacles.

- In addition, the delay in model updates makes it difficult to exert influence.

- Because of the large number of co-occurrences that must be created, depending on the market, it is only possible to influence the output of a generative AI regarding one's own products and brand with a greater commitment to PR and marketing.

- Another challenge is to identify the sources that will be used as training data for the LLMs.

- The core dynamics between LLMs and systems like ChatGPT or Bard and SEO remain consistent. The only change is in the optimization perspective, which shifts to a better interface for classical information retrieval.

- ChatGPT's fine-tuning process involves a reinforcement learning layer that generates responses based on learned contexts and prompts.

- Traditional search engines like Google and Bing are used to target quality content and domains like Wikipedia or GitHub. The integration of models like BERT into these systems has been a known advancement. Google's BERT changes how information retrieval understands user queries and contexts.

- User input has long directed the focus of web crawls for LLMs. The likelihood of an LLM using content from a crawl for training is influenced by the document's findability on the web.

- While LLMs excel at computing similarities, they are not as proficient at providing factual answers or solving logical tasks. To address this, Retrieval-Augmented Generation (RAG) uses external data stores to offer better, sourced answers.

- The integration of web crawling offers dual benefits: improving ChatGPT's relevance and training, and enhancing SEO. A challenge remains in human labeling and ranking of prompts and responses for reinforcement learning.

- The prominence of content in LLM training is influenced by its relevance and discoverability. The impact of specific content on an LLM is challenging to quantify, but having one's brand recognized within a context is a significant achievement.

- RAG mechanics also improve the quality of responses by using higher-ranked content. This presents an optimization opportunity by aligning content with potential answers.

- The evolution in SEO isn't a completely new approach but a shift in perspective. It involves understanding which search engines are prioritized by systems like ChatGPT, incorporating prompt-generated keywords into research, targeting relevant pages for content, and structuring content for optimal mention in responses.

- Ultimately, the goal is to optimize for a hybrid metasearch engine with a natural language interface that summarizes results for users.
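The RAG mechanics mentioned in the points above can be sketched roughly as follows. This is a deliberately simplified illustration, not how Bing Chat, ChatGPT or SGE are implemented: the retriever scores documents by plain word overlap instead of embeddings or a search index, the documents and source names are invented, and `retrieve` and `build_prompt` are hypothetical helpers. The final call to a language model is left out.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Rank documents by word overlap with the query and keep the best ones."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc["text"])),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query, passages):
    """Assemble a prompt that asks the model to answer from the cited sources."""
    sources = "\n".join(
        f"[{i + 1}] {p['text']} (source: {p['source']})"
        for i, p in enumerate(passages)
    )
    return f"Answer using only the sources below.\n{sources}\n\nQuestion: {query}"


# Invented external data store; source names are placeholders.
documents = [
    {"source": "example-magazine", "text": "The Suburban is a safe family car with a large trunk."},
    {"source": "example-blog", "text": "Trail running shoes need good grip."},
    {"source": "example-news", "text": "A safe car protects passengers in a crash."},
]

query = "Which family car is safe?"
passages = retrieve(query, documents)
prompt = build_prompt(query, passages)
print(prompt)
```

The point of the sketch is the order of operations: content that is retrieved and ranked highly for a prompt ends up in the context the model summarizes, which is why findability matters for being mentioned in the answer.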

How could the training data for the LLMs be selected?

There are two possible approaches here: E-E-A-T and ranking.

We can assume that the providers of the well-known LLMs only use sources as training data that meet a certain quality standard and are trustworthy.

One way to select these sources would be Google's E-E-A-T concept. Regarding entities, Google can use the Knowledge Graph for fact-checking and fine-tuning the LLM.

The second approach, as suggested by Philip Ehring, is to select training data based on relevance and quality as determined by the actual ranking process. In other words, top-ranking content for the corresponding queries and prompts is automatically used for training the LLMs.

This approach assumes that the information retrieval wheel does not have to be reinvented and that search engines rely on established evaluation procedures to select training data. This would then include E-E-A-T in addition to relevance evaluation.

However, tests on Bing Chat and SGE have not shown any clear correlations between the referenced sources and the rankings.

Influencing AI-powered SEO

It remains to be seen whether LLM optimization or GAIO will really become a legitimate strategy for influencing LLMs in line with a brand's own goals.

On the data science side, there is skepticism. Some SEOs believe in it.

If it does prove viable, the following goals need to be achieved:

- Establish your own media via E-E-A-T as a source of training data.

- Generate mentions of your brand and products in qualified media.

- Create co-occurrences of your own brand with other relevant entities and attributes in qualified media.

- Become part of the knowledge graph.

I have explained what measures to take to achieve this in the article How to improve E-A-T for websites and entities.

The chances of success with LLM optimization decrease as the size of the market grows. The more niche a market is, the easier it is to position yourself as a brand in the respective thematic context.

This means that fewer co-occurrences in the qualified media are required to be associated with the relevant attributes and entities in the LLMs. The larger the market, the more difficult this is, as many market participants have large PR and marketing resources and a long history.

GAIO or LLM optimization requires significantly more resources than classic SEO to influence public perception.

At this point, I would like to refer to my concept of Digital Authority Management. You can read more about this in the article Authority Management: A New Discipline in the Age of SGE and E-E-A-T.

Suppose LLM optimization turns out to be a sensible SEO strategy. In that case, large brands will have significant advantages in search engine positioning and generative AI results in the future due to their PR and marketing resources.

Another perspective is that one can continue in SEO as before, since well-ranking content can also be used for training the LLMs at the same time. Here, too, one should pay attention to co-occurrences between brands or products and attributes or other entities, and optimize for them.

However, tests on Bing Chat and SGE have not yet shown clear correlations between referenced sources and rankings.

Which of these approaches will be the future for SEO is unclear and will only become apparent when SGE is finally launched.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land. Staff authors are listed here.