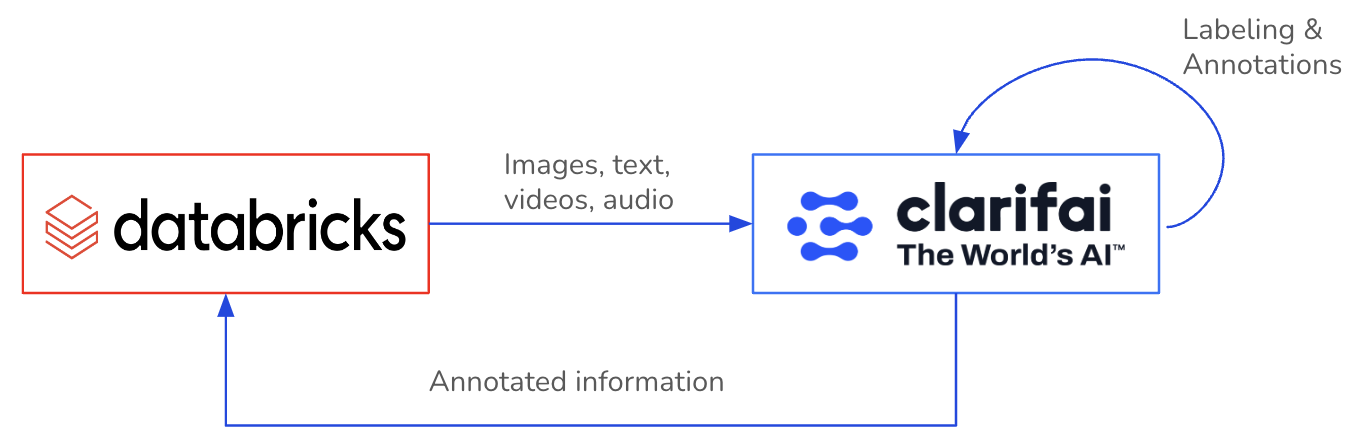

Databricks, the data and AI company, combines the best of data warehouses and data lakes to offer an open and unified platform for data and AI. The Clarifai and Databricks partnership now enables our joint customers to gain insights from their visual and textual data at scale.

A major bottleneck for many AI projects or applications is having data that is sufficient in volume, sufficient in quality, and sufficiently labeled. Deriving value from unstructured data becomes a whole lot simpler when you can annotate it directly in the place where you already trust your enterprise data to live. Why build data pipelines and use multiple tools when a single one will suffice?

The ClarifaiPySpark SDK empowers Databricks users to create and initiate machine learning workflows, perform data annotations, and access other features. In doing so, it resolves the complexities of cross-platform data access, annotation processes, and the effective extraction of insights from large-scale visual and textual datasets.

In this blog, we will explore how the ClarifaiPySpark SDK connects Clarifai and Databricks, facilitating bi-directional import and export of data and enabling the retrieval of data annotations from your Clarifai applications into Databricks.

Installation

Install the ClarifaiPyspark SDK in your Databricks workspace (in a notebook) by running pip install clarifai-pyspark.

Begin by obtaining your personal access token (PAT) from the instructions here and configuring it as a Databricks secret. Sign up here.

In Clarifai, applications serve as the basic unit for developing projects. They house your data, annotations, models, workflows, predictions, and searches. Feel free to create multiple applications and modify or remove them as needed.

Integrating your Clarifai App with Databricks through the ClarifaiPyspark SDK is a straightforward process. The SDK can be used within IPython notebooks or Python script files in your Databricks workspace.

Generate a Clarifai PySpark Instance

Create a ClarifaiPyspark client object to establish a connection with your Clarifai App.

Obtain the dataset object for the specific dataset within your App. If it does not exist, this will automatically create a new dataset within the App.

In this initial version of the SDK, we have focused on a scenario where users can seamlessly transfer their dataset from Databricks volumes or an S3 bucket to their Clarifai App. After annotating the data within the App, users can export both the data and its annotations from the App and store them in their preferred format. Now, let’s explore the technical aspects of accomplishing this.

Ingesting Data from Databricks into the Clarifai App

The ClarifaiPyspark SDK offers several methods for ingesting/uploading your dataset from both Databricks Volumes and AWS S3 buckets, giving you the freedom to select the most suitable approach. Let’s explore how you can ingest data into your Clarifai app using these methods.

1. Upload from Volume folder

If your dataset images or text files are stored within a Databricks volume, you can directly upload the data files from the volume to your Clarifai App. Please ensure that the folder contains only images/text files. If the folder name serves as the label for all the images within it, you can set the labels parameter to True.
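The folder-name-as-label convention can be illustrated without the SDK. The sketch below is plain Python, not ClarifaiPyspark code: the helper `label_files_in_folder` and the folder name `cat` are hypothetical, and it only shows how every file in a folder would be paired with that folder's name as its label.

```python
import tempfile
from pathlib import Path


def label_files_in_folder(folder):
    """Pair each file in `folder` with the folder's own name as its label.

    Hypothetical helper for illustration only; it is not part of the SDK.
    """
    folder = Path(folder)
    return [(p.name, folder.name) for p in sorted(folder.iterdir()) if p.is_file()]


# Build a throwaway folder named after the label it represents.
with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp) / "cat"
    folder.mkdir()
    for name in ["a.jpg", "b.jpg"]:
        (folder / name).write_bytes(b"")  # empty placeholder files
    labeled = label_files_in_folder(folder)

print(labeled)
```

This is also why the folder should contain only images or text files: anything else in it would pick up the same label.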

2. Upload from CSV

You can populate the dataset from a CSV that must include these essential columns: ‘inputid’ and ‘input’. Additional supported columns in the CSV are ‘concepts’, ‘metadata’, and ‘geopoints’. The ‘input’ column can contain a file URL or path, or it can hold raw text. If the ‘concepts’ column exists in the CSV, set ‘labels=True’. You also have the option to use a CSV file directly from your AWS S3 bucket. Simply specify the ‘source’ parameter as ‘s3’ in such cases.
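Before uploading, it can help to check that a CSV header matches the columns described above. The following is a minimal sketch using only the Python standard library; `check_csv_header` is a hypothetical helper and not part of the SDK, and the sample rows and URL are made up. How multiple concepts are encoded within a single cell is not covered here.

```python
import csv
import io

REQUIRED_COLUMNS = {"inputid", "input"}
OPTIONAL_COLUMNS = {"concepts", "metadata", "geopoints"}


def check_csv_header(csv_text):
    """Return (missing_required, unknown, has_concepts) for a CSV's header row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    header = set(reader.fieldnames or [])
    missing = sorted(REQUIRED_COLUMNS - header)
    unknown = sorted(header - REQUIRED_COLUMNS - OPTIONAL_COLUMNS)
    return missing, unknown, "concepts" in header


# Made-up sample: one image URL row and one raw-text row.
sample = (
    "inputid,input,concepts\n"
    "img-001,https://example.com/cat.jpg,cat\n"
    "txt-001,The quick brown fox,animal\n"
)
missing, unknown, has_concepts = check_csv_header(sample)
print(missing, unknown, has_concepts)
```

If `has_concepts` comes back true, that is the case in which ‘labels=True’ applies.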

3. Upload from Delta table

You can use a delta table to populate a dataset in your App. The table should include these essential columns: ‘inputid’ and ‘input’. The delta table also supports additional columns such as ‘concepts’, ‘metadata’, and ‘geopoints’. The ‘input’ column is versatile: it can contain file URLs or paths, as well as raw text. If the ‘concepts’ column is present in the table, remember to enable the ‘labels’ parameter by setting it to ‘True’. You can also use a delta table stored within your AWS S3 bucket by providing its S3 path.

4. Upload from Dataframe

You can upload a dataset from a dataframe that must include these required columns: ‘inputid’ and ‘input’. The dataframe also supports other columns such as ‘concepts’, ‘metadata’, and ‘geopoints’. The ‘input’ column can contain file URLs or paths, or it can hold raw text. If the dataframe contains the ‘concepts’ column, set ‘labels=True’.

5. Upload with Custom Dataloader

If your dataset is stored in an alternative format or requires preprocessing, you can supply a custom dataloader class object. You can explore various dataloader examples for reference here. The files and folders required by the dataloader should be stored in Databricks volume storage.
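To give a feel for what a custom dataloader does, here is a rough sketch in plain Python. It is not the interface the SDK expects: the class name `JsonLinesTextLoader`, the JSON field names (`id`, `text`, `label`), and the output record shape are all assumptions made for illustration. It only shows the general idea of hiding format-specific parsing and preprocessing behind indexable access; refer to the dataloader examples for the actual base class and return types.

```python
import json
import tempfile
from pathlib import Path


class JsonLinesTextLoader:
    """Hypothetical loader: reads one JSON object per line and normalizes it.

    Illustrative only; not the SDK's dataloader interface.
    """

    def __init__(self, path):
        self.records = []
        with open(path, encoding="utf-8") as handle:
            for line in handle:
                line = line.strip()
                if not line:
                    continue  # skip blank lines
                raw = json.loads(line)
                self.records.append({
                    "inputid": str(raw["id"]),
                    "input": raw["text"].strip(),        # preprocessing: trim whitespace
                    "concepts": [raw["label"].lower()],  # preprocessing: normalize label
                })

    def __len__(self):
        return len(self.records)

    def __getitem__(self, index):
        return self.records[index]


# Write a small made-up file and load it.
with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "reviews.jsonl"
    source.write_text(
        '{"id": 1, "text": "  Great product ", "label": "Positive"}\n'
        '{"id": 2, "text": "Arrived broken", "label": "Negative"}\n',
        encoding="utf-8",
    )
    loader = JsonLinesTextLoader(source)

print(len(loader), loader[0])
```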

Fetching Dataset Information from Clarifai App

The ClarifaiPyspark SDK provides several ways to bring your dataset from the Clarifai App into a Databricks volume. Whether you're interested in retrieving input details or downloading input files into your volume storage, we’ll walk you through the process.

1. Retrieve data file details in JSON format

To access information about the data files within your Clarifai App’s dataset, you can use the list_inputs() function, which returns a JSON response. You may use the ‘input_type’ parameter to retrieve the details for a specific type of data file such as ‘image’, ‘video’, ‘audio’, or ‘text’.

2. Retrieve data file details as a dataframe

You can also obtain input details in a structured dataframe format, featuring columns such as ‘input_id’, ‘image_url/text_url’, ‘image_info/text_info’, ‘input_created_at’, and ‘input_modified_at’. Be sure to specify the ‘input_type’ when using this function. Please note that the JSON response might include additional attributes.

3. Download image/text files from Clarifai App to Databricks Volume

You can directly download the image/text files from your Clarifai App’s dataset to your Databricks volume. You'll need to specify the storage path in the volume for the download and pass the response obtained from list_inputs() as the parameter.

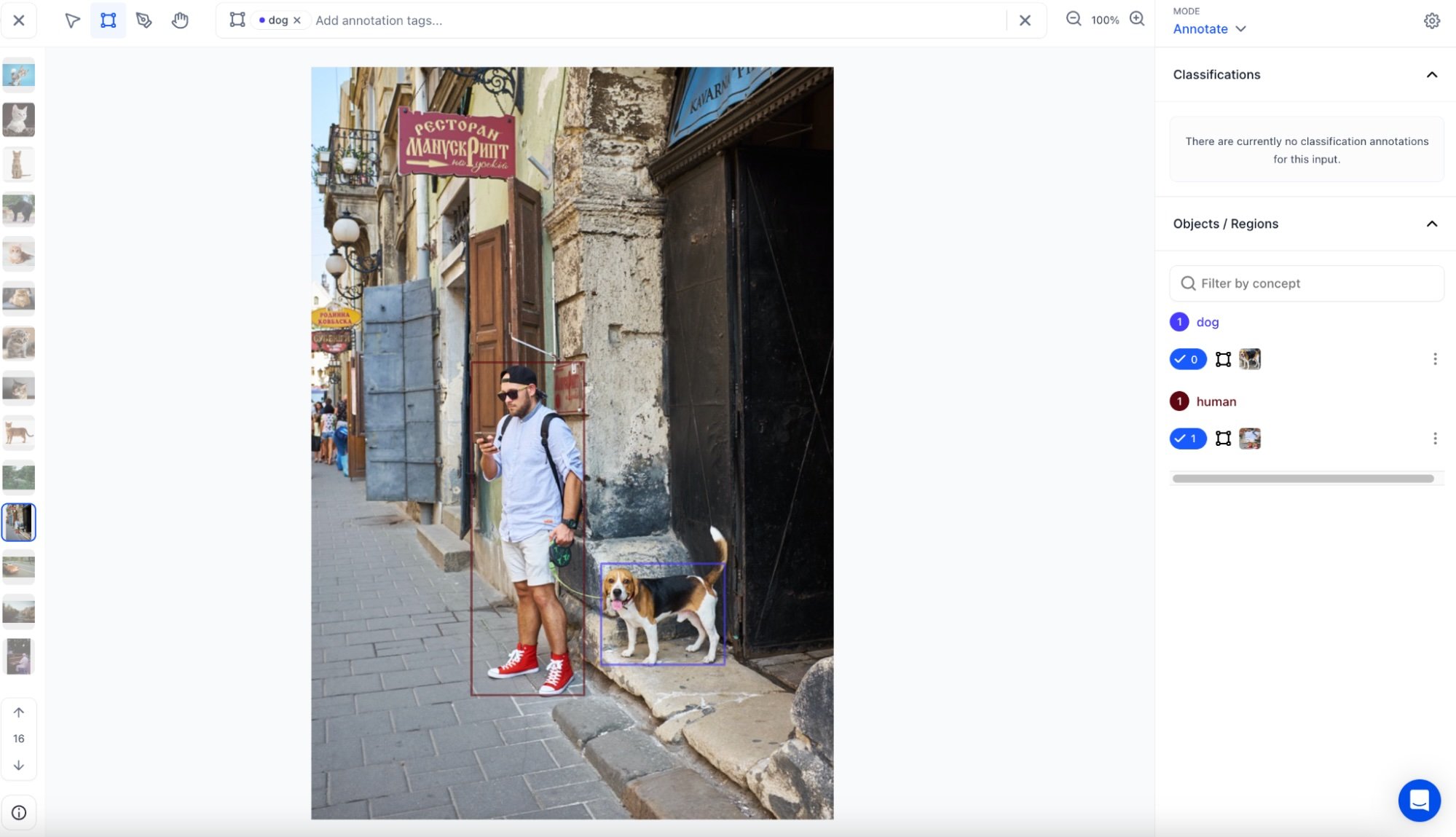

Fetching Annotations from Clarifai App

As you may be aware, the Clarifai platform enables you to annotate your data in various ways, including bounding boxes, segmentations, or simple labels. After you annotate your dataset within the Clarifai App, you can extract all annotations from the app in either JSON or dataframe format. From there, you can store them as you prefer, such as converting them into a delta table or saving them as a CSV file.

1. Retrieve annotation details in JSON format

To obtain the annotations within your Clarifai App’s dataset, you can use the SDK's annotation listing function, which returns a JSON response. You also have the option to specify a list of input IDs for which you require annotations.

2. Retrieve annotation details as a dataframe

You can also acquire annotations in a structured dataframe format, including columns like ‘annotation_id’, ‘annotation’, ‘annotation_user_id’, ‘input_id’, ‘annotation_created_at’ and ‘annotation_modified_at’. If necessary, you can specify a list of input IDs for which you require annotations. Please note that the JSON response may contain supplementary attributes.
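Once annotations are in tabular form with the columns listed above, saving them as a CSV file is straightforward. The sketch below uses only the Python standard library rather than a Spark dataframe. The row values are made up, and the nested structure inside ‘annotation’ is an assumption for illustration, not the actual Clarifai response shape.

```python
import csv
import io
import json

COLUMNS = [
    "annotation_id", "annotation", "annotation_user_id",
    "input_id", "annotation_created_at", "annotation_modified_at",
]

# Made-up row; real values come from your Clarifai App.
rows = [
    {
        "annotation_id": "ann-1",
        # Nested annotation data is serialized to a JSON string so it fits one cell.
        "annotation": json.dumps({"concepts": [{"name": "cat", "value": 1.0}]}),
        "annotation_user_id": "user-1",
        "input_id": "img-001",
        "annotation_created_at": "2024-01-01T00:00:00Z",
        "annotation_modified_at": "2024-01-02T00:00:00Z",
    },
]

buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
writer.writeheader()
writer.writerows(rows)
csv_text = buffer.getvalue()

# Read it back to confirm the nested annotation survives the round trip.
restored = list(csv.DictReader(io.StringIO(csv_text)))
print(restored[0]["input_id"], json.loads(restored[0]["annotation"]))
```

Serializing the nested annotation as a JSON string keeps one annotation per row, at the cost of having to parse that cell again when reading the file back.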

3. Acquire inputs with their associated annotations in a dataframe

You can retrieve both input details and their corresponding annotations simultaneously with a single function. It produces a dataframe that consolidates data from both the annotations and inputs dataframes described in the sections above.
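Conceptually, the consolidated dataframe is a join of inputs and annotations on the input ID. Here is a minimal sketch of that idea with plain Python dictionaries. The helper `join_inputs_with_annotations`, the sample rows, and the choice of a left join (keeping inputs that have no annotations) are assumptions for illustration; the SDK's actual join behavior is not stated here.

```python
from collections import defaultdict

# Made-up rows using a subset of the columns described earlier.
inputs = [
    {"input_id": "img-001", "image_url": "https://example.com/cat.jpg"},
    {"input_id": "img-002", "image_url": "https://example.com/dog.jpg"},
]
annotations = [
    {"annotation_id": "ann-1", "input_id": "img-001", "annotation": "cat"},
    {"annotation_id": "ann-2", "input_id": "img-001", "annotation": "pet"},
]


def join_inputs_with_annotations(inputs, annotations):
    """Left-join annotations onto inputs by input_id (one row per annotation)."""
    by_input = defaultdict(list)
    for ann in annotations:
        by_input[ann["input_id"]].append(ann)

    joined = []
    for inp in inputs:
        # Inputs without annotations still produce one row, with no annotation keys.
        matches = by_input.get(inp["input_id"]) or [{}]
        for ann in matches:
            joined.append({**inp, **ann})
    return joined


combined = join_inputs_with_annotations(inputs, annotations)
for row in combined:
    print(row)
```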

Example

Let’s go through an example where you fetch the annotations from your Clarifai App’s dataset and store them in a delta live table on Databricks.

Conclusion

In this blog we walked through the integration between Databricks and Clarifai using the ClarifaiPyspark SDK. The SDK covers a range of methods for ingesting and retrieving datasets, letting you choose the most suitable approach for your specific requirements. Whether you are uploading data from Databricks volumes or AWS S3 buckets, exporting data and annotations to preferred formats, or using custom data loaders, the SDK offers a robust set of functionality. Here’s our SDK GitHub repository: link.

More features and enhancements will be released in the near future to deepen the integration between Databricks and Clarifai. Stay tuned for more updates, and send any feedback to product-feedback@clarifai.com.