Picture by Freepik

Pure Language Processing, or NLP, is a subject inside synthetic intelligence for machines to have the flexibility to know textual knowledge. NLP analysis has existed for a very long time, however solely lately has it change into extra outstanding with the introduction of massive knowledge and better computational processing energy.

With the NLP subject turning into greater, many researchers would attempt to enhance the machine’s functionality to know the textual knowledge higher. By means of a lot progress, many strategies are proposed and utilized within the NLP subject.

This text will evaluate numerous strategies for processing textual content knowledge within the NLP subject. This text will deal with discussing RNN, Transformers, and BERT as a result of it’s the one that’s typically utilized in analysis. Let’s get into it.

Recurrent Neural Community or RNN was developed in 1980 however solely lately gained attraction within the NLP subject. RNN is a selected kind throughout the neural community household used for sequential knowledge or knowledge that may’t be unbiased of one another. Sequential knowledge examples are time sequence, audio, or textual content sentence knowledge, mainly any sort of knowledge with significant order.

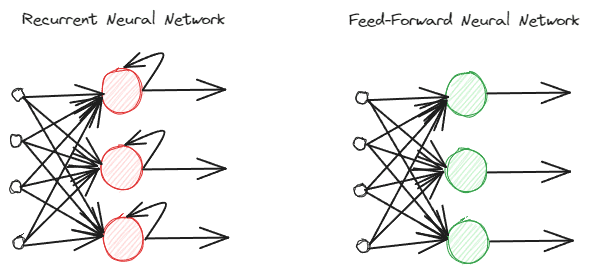

RNNs are totally different from common feed-forward neural networks as they course of data otherwise. Within the regular feed-forward, the data is processed following the layers. Nonetheless, RNN is utilizing a loop cycle on the data enter as consideration. To grasp the variations, let’s see the picture beneath.

Picture by Creator

As you may see, the RNNs mannequin implements a loop cycle throughout the data processing. RNNs would think about the present and former knowledge enter when processing this data. That’s why the mannequin is appropriate for any kind of sequential knowledge.

If we take an instance within the textual content knowledge, think about now we have the sentence “I get up at 7 AM”, and now we have the phrase as enter. Within the feed-forward neural community, after we attain the phrase “up,” the mannequin would already overlook the phrases “I,” “wake,” and “up.” Nonetheless, RNNs would use each output for every phrase and loop them again so the mannequin wouldn’t overlook.

Within the NLP subject, RNNs are sometimes utilized in many textual purposes, reminiscent of textual content classification and era. It’s typically utilized in word-level purposes reminiscent of A part of Speech tagging, next-word era, and many others.

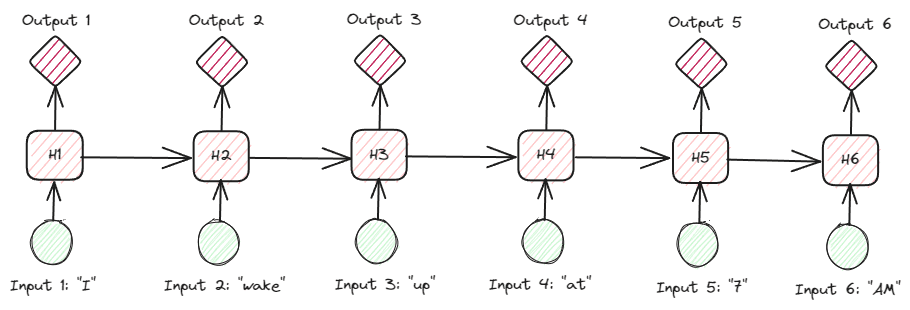

Wanting on the RNNs extra in-depth on the textual knowledge, there are numerous forms of RNNs. For instance, the beneath picture is the many-to-many varieties.

Picture by Creator

Wanting on the picture above, we will see that the output for every step (time-step in RNN) is processed one step at a time, and each iteration all the time considers the earlier data.

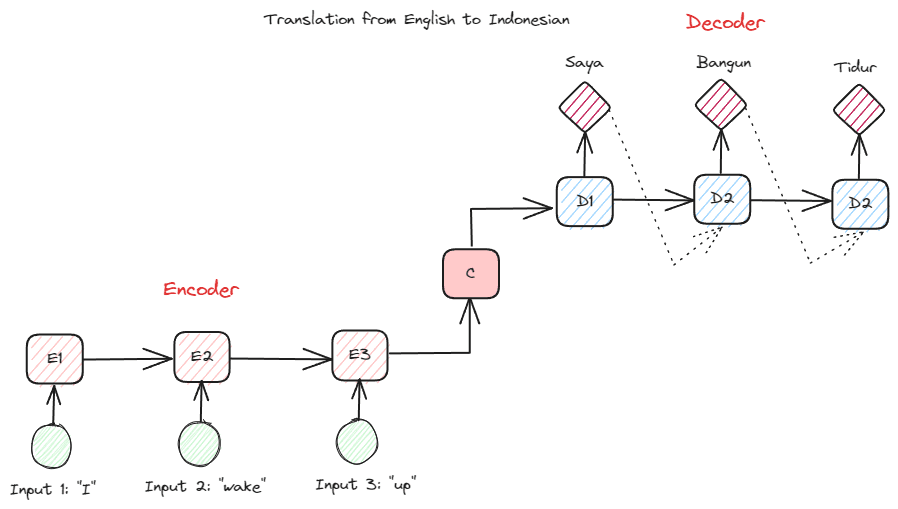

One other RNN kind utilized in many NLP purposes is the encoder-decoder kind (Sequence-to-Sequence). The construction is proven within the picture beneath.

Picture by Creator

This construction introduces two components which are used within the mannequin. The primary half is named Encoder, which is part that receives knowledge sequence and creates a brand new illustration based mostly on it. The illustration can be used within the second a part of the mannequin, which is the decoder. With this construction, the enter and output lengths don’t essentially have to be equal. The instance use case is a language translation, which regularly doesn’t have the identical size between the enter and output.

There are numerous advantages of utilizing RNNs to course of pure language knowledge, together with:

- RNN can be utilized to course of textual content enter with out size limitations.

- The mannequin shares the identical weights throughout on a regular basis steps, which permits the neural community to make use of the identical parameter in every step.

- Having the reminiscence of previous enter makes RNN appropriate for any sequential knowledge.

However, there are a number of disadvantages as effectively:

- RNN is vulnerable to each vanishing and exploding gradients. That is the place the gradient result’s the near-zero worth (vanishing), inflicting community weight to solely be up to date for a tiny quantity, or the gradient result’s so vital (exploding) that it assigns an unrealistic huge significance to the community.

- Very long time of coaching due to the sequential nature of the mannequin.

- Quick-term reminiscence implies that the mannequin begins to overlook the longer the mannequin is educated. There may be an extension of RNN referred to as LSTM to alleviate this drawback.

Transformers is an NLP mannequin structure that tries to unravel the sequence-to-sequence duties beforehand encountered within the RNNs. As talked about above, RNNs have issues with short-term reminiscence. The longer the enter, the extra outstanding the mannequin was in forgetting the data. That is the place the eye mechanism may assist remedy the issue.

The eye mechanism is launched within the paper by Bahdanau et al. (2014) to unravel the lengthy enter drawback, particularly with encoder-decoder kind of RNNs. I’d not clarify the eye mechanism intimately. Mainly, it’s a layer that enables the mannequin to deal with the important a part of the mannequin enter whereas having the output prediction. For instance, the phrase enter “Clock” would correlate extremely with “Jam” in Indonesian if the duty is for translation.

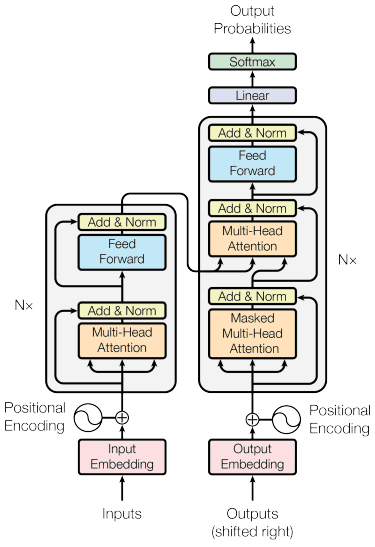

The transformers mannequin is launched by Vaswani et al. (2017). The structure is impressed by the encoder-decoder RNN and constructed with the eye mechanism in thoughts and doesn’t course of knowledge in sequential order. The general transformers mannequin is structured just like the picture beneath.

Transformers Structure (Vaswani et al. 2017)

Within the construction above, the transformers encode the info vector sequence into the phrase embedding with positional encoding in place whereas utilizing the decoding to rework knowledge into the unique type. With the eye mechanism in place, the encoding can given significance in accordance with the enter.

Transformers present few benefits in comparison with the opposite mannequin, together with:

- The parallelization course of will increase the coaching and inference pace.

- Able to processing longer enter, which gives a greater understanding of the context

There are nonetheless some disadvantages to the transformers mannequin:

- Excessive computational processing and demand.

- The eye mechanism may require the textual content to be cut up due to the size restrict it could possibly deal with.

- Context is perhaps misplaced if the cut up had been finished flawed.

BERT

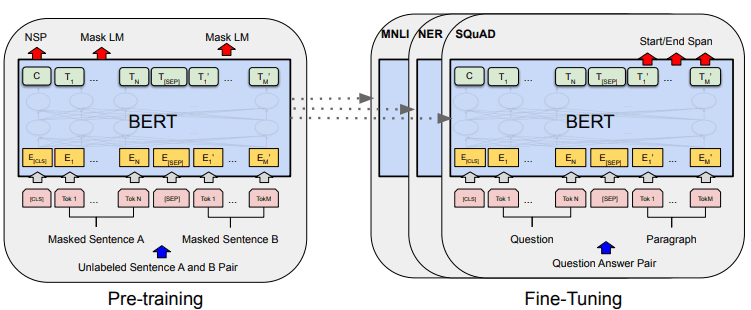

BERT, or Bidirectional Encoder Representations from Transformers, is a mannequin developed by Devlin et al. (2019) that includes two steps (pre-training and fine-tuning) to create the mannequin. If we evaluate, BERT is a stack of transformers encoder (BERT Base has 12 Layers whereas BERT Massive has 24 layers).

BERT’s general mannequin growth could be proven within the picture beneath.

BERT general procedures (Devlin et al. (2019)

Pre-training duties provoke the mannequin’s coaching on the identical time, and as soon as it’s finished, the mannequin could be fine-tuned for numerous downstream duties (question-answering, classification, and many others.).

What makes BERT particular is that it’s the first unsupervised bidirectional language mannequin that’s pre-trained on textual content knowledge. BERT was beforehand pre-trained on the whole Wikipedia and e book corpus, consisting of over 3000 million phrases.

BERT is taken into account bidirectional as a result of it didn’t learn knowledge enter sequentially (from left to proper or vice versa), however the transformer encoder learn the entire sequence concurrently.

In contrast to directional fashions, which learn the textual content enter sequentially (left-to-right or right-to-left), the Transformer encoder reads the whole sequence of phrases concurrently. That’s why the mannequin is taken into account bidirectional and permits the mannequin to know the entire context of the enter knowledge.

To attain bidirectional, BERT makes use of two strategies:

- Masks Language Mannequin (MLM) — Phrase masking approach. The approach would masks 15% of the enter phrases and attempt to predict this masked phrase based mostly on the non-masked phrase.

- Subsequent Sentence Prediction (NSP) — BERT tries to be taught the connection between sentences. The mannequin has pairs of sentences as the info enter and tries to foretell if the next sentence exists within the authentic doc.

There are a couple of benefits to utilizing BERT within the NLP subject, together with:

- BERT is straightforward to make use of for pre-trained numerous NLP downstream duties.

- Bidirectional makes BERT perceive the textual content context higher.

- It’s a well-liked mannequin that has a lot assist from the neighborhood

Though, there are nonetheless a couple of disadvantages, together with:

- Requires excessive computational energy and lengthy coaching time for some downstream process fine-tuning.

- The BERT mannequin may end in an enormous mannequin requiring a lot greater storage.

- It’s higher to make use of for complicated duties because the efficiency for easy duties isn’t a lot totally different than utilizing less complicated fashions.

NLP has change into extra outstanding lately, and far analysis has targeted on enhancing the purposes. On this article, we focus on three NLP strategies which are typically used:

- RNN

- Transformers

- BERT

Every of the strategies has its benefits and downsides, however general, we will see the mannequin evolving in a greater means.

Cornellius Yudha Wijaya is a knowledge science assistant supervisor and knowledge author. Whereas working full-time at Allianz Indonesia, he likes to share Python and Knowledge suggestions by way of social media and writing media.

Cornellius Yudha Wijaya is a knowledge science assistant supervisor and knowledge author. Whereas working full-time at Allianz Indonesia, he likes to share Python and Knowledge suggestions by way of social media and writing media.