Large Language Models (LLMs) like OpenAI’s GPT-3, Google’s BERT, and Meta’s LLaMA are revolutionizing various sectors with their ability to generate a wide variety of text, from marketing copy and data science scripts to poetry.

Even though ChatGPT’s intuitive interface has made its way onto most people’s devices today, there is still a vast landscape of untapped potential for using LLMs in diverse software integrations.

The main problem?

Most applications require more fluid and native communication with LLMs.

And this is precisely where LangChain kicks in!

If you are interested in Generative AI and LLMs, this tutorial is tailor-made for you.

So… let’s begin!

Just in case you have been living inside a cave and haven’t gotten any news lately, I’ll briefly explain Large Language Models or LLMs.

An LLM is a sophisticated artificial intelligence system built to mimic human-like textual understanding and generation. By training on enormous data sets, these models discern intricate patterns, grasp linguistic subtleties, and produce coherent outputs.

If you wonder how to interact with these AI-powered models, there are two main ways to do so:

- The most common and direct way is talking or chatting with the model. It involves crafting a prompt, sending it to the AI-powered model, and getting a text-based output as a response.

- Another method is converting text into numerical arrays. This process involves composing a prompt for the AI and receiving a numerical array in return, commonly known as an “embedding”. Embeddings have recently surged in popularity thanks to vector databases and semantic search.

And it is precisely these two main problems that LangChain tries to address. If you are interested in the main problems of interacting with LLMs, you can check this article here.

LangChain is an open-source framework built around LLMs. It brings to the table an arsenal of tools, components, and interfaces that streamline the architecture of LLM-driven applications.

With LangChain, engaging with language models, interlinking diverse components, and incorporating assets like APIs and databases become a breeze. This intuitive framework substantially simplifies the LLM application development journey.

The core idea of LangChain is that we can connect together different components or modules, also known as chains, to create more sophisticated LLM-powered solutions.

Here are some standout features of LangChain:

- Customizable prompt templates to standardize our interactions.

- Chain link components tailored for sophisticated use cases.

- Seamless integration with leading language models, including OpenAI’s GPTs and those on HuggingFace Hub.

- Modular components for a mix-and-match approach to tackle any specific problem or task.

Image by Author

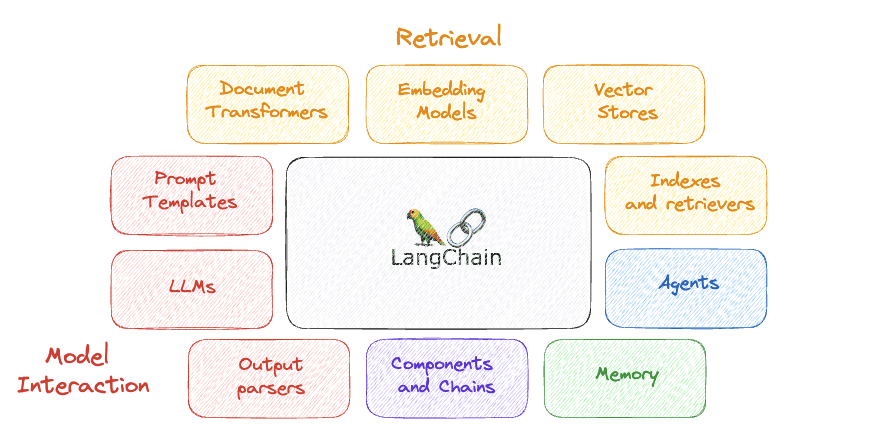

LangChain is distinguished by its focus on adaptability and modular design.

The main idea behind LangChain is breaking down the natural language processing sequence into individual parts, allowing developers to customize workflows based on their requirements.

Such versatility positions LangChain as a prime choice for building AI solutions in different situations and industries.

Some of its most important components are…

1. LLMs

LLMs are fundamental components that leverage vast amounts of training data to understand and generate human-like text. They are at the core of many operations within LangChain, providing the necessary language processing capabilities to analyze, interpret, and respond to text input.

Usage: Powering chatbots, generating human-like text for various applications, aiding in information retrieval, and performing other language processing tasks.

2. Prompt templates

Prompts are fundamental for interacting with LLMs, and when working on specific tasks, their structure tends to be similar. Prompt templates, which are preset prompts usable across chains, allow us to standardize prompts by filling in specific values. This enhances the adaptability and customization of any LLM.

Usage: Standardizing the process of interacting with LLMs.

3. Output Parsers

Output parsers are components that take the raw output from a preceding stage in the chain and convert it into a structured format. This structured data can then be used more effectively in subsequent stages or delivered as a response to the end user.

Usage: For instance, in a chatbot, an output parser might take the raw text response from a language model, extract key pieces of information, and format them into a structured reply.

4. Components and chains

In LangChain, each component acts as a module responsible for a particular task in the language processing sequence. These components can be connected to form chains for customized workflows.

Usage: Building sentiment detection and response generator chains in a specific chatbot.
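To make the idea of connecting components more concrete, here is a minimal plain-Python sketch of a two-step chain. It does not use LangChain’s own classes: `make_chain`, `detect_sentiment`, and `generate_response` are hypothetical names, and the keyword-based sentiment rule is only a stand-in for a real model call.

```python
from functools import reduce
from typing import Callable

# A "component" here is simply a function from text to text (an assumption
# made for illustration; real frameworks use richer interfaces).
Step = Callable[[str], str]


def make_chain(*steps: Step) -> Step:
    """Compose steps left to right: the output of one step feeds the next."""
    def run(text: str) -> str:
        return reduce(lambda value, step: step(value), steps, text)
    return run


def detect_sentiment(message: str) -> str:
    """Toy sentiment detector: tags the message with a label."""
    negative_words = {"bad", "broken", "angry", "terrible"}
    words = {w.strip(".,!?").lower() for w in message.split()}
    label = "negative" if words & negative_words else "positive"
    return f"{label}|{message}"


def generate_response(tagged: str) -> str:
    """Toy response generator: picks a reply based on the sentiment tag."""
    label, _, _message = tagged.partition("|")
    if label == "negative":
        return "Sorry to hear that. Could you tell me more?"
    return "Glad to hear it! Anything else I can help with?"


chatbot_chain = make_chain(detect_sentiment, generate_response)
print(chatbot_chain("My order arrived broken!"))
```

Because every step shares the same signature, steps can be swapped or reordered without touching the rest of the chain, which is the property the modular design is after.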

5. Memory

Memory in LangChain refers to a component that provides a storage and retrieval mechanism for information within a workflow. This component allows for the temporary or persistent storage of data that can be accessed and manipulated by other components during the interaction with the LLM.

Usage: This is useful in scenarios where data needs to be retained across different stages of processing, for example, storing conversation history in a chatbot to provide context-aware responses.
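As a rough illustration of the conversation-history case, the sketch below keeps the last few turns in a buffer and prepends them to the next prompt. `ConversationBuffer` and its methods are hypothetical names written in plain Python, not LangChain’s memory API.

```python
from collections import deque


class ConversationBuffer:
    """Keeps only the most recent turns of a conversation (temporary memory)."""

    def __init__(self, max_turns: int = 3):
        # deque with maxlen silently drops the oldest turn when full
        self._turns = deque(maxlen=max_turns)

    def save(self, user: str, assistant: str) -> None:
        self._turns.append((user, assistant))

    def as_context(self) -> str:
        lines = []
        for user, assistant in self._turns:
            lines.append(f"User: {user}")
            lines.append(f"Assistant: {assistant}")
        return "\n".join(lines)

    def build_prompt(self, new_message: str) -> str:
        """Prefix the stored history so the model can answer in context."""
        history = self.as_context()
        prefix = history + "\n" if history else ""
        return f"{prefix}User: {new_message}\nAssistant:"


memory = ConversationBuffer(max_turns=2)
memory.save("My name is Ana", "Nice to meet you, Ana.")
print(memory.build_prompt("What is my name?"))
```

Capping the number of turns is one simple way to keep the prompt within a model’s context limit; a persistent variant could write the same turns to a file or database instead.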

6. Agents

Agents are autonomous components capable of taking actions based on the data they process. They can interact with other components, external systems, or users to perform specific tasks within a LangChain workflow.

Usage: For instance, an agent might handle user interactions, process incoming requests, and coordinate the flow of data through the chain to generate appropriate responses.
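A very small sketch can show the shape of this decide-then-act loop. Everything below is hypothetical and written in plain Python: in a real agent the `choose_action` step would be delegated to an LLM, whereas here it is a simple rule that looks for a `tool: input` pattern.

```python
from typing import Callable, Dict, Tuple


def add_numbers(arg: str) -> str:
    """Tool: sums whitespace-separated integers."""
    return str(sum(int(x) for x in arg.split()))


def count_words(arg: str) -> str:
    """Tool: counts the words in the input."""
    return str(len(arg.split()))


# Registry of the tools the agent is allowed to call
TOOLS: Dict[str, Callable[[str], str]] = {
    "add": add_numbers,
    "count_words": count_words,
}


def choose_action(request: str) -> Tuple[str, str]:
    """Stand-in for the model's decision: expects 'tool: input'."""
    name, _, tool_input = request.partition(":")
    name = name.strip().lower()
    if name in TOOLS:
        return name, tool_input.strip()
    return "respond", request


def run_agent(request: str) -> str:
    """Decide on an action, execute it, and report the result."""
    action, arg = choose_action(request)
    if action == "respond":
        return f"No tool needed for: {arg}"
    return f"{action} -> {TOOLS[action](arg)}"


print(run_agent("add: 2 3"))
print(run_agent("hello there"))
```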

7. Indexes and Retrievers

Indexes and Retrievers play a crucial role in managing and accessing data efficiently. Indexes are data structures holding information and metadata about the documents the application makes available to the model. Retrievers, on the other hand, are mechanisms that interact with these indexes to fetch relevant data based on specified criteria, allowing the model to respond better by supplying relevant context.

Usage: They are instrumental in quickly fetching relevant data or documents from a large dataset, which is essential for tasks like information retrieval or question answering.
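The split between an index (the data structure) and a retriever (the lookup logic) can be sketched with a tiny keyword index. This is plain Python with hypothetical names (`KeywordIndex`, `retrieve`), and it ranks by simple word overlap rather than by anything LangChain ships.

```python
import re
from collections import defaultdict
from typing import Dict, List, Optional


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class KeywordIndex:
    """Inverted index: maps each token to the ids of documents containing it."""

    def __init__(self) -> None:
        self.documents: List[Dict] = []
        self.postings = defaultdict(set)

    def add(self, text: str, metadata: Optional[Dict] = None) -> int:
        doc_id = len(self.documents)
        self.documents.append({"text": text, "metadata": metadata or {}})
        for token in tokenize(text):
            self.postings[token].add(doc_id)
        return doc_id


def retrieve(index: KeywordIndex, query: str, k: int = 2) -> List[Dict]:
    """Return up to k documents sharing the most distinct tokens with the query."""
    scores = defaultdict(int)
    for token in set(tokenize(query)):
        for doc_id in index.postings.get(token, ()):
            scores[doc_id] += 1
    # Highest score first; ties broken by insertion order
    ranked = sorted(scores, key=lambda d: (-scores[d], d))
    return [index.documents[d] for d in ranked[:k]]


index = KeywordIndex()
index.add("LangChain connects LLMs with external data", {"source": "intro"})
index.add("A vector store enables semantic search", {"source": "stores"})
for doc in retrieve(index, "semantic search in a vector store"):
    print(doc["metadata"]["source"], "->", doc["text"])
```

The retrieved texts would then be pasted into the prompt as context, which is how a retriever helps the model answer questions about data it was never trained on.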

8. Document Transformers

In LangChain, Document Transformers are specialized components designed to process and transform documents in a way that makes them suitable for further analysis or processing. These transformations may include tasks such as text normalization, feature extraction, or the conversion of text into a different format.

Usage: Preparing text data for subsequent processing stages, such as analysis by machine learning models or indexing for efficient retrieval.
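Two of the most common transformations, whitespace normalization and splitting a long text into overlapping chunks, can be sketched in a few lines. The function names and the default `chunk_size` and `overlap` values are assumptions chosen for illustration, not LangChain defaults.

```python
from typing import List


def normalize(text: str) -> str:
    """Collapse runs of whitespace (including newlines) into single spaces."""
    return " ".join(text.split())


def split_text(text: str, chunk_size: int = 40, overlap: int = 10) -> List[str]:
    """Split normalized text into fixed-size chunks that overlap slightly."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    text = normalize(text)
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks


sample = "LangChain   splits long documents\ninto smaller pieces before indexing them."
for chunk in split_text(sample):
    print(repr(chunk))
```

The overlap means a sentence cut at a chunk boundary still appears whole in at least one neighbouring chunk, which tends to help retrieval later on.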

9. Embedding Models

Embedding models are used to convert text data into numerical vectors in a high-dimensional space. These models capture semantic relationships between words and phrases, enabling a machine-readable representation. They form the foundation for various downstream Natural Language Processing (NLP) tasks within the LangChain ecosystem.

Usage: Facilitating semantic searches, similarity comparisons, and other machine-learning tasks by providing a numerical representation of text.
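To show what “comparing texts as vectors” means in practice, here is a deliberately simple sketch. The `embed` function below is a toy word-count vector over a tiny hypothetical vocabulary, not a learned embedding model, so it only captures shared words rather than true semantics; the cosine similarity computation, however, is the same one used with real embeddings.

```python
import math
import re
from typing import List

# Hypothetical six-word vocabulary, chosen only for this example
VOCAB = ["cat", "dog", "pet", "car", "engine", "road"]


def embed(text: str) -> List[float]:
    """Toy embedding: one dimension per vocabulary word, valued by its count."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [float(tokens.count(word)) for word in VOCAB]


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


cats = embed("my cat is a pet")
dogs = embed("a dog is a pet")
cars = embed("the car engine")
print(cosine_similarity(cats, dogs))  # texts that share the word "pet"
print(cosine_similarity(cats, cars))  # texts with no vocabulary words in common
```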

10. Vector stores

A vector store is a type of database system that specializes in storing and searching information via embeddings, essentially analyzing numerical representations of text-like data. VectorStore serves as a storage facility for these embeddings.

Usage: Allowing efficient search based on semantic similarity.
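The core behaviour of a vector store, saving vectors alongside their text and returning the closest matches to a query vector, fits in a short in-memory sketch. `InMemoryVectorStore` is a hypothetical class, the vectors are hand-made two-dimensional examples, and the brute-force scan stands in for the approximate search structures real vector databases use.

```python
import math
from typing import List, Tuple


def _cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Stores (vector, text) pairs and searches them by cosine similarity."""

    def __init__(self) -> None:
        self._items: List[Tuple[List[float], str]] = []

    def add(self, vector: List[float], text: str) -> None:
        self._items.append((vector, text))

    def similarity_search(self, query_vector: List[float], k: int = 1) -> List[str]:
        # Brute force: score every stored vector, then keep the top k
        scored = [(_cosine(query_vector, vec), text) for vec, text in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


store = InMemoryVectorStore()
store.add([1.0, 0.0], "about cats")
store.add([0.0, 1.0], "about cars")
store.add([0.9, 0.1], "about kittens")
print(store.similarity_search([1.0, 0.0], k=2))
```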

Installing it using pip

The first thing we have to do is make sure that we have LangChain installed in our environment.
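The package is usually installed from the command line with `pip install langchain`. As a quick sanity check from Python, the sketch below reports whether it is present in the current environment; `installed_version` is a hypothetical helper built on the standard library’s `importlib.metadata`.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


version = installed_version("langchain")
if version is None:
    # Shell command to run in a terminal, not Python code
    print("LangChain not found; install it with: pip install langchain")
else:
    print(f"LangChain {version} is installed")
```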

Environment setup

Using LangChain typically means integrating with diverse model providers, data stores, and APIs, among other components. And as you already know, like any integration, supplying the relevant and correct API keys is crucial for LangChain’s operation.

Imagine we want to use our OpenAI API. We can easily accomplish this in two ways:

- Setting up the key as an environment variable, for instance from Python:

import os

os.environ['OPENAI_API_KEY'] = "..."

If you choose not to set up an environment variable, you have the option to provide the key directly through the openai_api_key named parameter when initiating the OpenAI LLM class:

- Directly setting up the key in the relevant class.

from langchain.llms import OpenAI

llm = OpenAI(openai_api_key="...")
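Both options can be combined in a small helper that prefers an explicitly passed key and falls back to the environment variable. This is only a sketch: `resolve_api_key` is a hypothetical function, not part of LangChain or the OpenAI client, and the error message is an assumption about what would be useful to show.

```python
import os
from typing import Optional


def resolve_api_key(explicit_key: Optional[str] = None,
                    env_var: str = "OPENAI_API_KEY") -> str:
    """Prefer a key passed in code; otherwise read it from the environment."""
    key = explicit_key or os.environ.get(env_var)
    if not key:
        raise RuntimeError(
            f"No API key found: pass one explicitly or set {env_var}"
        )
    return key


# Example usage (left commented out so the snippet runs without a real key):
# api_key = resolve_api_key()
```

Failing early with a clear message is usually easier to debug than an authentication error raised deep inside a model call. Keeping the key in the environment rather than in source code also avoids committing it to version control by accident.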

Switching between LLMs becomes easy

LangChain provides an LLM class that allows us to interact with different language model providers, such as OpenAI and Hugging Face.

It is quite easy to get started with any LLM, as the most basic and easiest-to-implement functionality of any LLM is just generating text.

However, asking the very same prompt to different LLMs at once is not so easy.

This is where LangChain kicks in…

Getting back to the simplest functionality of any LLM, we can easily build an application with LangChain that gets a string prompt and returns the output of our designated LLM.

We can simply use the same prompt and get the response of two different models within a few lines of code!

Impressive… right?
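The pattern of sending one prompt to several models depends only on the models sharing a common “string in, string out” interface. The sketch below illustrates that pattern with two stub functions in plain Python; `fake_openai`, `fake_huggingface`, and `ask_all` are hypothetical stand-ins that call no real provider and are not LangChain’s API.

```python
from typing import Callable, Dict

# Any callable that maps a prompt string to a completion string
TextModel = Callable[[str], str]


def fake_openai(prompt: str) -> str:
    """Stub standing in for a hosted model: just upper-cases the prompt."""
    return f"[openai-stub] {prompt.upper()}"


def fake_huggingface(prompt: str) -> str:
    """Stub standing in for a second provider: just reverses the prompt."""
    return f"[hf-stub] {prompt[::-1]}"


def ask_all(prompt: str, models: Dict[str, TextModel]) -> Dict[str, str]:
    """Send the same prompt to every model and collect the answers by name."""
    return {name: model(prompt) for name, model in models.items()}


answers = ask_all(
    "Name one use of LLMs.",
    {"openai": fake_openai, "huggingface": fake_huggingface},
)
for name, answer in answers.items():
    print(f"{name}: {answer}")
```

Swapping a stub for a real model wrapper should not require changing `ask_all`, as long as the wrapper keeps the same signature.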

Giving structure to our prompts with prompt templates

A common issue with Large Language Models (LLMs) is their inability to scale to complex applications. LangChain addresses this by offering a solution to streamline the process of creating prompts, which is often more intricate than just defining a task, as it requires outlining the AI’s persona and ensuring factual accuracy. A significant part of this involves repetitive boilerplate text. LangChain alleviates this by offering prompt templates, which auto-include boilerplate text in new prompts, thus simplifying prompt creation and ensuring consistency across different tasks.

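The mechanism behind a prompt template is ordinary string formatting with named placeholders. The following plain-Python sketch shows the idea; `SimplePromptTemplate` is a hypothetical class written for this example and is not LangChain’s own prompt template class, and the persona and task wording are made up.

```python
import string
from typing import List


class SimplePromptTemplate:
    """Holds boilerplate text with named {placeholders} to fill in later."""

    def __init__(self, template: str):
        self.template = template
        # Discover the placeholder names used in the template
        self.input_variables: List[str] = sorted(
            {name for _, name, _, _ in string.Formatter().parse(template) if name}
        )

    def format(self, **values: str) -> str:
        missing = [n for n in self.input_variables if n not in values]
        if missing:
            raise KeyError(f"Missing values for: {missing}")
        return self.template.format(**values)


template = SimplePromptTemplate(
    "You are a {persona}. Answer factually and concisely.\nTask: {task}"
)
print(template.input_variables)
print(template.format(persona="data scientist", task="Explain overfitting."))
```

The persona and accuracy instructions are written once in the template, so every new task reuses them and only the variable parts change.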

Getting structured responses with output parsers

In chat-based interactions, the model’s output is merely text. Yet, within software applications, having a structured output is preferable as it allows for further programming actions. For instance, when generating a dataset, receiving the response in a specific format such as CSV or JSON is desired. Assuming a prompt can be crafted to elicit a consistent and suitably formatted response from the AI, there is a need for tools to manage this output. LangChain caters to this requirement by offering output parser tools to handle and utilize the structured output effectively.

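As an illustration of what such a parser does, the sketch below turns two kinds of raw model text into Python data: a comma-separated list and a JSON object wrapped in extra chatter. Both functions are hypothetical plain-Python helpers rather than LangChain’s parser classes, and the sample responses are invented.

```python
import json
from typing import Dict, List


def parse_comma_separated(raw: str) -> List[str]:
    """Turn 'a, b ,c,' into ['a', 'b', 'c'], dropping empty items."""
    return [item.strip() for item in raw.split(",") if item.strip()]


def parse_json_object(raw: str) -> Dict:
    """Extract and decode the JSON object embedded in a model response."""
    start = raw.find("{")
    end = raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("No JSON object found in the response")
    return json.loads(raw[start:end + 1])


raw_list = "red, green ,blue,"
raw_json = 'Sure! Here it is: {"name": "Ada", "age": 36} Hope it helps.'
print(parse_comma_separated(raw_list))
print(parse_json_object(raw_json))
```

In practice the prompt would also instruct the model which format to use, so the parser and the prompt are usually designed together.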

You can go check the whole code on my GitHub.

Not long ago, the advanced capabilities of ChatGPT left us in awe. Yet, the technological environment is ever-changing, and now tools like LangChain are at our fingertips, allowing us to craft outstanding prototypes from our personal computers in just a few hours.

LangChain, a freely available Python platform, provides a means for users to develop applications anchored by LLMs (Large Language Models). This platform delivers a flexible interface to a variety of foundational models, streamlining prompt handling and acting as a nexus for elements like prompt templates, more LLMs, external information, and other resources via agents, as of the current documentation.

Consider chatbots, digital assistants, language translation tools, and sentiment analysis utilities; all these LLM-enabled applications come to life with LangChain. Developers utilize this platform to craft custom-tailored language model solutions addressing distinct requirements.

As the horizon of natural language processing expands, and its adoption deepens, the realm of its applications seems boundless.

Josep Ferrer is an analytics engineer from Barcelona. He graduated in physics engineering and is currently working in the Data Science field applied to human mobility. He is a part-time content creator focused on data science and technology. You can contact him on LinkedIn, Twitter or Medium.