It’s no secret that high-performing ML models must be supplied with large volumes of high-quality training data. Without data, an organization can hardly leverage AI and self-reflect to become more efficient and make better-informed decisions. Becoming a data-driven (and especially an AI-driven) company is known to be hard.

28% of companies that adopt AI cite lack of access to data as a reason behind failed deployments. – KDNuggets

Furthermore, existing data contains errors and biases. These are somewhat easier to mitigate with various processing techniques, but they still reduce the availability of trustworthy training data. It’s a serious issue, but the lack of training data is a much harder problem, and solving it might involve many initiatives depending on the organization’s maturity level.

Besides data availability and bias, there’s another aspect that is important to mention: data privacy. Both companies and individuals are consistently choosing to prevent data they own from being used for model training by third parties. The lack of transparency and regulation around this topic is well known and has already become a catalyst for lawmaking across the globe.

However, in the broad landscape of data-oriented technologies, there’s one that aims to solve the problems above from a somewhat unexpected angle: synthetic data. Synthetic data is produced by running simulations with various models and scenarios, or by applying sampling techniques to existing data sources, to create new data that is not sourced from the real world.

Synthetic data can replace or augment existing data and be used for training ML models, mitigating bias, and protecting sensitive or regulated data. It’s cheap and can be produced on demand, in large quantities, according to specified statistics.

Synthetic datasets keep the statistical properties of the original data used as a source: the techniques that generate the data learn a joint distribution, which can also be customized if necessary. As a result, synthetic datasets are similar to their real sources but don’t contain the original sensitive records. This is especially useful in highly regulated industries such as banking and healthcare, where strict internal procedures can mean it takes months for an employee to get access to sensitive data. Using synthetic data in this environment for testing, training AI models, detecting fraud, and other purposes simplifies the workflow and reduces development time.
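To make "learning a joint distribution" concrete, here is a minimal sketch, assuming all columns are numeric and roughly jointly Gaussian (a strong simplifying assumption; real generators use richer models). It estimates the mean vector and covariance matrix of a hypothetical two-column dataset, samples new rows from that fitted distribution, and checks that the correlation between the columns survives. The helper names `fit_gaussian` and `sample_synthetic` are illustrative only.

```python
import numpy as np

def fit_gaussian(real: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Estimate the joint distribution as a mean vector plus a covariance matrix."""
    return real.mean(axis=0), np.cov(real, rowvar=False)

def sample_synthetic(mean: np.ndarray, cov: np.ndarray, n_rows: int, seed: int = 0) -> np.ndarray:
    """Draw brand-new rows from the fitted distribution (no real row is copied)."""
    rng = np.random.default_rng(seed)
    return rng.multivariate_normal(mean, cov, size=n_rows)

# Hypothetical "real" data: two correlated columns, e.g. income and spending.
rng = np.random.default_rng(42)
income = rng.normal(50_000, 10_000, size=5_000)
spending = 0.3 * income + rng.normal(0, 2_000, size=5_000)
real = np.column_stack([income, spending])

mean, cov = fit_gaussian(real)
synthetic = sample_synthetic(mean, cov, n_rows=5_000, seed=1)

# The relationship between the columns is preserved, not just each column on its own.
real_corr = np.corrcoef(real, rowvar=False)[0, 1]
synth_corr = np.corrcoef(synthetic, rowvar=False)[0, 1]
print(f"real corr={real_corr:.3f}, synthetic corr={synth_corr:.3f}")
```

Matching the joint statistics is what lets a model trained on the synthetic rows learn the same relationships; it is not, on its own, a privacy guarantee.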

All of this also applies to training large language models, since they are trained mostly on public data (e.g., OpenAI’s ChatGPT was trained on Wikipedia, parts of a web index, and other public datasets). Going forward, however, we think synthetic data will be a real differentiator: the public data available for training models is limited (both physically and legally), and human-created data is expensive, especially if it requires experts.

Generating Synthetic Data

There are various methods of producing synthetic data. They can be roughly divided into three major categories, each with its advantages and disadvantages:

- Stochastic process modeling. Stochastic models are relatively simple to build and don’t require a lot of computing resources, and since modeling focuses on the statistical distribution, the row-level data contains no sensitive information. The trade-off is that such models reproduce only the statistics they are given and miss more complex relationships in the data. The simplest example of stochastic process modeling is generating a column of numbers from a few statistical parameters, such as the minimum, maximum, and average values, assuming the output follows some known distribution (e.g., uniform or Gaussian).

- Rule-based data generation. Rule-based systems improve on statistical modeling by including data generated according to rules defined by humans. Rules can vary in complexity, but high-quality data requires complex rules and tuning by human experts, which limits the scalability of the approach.

- Deep learning generative models. With deep learning generative models, it’s possible to train a model on real data and use that model to generate synthetic data. Deep learning models can capture more complex relationships and joint distributions, but at a higher complexity and compute cost.
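The first two categories can be sketched in a few lines; a deep generative model (such as a GAN or VAE) does not fit into a short example and is left out. The sketch below is illustrative, and both the distribution choice and the rules are assumptions, not a prescribed method: the stochastic part builds an `age` column from only a minimum, maximum, and mean by assuming a Gaussian with standard deviation `(maximum - minimum) / 6`, clipped to the allowed range, and the rule-based part derives a hypothetical `plan` column from age using hand-written thresholds.

```python
import numpy as np

def stochastic_column(minimum: float, maximum: float, mean: float, n: int, seed: int = 0) -> np.ndarray:
    """Sample a numeric column from summary statistics only.

    Assumption (illustrative choice): values are Gaussian around `mean` with
    standard deviation (maximum - minimum) / 6, clipped to [minimum, maximum].
    """
    rng = np.random.default_rng(seed)
    std = (maximum - minimum) / 6
    return np.clip(rng.normal(mean, std, size=n), minimum, maximum)

def rule_based_plan(age: float) -> str:
    """Hypothetical human-defined rule that assigns a pricing plan from age."""
    if age < 26:
        return "youth"
    if age >= 65:
        return "senior"
    return "standard"

# Stochastic step: only min/max/mean are known about the real column.
ages = stochastic_column(minimum=18, maximum=90, mean=45, n=1_000, seed=7)
# Rule-based step: derive a dependent column from expert-written rules.
plans = [rule_based_plan(a) for a in ages]
print(ages[:5].round(1), plans[:5])
```

Note how the limitations listed above show up even here: the stochastic column knows nothing beyond its three parameters, and every dependency between columns has to be written by hand as a rule.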

It’s also worth mentioning that current LLMs can be used to generate synthetic data. This requires little setup and can be very useful at a small scale (or for one-off user requests), since an LLM can produce both structured and unstructured data; at a larger scale, however, it may be more expensive than specialized methods. Let’s not forget that state-of-the-art models are prone to hallucinations, so the statistical properties of LLM-generated synthetic data should be checked before it is used in scenarios where the distribution matters.
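One way to check the statistical properties of a generated numeric column is to compare it with real data using the two-sample Kolmogorov-Smirnov (KS) statistic, the largest gap between the two empirical CDFs. The sketch below implements it in NumPy (SciPy users can reach for `scipy.stats.ks_2samp` instead). The LLM output is only simulated with a random generator here; in practice it would be values parsed from the model’s responses. The `threshold=0.1` acceptance rule in `distribution_matches` is a hypothetical choice, not a standard.

```python
import numpy as np

def ks_statistic(real, synthetic) -> float:
    """Two-sample KS statistic: max |F_real(x) - F_synthetic(x)| over all observed x."""
    real = np.sort(np.asarray(real, dtype=float))
    synthetic = np.sort(np.asarray(synthetic, dtype=float))
    grid = np.concatenate([real, synthetic])
    # Empirical CDF at each grid point = share of values <= that point.
    cdf_real = np.searchsorted(real, grid, side="right") / real.size
    cdf_synth = np.searchsorted(synthetic, grid, side="right") / synthetic.size
    return float(np.max(np.abs(cdf_real - cdf_synth)))

def distribution_matches(real, synthetic, threshold: float = 0.1) -> bool:
    """Hypothetical acceptance rule: reject a column whose KS statistic exceeds `threshold`."""
    return ks_statistic(real, synthetic) <= threshold

rng = np.random.default_rng(3)
real = rng.normal(0.0, 1.0, size=2_000)
# Simulated "LLM-generated" batches: one faithful, one whose mean has drifted.
llm_good = rng.normal(0.0, 1.0, size=2_000)
llm_drifted = rng.normal(0.8, 1.0, size=2_000)

print(ks_statistic(real, llm_good), distribution_matches(real, llm_good))
print(ks_statistic(real, llm_drifted), distribution_matches(real, llm_drifted))
```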

Model validation is an interesting illustration of how using synthetic data requires a change in the approach to ML model training.

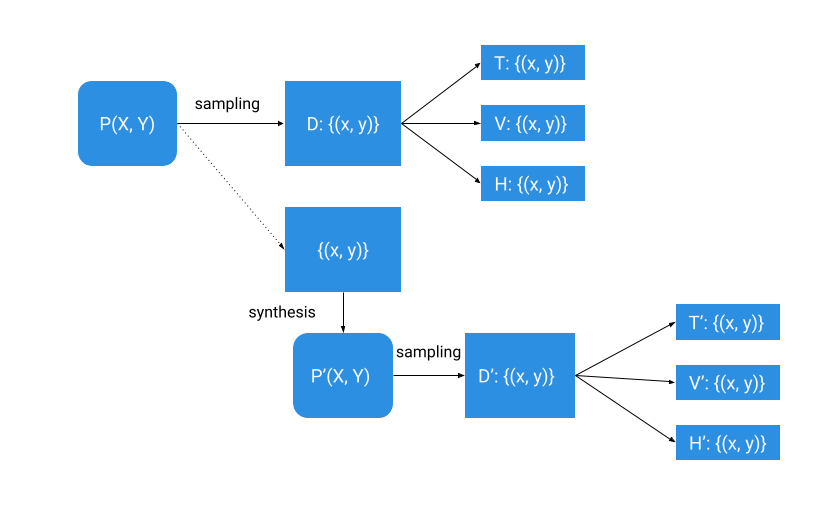

In traditional data modeling, we have a dataset (D): a set of observations drawn from some unknown real-world process (P) that we want to model. We divide that dataset into a training subset (T), a validation subset (V), and a holdout (H), and use them to train a model and estimate its accuracy.
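The split of D into T, V, and H can be sketched as a shuffle followed by two cut points. This is a minimal illustration; the 60/20/20 proportions and the `split_dataset` name are assumptions, not part of the workflow described above.

```python
import numpy as np

def split_dataset(X: np.ndarray, y: np.ndarray, train: float = 0.6, valid: float = 0.2, seed: int = 0):
    """Shuffle rows, then cut them into training (T), validation (V) and holdout (H) subsets."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    n_train, n_valid = round(train * len(X)), round(valid * len(X))
    t, v, h = np.split(idx, [n_train, n_train + n_valid])
    return (X[t], y[t]), (X[v], y[v]), (X[h], y[h])

# Toy dataset D: 100 observations with 2 features and a target.
X = np.arange(200, dtype=float).reshape(100, 2)
y = X.sum(axis=1)
(T_X, T_y), (V_X, V_y), (H_X, H_y) = split_dataset(X, y)
print(len(T_y), len(V_y), len(H_y))
```

Shuffling before cutting avoids accidentally putting, say, all of the most recent rows into the holdout; for time-ordered data a chronological split would be the better choice.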

To do synthetic data modeling, we synthesize a distribution P’ from our initial dataset and sample it to get the synthetic dataset (D’). We subdivide the synthetic dataset into a training subset (T’), a validation subset (V’), and a holdout (H’), just as we subdivided the real dataset. We want P’ to be as close to P as practically possible, because we want a model trained on synthetic data to be about as accurate as a model trained on real data (while, of course, still upholding all the guarantees synthetic data is meant to provide).

When possible, synthetic data modeling should also use the validation (V) and holdout (H) subsets of the original source data (D) for model evaluation, to ensure that the model trained on synthetic data (T’) performs well on real-world data.
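The whole workflow can be sketched end to end under simplifying assumptions: a hypothetical linear process stands in for P, a multivariate Gaussian over the joint vector (X, Y) stands in for the synthesizer that produces P’, and the model is ordinary least squares. One deliberate design choice differs from the text above: P’ is fit on the real training subset T only, so that the real V and H used for evaluation never influence the synthesizer. The sketch then compares a model trained on real T with one trained on synthetic T’, both evaluated on real data.

```python
import numpy as np

rng = np.random.default_rng(0)

def real_process(n):
    """Hypothetical stand-in for the unknown process P: y is linear in two features plus noise."""
    X = rng.normal(size=(n, 2))
    y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.5, size=n)
    return X, y

def split(X, y, train=0.6, valid=0.2):
    """Shuffle rows and split into training, validation and holdout subsets."""
    idx = rng.permutation(len(X))
    n_train, n_valid = round(train * len(X)), round(valid * len(X))
    parts = np.split(idx, [n_train, n_train + n_valid])
    return [(X[p], y[p]) for p in parts]

def fit_linear(X, y):
    """Ordinary least squares with an intercept column."""
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def rmse(coef, X, y):
    pred = np.column_stack([X, np.ones(len(X))]) @ coef
    return float(np.sqrt(np.mean((pred - y) ** 2)))

# Real dataset D drawn from P, split into T, V, H.
X, y = real_process(3_000)
(T_X, T_y), (V_X, V_y), (H_X, H_y) = split(X, y)

# Synthesize P' as a Gaussian over the joint vector (X, Y), fit on T only (assumption).
joint_T = np.column_stack([T_X, T_y])
mean, cov = joint_T.mean(axis=0), np.cov(joint_T, rowvar=False)
D_syn = rng.multivariate_normal(mean, cov, size=len(joint_T))

# Split D' into T', V', H' the same way (V' and H' are unused in this short sketch).
(Ts_X, Ts_y), _, _ = split(D_syn[:, :2], D_syn[:, 2])

coef_real = fit_linear(T_X, T_y)   # model trained on real T
coef_syn = fit_linear(Ts_X, Ts_y)  # model trained on synthetic T'

# Validate and test the synthetic-trained model on *real* V and H.
rmse_syn_valid = rmse(coef_syn, V_X, V_y)
rmse_real = rmse(coef_real, H_X, H_y)
rmse_syn = rmse(coef_syn, H_X, H_y)
print(f"real V RMSE (T'): {rmse_syn_valid:.3f}")
print(f"real H RMSE, trained on T: {rmse_real:.3f}; trained on T': {rmse_syn:.3f}")
```

Because the toy process really is linear and Gaussian, P’ matches P closely here and the two holdout scores end up near each other; with real, messier data, the gap between them is exactly what this evaluation on real V and H is meant to reveal.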

So, a good synthetic data solution should let us model the joint distribution P(X, Y) of the features X and the target Y as accurately as possible while upholding all privacy guarantees.
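Formal privacy guarantees (such as differential privacy) are beyond a short example, but one simple heuristic often used to sanity-check a synthetic dataset is the distance to the closest record (DCR): if a synthetic row sits at (almost) zero distance from a real row, the generator has likely memorized and leaked that record. The sketch below is a brute-force NumPy version with hypothetical helper names and a hypothetical tolerance; it is a smoke test, not a privacy guarantee. In practice, columns should be standardized first, and a nearest-neighbour index should replace the pairwise computation for large datasets.

```python
import numpy as np

def distance_to_closest_record(real: np.ndarray, synthetic: np.ndarray) -> np.ndarray:
    """Euclidean distance from each synthetic row to its nearest real row (brute force)."""
    diffs = synthetic[:, None, :] - real[None, :, :]
    return np.sqrt((diffs ** 2).sum(axis=2)).min(axis=1)

def copied_rows(real: np.ndarray, synthetic: np.ndarray, tol: float = 1e-9) -> int:
    """Number of synthetic rows that (almost) exactly reproduce a real record."""
    return int((distance_to_closest_record(real, synthetic) <= tol).sum())

rng = np.random.default_rng(5)
real = rng.normal(size=(500, 3))
# Synthetic rows sampled from a fitted distribution, not copied from the data.
synthetic = rng.multivariate_normal(real.mean(axis=0), np.cov(real, rowvar=False), size=500)
# A "leaky" generator that memorized and replayed 10 real records.
leaky = np.vstack([synthetic[:490], real[:10]])

print(copied_rows(real, synthetic), copied_rows(real, leaky))
```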

Although the broader use of synthetic data for model training requires changing and improving existing approaches, in our opinion it’s a promising technology for addressing current problems with data ownership and privacy. Used properly, it will lead to more accurate models that improve and automate decision-making while significantly reducing the risks associated with using private data.

About the author

Nick Volynets is a senior data engineer working with the office of the CTO, where he enjoys being at the heart of DataRobot innovation. He is interested in large-scale machine learning and passionate about AI and its impact.