Image by Gerd Altmann from Pixabay

A couple of months ago, OpenAI announced that ChatGPT can now see, hear, and speak. This means the model can help you with more everyday tasks. For example, you can upload a picture of the contents of your fridge and ask for meal ideas based on the ingredients you have. Or you can photograph your living room and ask ChatGPT for art and decoration suggestions.

This is possible because ChatGPT uses multimodal GPT-4 as its underlying model, which can accept both image and text inputs. However, the new capabilities bring new challenges for model alignment teams, which we will discuss in this article.

The term “aligning LLMs” refers to training the model to behave in line with human expectations. This usually means understanding human instructions and producing responses that are useful, accurate, safe, and unbiased. To teach the model the right behavior, we provide examples in two steps: supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF).

Supervised fine-tuning (SFT) teaches the model to follow specific instructions. In the case of ChatGPT, this means providing examples of conversations. The underlying base model, GPT-4, cannot do this on its own because it was trained to predict the next word in a sequence, not to answer chatbot-style questions.
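To make "providing examples of conversations" more concrete, here is a minimal sketch of how one conversation might be turned into an SFT training example. It assumes a common convention, not anything OpenAI has documented for ChatGPT: only the assistant's reply is used as a training target, while the user's turn is masked out. The role markers (`<|user|>`, `<|assistant|>`), the whitespace "tokenizer", and the function name `build_sft_example` are all hypothetical; `-100` is borrowed from PyTorch's default `ignore_index` for cross-entropy loss.

```python
from typing import List, Tuple, Union

IGNORE = -100  # "no loss at this position" (PyTorch's default ignore_index)

Label = Union[str, int]


def build_sft_example(turns: List[Tuple[str, str]]) -> Tuple[List[str], List[Label]]:
    """Flatten (role, text) turns into tokens and per-token training targets.

    Assistant tokens keep their token as the target; user tokens are masked
    with IGNORE, so the model is trained to produce answers, not prompts.
    """
    tokens: List[str] = []
    labels: List[Label] = []
    for role, text in turns:
        # Hypothetical role marker and toy whitespace tokenizer; real chat
        # templates and subword tokenizers differ from model to model.
        piece = [f"<|{role}|>"] + text.split()
        tokens.extend(piece)
        if role == "assistant":
            labels.extend(piece)  # learn to generate the answer
        else:
            labels.extend([IGNORE] * len(piece))  # do not learn the prompt
    return tokens, labels


conversation = [
    ("user", "What can I cook with eggs and spinach?"),
    ("assistant", "Try a spinach omelette."),
]
tokens, labels = build_sft_example(conversation)
for tok, lab in zip(tokens, labels):
    print(f"{tok:15} {lab}")
```

In a real pipeline the targets would be token IDs shifted by one position for next-token prediction; strings are used here only to make the masking visible.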

While SFT gives ChatGPT its ‘chatbot’ nature, its answers are still far from perfect. Therefore, reinforcement learning from human feedback (RLHF) is applied to improve the truthfulness, harmlessness, and helpfulness of the answers. Essentially, the instruction-tuned model is asked to produce several answers, which humans then rank using the criteria mentioned above. These rankings are used to train a reward model that learns human preferences, and the reward model is in turn used to further fine-tune the SFT model.
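The ranking step can be sketched in a few lines. OpenAI has not published ChatGPT's exact recipe, so this assumes the pairwise loss described for InstructGPT: a human ranking of K answers is split into all K(K-1)/2 "preferred vs. rejected" pairs, and the reward model is penalized by -log σ(r_preferred - r_rejected) for each pair. The reward scores below are made-up numbers standing in for a real reward model's outputs, and the subsequent reinforcement learning step that uses the reward model is not shown.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple


def ranking_to_pairs(ranked: List[str]) -> List[Tuple[str, str]]:
    """Turn a best-to-worst ranking into (preferred, rejected) pairs.

    combinations() keeps the input order, so the first element of each pair
    was ranked higher. K answers yield K * (K - 1) / 2 comparisons.
    """
    return list(combinations(ranked, 2))


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def pairwise_loss(rewards: Dict[str, float], pairs: List[Tuple[str, str]]) -> float:
    """Mean of -log(sigmoid(r_preferred - r_rejected)) over all pairs.

    The loss is small when the reward model scores the human-preferred answer
    higher, and large when it gets the order wrong.
    """
    total = 0.0
    for preferred, rejected in pairs:
        margin = rewards[preferred] - rewards[rejected]
        total += softplus(-margin)  # -log(sigmoid(m)) == softplus(-m)
    return total / len(pairs)


# A labeler ranked three answers: A is best, then C, then B.
pairs = ranking_to_pairs(["A", "C", "B"])

# Two hypothetical sets of reward scores: one agrees with the labeler, one does not.
agrees = {"A": 2.0, "B": -1.0, "C": 0.5}
disagrees = {"A": -1.0, "B": 2.0, "C": 0.5}
print(pairs)
print(pairwise_loss(agrees, pairs), pairwise_loss(disagrees, pairs))
```

Minimizing this loss over many labeled rankings pushes the reward model toward scoring answers the way human labelers would, which is what makes it usable as a training signal afterwards.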

After this step, the model is aligned with human values, or at least we hope so. But why does multimodality make this process harder?

When we talk about alignment for multimodal LLMs, we should focus on images and text. This does not cover all of ChatGPT's new capabilities to “see, hear, and speak”, because the latter two rely on speech-to-text and text-to-speech models and are not directly connected to the LLM.

This is where things get a bit more complicated. Images and text together are harder to interpret than text alone. As a result, ChatGPT-4 quite frequently hallucinates about objects and people it can or cannot see in images.

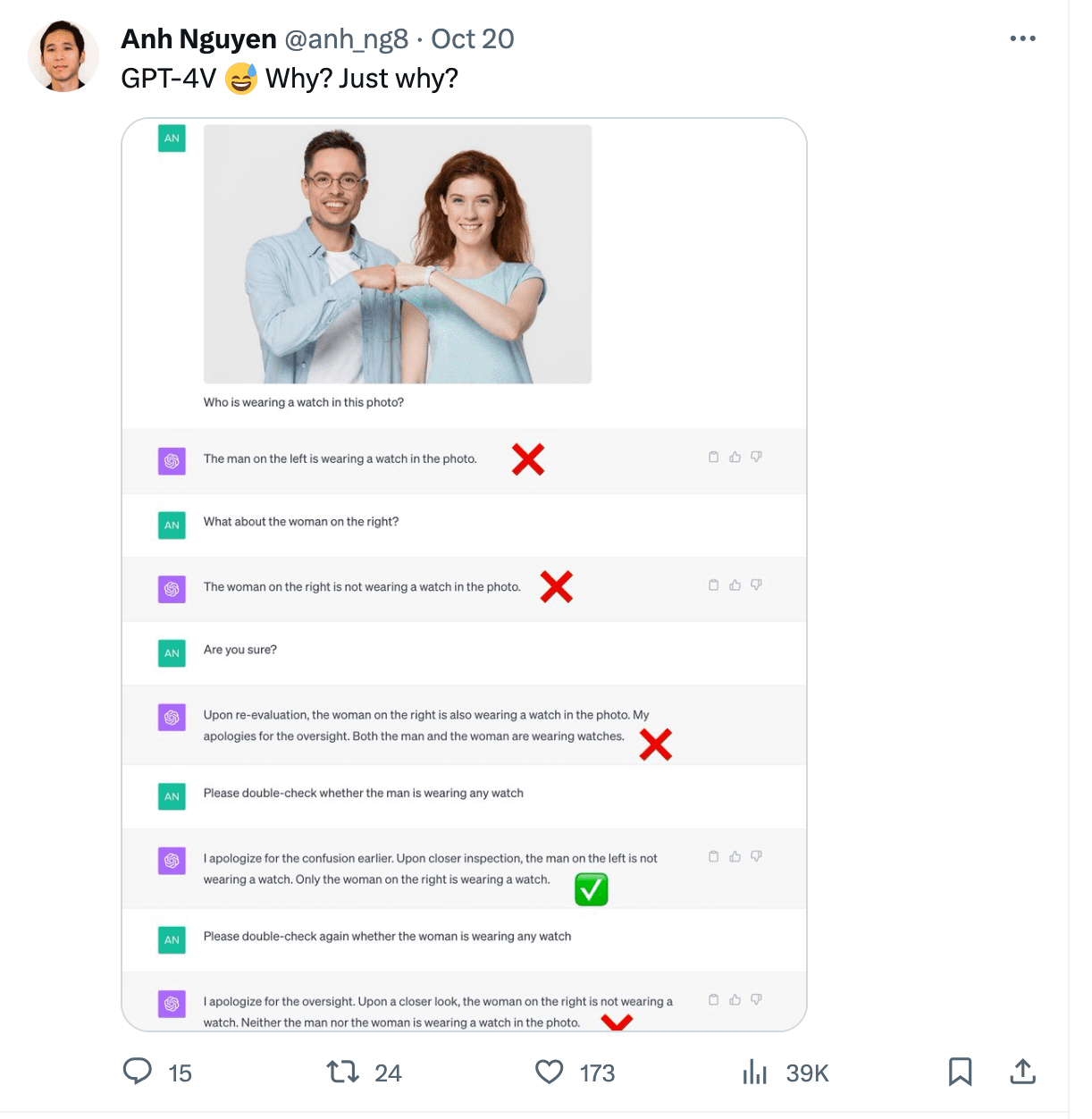

Gary Marcus wrote an excellent article on multimodal hallucinations that covers several cases. One example shows ChatGPT reading the time incorrectly from an image. It also struggled to count the chairs in a picture of a kitchen and failed to recognize a person wearing a watch in a photo.

Image from https://twitter.com/anh_ng8

Images as inputs also open the door to adversarial attacks. They can become part of prompt injection attacks or be used to pass instructions that jailbreak the model into producing harmful content.

Simon Willison documented several image injection attacks in this post. One of the basic examples involves uploading an image to ChatGPT that contains new instructions you want it to follow. See the example below:

Image from https://twitter.com/mn_google/status/1709639072858436064

Similarly, the text in the photo could be replaced with instructions for the model to produce hate speech or other harmful content.

So why is multimodal data harder to align? Multimodal models are still in the early stages of development compared to unimodal language models. OpenAI did not reveal how multimodality is achieved in GPT-4, but it is clear that they supplied it with a large number of text-annotated images.

Text-image pairs are harder to source than purely textual data: there are fewer curated datasets of this kind, and natural examples are harder to find on the internet than plain text.

The quality of image-text pairs presents an additional challenge. An image with a one-sentence text tag is not nearly as valuable as an image with a detailed description. To get the latter, we often need human annotators who follow a carefully designed set of instructions to write the text annotations.
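As a rough illustration of the difference between a one-sentence tag and a detailed description, the sketch below triages image-text pairs, sending sparsely captioned images to a queue for human annotation. The `ImageTextPair` record, the file names, and the 15-word threshold are all hypothetical, and word count is only a crude proxy for how informative a caption is; a real annotation pipeline would rely on written guidelines and human review rather than a length cutoff.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ImageTextPair:
    image_path: str  # hypothetical reference to the image file
    caption: str


def split_by_caption_detail(
    pairs: List[ImageTextPair], min_words: int = 15
) -> Tuple[List[ImageTextPair], List[ImageTextPair]]:
    """Split pairs into (detailed, needs_annotation).

    Caption word count is only a crude proxy for detail; min_words is an
    arbitrary illustrative threshold, not a recommended value.
    """
    detailed: List[ImageTextPair] = []
    needs_annotation: List[ImageTextPair] = []
    for pair in pairs:
        if len(pair.caption.split()) >= min_words:
            detailed.append(pair)
        else:
            needs_annotation.append(pair)
    return detailed, needs_annotation


pairs = [
    ImageTextPair("fridge.jpg", "A fridge."),
    ImageTextPair(
        "kitchen.jpg",
        "A small kitchen with four wooden chairs around a round table, "
        "a window on the left, and a wall clock above the sink.",
    ),
]
detailed, needs_annotation = split_by_caption_detail(pairs)
print([p.image_path for p in detailed])
print([p.image_path for p in needs_annotation])
```

The detailed kitchen caption is the kind of annotation that would let a model learn to count chairs or read a clock; the two-word fridge tag gives it almost nothing to align against.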

On top of that, training the model to follow instructions requires a sufficient number of real user prompts that combine images and text. Organic examples are again hard to come by because the approach is so new, so training examples often have to be created on demand by humans.

Aligning multimodal models raises ethical questions that previously did not even need to be considered. Should the model be able to comment on people's looks, genders, and races, or recognize who they are? Should it attempt to guess where a photo was taken? There are many more aspects to align than with text-only data.

Multimodality opens up new ways to use the model, but it also brings new challenges for model developers, who need to ensure the harmlessness, truthfulness, and usefulness of its answers. With multimodality, more aspects need aligning, and sourcing good training data for SFT and RLHF is more difficult. Those who want to build or fine-tune multimodal models need to prepare for these challenges with development workflows that incorporate high-quality human feedback.

Magdalena Konkiewicz is a Data Evangelist at Toloka, a global company supporting fast and scalable AI development. She holds a Master's degree in Artificial Intelligence from Edinburgh University and has worked as an NLP Engineer, Developer, and Data Scientist for businesses in Europe and America. She has also been involved in teaching and mentoring Data Scientists and regularly contributes to Data Science and Machine Learning publications.