For years, dedicated Star Trek fans have been using AI in an attempt to make a version of the acclaimed series Deep Space Nine that looks decent on modern TVs. It sounds a bit ridiculous, but I was surprised to find that it’s actually quite good: certainly good enough that media companies ought to pay attention (instead of just sending me copyright strikes).

I was inspired earlier this year to watch the show, a fan favorite that I occasionally saw on TV when it aired but never really thought twice about. After seeing Star Trek: The Next Generation’s revelatory remaster, I felt I ought to revisit its less galaxy-trotting, more ensemble-focused sibling. Perhaps, I thought, it was in the middle of an extensive remastering process as well. Nope!

Unfortunately, I was to find out that, although the TNG remaster was a huge triumph technically, the timing coincided with the rise of streaming services, meaning the expensive Blu-ray set sold poorly. The process cost more than $10 million, and if it didn’t pay off for the franchise’s most reliably popular series, there’s no way the powers that be will do it again for DS9, well-loved but far less bankable.

What this means is that if you want to watch DS9 (or Voyager for that matter), you have to watch it more or less at the quality in which it was broadcast back in the ’90s. Like TNG, it was shot on film but converted to video tape at approximately 480p resolution. And although the DVDs provided better image quality than the broadcasts (due to things like pulldown and color depth) they were still, ultimately, limited by the format in which the show was finished.

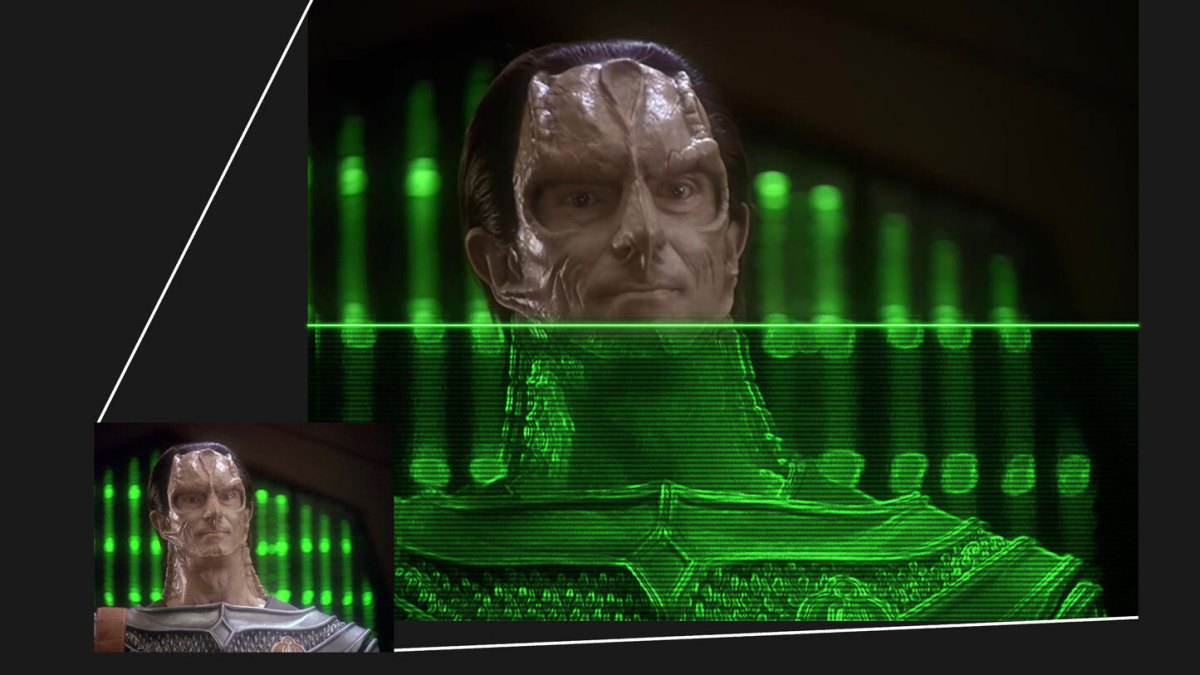

Not great, right? And this is about as good as it gets, especially early on. Image credits: Paramount

For TNG, they went back to the original negatives and basically re-edited the entire show, redoing effects and compositing, at great cost and effort. Perhaps that may happen in the 25th century for DS9, but at present there are no plans, and even if they announced it tomorrow, years would pass before it came out.

So: as a would-be DS9 watcher, spoiled by the gorgeous TNG rescan, and who dislikes the idea of a shabby NTSC broadcast image being shown on my lovely 4K screen, where does that leave me? As it turns out: not alone.

To boldly upscale…

For years, fans of shows and movies left behind by the HD train have worked surreptitiously to find and distribute better versions than what’s made officially available. The most famous example is the original Star Wars trilogy, which was irreversibly compromised by George Lucas during the official remaster process, leading fans to find alternative sources for certain scenes: laserdiscs, limited editions, promotional media, forgotten archival reels, and so on. These totally unofficial editions are a constant work in progress, and in recent years have begun to implement new AI-based tools as well.

These tools are largely about intelligent upscaling and denoising, the latter of which is of more concern in the Star Wars world, where some of the original film footage is incredibly grainy or degraded. But you might think that upscaling, making an image bigger, is a relatively simple process. Why get AI involved?

Certainly there are simple ways to upscale, or convert a video’s resolution to a higher one. This is done automatically when you have a 720p signal going to a 4K TV, for instance. The 1280×720 resolution image doesn’t appear all tiny in the center of the 3840×2160 display; it gets stretched by a factor of 3 in each direction so that it fits the screen. But while the image appears bigger, it’s still 720p in resolution and detail.
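To make the stretch concrete, here is a minimal sketch in plain Python. It is not how any particular TV does it; the function names are made up for illustration, and the "image" is just a list of rows of brightness values. It computes the scale factor and then repeats each pixel, which is the crudest possible upscale:

```python
def scale_factors(src, dst):
    """Return the (horizontal, vertical) stretch needed to fill dst from src."""
    (src_w, src_h), (dst_w, dst_h) = src, dst
    return dst_w / src_w, dst_h / src_h


def upscale_nearest(image, factor):
    """Integer upscale: repeat every pixel `factor` times in each direction."""
    out = []
    for row in image:
        stretched = [pixel for pixel in row for _ in range(factor)]
        # Emit `factor` independent copies of the stretched row.
        out.extend(list(stretched) for _ in range(factor))
    return out


print(scale_factors((1280, 720), (3840, 2160)))  # (3.0, 3.0)

tiny = [[0, 255],
        [255, 0]]
big = upscale_nearest(tiny, 3)
print(len(big[0]), len(big))                    # 6 6
print(sorted({p for row in big for p in row}))  # [0, 255]
```

The last line is the point: the bigger image contains exactly the same two values as the small one. More pixels, no new information.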

A simple, fast algorithm like bilinear filtering makes a smaller image palatable on a big screen even when it’s not an exact 2x or 3x stretch, and there are some scaling methods that work better with some media (for instance animation, or pixel art). But overall you might fairly conclude that there isn’t much to be gained by a more intensive process.
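For the curious, bilinear filtering fits in a couple dozen lines. The sketch below is a plain-Python illustration under some assumptions: a grayscale image stored as a list of rows, and a mapping that pins the corner pixels of the output to the corner pixels of the input (real scalers differ in how they align samples). Each output pixel is a weighted average of the four nearest source pixels:

```python
def upscale_bilinear(image, new_w, new_h):
    """Resize a grayscale image (list of rows) with bilinear interpolation."""
    old_h, old_w = len(image), len(image[0])
    out = []
    for y in range(new_h):
        # Map the output row back into source coordinates.
        sy = y * (old_h - 1) / (new_h - 1) if new_h > 1 else 0.0
        y0 = int(sy)
        y1 = min(y0 + 1, old_h - 1)
        fy = sy - y0
        row = []
        for x in range(new_w):
            sx = x * (old_w - 1) / (new_w - 1) if new_w > 1 else 0.0
            x0 = int(sx)
            x1 = min(x0 + 1, old_w - 1)
            fx = sx - x0
            # Blend horizontally on the two nearest rows, then vertically.
            top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
            bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


edge = [[0, 100]]  # a hard edge: dark pixel next to a bright one
print(upscale_bilinear(edge, 5, 1))  # [[0.0, 25.0, 50.0, 75.0, 100.0]]
```

Note what happened to the hard edge: it became a smooth ramp. That is why bilinear scaling looks soft rather than blocky, and also why it never looks sharper than the source.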

And that’s true to an extent, until you start down the nearly bottomless rabbit hole of creating an improved upscaling process that actually adds detail. But how can you “add” detail that the image doesn’t already contain? Well, it does contain it, or rather, imply it.

Here’s a very simple example. Imagine an old TV showing an image of a green circle on a background that fades from blue to red (I used this CRT filter for a basic mockup).

You can see it’s a circle, of course, but if you were to look closely it’s actually quite fuzzy where the circle and background meet, right, and stepped in the color gradient? It’s limited by the resolution and by the video codec and broadcast method, not to mention the sub-pixel layout and phosphors of an old TV.

But if I asked you to recreate that image in high resolution and color, you could actually do so with better quality than you’d ever seen it, crisper and with smoother colors. How? Because there’s more information implicit in the image than simply what you see. If you’re reasonably sure what was there before those details were lost when it was encoded, you can put them back, like so:

There’s a lot more detail carried in the image that just isn’t clearly visible, so really, we aren’t adding but recovering it. In this example I’ve made the change extreme for effect (it’s rather jarring, in fact), but in photographic imagery it’s usually much less stark.

Intelligent embiggening

The above is a very simple example of recovering detail, and it’s actually something that’s been done systematically for years in restoration efforts across numerous fields, digital and analog. But while you can see it’s possible to create an image with more apparent detail than the original, you also see that it’s only possible because of a certain level of understanding or intelligence about that image. A simple mathematical formula can’t do it. Fortunately, we are well beyond the days when a simple mathematical formula is our only means to improve image quality.

From open source tools to branded ones from Adobe and Nvidia, upscaling software has become much more mainstream as graphics cards capable of doing the complex calculations it requires have proliferated. The need to gracefully upgrade a clip or screenshot from low resolution to high is commonplace these days across dozens of industries and contexts.

Video effects suites now incorporate complex image analysis and context-sensitive algorithms, so that for instance skin or hair is treated differently than the surface of water or the hull of a starship. Each parameter and algorithm can be adjusted and tweaked individually depending on the user’s need or the imagery being upscaled. Among the most used options is Topaz, a suite of video processing tools that employ machine learning techniques.

Image credits: Topaz AI

The trouble with these tools is twofold. First, the intelligence only goes so far: settings that might be perfect for a scene in space are totally unsuitable for an interior scene, or a jungle or boxing match. In fact even multiple shots within one scene may require different approaches: different angles, features, hair types, lighting. Finding and locking in those Goldilocks settings is a lot of work.

Second, these algorithms aren’t cheap or (especially when it comes to open source tools) easy. You don’t just pay for a Topaz license; you have to run it on something, and every image you put through it uses a non-trivial amount of computing power. Calculating the various parameters for a single frame might take a few seconds, and when you consider there are 30 frames per second for 45 minutes per episode, suddenly you’re running your $1,000 GPU at its limit for hours and hours at a time, perhaps to just throw away the results when you find a better combination of settings a little later. Or maybe you pay for calculating in the cloud, and now your hobby has another monthly fee.
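The arithmetic is worth spelling out. The sketch below uses the figures above (30 frames per second, 45 minutes per episode) plus one assumption of mine: three seconds of processing per frame, a stand-in for "a few seconds" rather than a measured number.

```python
def frames_per_episode(fps=30, minutes=45):
    """Total frames in one episode at the given frame rate and runtime."""
    return fps * minutes * 60


def gpu_hours(frames, seconds_per_frame):
    """Hours of processing for one pass over every frame."""
    return frames * seconds_per_frame / 3600


frames = frames_per_episode()
print(frames)                # 81000
print(gpu_hours(frames, 3))  # 67.5 (assuming 3 seconds per frame)
```

That is nearly three days of continuous processing for a single pass over a single episode, before you change a setting and start again.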

Fortunately, there are people like Joel Hruska, for whom this painstaking, costly process is a passion project.

“I tried to watch the show on Netflix,” he told me in an interview. “It was abominable.”

Like me and many (but not that many) others, he eagerly anticipated an official remaster of this show, the way Star Wars fans expected a comprehensive remaster of the original Star Wars trilogy theatrical cut. Neither community got what they wanted.

“I’ve been waiting 10 years for Paramount to do it, and they haven’t,” he said. So he joined with the other, increasingly well equipped fans who were taking matters into their own hands.

Time, terabytes, and taste

Hruska has documented his work in a series of posts on ExtremeTech, and is always careful to explain that he is doing this for his own satisfaction and not to make money or release publicly. Indeed, it’s hard to imagine even a professional VFX artist going to the lengths Hruska has to explore the capabilities of AI upscaling and applying it to this show in particular.

“This isn’t a boast, but I’m not going to lie,” he began. “I’ve worked on this sometimes for 40-60 hours a week. I’ve encoded the episode ‘Sacrifice of Angels’ over 9,000 times. I did 120 Handbrake encodes; I tested every single adjustable parameter to see what the results would be. I’ve had to dedicate 3.5 terabytes to individual episodes, just for the intermediate files. I’ve brute-forced this to an enormous degree… and I’ve failed so many times.”

He showed me one episode he’d encoded that truly looked like it had been properly remastered by a team of experts: not to the point where you think it was shot in 4K and HDR, but just so you aren’t constantly thinking “my god, did TV really look like this?”

“I can create an episode of DS9 that looks like it was filmed in early 720p. If you watch it from 7-8 feet back, it looks pretty good. But it has been a long and winding road to improvement,” he admitted. The episode he shared was “a compilation of 30 different upscales from 4 different versions of the video.”

Image credits: Joel Hruska/Paramount

Sounds over the top, yes. But it is also an interesting demonstration of the capabilities and limitations of AI upscaling. The intelligence it has is very small in scale, more concerned with pixels and contours and gradients than the far more subjective qualities of what looks “good” or “natural.” And just like tweaking a photo one way might bring out someone’s eyes but blow out their skin, and another way vice versa, an iterative and multi-layered approach is needed.

The process, then, is far less automated than you might expect: it’s a matter of taste, familiarity with the tech, and serendipity. In other words, it’s an art.

“The more I’ve done, the more I’ve discovered that you can pull detail out of unexpected places,” he said. “You take these different encodes and blend them together, you draw detail out in different ways. One is for sharpness and clarity, the next is for healing some damage, but when you put them on top of each other, what you get is a distinctive version of the original video that emphasizes certain aspects and regresses any damage you did.”
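The simplest way to picture "putting encodes on top of each other" is a weighted average of the same frame from different passes. The sketch below is only that, an illustration of the idea: it is not Hruska’s actual workflow, the function name and weights are invented, and a real pipeline would work in a video editor or compositing tool rather than on Python lists.

```python
def blend_frames(frames, weights):
    """Weighted per-pixel average of several versions of the same frame.

    `frames` is a list of same-sized grayscale images (lists of rows);
    `weights` says how much each version contributes.
    """
    total = sum(weights)
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [
            sum(frame[y][x] * w for frame, w in zip(frames, weights)) / total
            for x in range(width)
        ]
        for y in range(height)
    ]


sharp_pass = [[10, 200]]   # hypothetical: tuned for sharpness, harsher contrast
healed_pass = [[30, 180]]  # hypothetical: tuned to repair damage, softer

# Lean mostly on the sharp pass, with a little of the healed one mixed in.
print(blend_frames([sharp_pass, healed_pass], [3, 1]))  # [[15.0, 195.0]]
```

Each pass pulls the result in its own direction, and the weights decide which one wins where. Choosing them is the part that takes taste.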

“You’re not supposed to run video through Topaz 17 times; it’s frowned on. But it works! A lot of the old rulebook doesn’t apply,” he said. “If you try to go the simplest route, you’ll get a playable video but it will have motion errors [i.e. video artifacts]. How much does that bother you? Some people don’t give a shit! But I’m doing this for people like me.”

Like so many passion projects, the audience is limited. “I wish I could release my work, I really do,” Hruska admitted. “But it would paint a target on my back.” For now it’s for him and fellow Trek fans to enjoy in, if not secrecy, at least plausible deniability.

Real time with Odo

Anyone can see that AI-powered tools and services are trending toward accessibility. The kind of image analysis that Google and Apple once had to do in the cloud can now be done on your phone. Voice synthesis can be done locally as well, and soon we may have ChatGPT-esque conversational AI that doesn’t need to phone home. What fun that will be!

This is enabled by several factors, one of which is more efficient dedicated chips. GPUs have done the job well but were originally designed for something else. Now, small chips are being built from the ground up to perform the kind of math at the heart of many machine learning models, and they are increasingly found in phones, TVs, laptops, you name it.

Real-time intelligent image upscaling is neither simple nor easy to do properly, but it is clear to just about everyone in the industry that it’s at least part of the future of digital content.

Imagine the bandwidth savings if Netflix could send a 720p signal that looked 95% as good as a 4K one when your TV upscales it, running the special Netflix algorithms. (In fact Netflix already does something like this, though that’s a story for another time.)

Imagine if the latest game always ran at 144 frames per second in 4K, because it’s actually rendered at a lower resolution and intelligently upscaled roughly every 7 milliseconds. (That’s what Nvidia envisions with DLSS and other processes its latest cards enable.)
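The time budget follows directly from the frame rate: at 144 frames per second, each frame gets 1/144 of a second, which is a little under 7 milliseconds. The sketch below works that out, along with how many pixels the upscaler has to fill in if the game renders internally at 1080p (that internal resolution is my assumption for illustration; actual render resolutions vary by game and setting).

```python
def frame_budget_ms(fps):
    """Milliseconds available to produce each frame at a given frame rate."""
    return 1000 / fps


def pixel_ratio(target, rendered):
    """How many output pixels exist for every pixel actually rendered."""
    (tw, th), (rw, rh) = target, rendered
    return (tw * th) / (rw * rh)


print(round(frame_budget_ms(144), 2))              # 6.94
print(pixel_ratio((3840, 2160), (1920, 1080)))     # 4.0
```

So under that assumption the upscaler has under 7 milliseconds to invent three out of every four pixels on screen, 144 times a second.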

Today, the power to do this is still a little beyond the average laptop or tablet, and even powerful GPUs doing real-time upscaling can produce artifacts and errors due to their more one-size-fits-all algorithms.

Copyright strike me down, and… (you know the rest)

The approach of rolling your own upscaled DS9 (or for that matter Babylon 5, or some other show or film that never got the dignity of a high-definition remaster) is certainly the legal one, or the closest thing to it. But the simple truth is that there is always someone with more time and expertise, who will do the job better, and sometimes they’ll even upload the final product to a torrent site.

That’s what actually set me on the path to learning all this. Funnily enough, the simplest way to find out if something is available to watch in high quality is often to look at piracy sites, which in many ways are refreshingly straightforward. Searching for a title, year, and quality level (like 1080p or 4K) quickly shows whether it has had a recent, decent release. Whether you then go buy the Blu-ray (increasingly a good investment) or take other measures is between you, god, and your internet provider.

A representative scene, imperfect but better than the original.

I had originally searched for “Deep Space Nine 720p,” in my innocence, and saw this AI upscaled version listed. I thought “there’s no way they can put enough lipstick on that pig to…” then I abandoned my metaphor because the download had finished, and I was watching it and making a “not bad” face.

The version I got clocks in at around 400 megabytes per 45-minute episode, low by most standards, and while there are clearly smoothing issues, badly interpolated details, and other problems, it was still worlds ahead of the “official” version. As the quality of the source material improves in later seasons, the upscaling improves as well. Watching it let me enjoy the show without thinking too much about its format limitations; it looked more or less as I (wrongly) remember it looking.
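To put that file size in perspective, it helps to convert it to an average bitrate. The sketch below assumes a megabyte is one million bytes and ignores the distinction between video and audio tracks, so treat the result as a rough overall figure.

```python
def average_bitrate_mbps(size_megabytes, minutes):
    """Average bitrate in megabits per second for a file of the given size."""
    megabits = size_megabytes * 8
    seconds = minutes * 60
    return megabits / seconds


print(round(average_bitrate_mbps(400, 45), 2))  # 1.19
```

A bit over one megabit per second for the whole file is very little to spend on video, which goes some way toward explaining the smoothing.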

There are problems, yes. Sometimes detail is lost instead of gained, such as what you see in the header image, the green bokeh being smeared into a glowing line. But in motion and on the whole it’s an improvement, especially in the reduction of digital noise and poorly defined edges. I happily binged five or six episodes, pleasantly surprised.

A day or two later I got an email from my internet provider saying I’d received a DMCA complaint, a copyright strike. In case you were wondering why this post doesn’t have more screenshots.

Now, I would argue that what I did was technically illegal, but not wrong. As a fair-weather Amazon Prime subscriber with a free Paramount+ trial, I had access to those episodes, but in poor quality. Why shouldn’t I, as a matter of fair use, opt for a fan-enhanced version of the content I’m already watching legally? For that matter, why not shows that have had botched remasters, like Buffy the Vampire Slayer (which also can be found upscaled) or shows unavailable due to licensing shenanigans?

Okay, it wouldn’t hold up in court. But I’m hoping history will be my judge, not some ignorant gavel-jockey who thinks AI is what the Fonz said after slapping the jukebox.

The real question, however, is why Paramount, or CBS, or anyone else sitting on properties like DS9 hasn’t embraced the potential of intelligent upscaling. It’s gone from highly technical oddity to easily leveraged option, something a handful of smart people could do in a week or two. If some anonymous fan can create the value I experienced with ease (or relative ease, since no doubt a fair amount of work went into it), why not professionals?

“I’m friends with VFX people, and there are folks that worked on the show that want nothing more than to remaster it. But not all the people at Paramount understand the value of old Trek,” Hruska said.

They should be the ones to do it; they have the knowledge and the expertise. If the studio cared, the way they cared about TNG, they could make something better than anything I could make. But if they don’t care, then the community will always do better.