Inflection AI, the creators of the PI AI Personal Assistant, announced the creation of a powerful new large language model called Inflection-2 that outperforms Google’s PaLM language model across a range of benchmarking datasets.

Pi Personal Assistant

Pi is a personal assistant that’s available on the web and as an app for Android and Apple mobile devices.

It can also be added as a contact in WhatsApp and accessed via Facebook and Instagram direct message.

Pi is designed to be a chatbot assistant that can answer questions and research anything from products to science, and it can also function as a discussion partner that dispenses advice.

The new LLM will be incorporated into PI AI soon after undergoing safety testing.

Inflection-2 Large Language Model

Inflection-2 is a large language model that outperforms Google’s PaLM 2 Large model, which is currently Google’s most sophisticated model.

Inflection-2 was tested across multiple benchmarks and compared against PaLM 2, Meta’s LLaMA 2, and other large language models (LLMs).

For example, Google’s PaLM 2 narrowly edged past Inflection-2 on the Natural Questions corpus, a dataset of real-world questions.

PaLM 2 scored 37.5 and Inflection-2 scored 37.3, with both outperforming LLaMA 2, which scored 33.0.
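The article doesn't say how the Natural Questions numbers were computed. Open-domain question-answering benchmarks of this kind are commonly scored by exact match: a model's short answer counts only if it equals one of the reference answers after light normalization. The Python below is a minimal sketch of that scheme, assuming SQuAD-style normalization (lowercasing, stripping punctuation and the articles "a", "an", "the"). The function names and example answers are hypothetical, not taken from Inflection AI or the dataset.

```python
import re
import string


def normalize_answer(text: str) -> str:
    """Assumed SQuAD-style normalization: lowercase, drop punctuation and articles, collapse spaces."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold_answers: list) -> bool:
    """A prediction is correct if it matches any reference answer after normalization."""
    pred = normalize_answer(prediction)
    return any(pred == normalize_answer(gold) for gold in gold_answers)


def em_score(predictions: list, references: list) -> float:
    """Percentage of questions answered exactly right (the form a score like 37.3 takes)."""
    hits = sum(exact_match(p, refs) for p, refs in zip(predictions, references))
    return 100.0 * hits / len(predictions)


# Hypothetical examples, not drawn from Natural Questions itself.
preds = ["The Eiffel Tower", "1969", "blue whale"]
refs = [["Eiffel Tower"], ["July 1969"], ["the blue whale"]]
print(round(em_score(preds, refs), 2))
```

Here "1969" fails against "July 1969" even though it is arguably right, which is why exact-match scores on open-ended questions tend to look low in absolute terms.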

MMLU – Massive Multitask Language Understanding

Inflection AI published the benchmarking scores on the MMLU dataset, which is designed to test LLMs in a way that’s similar to testing humans.

The test covers 57 subjects in STEM (Science, Technology, Engineering, and Math) and a wide range of other subjects such as law.

The purpose of the dataset is to identify where an LLM is strongest and where it is weak.

According to the research paper for this benchmarking dataset:

“We propose a new test to measure a text model’s multitask accuracy.

The test covers 57 tasks including elementary mathematics, US history, computer science, law, and more.

To attain high accuracy on this test, models must possess extensive world knowledge and problem solving ability.

We find that while most recent models have near random-chance accuracy, the very largest GPT-3 model improves over random chance by almost 20 percentage points on average.

However, on every one of the 57 tasks, the best models still need substantial improvements before they can reach expert-level accuracy.

Models also have lopsided performance and frequently do not know when they are wrong.

Worse, they still have near-random accuracy on some socially important subjects such as morality and law.

By comprehensively evaluating the breadth and depth of a model’s academic and professional understanding, our test can be used to analyze models across many tasks and to identify important shortcomings.”
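Every MMLU question has four answer choices, so "random chance" in the quote means roughly 25% accuracy. The sketch below, using hypothetical toy answers rather than real MMLU data, shows how per-subject accuracy and its margin over chance could be computed. It also illustrates the "lopsided performance" the authors describe: one subject lands well above chance while another sits right at it. The helper names are illustrative, not part of any official evaluation code.

```python
# Each MMLU question offers four choices (A-D), so uniform guessing averages 25%.
CHOICES = ("A", "B", "C", "D")
RANDOM_CHANCE = 100.0 / len(CHOICES)


def accuracy(predicted: list, correct: list) -> float:
    """Percentage of questions where the model's chosen letter matches the answer key."""
    if len(predicted) != len(correct):
        raise ValueError("prediction and answer lists must be the same length")
    right = sum(p == c for p, c in zip(predicted, correct))
    return 100.0 * right / len(correct)


def per_subject(results: dict) -> dict:
    """results maps subject name -> (predicted letters, answer-key letters)."""
    return {subject: accuracy(p, c) for subject, (p, c) in results.items()}


# Hypothetical toy results, not real MMLU data.
toy = {
    "elementary_mathematics": (["A", "C", "D", "B"], ["A", "C", "D", "A"]),
    "moral_scenarios": (["B", "B", "A", "C"], ["D", "B", "C", "A"]),
}
scores = per_subject(toy)
for subject, score in scores.items():
    gain = score - RANDOM_CHANCE
    print(f"{subject}: {score:.1f}% ({gain:+.1f} points vs. chance)")
```

Running it prints 75.0% (+50.0 points vs. chance) for the first toy subject and 25.0% (+0.0 points vs. chance) for the second.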

These are the MMLU benchmarking dataset scores in order from weakest to strongest:

- LLaMA 2 70B: 68.9

- GPT-3.5: 70.0

- Grok-1: 73.0

- PaLM-2 Large: 78.3

- Claude-2 (CoT): 78.5

- Inflection-2: 79.6

- GPT-4: 86.4

As can be seen above, only GPT-4 scores higher than Inflection-2.

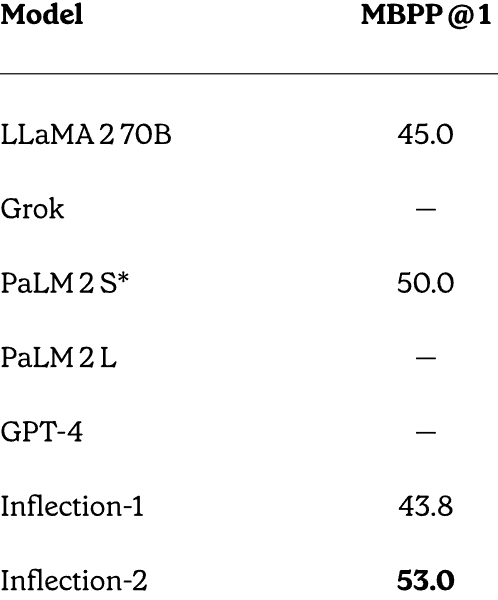

MBPP – Code and Math Reasoning Performance

Inflection AI ran a head-to-head comparison of GPT-4, PaLM 2, LLaMA, and Inflection-2 on math and code reasoning tests. Inflection-2 did surprisingly well, considering that it was not specifically trained to solve math problems.

The benchmarking dataset used is called MBPP (Mostly Basic Python Programming). This dataset consists of around 1,000 crowd-sourced Python programming problems.

What makes the scores especially notable is that Inflection AI tested against PaLM-2S, a PaLM 2 variant that was specifically fine-tuned for coding.
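Each MBPP problem pairs a short natural-language task description with three assert-based test cases, and a generated solution counts as correct only if all of its tests pass. The sketch below uses a made-up problem in that style. The record layout is loosely modeled on the Hugging Face `mbpp` dataset's `text` and `test_list` fields, and `passes` is a hypothetical helper. This illustrates the idea and is not Inflection AI's evaluation harness.

```python
# A hypothetical MBPP-style record: a short task description plus assert-based tests.
problem = {
    "text": "Write a function to return the largest even number in a list, or None if there is none.",
    "test_list": [
        "assert largest_even([1, 4, 7, 10]) == 10",
        "assert largest_even([3, 5, 7]) is None",
        "assert largest_even([-2, -8]) == -2",
    ],
}

# Stand-in for code a model might generate from the description.
candidate = '''
def largest_even(nums):
    evens = [n for n in nums if n % 2 == 0]
    return max(evens) if evens else None
'''


def passes(candidate_src: str, tests: list) -> bool:
    """Run the candidate code, then every assert; any exception means the problem is failed.

    Warning: exec() runs untrusted code. Real evaluation harnesses sandbox this step.
    """
    namespace = {}
    try:
        exec(candidate_src, namespace)
        for test in tests:
            exec(test, namespace)
    except Exception:
        return False
    return True


print(passes(candidate, problem["test_list"]))
```

A solution that simply returns `max(nums)` would pass the first test but fail the second, so it would score zero on this problem. MBPP grading is all-or-nothing per problem.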

MBPP Scores:

- LLaMA-2 70B: 45.0

- PaLM-2S: 50.0

- Inflection-2: 53.0

Screenshot of Full MBPP Scores

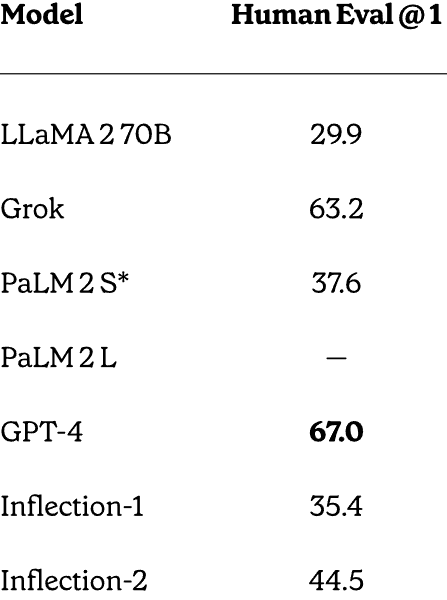

HumanEval Dataset Test

Inflection-2 also outperformed PaLM-2 on the HumanEval problem-solving dataset, which was developed and released by OpenAI.

Hugging Face describes this dataset:

“The HumanEval dataset released by OpenAI includes 164 programming problems with a function signature, docstring, body, and several unit tests.

They were handwritten to ensure not to be included in the training set of code generation models.

The programming problems are written in Python and contain English natural text in comments and docstrings.

The dataset was handcrafted by engineers and researchers at OpenAI.”
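HumanEval's format differs from MBPP's. The model is handed a function signature and docstring and must complete the body, which is then checked by a `check(candidate)` function containing the unit tests. The task below is invented for illustration. The field names `prompt`, `test`, and `entry_point` follow the layout of the published HumanEval records, while `run_task` is a hypothetical helper and not OpenAI's harness.

```python
# Hypothetical HumanEval-style task. The model sees `prompt` (signature + docstring)
# and must write the function body; `test` defines check(candidate) with unit tests.
task = {
    "prompt": (
        "def count_vowels(s: str) -> int:\n"
        '    """Return how many characters in s are vowels (a, e, i, o, u), ignoring case.\n'
        "    >>> count_vowels('Hello')\n"
        "    2\n"
        '    """\n'
    ),
    "test": (
        "def check(candidate):\n"
        "    assert candidate('Hello') == 2\n"
        "    assert candidate('') == 0\n"
        "    assert candidate('AEIOU xyz') == 5\n"
    ),
    "entry_point": "count_vowels",
}

# Stand-in for a model-generated completion: only the body, appended to the prompt.
completion = "    return sum(1 for ch in s.lower() if ch in 'aeiou')\n"


def run_task(task: dict, completion: str) -> bool:
    """Assemble prompt + completion, then call check() on the entry-point function.

    Warning: exec() runs untrusted code. Real harnesses isolate this step.
    """
    namespace = {}
    try:
        exec(task["prompt"] + completion, namespace)
        exec(task["test"], namespace)
        namespace["check"](namespace[task["entry_point"]])
    except Exception:
        return False
    return True


print(run_task(task, completion))
```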

These are the scores:

- LLaMA-2 70B: 29.9

- PaLM-2S: 37.6

- Inflection-2: 44.5

- GPT-4: 67.0

As can be seen above, only GPT-4 scored higher than Inflection-2. It should again be noted that Inflection-2 was not fine-tuned to solve these kinds of problems, which makes these scores an impressive achievement.
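HumanEval scores are conventionally reported as pass@k: the estimated probability that at least one of k generated samples for a problem passes its tests, averaged over all 164 problems. The article doesn't say which k or decoding settings produced the numbers above. The following is therefore only a sketch of the unbiased estimator described in OpenAI's Codex paper, using hypothetical sample counts.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them pass the tests.

    Returns the probability that at least one of k samples, drawn without
    replacement from the n, is a passing one: 1 - C(n - c, k) / C(n, k).
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k draw must include at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(per_problem: list, k: int) -> float:
    """Average pass@k over problems, as a percentage. per_problem holds (n, c) pairs."""
    return 100.0 * sum(pass_at_k(n, c, k) for n, c in per_problem) / len(per_problem)


# Hypothetical counts for three problems, 10 samples each.
counts = [(10, 10), (10, 3), (10, 0)]
print(round(benchmark_score(counts, k=1), 1))
```

With k=1 the estimator reduces to the fraction of passing samples, c/n, so the three hypothetical problems average to 43.3. Larger values of k reward a model that solves a problem only occasionally.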

Screenshot of Full HumanEval Scores

Inflection AI explains why these scores are significant:

“Results on math and coding benchmarks.

While our primary goal for Inflection-2 was not to optimize for these coding abilities, we see strong performance on both from our pre-trained model.

It’s possible to further enhance our model’s coding capabilities by fine-tuning on a code-heavy dataset.”

An Even More Powerful LLM Is Coming

The Inflection AI announcement stated that Inflection-2 was trained on 5,000 NVIDIA H100 GPUs. The company plans to train an even larger model on a 22,000-GPU cluster, more than four times the size of the 5,000-GPU cluster Inflection-2 was trained on.

Google and OpenAI are facing strong competition from both closed and open source startups. Inflection AI joins the top ranks of startups with powerful AI under development.

The PI personal assistant is a conversational AI platform built on state-of-the-art technology, with the potential to become even more powerful than other platforms that charge for access.

Read the official announcement:

Inflection-2: The Next Step Up

Visit the PI personal assistant online

Featured Image by Shutterstock/Malchevska