Image created by Author with Midjourney

- Skeleton-of-Thought (SoT) is an innovative prompt engineering technique that minimizes generation latency in Large Language Models (LLMs), enhancing their efficiency

- By creating a skeleton of the answer and then elaborating on each point in parallel, SoT emulates human thinking, promoting more reliable and on-target AI responses

- Implementing SoT in projects can significantly expedite problem-solving and answer generation, especially in scenarios demanding structured and efficient output from AI

SoT is an initial attempt at data-centric optimization for efficiency, and reveals the potential of pushing LLMs to think more like a human for answer quality.

Prompt engineering is ground zero in the battle for leveraging the potential of generative AI. By devising effective prompts and prompt-writing methodologies, we can guide AI in understanding the user’s intentions and addressing those intentions effectively. One notable technique in this realm is the Chain-of-Thought (CoT) method, which instructs the generative AI model to explain its logic step by step while approaching a task or responding to a query. Building upon CoT, a new and promising technique called Skeleton-of-Thought (SoT) has emerged, which aims to refine the way AI processes and outputs information, in the hope of promoting more reliable and on-target responses.

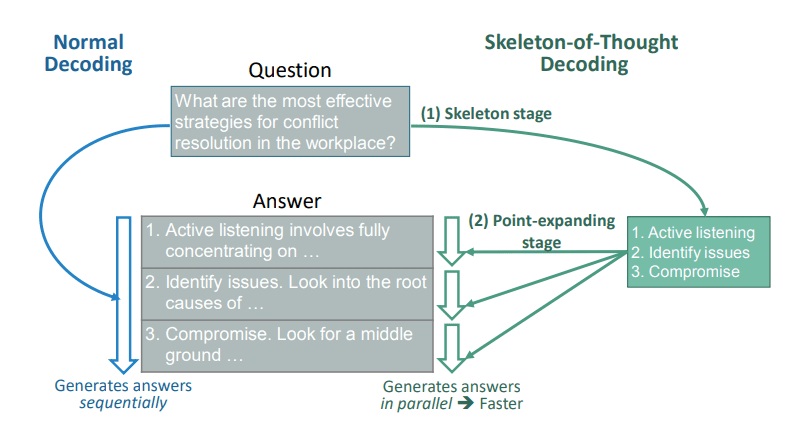

The genesis of Skeleton-of-Thought arises from the endeavor to minimize the generation latency inherent in large language models (LLMs). Unlike the sequential decoding approach, SoT emulates human thinking by first generating an answer’s skeleton, then filling in the details in parallel, speeding up the inference process significantly. Compared to CoT, SoT not only encourages a structured response but also efficiently organizes the generation process for enhanced performance in generative text systems.

Figure 1: The Skeleton-of-Thought process (from Skeleton-of-Thought: Large Language Models Can Do Parallel Decoding)

As mentioned above, implementing SoT involves prompting the LLM to create a skeleton of the problem-solving or answer-generating process, followed by parallel elaboration on each point. This method can be particularly useful in scenarios requiring efficient and structured output from AI. For instance, when processing large datasets or answering complex queries, SoT can significantly shorten the response time, providing a streamlined workflow. By integrating SoT into existing prompt engineering strategies, prompt engineers can harness the potential of generative text more effectively, reliably, and quickly.
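The two stages described here (one call for the skeleton, then one call per point issued concurrently) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the prompts or code from the SoT paper: the prompt wording, the `skeleton_of_thought` and `parse_skeleton` helpers, and the `stub_llm` stand-in are all hypothetical, and a real project would pass in its own function that calls a model.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def parse_skeleton(skeleton_text: str) -> List[str]:
    """Split a numbered skeleton ("1. point") into a list of points."""
    points = []
    for line in skeleton_text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Drop a leading "1." style number if present.
        head, sep, rest = line.partition(".")
        if sep and head.strip().isdigit():
            line = rest.strip()
        points.append(line)
    return points


def skeleton_of_thought(question: str, llm: Callable[[str], str]) -> str:
    """Answer a question in two stages: skeleton first, then parallel expansion."""
    # Stage 1: a single call that asks only for a short outline.
    skeleton_prompt = (
        "Give a short numbered skeleton (3 to 5 points, a few words each) "
        f"for answering the question.\nQuestion: {question}\nSkeleton:"
    )
    points = parse_skeleton(llm(skeleton_prompt))

    # Stage 2: one call per point, issued concurrently.
    def expand(point: str) -> str:
        prompt = (
            f"Question: {question}\n"
            f"Expand only this point in 1 to 2 sentences: {point}"
        )
        return llm(prompt)

    with ThreadPoolExecutor(max_workers=max(1, len(points))) as pool:
        expansions = list(pool.map(expand, points))  # map preserves input order

    return "\n".join(
        f"{i}. {point}: {text}"
        for i, (point, text) in enumerate(zip(points, expansions), start=1)
    )


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, so the sketch runs offline."""
    if prompt.rstrip().endswith("Skeleton:"):
        return "1. Light absorption\n2. Calvin cycle\n3. Oxygen release"
    point = prompt.rsplit(": ", 1)[-1]
    return f"(details about {point.lower()})"


if __name__ == "__main__":
    print(skeleton_of_thought("Describe the process of photosynthesis.", stub_llm))
```

Threads are used here on the assumption that each model call is a network request, so the calls spend most of their time waiting and can overlap. Each expansion prompt sees only the question and its own point, which is what makes the calls independent enough to run side by side.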

Perhaps the best way to demonstrate SoT is through example prompts.

Example 1

- Question: Describe the process of photosynthesis.

- Skeleton: Photosynthesis occurs in plants, involves converting light energy to chemical energy, creating glucose and oxygen.

- Point-expansion: Elaborate on light absorption, chlorophyll’s role, the Calvin cycle, and oxygen release.

Example 2

- Question: Explain the causes of the Great Depression.

- Skeleton: The Great Depression was caused by the stock market crash, bank failures, and reduced consumer spending.

- Point-expansion: Delve into Black Tuesday, the banking crisis of 1933, and the impact of reduced purchasing power.

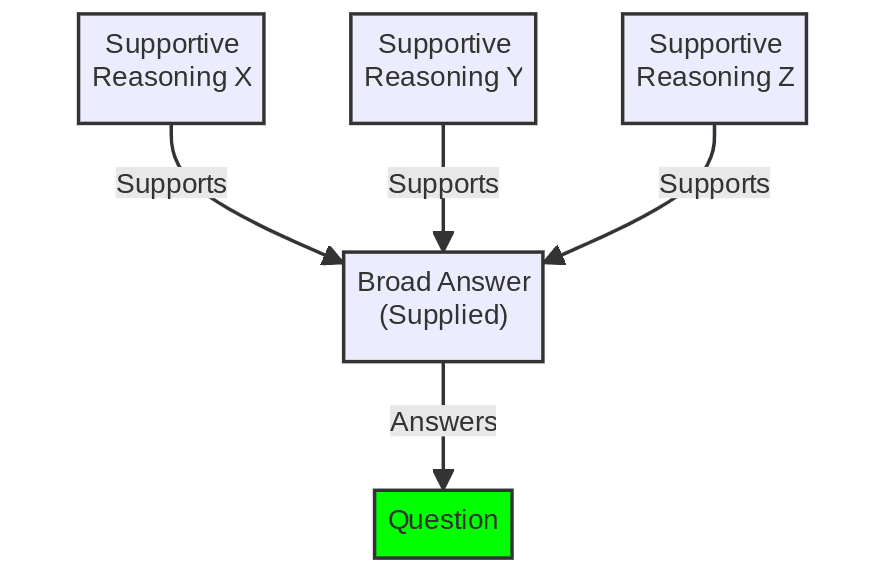

These examples demonstrate how SoT prompts facilitate a structured, step-by-step approach to answering complex questions. They also show the workflow: pose a question or define a goal, give the LLM a broad or inclusive answer from which to work backward toward the supporting reasoning, and then explicitly present those supporting points and prompt the model to elaborate on each.

Figure 2: The Skeleton-of-Thought simplified process (Image by Author)

While SoT offers a structured approach to problem-solving, it may not be suitable for all scenarios. Identifying the right use cases and understanding its implementation are important. Moreover, the transition from sequential to parallel processing might require a shift in system design or additional resources. Still, overcoming these hurdles can unveil the potential of SoT in enhancing the efficiency and reliability of generative text tasks.
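To make the trade-off concrete, here is a back-of-the-envelope latency model in Python. It is a deliberate simplification with hypothetical numbers, not a measurement: it assumes a fixed time per generated token, ignores prompt-processing and network overhead, and assumes the parallel calls do not slow one another down. The function names are illustrative only.

```python
from typing import List


def sequential_latency(point_tokens: List[int], sec_per_token: float) -> float:
    """One call that writes every point one after another."""
    return sum(point_tokens) * sec_per_token


def sot_latency(skeleton_tokens: int, point_tokens: List[int], sec_per_token: float) -> float:
    """Skeleton call first, then all points at once: wait for the longest one."""
    return (skeleton_tokens + max(point_tokens)) * sec_per_token


def sot_call_count(point_tokens: List[int]) -> int:
    """One skeleton call plus one expansion call per point."""
    return 1 + len(point_tokens)


if __name__ == "__main__":
    # Hypothetical sizes: three points of 120, 100, and 80 tokens,
    # a 30-token skeleton, and 0.02 seconds per generated token.
    points = [120, 100, 80]
    print("sequential:", sequential_latency(points, 0.02))
    print("SoT:", sot_latency(30, points, 0.02))
    print("calls needed by SoT:", sot_call_count(points))
```

Under these assumptions the wait shrinks from the sum of all points to the skeleton plus the longest point, while the number of model calls grows from one to one per point plus the skeleton call. That extra concurrency is the additional resource cost mentioned above.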

The SoT technique, building on the CoT method, offers a new approach in prompt engineering. It not only expedites the generation process but also fosters a structured and reliable output. By exploring and integrating SoT in projects, practitioners can significantly enhance the performance and usability of generative text, driving toward more efficient and insightful solutions.

Matthew Mayo (@mattmayo13) holds a Master’s degree in computer science and a graduate diploma in data mining. As Editor-in-Chief of KDnuggets, Matthew aims to make complex data science concepts accessible. His professional interests include natural language processing, machine learning algorithms, and exploring emerging AI. He is driven by a mission to democratize knowledge in the data science community. Matthew has been coding since he was 6 years old.