Picture by Freepik

This complete article will focus on time-based information visualization utilizing Python with the Pandas library. As , time-series information is a treasure trove of insights, and with the skillful resampling approach, you possibly can rework uncooked temporal information into visually compelling narratives. Whether or not you are an information fanatic, scientist, analyst, or simply interested by unraveling the tales hidden inside time-based information, this text enable you with the data and instruments to revamp your information visualization abilities. So, let’s begin discussing the Pandas resampling methods and switch information into informative and charming temporal masterpieces.

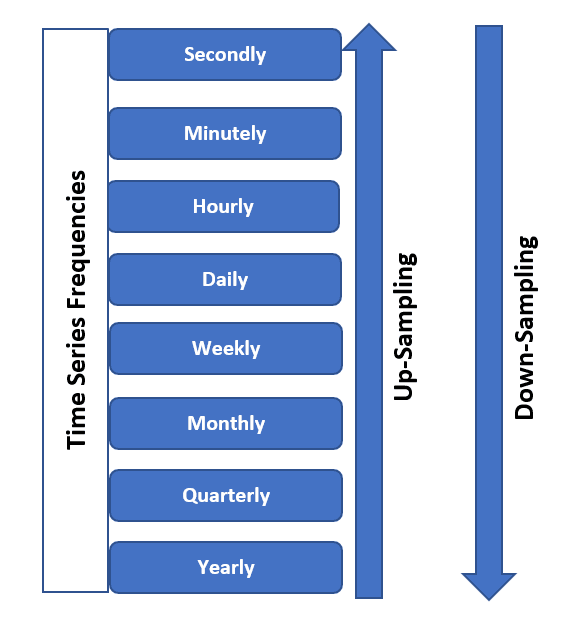

Whereas working with time-based information visualization, Information resampling is essential and really helpful. It lets you management the granularity of the information to extract significant insights and create visually compelling representations to grasp it higher. Within the under image, you possibly can observe that you would be able to both upsample or downsample your time collection information when it comes to frequencies based mostly in your necessities.

Picture from SQLRelease

Principally, the 2 major functions of knowledge resampling are talked about under:

- Granularity Adjustment: Amassing the massive information lets you change the time intervals at which information factors are collected or aggregated. You may get solely the important data as a substitute of getting the noise. This can assist you take away the noisy information, which converts the information to extra manageable for visualization.

- Alignment: It additionally helps align information from a number of sources with completely different time intervals, guaranteeing consistency when creating visualizations or conducting analyses.

For Instance,

Suppose you will have every day inventory worth information for a selected firm that you’re getting from a inventory alternate, and also you intention to visualise the long-term tendencies with out together with the noisy information factors in your evaluation. So, to do that, you possibly can resample this every day information to a month-to-month frequency by taking the common closing worth for every month, and in consequence, the scale of the information for visualization objective lower, and your evaluation can present higher insights.

import pandas as pd

# Pattern every day inventory worth information

information = {

'Date': pd.date_range(begin="2023-01-01", intervals=365, freq='D'),

'StockPrice': [100 + i + 10 * (i % 7) for i in range(365)]

}

df = pd.DataFrame(information)

# Resample to month-to-month frequency

monthly_data = df.resample('M', on='Date').imply()

print(monthly_data.head())

Within the above instance, you will have noticed that we now have resampled the every day information into month-to-month intervals and calculated the imply closing worth for every month, resulting from which you bought the smoother, much less noisy illustration of the inventory worth information, making it simpler to determine long-term tendencies and patterns for choice making.

When working with time-series information, the primary parameter for resampling is the frequency, which you need to choose accurately to get insightful and sensible visualizations. Principally, there’s a tradeoff between granularity, which suggests how detailed the information is, and readability, which implies how properly the information patterns are revealed.

For Instance,

Think about you will have temperature information recorded each minute for a 12 months. Suppose it’s a must to visualize the annual temperature pattern; utilizing minute-level information would lead to an excessively dense and cluttered plot. Alternatively, should you combination the information to yearly averages, you may lose priceless data.

# Pattern minute-level temperature information

information = {

'Timestamp': pd.date_range(begin="2023-01-01", intervals=525600, freq='T'),

'Temperature': [20 + 10 * (i % 1440) / 1440 for i in range(525600)]

}

df = pd.DataFrame(information)

# Resample to completely different frequencies

daily_avg = df.resample('D', on='Timestamp').imply()

monthly_avg = df.resample('M', on='Timestamp').imply()

yearly_avg = df.resample('Y', on='Timestamp').imply()

print(daily_avg.head())

print(monthly_avg.head())

print(yearly_avg.head())

On this instance, we resample the minute-level temperature information into every day, month-to-month, and yearly averages. Relying in your analytical or visualization targets, you possibly can select the extent of element that finest serves your objective. Each day averages reveal every day temperature patterns, whereas yearly averages present a high-level overview of annual tendencies.

By deciding on the optimum resampling frequency, you possibly can stability the quantity of knowledge element with the readability of your visualizations, guaranteeing your viewers can simply discern the patterns and insights you wish to convey.

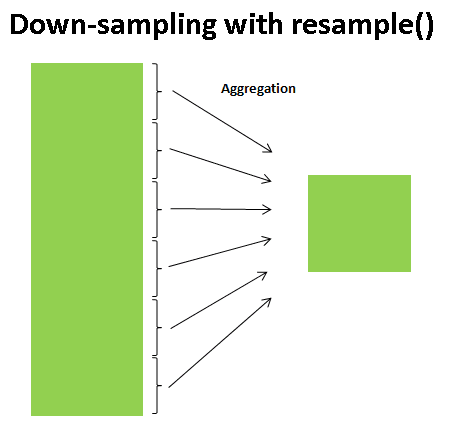

When working with time-based information, it is important to grasp numerous aggregation strategies and methods. These strategies help you successfully summarize and analyze your information, revealing completely different features of your time-based data. Normal aggregation strategies embrace calculating sums and means or making use of customized capabilities.

Picture from TowardsDataScience

For Instance,

Take into account you will have a dataset containing every day gross sales information for a retail retailer over a 12 months. You wish to analyze the yearly income pattern. To do that, you need to use aggregation strategies to calculate the whole gross sales for every month and 12 months.

# Pattern every day gross sales information

information = {

'Date': pd.date_range(begin="2023-01-01", intervals=365, freq='D'),

'Gross sales': [1000 + i * 10 + 5 * (i % 30) for i in range(365)]

}

df = pd.DataFrame(information)

# Calculate month-to-month and yearly gross sales with the aggregation methodology

monthly_totals = df.resample('M', on='Date').sum()

yearly_totals = df.resample('Y', on='Date').sum()

print(monthly_totals.head())

print(yearly_totals.head())

On this instance, we resample the every day gross sales information into month-to-month and yearly totals utilizing the sum() aggregation methodology. By doing this, you possibly can analyze the gross sales pattern at completely different ranges of granularity. Month-to-month totals present insights into differences due to the season, whereas yearly totals give a high-level overview of the annual efficiency.

Relying in your particular evaluation necessities, you may also use different aggregation strategies like calculating means and medians or making use of customized capabilities relying on the dataset distribution, which is significant in line with the issue. These strategies help you extract priceless insights out of your time-based information by summarizing it in a manner that is smart on your evaluation or visualization targets.

Dealing with lacking information is a vital side of working with time collection, guaranteeing that your visualizations and analyses stay correct and informative even when coping with gaps in your information.

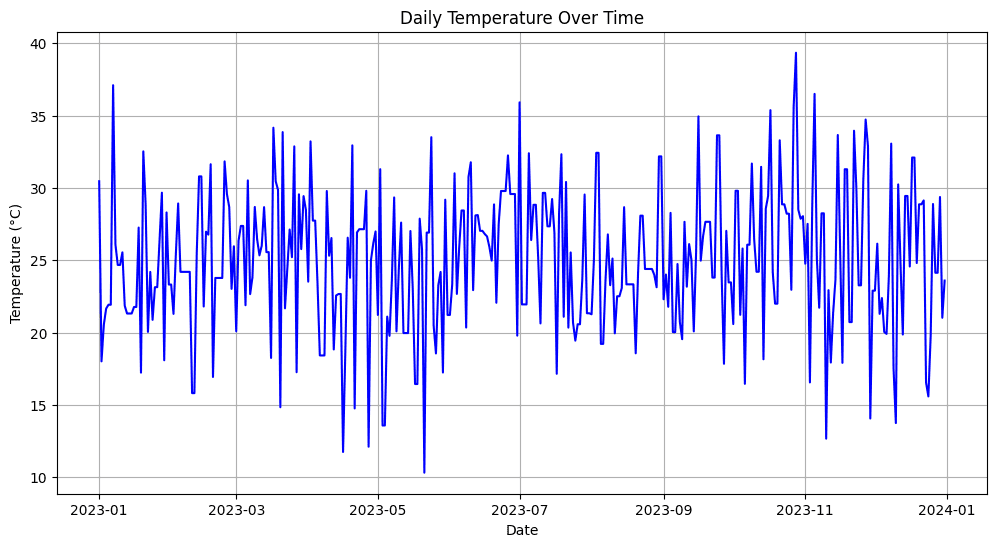

For Instance,

Think about you are working with a historic temperature dataset, however some days have lacking temperature readings resulting from gear malfunctions or information assortment errors. You could deal with these lacking values to create significant visualizations and keep information integrity.

# Pattern temperature information with lacking values

information = {

'Date': pd.date_range(begin="2023-01-01", intervals=365, freq='D'),

'Temperature': [25 + np.random.randn() * 5 if np.random.rand() > 0.2 else np.nan for _ in range(365)]

}

df = pd.DataFrame(information)

# Ahead-fill lacking values (fill with the day prior to this's temperature)

df['Temperature'].fillna(methodology='ffill', inplace=True)

# Visualize the temperature information

import matplotlib.pyplot as plt

plt.determine(figsize=(12, 6))

plt.plot(df['Date'], df['Temperature'], label="Temperature", colour="blue")

plt.title('Each day Temperature Over Time')

plt.xlabel('Date')

plt.ylabel('Temperature (°C)')

plt.grid(True)

plt.present()

Output:

Picture by Creator

Within the above instance, you possibly can see that firstly, we now have simulated the lacking temperature values (about 20% of the information) after which used the forward-fill (ffill) methodology to fill within the gaps, which signifies that the lacking values are changed with the temperature from the day prior to this.

Subsequently, dealing with the lacking information ensures that your visualizations precisely signify the underlying tendencies and patterns within the time collection, stopping gaps from distorting your insights or deceptive your viewers. Varied methods, reminiscent of interpolation or backward-filling, may be employed based mostly on the character of the information and the analysis query.

Information resampling in pandas lets you visualize tendencies and patterns in sequential or time-based information, which additional lets you accumulate insights and successfully talk the outcomes to others. In consequence, you’ll find clear and informative visible representations of your information to spotlight the completely different parts, together with tendencies, seasonality, and irregular patterns (presumably the noise within the information)

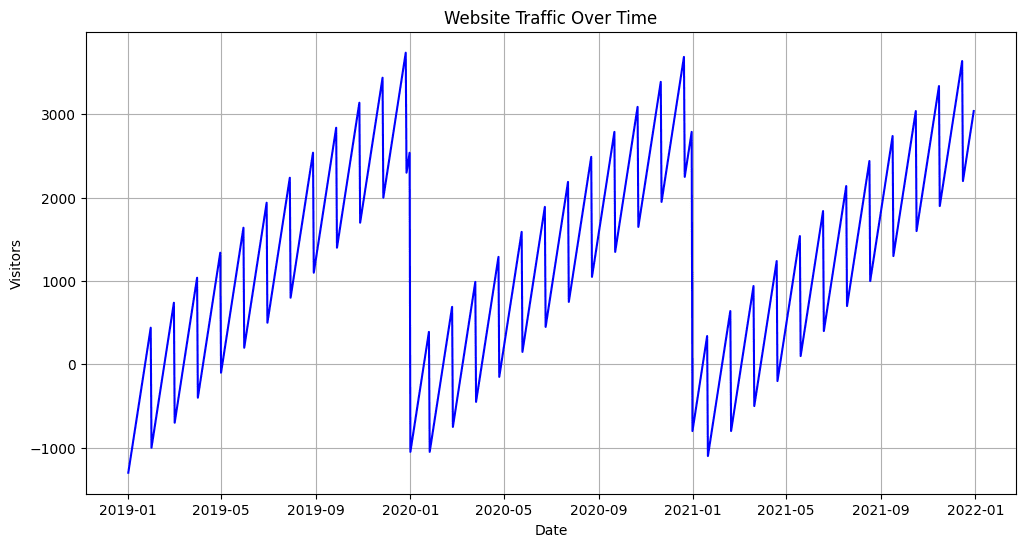

For Instance,

Suppose you will have a dataset containing every day web site visitors information collected over the previous years. You intention to visualise the general visitors pattern within the subsequent years, determine any seasonal patterns, and spot irregular spikes or dips in visitors.

# Pattern every day web site visitors information

information = {

'Date': pd.date_range(begin="2019-01-01", intervals=1095, freq='D'),

'Guests': [500 + 10 * ((i % 365) - 180) + 50 * (i % 30) for i in range(1095)]

}

df = pd.DataFrame(information)

# Create a line plot to visualise the pattern

plt.determine(figsize=(12, 6))

plt.plot(df['Date'], df['Visitors'], label="Each day Guests", colour="blue")

plt.title('Web site Site visitors Over Time')

plt.xlabel('Date')

plt.ylabel('Guests')

plt.grid(True)

# Add seasonal decomposition plot

from statsmodels.tsa.seasonal import seasonal_decompose

outcome = seasonal_decompose(df['Visitors'], mannequin="additive", freq=365)

outcome.plot()

plt.present()

Output:

Picture by Creator

Within the above instance, we now have first created a line plot to visualise the every day web site visitors pattern over time. This plot describes the general development and any irregular patterns within the dataset. Additionally, to decompose the information into completely different parts, we use the seasonal decomposition approach from the statsmodels library, together with pattern, seasonality, and residual parts.

This manner, you possibly can successfully talk the web site’s visitors tendencies, seasonality, and anomalies to stakeholders, which reinforces your potential to derive necessary insights from time-based information and convert it into data-driven choices.

Colab Pocket book hyperlink: https://colab.analysis.google.com/drive/19oM7NMdzRgQrEDfRsGhMavSvcHx79VDK#scrollTo=nHg3oSjPfS-Y

On this article, we mentioned the time-based resampling of knowledge in Python. So, to conclude our session, let’s summarize the details lined on this article:

- Time-based resampling is a robust approach for reworking and summarizing time-series information to get higher insights for decision-making.

- Cautious number of resampling frequency is crucial to stability granularity and readability in information visualization.

- Aggregation strategies like sum, imply, and customized capabilities assist reveal completely different features of time-based information.

- Efficient visualization methods assist in figuring out tendencies, seasonality, and irregular patterns, facilitating clear communication of findings.

- Actual-world use instances in finance, climate forecasting, and social media analytics exhibit the wide-ranging impression of time-based resampling.

Aryan Garg is a B.Tech. Electrical Engineering scholar, at present within the remaining 12 months of his undergrad. His curiosity lies within the area of Net Growth and Machine Studying. He have pursued this curiosity and am wanting to work extra in these instructions.