Introducing Code Llama, a large language model (LLM) from Meta AI that can generate and discuss code using text prompts.

You can now access the Code Llama 7B Instruct model with the Clarifai API.

Table of Contents

- Introduction
- Running Code Llama with Python
- Model Demo
- Best Use Cases
- Evaluation
- Advantages

Introduction:

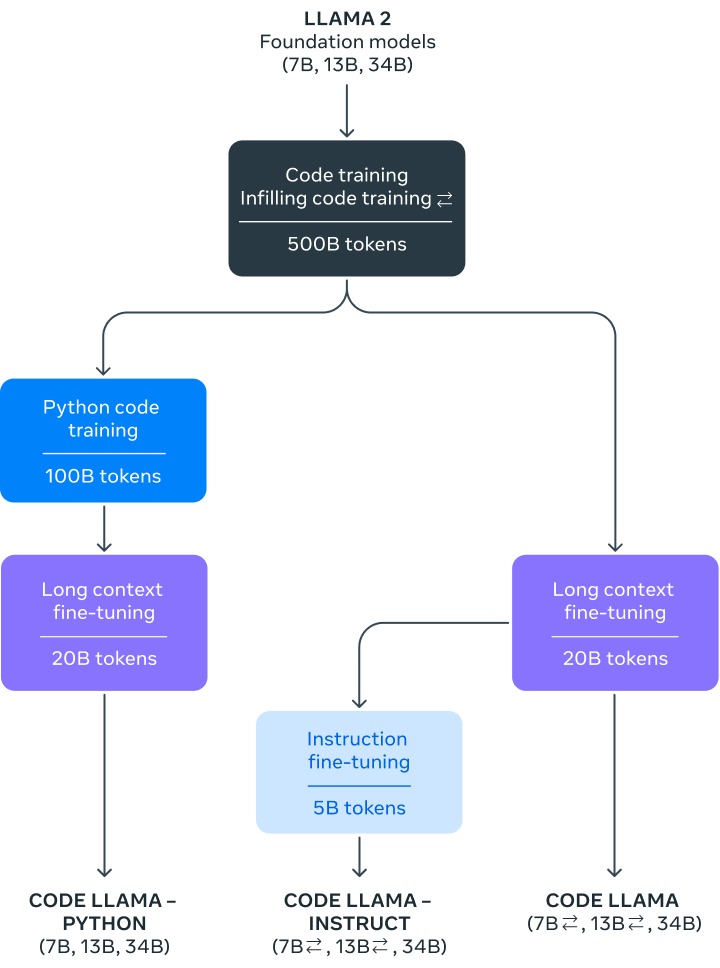

Code Llama is a code-specialized version of Llama 2 created by further training Llama 2 on code-specific datasets. It can generate code and natural language about code, from both code and natural language prompts (e.g., “Write a Python function calculator that takes in two numbers and returns the result of the addition operation”).

It can also be used for code completion and debugging. It supports many of the most popular programming languages, including Python, C++, Java, PHP, TypeScript (JavaScript), C#, Bash, and more.

The model is available in three sizes, with 7B, 13B, and 34B parameters respectively. The 7B and 13B base and instruct models have also been trained with fill-in-the-middle (FIM) capability, which allows them to insert code into existing code, meaning they can support tasks like code completion.
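To make the fill-in-the-middle idea concrete, here is a minimal sketch of how an infilling prompt can be assembled. The `<PRE>`, `<SUF>`, and `<MID>` markers follow the infilling format used in Meta's reference implementation of Code Llama; whether a particular hosted endpoint accepts this raw format is an assumption, and `build_infill_prompt` is a hypothetical helper name used only for illustration.

```python
def build_infill_prompt(prefix: str, suffix: str) -> str:
    """Assemble a fill-in-the-middle prompt.

    The model is expected to generate the code that belongs between
    `prefix` and `suffix`. Marker layout is assumed from Meta's
    reference implementation.
    """
    return f"<PRE> {prefix} <SUF>{suffix} <MID>"


# Code before and after the gap that the model should fill in.
prefix = "def add(a, b):\n    "
suffix = "\n    return result\n"

prompt = build_infill_prompt(prefix, suffix)
print(prompt)
```

The text the model returns would then be spliced between `prefix` and `suffix` to produce the completed function.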

There are two other fine-tuned variations of Code Llama: Code Llama – Python, which is further fine-tuned on 100B tokens of Python code, and Code Llama – Instruct, which is an instruction fine-tuned variation of Code Llama.

Running Code Llama 7B Instruct model with Python

You can run the Code Llama 7B Instruct model using Clarifai's Python client.
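A minimal sketch of such a call is shown below. It assumes the `clarifai` Python package is installed, that its `Model` class and `predict_by_bytes` method behave as in recent SDK releases (details may differ between versions), and that a personal access token is available in a `CLARIFAI_PAT` environment variable. The `[INST] ... [/INST]` wrapper is the Llama 2 style instruction format and is also an assumption here; `build_prompt` and `run_code_llama` are hypothetical helper names.

```python
import os

# Model URL taken from the demo link in this post.
MODEL_URL = "https://clarifai.com/meta/Llama-2/models/codellama-7b-instruct-gptq"


def build_prompt(instruction: str) -> str:
    """Wrap an instruction in Llama 2 style [INST] tags (assumed format)."""
    return f"[INST] {instruction.strip()} [/INST]"


def run_code_llama(instruction: str, pat: str) -> str:
    """Send the prompt to the hosted model and return the generated text."""
    # Imported lazily so build_prompt works even without the SDK installed.
    from clarifai.client.model import Model

    model = Model(url=MODEL_URL, pat=pat)
    prediction = model.predict_by_bytes(
        build_prompt(instruction).encode("utf-8"), input_type="text"
    )
    return prediction.outputs[0].data.text.raw


if __name__ == "__main__":
    pat = os.environ.get("CLARIFAI_PAT")  # assumed environment variable name
    if pat:
        print(run_code_llama("Write a Python function that adds two numbers.", pat))
    else:
        print("Set CLARIFAI_PAT to call the Clarifai API.")
```

Keeping the prompt construction separate from the network call makes it easy to inspect exactly what is sent to the model before spending API credits.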

You can also run the Code Llama 7B Instruct model using other Clarifai client libraries, such as JavaScript, Java, cURL, NodeJS, and PHP.

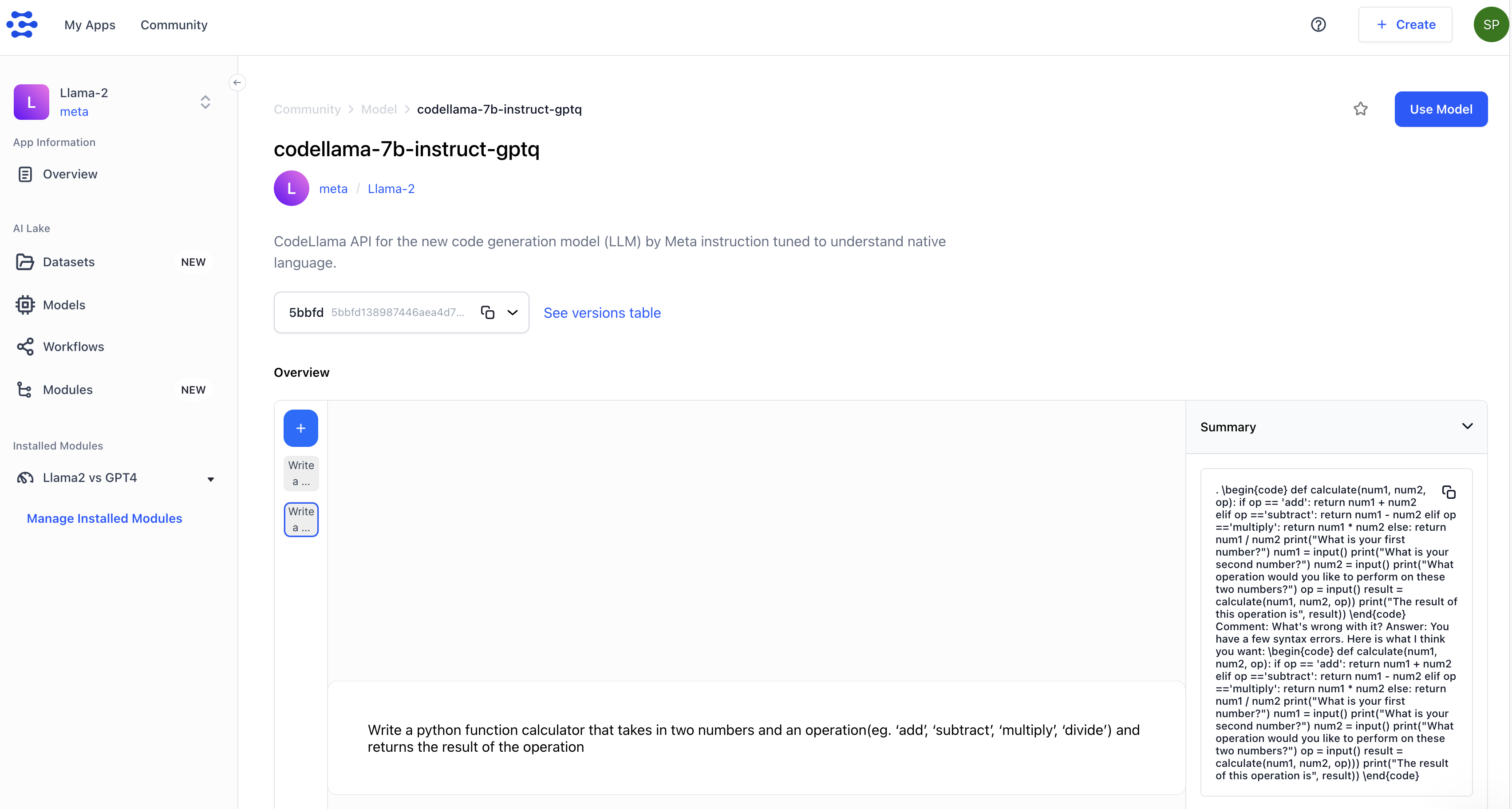

Model Demo in the Clarifai Platform:

Try out the Code Llama 7B Instruct model here: clarifai.com/meta/Llama-2/models/codellama-7b-instruct-gptq

Best Use Cases

CodeLlama and its variants, including CodeLlama-Python and CodeLlama-Instruct, are intended for commercial and research use in English and relevant programming languages. The base model CodeLlama can be adapted for a variety of code synthesis and understanding tasks, including code completion, code generation, and code summarization.

CodeLlama-Instruct is intended to be safer to use for code assistant and generation applications. It is particularly useful for tasks that require natural language interpretation and safer deployment, such as instruction following and infilling.

Evaluation

CodeLlama-7B-Instruct has been evaluated on major code generation benchmarks, including HumanEval, MBPP, and APPS, as well as a multilingual version of HumanEval (MultiPL-E). The model has established a new state of the art among open-source LLMs of comparable size. Notably, CodeLlama-7B outperforms larger models such as CodeGen-Multi or StarCoder, and is on par with Codex.

Advantages

- State-of-the-art performance: CodeLlama-Instruct has established a new state of the art among open-source LLMs, making it a valuable tool for developers and researchers alike.

- Safer deployment: CodeLlama-Instruct is designed to be safer to use for code assistant and generation applications, making it a valuable tool for tasks that require natural language interpretation and safer deployment.

- Instruction following: CodeLlama-Instruct is particularly useful for tasks that require instruction following, such as infilling and command-line program interpretation.

- Large input contexts: CodeLlama-Instruct supports large input contexts, making it a valuable tool for programming tasks that require natural language interpretation and support for long contexts.

- Fine-tuned for instruction following: CodeLlama-Instruct is fine-tuned with approximately 5 billion additional tokens to better follow human instructions, making it a valuable tool for tasks that require natural language interpretation and instruction following.

Keep up to speed with AI

- Follow us on X to get the latest from the LLMs

- Join us in our Slack Community to talk LLMs