Generated utilizing ideogram.ai with the immediate: “A photograph of LLAMA with the banner written “QLora” on it., 3d render, wildlife images”

It was a dream to fine-tune a 7B mannequin on a single GPU without spending a dime on Google Colab till just lately. On 23 Might 2023, Tim Dettmers and his group submitted a revolutionary paper[1] on fine-tuning Quantized Massive Language Fashions.

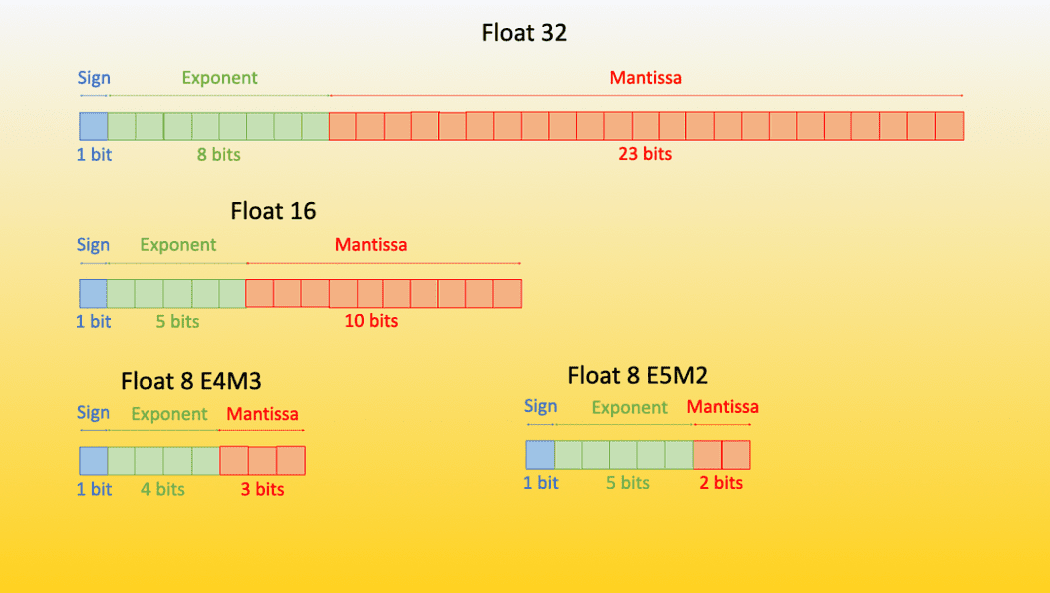

A Quantized mannequin is a mannequin that has its weights in an information sort that’s decrease than the information sort on which it was skilled. For instance, should you practice a mannequin in a 32-bit floating level, after which convert these weights to a decrease information sort reminiscent of 16/8/4 bit floating level such that there’s minimal to no impact on the efficiency of the mannequin.

Supply [2]

We’re not going to speak a lot in regards to the idea of quantization right here, You possibly can confer with the wonderful weblog submit by Hugging-Face[2][3] and a very good YouTube video[4] by Tim Dettmers himself to grasp the underlying idea.

Briefly, it may be stated that QLora means:

Superb-Tuning a Quantized Massive Language fashions utilizing Low Rank Adaptation Matrices (LoRA)[5]

Let’s leap straight into the code:

It is very important perceive that the massive language fashions are designed to take directions, this was first launched within the 2021 ACL paper[6]. The concept is easy, we give a language mannequin an instruction, and it follows the instruction and performs that activity. So the dataset that we need to fine-tune our mannequin must be within the instruct format, if not we are able to convert it.

One of many widespread codecs is the instruct format. We might be utilizing the Alpaca Immediate Template[7] which is

Beneath is an instruction that describes a activity, paired with an enter that gives additional context. Write a response that appropriately completes the request.

### Instruction:

{instruction}

### Enter:

{enter}

### Response:

{response}

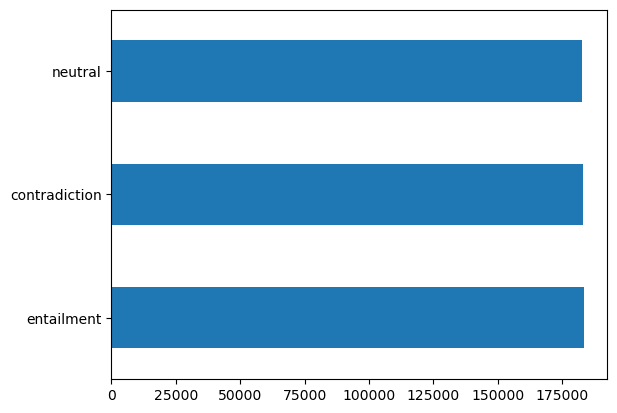

We might be utilizing the SNLI dataset which is a dataset that has 2 sentences and the connection between them whether or not they’re contradiction, entailment of one another, or impartial. We might be utilizing it to generate contradiction for a sentence utilizing LLAMAv2. We are able to load this dataset merely utilizing pandas.

import pandas as pd

df = pd.read_csv('snli_1.0_train_matched.csv')

df['gold_label'].value_counts().plot(type='barh')

Labels Distribution

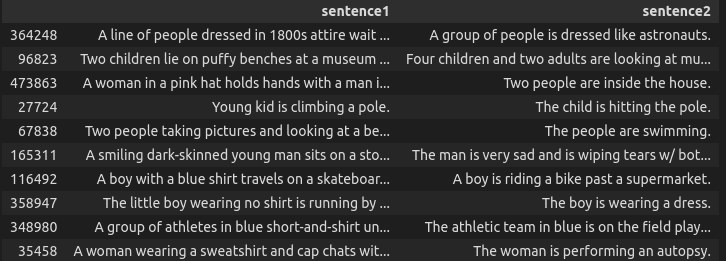

We are able to see just a few random contradiction examples right here.

df[df['gold_label'] == 'contradiction'].pattern(10)[['sentence1', 'sentence2']]

Contradiction Examples from SNLI

Now we are able to create a small operate that takes solely the contradictory sentences and converts the dataset instruct format.

def convert_to_format(row):

sentence1 = row['sentence1']

sentence2 = row['sentence2']ccccc

immediate = """Beneath is an instruction that describes a activity paired with enter that gives additional context. Write a response that appropriately completes the request."""

instruction = """Given the next sentence, your job is to generate the negation for it within the json format"""

enter = str(sentence1)

response = f"""```json

{{'orignal_sentence': '{sentence1}', 'generated_negation': '{sentence2}'}}

```

"""

if len(enter.strip()) == 0: # immediate + 2 new traces + ###instruction + new line + enter + new line + ###response

textual content = immediate + "nn### Instruction:n" + instruction + "n### Response:n" + response

else:

textual content = immediate + "nn### Instruction:n" + instruction + "n### Enter:n" + enter + "n" + "n### Response:n" + response

# we want 4 columns for auto practice, instruction, enter, output, textual content

return pd.Collection([instruction, input, response, text])

new_df = df[df['gold_label'] == 'contradiction'][['sentence1', 'sentence2']].apply(convert_to_format, axis=1)

new_df.columns = ['instruction', 'input', 'output', 'text']

new_df.to_csv('snli_instruct.csv', index=False)

Right here is an instance of the pattern information level:

"Beneath is an instruction that describes a activity paired with enter that gives additional context. Write a response that appropriately completes the request.

### Instruction:

Given the next sentence, your job is to generate the negation for it within the json format

### Enter:

A pair enjoying with a bit of boy on the seashore.

### Response:

```json

{'orignal_sentence': 'A pair enjoying with a bit of boy on the seashore.', 'generated_negation': 'A pair watch a bit of lady play by herself on the seashore.'}

```

Now we now have our dataset within the appropriate format, let’s begin with fine-tuning. Earlier than beginning it, let’s set up the mandatory packages. We might be utilizing speed up, peft (Parameter environment friendly Superb Tuning), mixed with Hugging Face Bits and bytes and transformers.

!pip set up -q speed up==0.21.0 peft==0.4.0 bitsandbytes==0.40.2 transformers==4.31.0 trl==0.4.7

import os

import torch

from datasets import load_dataset

from transformers import (

AutoModelForCausalLM,

AutoTokenizer,

BitsAndBytesConfig,

HfArgumentParser,

TrainingArguments,

pipeline,

logging,

)

from peft import LoraConfig, PeftModel

from trl import SFTTrainer

You possibly can add the formatted dataset to the drive and cargo it within the Colab.

from google.colab import drive

import pandas as pd

drive.mount('/content material/drive')

df = pd.read_csv('/content material/drive/MyDrive/snli_instruct.csv')

You possibly can convert it to the Hugging Face dataset format simply utilizing from_pandas methodology, this might be useful in coaching the mannequin.

from datasets import Dataset

dataset = Dataset.from_pandas(df)

We might be utilizing the already quantized LLamav2 mannequin which is supplied by abhishek/llama-2–7b-hf-small-shards. Let’s outline some hyperparameters and variables right here:

# The mannequin that you just need to practice from the Hugging Face hub

model_name = "abhishek/llama-2-7b-hf-small-shards"

# Superb-tuned mannequin identify

new_model = "llama-2-contradictor"

################################################################################

# QLoRA parameters

################################################################################

# LoRA consideration dimension

lora_r = 64

# Alpha parameter for LoRA scaling

lora_alpha = 16

# Dropout chance for LoRA layers

lora_dropout = 0.1

################################################################################

# bitsandbytes parameters

################################################################################

# Activate 4-bit precision base mannequin loading

use_4bit = True

# Compute dtype for 4-bit base fashions

bnb_4bit_compute_dtype = "float16"

# Quantization sort (fp4 or nf4)

bnb_4bit_quant_type = "nf4"

# Activate nested quantization for 4-bit base fashions (double quantization)

use_nested_quant = False

################################################################################

# TrainingArguments parameters

################################################################################

# Output listing the place the mannequin predictions and checkpoints might be saved

output_dir = "./outcomes"

# Variety of coaching epochs

num_train_epochs = 1

# Allow fp16/bf16 coaching (set bf16 to True with an A100)

fp16 = False

bf16 = False

# Batch measurement per GPU for coaching

per_device_train_batch_size = 4

# Batch measurement per GPU for analysis

per_device_eval_batch_size = 4

# Variety of replace steps to build up the gradients for

gradient_accumulation_steps = 1

# Allow gradient checkpointing

gradient_checkpointing = True

# Most gradient regular (gradient clipping)

max_grad_norm = 0.3

# Preliminary studying fee (AdamW optimizer)

learning_rate = 1e-5

# Weight decay to use to all layers besides bias/LayerNorm weights

weight_decay = 0.001

# Optimizer to make use of

optim = "paged_adamw_32bit"

# Studying fee schedule

lr_scheduler_type = "cosine"

# Variety of coaching steps (overrides num_train_epochs)

max_steps = -1

# Ratio of steps for a linear warmup (from 0 to studying fee)

warmup_ratio = 0.03

# Group sequences into batches with similar size

# Saves reminiscence and hurries up coaching significantly

group_by_length = True

# Save checkpoint each X updates steps

save_steps = 0

# Log each X updates steps

logging_steps = 100

################################################################################

# SFT parameters

################################################################################

# Most sequence size to make use of

max_seq_length = None

# Pack a number of brief examples in the identical enter sequence to extend effectivity

packing = False

# Load the whole mannequin on the GPU 0

device_map = {"": 0}

Most of those are fairly simple hyper-parameters having these default values. You possibly can all the time confer with the documentation for extra particulars.

We are able to now merely use BitsAndBytesConfig class to create the config for 4-bit fine-tuning.

compute_dtype = getattr(torch, bnb_4bit_compute_dtype)

bnb_config = BitsAndBytesConfig(

load_in_4bit=use_4bit,

bnb_4bit_quant_type=bnb_4bit_quant_type,

bnb_4bit_compute_dtype=compute_dtype,

bnb_4bit_use_double_quant=use_nested_quant,

)

Now we are able to load the bottom mannequin with 4 bit BitsAndBytesConfig and tokenizer for Superb-Tuning.

tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

tokenizer.pad_token = tokenizer.eos_token

tokenizer.padding_side = "proper"

mannequin = AutoModelForCausalLM.from_pretrained(

model_name,

quantization_config=bnb_config,

device_map=device_map

)

mannequin.config.use_cache = False

mannequin.config.pretraining_tp = 1

We are able to now create the LoRA config and set the coaching parameters.

# Load LoRA configuration

peft_config = LoraConfig(

lora_alpha=lora_alpha,

lora_dropout=lora_dropout,

r=lora_r,

bias="none",

task_type="CAUSAL_LM",

)

# Set coaching parameters

training_arguments = TrainingArguments(

output_dir=output_dir,

num_train_epochs=num_train_epochs,

per_device_train_batch_size=per_device_train_batch_size,

gradient_accumulation_steps=gradient_accumulation_steps,

optim=optim,

save_steps=save_steps,

logging_steps=logging_steps,

learning_rate=learning_rate,

weight_decay=weight_decay,

fp16=fp16,

bf16=bf16,

max_grad_norm=max_grad_norm,

max_steps=max_steps,

warmup_ratio=warmup_ratio,

group_by_length=group_by_length,

lr_scheduler_type=lr_scheduler_type,

report_to="tensorboard"

)

Now we are able to merely use SFTTrainer which is supplied by trl from HuggingFace to start out the coaching.

# Set supervised fine-tuning parameters

coach = SFTTrainer(

mannequin=mannequin,

train_dataset=dataset,

peft_config=peft_config,

dataset_text_field="textual content", # that is the textual content column in dataset

max_seq_length=max_seq_length,

tokenizer=tokenizer,

args=training_arguments,

packing=packing,

)

# Practice mannequin

coach.practice()

# Save skilled mannequin

coach.mannequin.save_pretrained(new_model)

It will begin the coaching for the variety of epochs you’ve gotten set above. As soon as the mannequin is skilled, ensure that to reserve it within the drive with the intention to load it once more (as you must restart the session within the colab). You possibly can retailer the mannequin within the drive through zip and mv command.

!zip -r llama-contradictor.zip outcomes llama-contradictor

!mv llama-contradictor.zip /content material/drive/MyDrive

Now while you restart the Colab session, you’ll be able to transfer it again to your session once more.

!unzip /content material/drive/MyDrive/llama-contradictor.zip -d .

It’s essential load the bottom mannequin once more and merge it with the fine-tuned LoRA matrices. This may be finished utilizing merge_and_unload() operate.

tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

tokenizer.pad_token = tokenizer.eos_token

tokenizer.padding_side = "proper"

base_model = AutoModelForCausalLM.from_pretrained(

"abhishek/llama-2-7b-hf-small-shards",

low_cpu_mem_usage=True,

return_dict=True,

torch_dtype=torch.float16,

device_map={"": 0},

)

mannequin = PeftModel.from_pretrained(base_model, '/content material/llama-contradictor')

mannequin = mannequin.merge_and_unload()

pipe = pipeline(activity="text-generation", mannequin=mannequin, tokenizer=tokenizer, max_length=200)

You possibly can take a look at your mannequin by merely passing within the inputs in the identical immediate template that we now have outlined above.

prompt_template = """### Instruction:

Given the next sentence, your job is to generate the negation for it within the json format

### Enter:

{}

### Response:

"""

sentence = "The climate forecast predicts a sunny day with a excessive temperature round 30 levels Celsius, excellent for a day on the seashore with family and friends."

input_sentence = prompt_template.format(sentence.strip())

end result = pipe(input_sentence)

print(end result)

### Instruction:

Given the next sentence, your job is to generate the negation for it within the json format

### Enter:

The climate forecast predicts a sunny day with a excessive temperature round 30 levels Celsius, excellent for a day on the seashore with family and friends.

### Response:

```json

{

"sentence": "The climate forecast predicts a sunny day with a excessive temperature round 30 levels Celsius, excellent for a day on the seashore with family and friends.",

"negation": "The climate forecast predicts a wet day with a low temperature round 10 levels Celsius, not superb for a day on the seashore with family and friends."

}

```

There might be many occasions when the mannequin will carry on predicting even after the response is generated as a result of token restrict. On this case, it’s essential to add a post-processing operate that filters the JSON half which is what we want. This may be finished utilizing a easy Regex.

import re

import json

def format_results(s):

sample = r'```jsonn(.*?)n```'

# Discover all occurrences of JSON objects within the string

json_matches = re.findall(sample, s, re.DOTALL)

if not json_matches:

# attempt to discover 2nd sample

sample = r'{.*?"sentence":.*?"negation":.*?}'

json_matches = re.findall(sample, s)

# Return the primary JSON object discovered, or None if no match is discovered

return json.masses(json_matches[0]) if json_matches else None

This gives you the required output as an alternative of the mannequin repeating random output tokens.

On this weblog, you discovered the fundamentals of QLora, fine-tuning a LLama v2 mannequin on Colab utilizing QLora, Instruction Tuning, and a pattern template from the Alpaca dataset that can be utilized to instruct tune a mannequin additional.

References

[1]: QLoRA: Environment friendly Finetuning of Quantized LLMs, 23 Might 2023, Tim Dettmers et al.

[2]: https://huggingface.co/weblog/hf-bitsandbytes-integration

[3]: https://huggingface.co/weblog/4bit-transformers-bitsandbytes

[4]: https://www.youtube.com/watch?v=y9PHWGOa8HA

[5]: https://arxiv.org/abs/2106.09685

[6]: https://aclanthology.org/2022.acl-long.244/

[7]: https://crfm.stanford.edu/2023/03/13/alpaca.html

[8]: Colab Pocket book by @maximelabonne https://colab.analysis.google.com/drive/1PEQyJO1-f6j0S_XJ8DV50NkpzasXkrzd?usp=sharing

Ahmad Anis is a passionate Machine Studying Engineer and Researcher at present working at redbuffer.ai. Past his day job, Ahmad actively engages with the Machine Studying neighborhood. He serves as a regional lead for Cohere for AI, a nonprofit devoted to open science, and is an AWS Neighborhood Builder. Ahmad is an energetic contributor at Stackoverflow, the place he has 2300+ factors. He has contributed to many well-known open-source initiatives, together with Shap-E by OpenAI.