A hot potato: Nvidia has so far dominated the AI accelerator business in the server and data center market. Now, the company is enhancing its software offerings to deliver an improved AI experience to users of GeForce and other RTX GPUs in desktop and workstation systems.

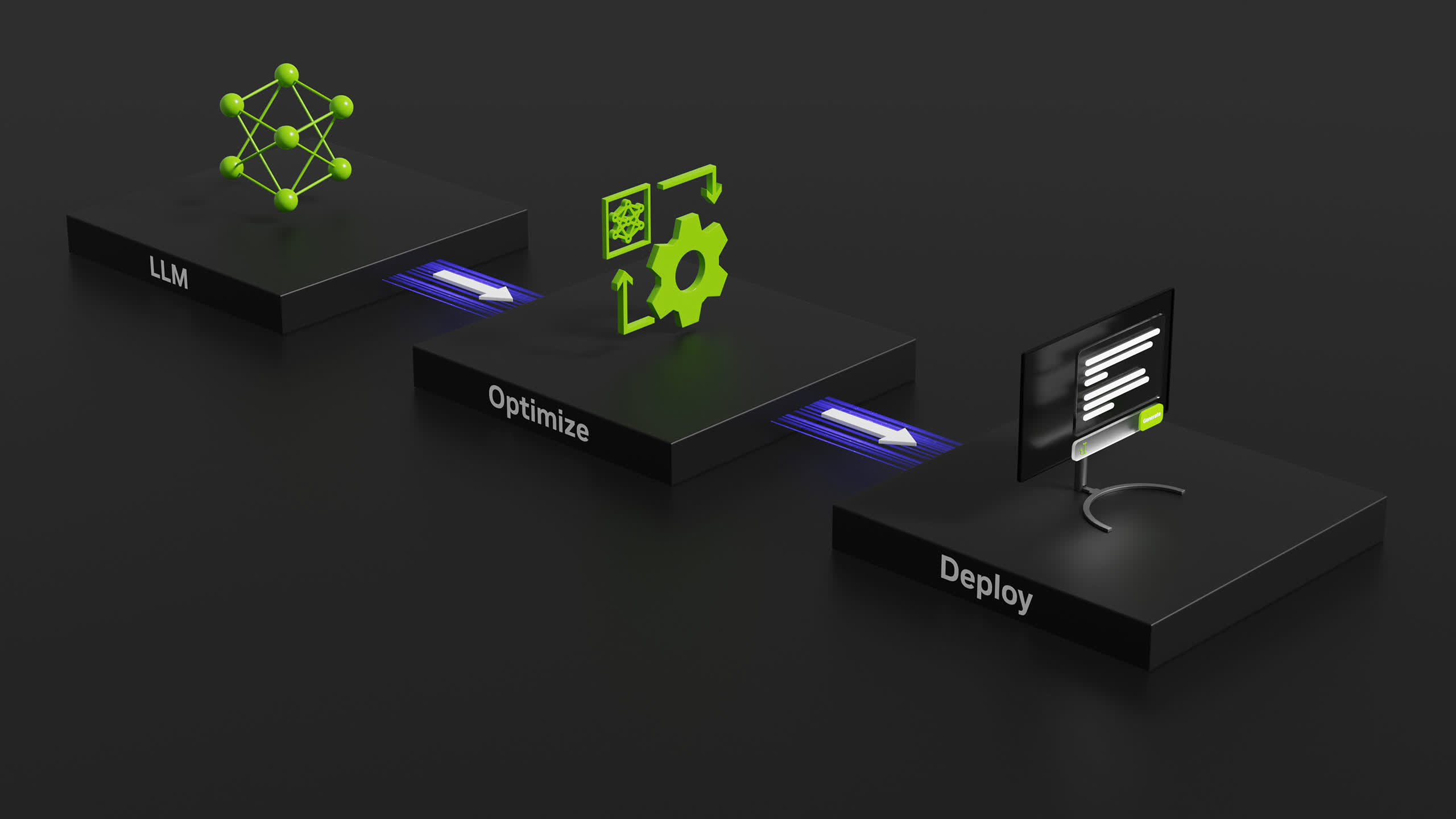

Nvidia will soon release TensorRT-LLM, a new open-source library designed to accelerate generative AI algorithms on GeForce RTX and professional RTX GPUs. The latest graphics chips from the Santa Clara corporation include dedicated AI processors called Tensor Cores, which now provide native AI hardware acceleration to more than 100 million Windows PCs and workstations.

On an RTX-equipped system, TensorRT-LLM can seemingly deliver up to 4x faster inference performance for the latest and most advanced AI large language models (LLMs) like Llama 2 and Code Llama. While TensorRT was initially released for data center applications, it's now available for Windows PCs equipped with powerful RTX graphics chips.

Modern LLMs drive productivity and are central to AI software, according to Nvidia. Thanks to TensorRT-LLM (and an RTX GPU), LLMs can operate more efficiently, resulting in a significantly improved user experience. Chatbots and code assistants can produce multiple unique auto-complete results simultaneously, allowing users to select the best response from the output.

The new open-source library is also beneficial when integrating an LLM with other technologies, Nvidia says. This is particularly useful in retrieval-augmented generation (RAG) scenarios, where an LLM is combined with a vector library or database. RAG solutions enable an LLM to generate responses based on specific datasets (such as user emails or website articles), allowing for more targeted and relevant answers.
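As a rough illustration of the retrieval step in RAG, the sketch below ranks a few documents against a question and pastes the best match into a prompt. It does not use TensorRT-LLM or any Nvidia API. The function names (`embed`, `cosine`, `retrieve`, `build_prompt`), the sample documents, and the bag-of-words "embedding" are all assumptions made for this example. A real system would use a learned embedding model and a proper vector database, then pass the prompt to an LLM.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=2):
    """Combine the retrieved context and the question into one prompt string."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

# Hypothetical document store, standing in for user emails or site articles.
DOCS = [
    "TensorRT-LLM accelerates large language model inference on RTX GPUs.",
    "RTX Video Super Resolution improves the quality of streamed video.",
    "Stable Diffusion generates images from text prompts.",
]

if __name__ == "__main__":
    # The resulting prompt would be sent to an LLM, which is out of scope here.
    print(build_prompt("How is streamed video quality improved?", DOCS, k=1))
```

The LLM only sees the retrieved snippets plus the question, which is what lets its answer stay grounded in the chosen dataset rather than in its general training data.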

Nvidia has announced that TensorRT-LLM will soon be available for download through the Nvidia Developer website. The company already provides optimized TensorRT models and a RAG demo with GeForce news on ngc.nvidia.com and GitHub.

While TensorRT is primarily designed for generative AI professionals and developers, Nvidia is also working on additional AI-based improvements for traditional GeForce RTX customers. TensorRT can now accelerate high-quality image generation using Stable Diffusion, thanks to features like layer fusion, precision calibration, and kernel auto-tuning.

In addition, Tensor Cores within RTX GPUs are being used to enhance low-quality internet video streams. RTX Video Super Resolution version 1.5, included in the latest release of GeForce Graphics Drivers (version 545.84), improves video quality and reduces artifacts in content played at native resolution, thanks to advanced "AI pixel processing" technology.