In the rapidly evolving landscape of artificial intelligence (AI), open-source foundation models are emerging as a driving force for innovation and democratization. While tech giants once held the reins with proprietary models, the collaborative power of the open-source community is now challenging the status quo.

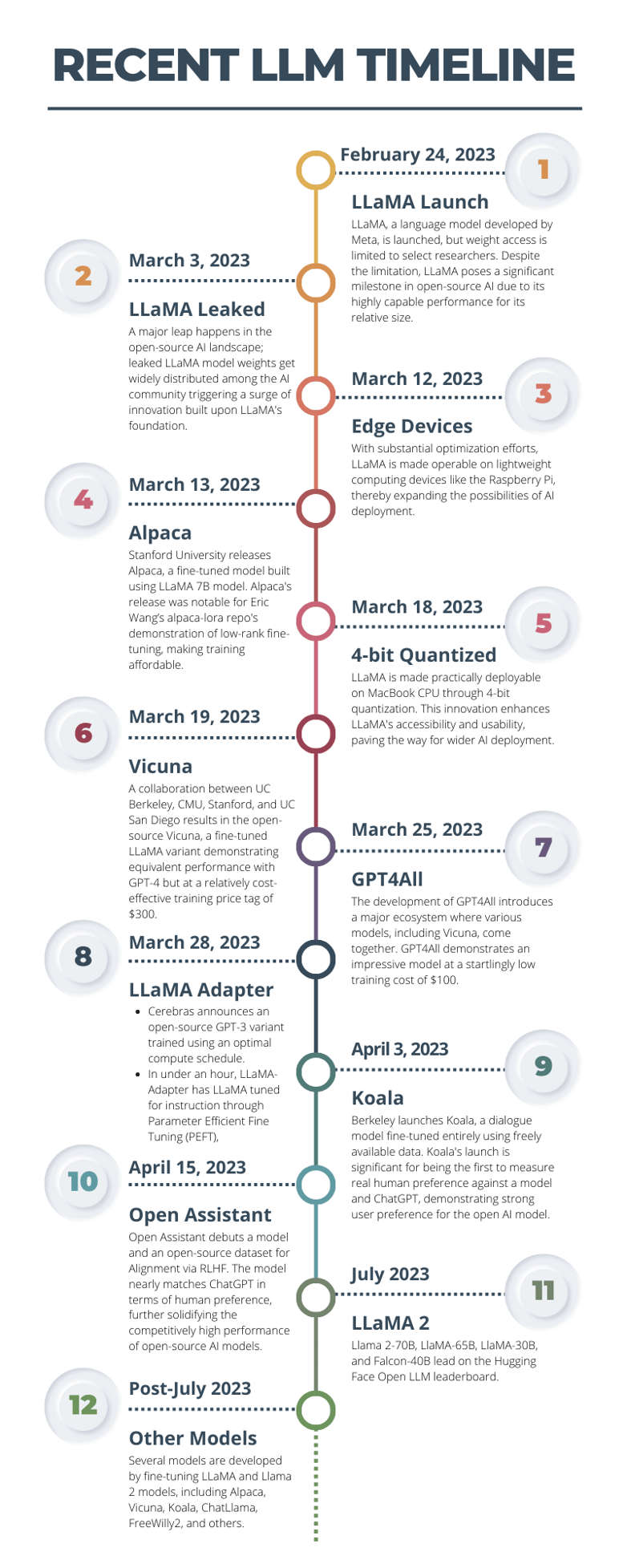

Large Language Models (LLMs), which exemplify this paradigm shift, are breaking free from the confines of a few corporations and unlocking immense potential for customization and widespread adoption. The accidental release of Meta’s LLaMA series, shortly after its launch in February 2023, was a turning point, spurring a wave of creativity and breakthroughs within the open-source community.

In this blog, we explore the significance of open-source foundation models, focusing on the LLaMA journey and the remarkable advancements these models bring to the forefront of AI development.

The Rise of Open-Source Foundation Models: Empowering AI Innovation and Democratization

Open-source foundation models become more important every day. Proprietary models backed by tech giants such as Google and OpenAI once led the way, but recently they have been increasingly challenged by the collective power of the open-source community.

Foundation models such as Large Language Models (LLMs) embody this shift in the AI landscape. The change in dynamics, from holding onto tightly controlled proprietary models to embracing open knowledge sharing and community-based innovation, promises a ripple of potential benefits across the board. Unshackling these foundation models from the confines of a few tech companies greatly increases the potential for large-scale innovation, customization, and democratization of AI.

In May 2023, a leaked memo from a Google researcher cast doubt on the company’s future in AI, stating that it has “no moat” in the industry. In this memo, a “moat” referred to a competitive advantage that a company has over others in the same industry, making it hard for rivals to gain market share. The researcher stated that despite their prominence, neither Google nor OpenAI has such a moat, implying that they lack unique, exclusive features or advantages in their AI models. Open-source foundation models, the memo claimed, were about to eat their lunch.

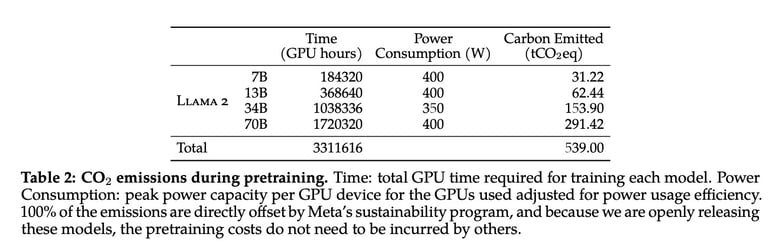

While this argument has merit, it fails to address one crucial point: all the incredible success of open-source foundation models has been built on models created by companies, which were either leaked or intentionally released as open source. To see why, consider the cost of training. Details of the current state-of-the-art LLM, GPT-4, recently leaked and indicated that the model reportedly has 1.8 trillion parameters across 120 layers and cost $63 million to train. The LLaMA 2 models we’re about to discuss used a total of 3.3 million GPU hours to train. One might say that’s at least somewhat of a moat.

Credit: LLaMA 2 paper
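To put the 3.3 million GPU-hour figure in perspective, here is a rough back-of-the-envelope sketch. The cluster sizes are hypothetical, chosen only for illustration, and the calculation assumes perfectly even scaling across GPUs with no downtime, which real training runs do not achieve.

```python
def wall_clock_days(gpu_hours, num_gpus):
    """Days of continuous training if gpu_hours are split evenly over num_gpus."""
    return gpu_hours / num_gpus / 24


# Total GPU hours quoted in the text for the LLaMA 2 family.
LLAMA2_GPU_HOURS = 3_300_000

# Hypothetical cluster sizes (assumptions, not figures from the LLaMA 2 paper).
for num_gpus in (8, 256, 2048):
    days = wall_clock_days(LLAMA2_GPU_HOURS, num_gpus)
    print(f"{num_gpus:>5} GPUs: about {days:,.0f} days")
```

Even under these idealized assumptions, a small lab with a handful of GPUs would need decades of continuous compute, which is the sense in which pretraining cost acts as a moat.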

An incident that exemplifies how commercial models are leveraged in open source was the accidental release, followed by the official release, of powerful LLMs developed under the LLaMA (Large Language Model Meta AI) series.

Unintended Impact: The Accidental Release of LLaMA and Its Consequences

The eyes of the AI community turned toward Meta in February 2023, when it launched the LLaMA (Large Language Model Meta AI) series. LLaMA was a promising leap in the field of AI models. However, Meta intended to keep these models under proprietary control, limiting their availability. In an unexpected turn of events, the model was leaked online about a week after its launch.

Initial reactions within the community ranged from shock to disbelief. Not only did the accidental release undermine Meta’s ownership and control over LLaMA, but it also raised concerns about the potential misuse of such advanced models. Furthermore, it raised questions about the legal and ethical challenges of unauthorized use of proprietary content.

Yet the accidental release of LLaMA set in motion a surge of innovation within the open-source community. As developers and researchers worldwide got hold of the model, its potential applications began to unfold. Innovations appeared rapidly, with model improvements, quality upgrades, multimodality, and many more advancements conceived within a remarkably short span after the release.

Meta’s Proactive Move: The Evolution and Advancements of LLaMA 2

As the wave of innovation swept through the open-source community following the unintended release of the original LLaMA, Meta decided to harness this sudden boost in creativity and engagement. Instead of fighting to regain control, the company chose to lead the way by officially releasing the second iteration of the model, LLaMA 2.

By making LLaMA 2 openly available for both research and commercial use, Meta unlocked the potential for countless developers and researchers to contribute to its development. The significance of LLaMA 2 was underlined by its impressive parameter count and heightened performance, rivaling many closed-source models.

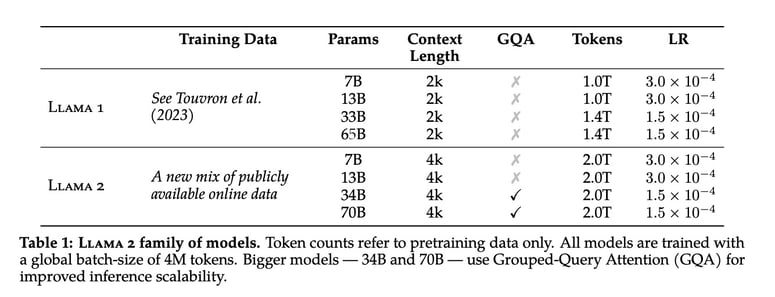

The LLaMA 2 release includes an array of both pre-trained and fine-tuned Large Language Models (LLMs), ranging in scale from 7 billion (7B) up to a hefty 70 billion (70B) parameters. These models represent a notable advancement over their LLaMA 1 counterparts: they were trained on 40% more tokens, feature an extended context length of 4,096 tokens, and use grouped-query attention for efficient inference in the 70B model.

Yet what holds real promise in this release is the set of fine-tuned models specifically tailored for dialogue applications: LLaMA 2-Chat. These models have been optimized via Reinforcement Learning from Human Feedback (RLHF), leading to measurable improvements on safety and helpfulness benchmarks. Notably, based on human evaluations, LLaMA 2-Chat models match or surpass numerous open models and are reported to be on par with ChatGPT.

The LLaMA Revolution: Unleashing Open-Source AI Advancements

The transition from the accidental release of the original LLaMA to the official release of LLaMA 2 heralds an unprecedented shift in the AI landscape. First, the incident has revitalized the culture of open-source development. An influx of new ideas and improvements has emerged as developers worldwide are allowed to experiment with, iterate upon, and contribute to the LLaMA models.

Second, democratizing access to such advanced AI models has leveled the playing field. The open-source versions of LLaMA models enable individuals, smaller companies, and researchers with limited resources to participate in AI development using state-of-the-art tools, thus eroding the monopoly previously held by big tech companies.

Lastly, the open nature of LLaMA models means that any upgrades, improvements, and innovations can be immediately accessible to all. This cyclic flow of shared knowledge creates a healthy feedback loop, propelling the overall pace and breadth of AI advancements to unprecedented levels.

This so-called “LLaMA revolution” thus presents a compelling blueprint for AI’s collective, collaborative future. And while questions about ethical and safety implications remain, there is no ignoring the whirlwind of innovation that has been unleashed.

OpenAI and Meta embarked on two distinct paths. Initially, OpenAI was deeply rooted in ethical concerns and held lofty aspirations for global change. However, as time went on, it became more inward-focused, moving away from a spirit of open innovation. This shift toward a more closed approach drew criticism, particularly because of its rigid stance on AI development. Meta, on the other hand, started with a more restricted approach, which many criticized for its narrow AI practices. Yet, surprisingly, Meta’s approach had a significant impact on the AI industry, notably through its instrumental role in the development of PyTorch.

Much of this innovation has been driven by Low-Rank Adaptation (LoRA), a technique that has been instrumental in fine-tuning sizable AI models cost-effectively. Introduced by researchers at Microsoft and popularized for LLaMA fine-tuning by Eric Wang’s Alpaca-LoRA project, LoRA freezes the pre-trained weights and confines the changes made during fine-tuning to a low-rank factorization of the weight update. This significantly reduces the number of trainable parameters and the computational requirements of fine-tuning, making it more efficient and affordable.
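The low-rank idea can be illustrated with a minimal sketch in plain Python. It is not the implementation used by any particular library: the helper names are hypothetical, and the 4096×4096 layer shape and rank 8 are illustrative assumptions. The frozen weight `W` is left untouched, and the update is expressed as the product of two small matrices `B` and `A`, scaled by `alpha / rank` as in the LoRA paper's convention.

```python
def lora_param_counts(d_out, d_in, rank):
    """Trainable parameters for one weight matrix: full fine-tuning vs. LoRA."""
    full = d_out * d_in            # every entry of W is trainable
    lora = rank * (d_in + d_out)   # only A (rank x d_in) and B (d_out x rank)
    return full, lora


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha=1.0):
    """Compute W x + (alpha / rank) * B (A x); W stays frozen, A and B train."""
    rank = len(A)
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * d for b, d in zip(base, delta)]


# Tiny example: a 2x3 frozen weight with a rank-1 adapter.
W = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
A = [[0.1, 0.2, 0.3]]   # rank x d_in
B = [[0.0], [0.0]]      # d_out x rank, zero-initialized so training starts at W
x = [1.0, 1.0, 1.0]
print(lora_forward(W, A, B, x))  # identical to W x while B is all zeros

# Illustrative layer size and rank (assumptions, not taken from the article).
full, lora = lora_param_counts(4096, 4096, 8)
print(full, lora, full // lora)  # 16777216 65536 256
```

For this assumed layer, the adapter trains 256 times fewer parameters than full fine-tuning, which is where the cost savings come from.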

LoRA plays an important role in the broader development of open-source AI. Its value was showcased in the wake of Stanford’s Alpaca, a model fine-tuned from LLaMA 7B: the Alpaca-LoRA project reproduced Alpaca-style instruction tuning using LoRA with far less compute. This demonstrated that fine-tuning powerful AI models with LoRA could be done affordably and with strong performance.

How Does Meta Benefit from Making Models Open-Source?

Meta benefits significantly from the innovation that the open-source community can spur. When AI systems are made open-source, developers and researchers worldwide can experiment with and improve them. The collective wisdom and ingenuity of the global AI community can drive the technology forward at a pace and breadth impossible for a single organization to achieve. Open-sourcing models can also drive economies of scale and efficiency. It allows for consolidating computational resources, minimizing redundancies in system design, and preventing the proliferation of isolated projects. This potentially reduces computational resource costs and the associated carbon footprint, and opens a pathway for extensive safety and performance improvements.

Credit: LLaMA 2 paper

Making LLMs more widely available for commercial use will likely drive product integrations. The increased ability of other businesses to leverage Meta’s LLaMA models in numerous applications could also indirectly feed back into Meta’s products and services by surfacing new use cases and integration points.

Meta’s contribution to open-source AI reinforces its position as a leader in AI advancement. The company is committed to furthering technological progress for the collective benefit rather than limiting advancements to its proprietary use. This can generate goodwill in the developer community toward Meta, strengthening its presence and influence within the ecosystem.

While open-sourcing LLaMA does bring up several challenges, the move also provides substantial benefits and opportunities for Meta. It ensures Meta remains not just a contributor, but a driver, in the ongoing journey of AI advancement. Perhaps most importantly, it strikes a big blow to the first-mover advantage of OpenAI’s ChatGPT models, which caught the entire industry off guard when ChatGPT appeared in late 2022.

Future Perspectives and Obstacles

As we move toward the widespread use of open-source foundation models like LLaMA, one of the most pivotal factors to consider is the potential consequences and challenges that this shift might introduce. These surges in technological advancement undeniably come with their fair share of obstacles, spanning safety, ethics, and potential misuse of information.

In exploring future perspectives, it’s important to note Meta’s consistent emphasis on open research and community collaboration. The company has a solid history of open-sourcing code, datasets, and tools, facilitating exploratory research and large-scale production deployment for prominent AI labs and companies. Meta’s open releases have spurred research in model efficiency, medicine, and conversational safety, further amplifying the value of shared resources.

Despite these strides, Meta acknowledges the potential risks and societal implications of openly releasing powerful AI models like LLaMA. Although it has put prohibitions in place against illegal or harmful use cases, misuse of these models remains a significant threat. Democratizing access to advanced LLaMA models will put such technology into more hands, increasing the possibility of unregulated use.

As these models become integrated into an array of commercial products, the responsibility for ethical and safe use also extends to developers leveraging them. In anticipation of potential privacy- and content-related risks, Meta stresses the need for developers to follow best practices for responsible development. As new products are built and deployed, managing these risks becomes a shared responsibility among all stakeholders in the AI field.

Other logistical and environmental challenges have emerged. The compute costs of pretraining such Large Language Models (LLMs) are prohibitively high for smaller organizations. Also, an unchecked proliferation of large models could exacerbate the sector’s carbon footprint.

The silver lining here lies in Meta’s apparent belief in collective wisdom and the ingenuity of the AI-practitioner community. Meta contends that adopting an open approach will yield the benefits of this technology and ensure its safety. Shared responsibility within the community can lead to better, safer models while preventing the onus of progress and safety work from falling solely on large companies’ shoulders.

Navigating this new terrain of open-source foundation models will undeniably present challenges ranging from ethical boundaries to digital security. Thus, safeguarding against these risks while maximizing the benefits of such models is an endeavor that will shape the future trajectory of AI.
